Interviewer: How much do you know about high concurrency? Me: emmm...


High concurrency is an experience that almost every programmer wants to have. The reason is simple: as traffic grows, all kinds of technical problems appear, such as interface response timeouts, rising CPU load, frequent GC, deadlocks, and large-scale data storage. These problems push us to keep deepening our technical skills.

In past interviews, if a candidate had worked on high-concurrency projects, I usually asked them to describe their understanding of high concurrency. Few could answer systematically. Their answers fall roughly into the following categories:

1. No grasp of data-based indicators: they are not sure which indicators to use to measure a high-concurrency system, cannot tell concurrency from QPS, and do not even know key figures for their own system, such as total users, active users, and QPS and TPS at normal and peak times.

2. Designed some solutions but did not master the details: they cannot explain which technical points a solution should focus on or what side effects it may have. For example, when read performance becomes a bottleneck they introduce a cache, but overlook cache hit rate, hot keys, and data consistency.

3. One-sided understanding that equates high-concurrency design with performance optimization: they talk about concurrent programming, multi-level caching, asynchronous processing, and horizontal expansion, but overlook high-availability design, service governance, and operations assurance.

4. Grasp the big picture but neglect the basics: they can clearly explain big ideas such as vertical layering, horizontal partitioning, and caching, but never analyze whether the data structures are reasonable or the algorithms efficient, and have never thought about optimizing details along the two most fundamental dimensions, IO and computation.

In this article I draw on my experience with high-concurrency projects to systematically summarize the knowledge and practical ideas that high concurrency requires. I hope it is helpful to you. The content is divided into the following three parts:

1. How to understand high concurrency?

2. What is the goal of high-concurrency system design?

3. What are the practical solutions for high concurrency?

High concurrency means heavy traffic, and technical means are needed to withstand its impact. These means work like traffic control: they let the system process the traffic more smoothly and give users a better experience.

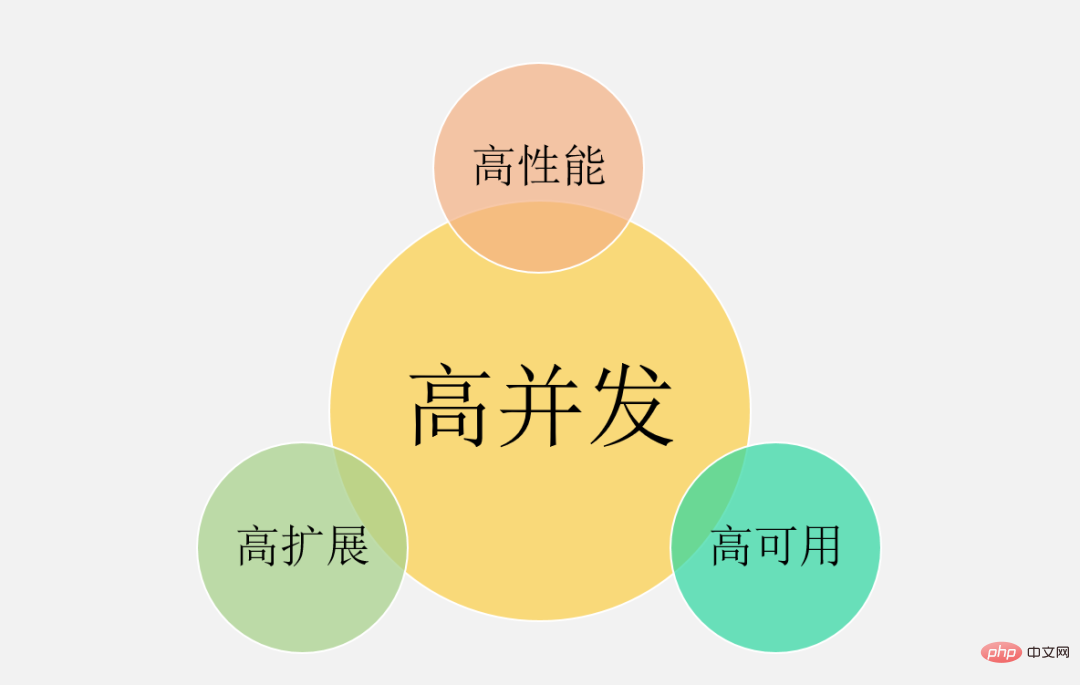

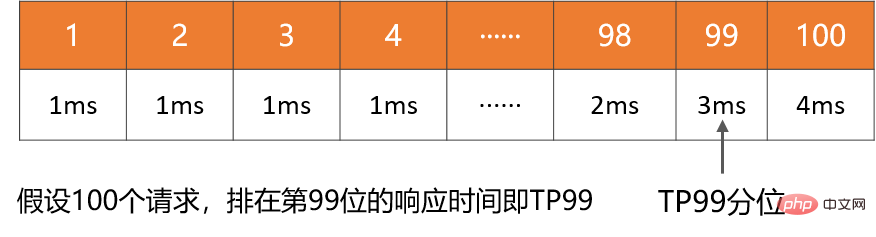

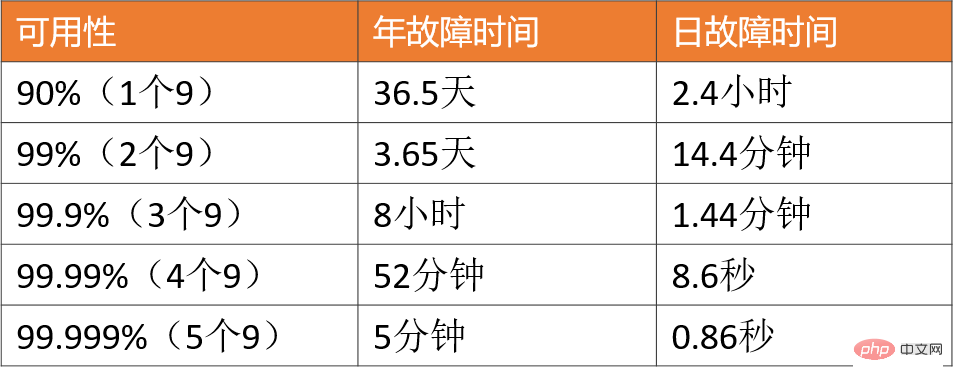

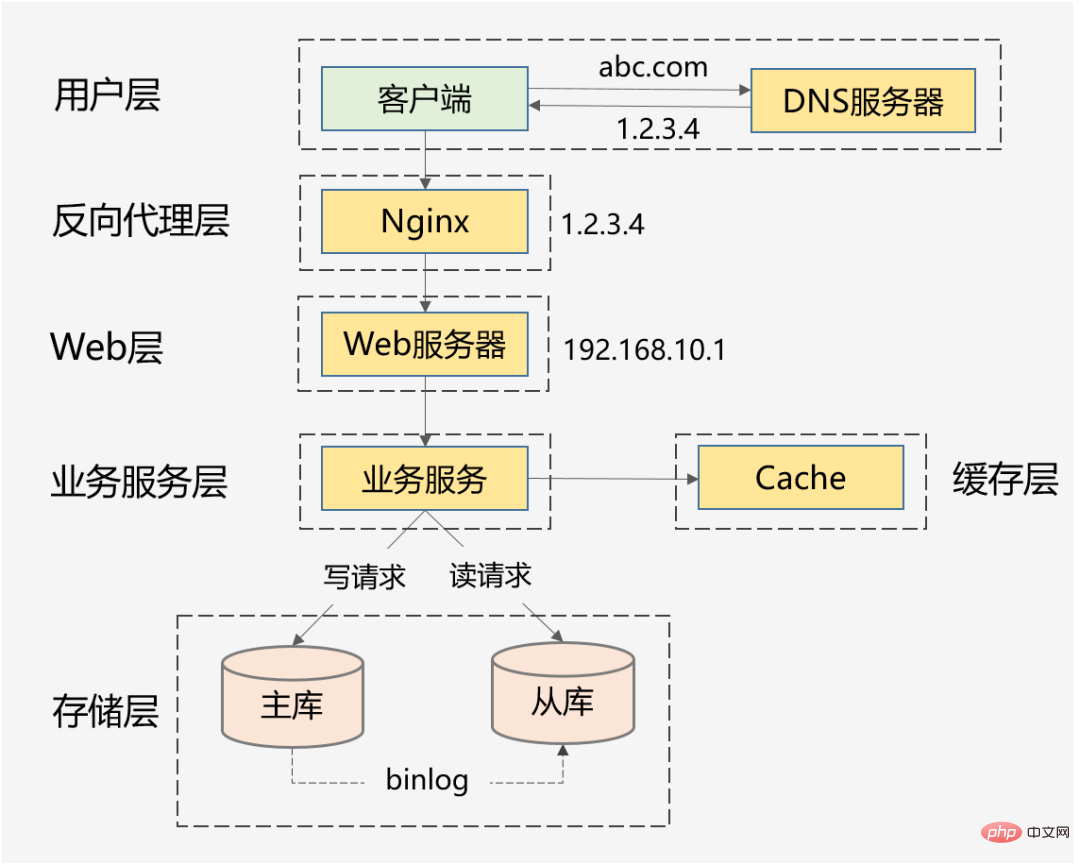

Common high-concurrency scenarios include Taobao's Double 11, ticket grabbing during the Spring Festival, and trending news from big-V accounts on Weibo. Beyond these typical cases, flash-sale systems handling hundreds of thousands of requests per second, order systems handling tens of millions of orders per day, and information-feed systems with hundreds of millions of daily active users can all be classified as high concurrency.

Obviously, the amount of concurrency differs across these scenarios. So how much concurrency counts as high concurrency?

1. Do not look only at the numbers; look at the specific business scenario. You cannot say that a flash sale at 100,000 QPS is high concurrency while an information feed at 10,000 QPS is not. The feed scenario involves complex recommendation models and various manual strategies, and its business logic may be more than 10 times as complex as the flash sale's. The two are not on the same dimension, so comparing them is meaningless.

2. A business is built from 0 to 1, and concurrency and QPS are only reference indicators. What matters most is whether, as business volume gradually grows to 10 or 100 times its original size, you have used high-concurrency techniques to evolve your system, preventing and solving the problems high concurrency causes at the levels of architecture design, coding, and even product design, rather than blindly upgrading hardware and adding machines for horizontal expansion.

In addition, the business characteristics of each high-concurrency scenario are completely different: there are information-feed scenarios with many reads and few writes, and transaction scenarios with many reads and many writes. Is there a general technical solution for high-concurrency problems in different scenarios?
I think we can learn from the big ideas and from other people's solutions, but real implementations hide countless pitfalls in the details. Moreover, because hardware and software environments, technology stacks, and product logic are never completely identical, the same business scenario with the same technical solution will still run into different problems, and these pitfalls have to be worked through one by one. In this article I therefore focus on basic knowledge, general ideas, and experience I have found effective in practice, hoping to give you a deeper understanding of high concurrency.

The goals of high-concurrency system design need to be clarified first; only then is a discussion of design solutions and practical experience meaningful and targeted.

High concurrency does not mean pursuing high performance alone; that is a one-sided understanding many people have. From a macro perspective, high-concurrency system design has three goals: high performance, high availability, and high scalability.

1. High performance: performance reflects the system's parallel processing capability. With limited hardware investment, improving performance saves cost. Performance also shapes user experience: response times of 100 milliseconds and 1 second feel completely different to users.

2. High availability: the time during which the system can serve normally. One system runs all year without downtime or faults; another has online incidents and outages every now and then. Users will certainly choose the former. Moreover, a system that is only 90% available will seriously hold the business back.

3. High scalability: the system's capacity to expand, that is, whether it can finish scaling out in a short time at traffic peaks and absorb them more smoothly, for example during Double 11 or trending events such as a celebrity divorce.

These three goals need to be considered together, because they are interrelated and even affect one another. For example, for the sake of scalability you design services to be stateless. Such a cluster design guarantees high scalability and, in fact, also indirectly improves the system's performance and availability. Another example: to ensure availability, timeouts are usually set on service interfaces so that a large number of threads blocked on slow requests does not trigger a system avalanche. What is a reasonable timeout? In general, we set it based on the performance of the dependent services.

Now look at it from a micro perspective: which specific indicators measure high performance, high availability, and high scalability, and why were these indicators chosen?

Performance indicators measure current performance problems and also serve as the basis for evaluating performance optimization. Generally, the interface response time over a period of time is used as the indicator.

1. Average response time: the most commonly used, but with an obvious flaw: it is insensitive to slow requests. For example, take 10,000 requests, of which 9,900 take 1 ms and 100 take 100 ms. The average response time is 1.99 ms. The average has risen by only 0.99 ms, yet the response time of 1% of the requests has increased 100-fold.

2. Quantile values such as TP90 and TP99: sort the response times in ascending order; TP90 is the response time at the 90th percentile. The larger the quantile, the more sensitive it is to slow requests.

3. Throughput: inversely proportional to response time. For example, with a response time of 1 ms (and requests handled one at a time), throughput is 1,000 requests per second.

Performance targets usually take both throughput and response time into account, for example: at 10,000 requests per second, AVG is kept below 50 ms and TP99 below 100 ms. For high-concurrency systems, AVG and the TP quantiles must be considered together. From a user-experience perspective, 200 milliseconds is regarded as the first threshold, below which users perceive no delay, and 1 second as the second threshold, at which users perceive a delay but find it acceptable. Therefore, for a healthy high-concurrency system, TP99 should be kept within 200 milliseconds and TP999 or TP9999 within 1 second.

High availability means the system has a high capacity for fault-free operation. Availability = normal operation time / total system operation time. Availability is usually described by the number of nines. For high-concurrency systems, the most basic requirement is three or four nines. The reason is simple: with only two nines, the system has 1% failure time. Some large companies have more than 100 billion in annual GMV or revenue, and 1% of that is a business impact on the order of 1 billion.

Facing sudden traffic, there is no time to rework the architecture. The fastest way is to add machines so that the system's processing power grows linearly. For business clusters or basic components, scalability = performance improvement ratio / machine addition ratio. Ideal scalability means that multiplying resources by some factor multiplies performance by the same factor. Generally, scalability should be kept above 70%.
But from the perspective of the overall architecture of a high-concurrency system, scalability is not achieved just by designing services to be stateless: when traffic grows 10 times, the business services can quickly scale out 10 times, but the database may become the new bottleneck. Stateful storage services such as MySQL are usually technically hard to expand, and if the architecture was not planned in advance (vertical and horizontal splitting), expansion involves migrating a large amount of data.

High scalability therefore has to take into account service clusters, middleware such as databases, caches, and message queues, load balancing, bandwidth, third-party dependencies, and so on. Once concurrency reaches a certain level, any of these factors can become the bottleneck for expansion.

Vertical expansion (scale-up) aims to improve the processing capability of a single machine. Horizontal expansion includes the following:

1. Build a good layered architecture: this is the prerequisite for horizontal expansion, because high-concurrency systems often have complex business logic, and layering simplifies complex problems and makes horizontal expansion easier. The diagram above shows the most common layered architecture on the Internet. Real high-concurrency architectures refine it further: for example, dynamic and static content are separated and a CDN is introduced, the reverse proxy layer can be LVS and Nginx, the Web layer can be a unified API gateway, the business service layer can be further split into microservices along vertical business lines, and the storage layer can consist of various heterogeneous databases.

2. Horizontal expansion of each layer: expand stateless layers horizontally and route stateful layers by shard. Business clusters can usually be designed to be stateless, whereas databases and caches are often stateful, so partition keys need to be designed for storage sharding.
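As a minimal sketch of shard routing by partition key, the class below maps a key to one of a fixed number of shards. The class name `ShardRouter`, the shard count, and the choice of a user ID as partition key are assumptions made for illustration; real storage layers typically use a sharding middleware or the database's own partitioning.

```java
/** Hypothetical shard router: maps a partition key to one of N storage shards. */
final class ShardRouter {

    private final int shardCount;

    ShardRouter(int shardCount) {
        if (shardCount <= 0) {
            throw new IllegalArgumentException("shardCount must be positive");
        }
        this.shardCount = shardCount;
    }

    /** Returns a shard index in [0, shardCount) for the given partition key. */
    int shardFor(String partitionKey) {
        // floorMod keeps the result non-negative even when hashCode() is negative.
        return Math.floorMod(partitionKey.hashCode(), shardCount);
    }

    public static void main(String[] args) {
        ShardRouter router = new ShardRouter(4); // assumed: 4 database shards
        for (String userId : new String[] {"user-1001", "user-1002", "user-1003"}) {
            System.out.println(userId + " -> shard " + router.shardFor(userId));
        }
    }
}
```

The same key always lands on the same shard, which is what makes the storage layer routable. The drawback of plain modulo routing is that changing the shard count remaps most keys, which is the large data migration mentioned above.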
Of course, read performance can also be improved through master-slave synchronization and read-write separation.

1. Cluster deployment reduces the pressure on a single machine through load balancing.

The above solutions simply consider every possible optimization point along the two dimensions of computation and IO. They need a supporting monitoring system that shows current performance in real time and supports performance bottleneck analysis; then follow the 80/20 principle and concentrate optimization on the main problems.

1. Failover of peer nodes: both Nginx and service governance frameworks support switching to another node when one node fails.

High-availability solutions are considered mainly from three directions: redundancy, trade-offs, and system operations. They also need a supporting on-call mechanism and fault-handling process so that online problems can be followed up promptly.

1. Reasonable layered architecture: for example, the most common Internet layered architecture mentioned above. In addition, microservices can be layered at a finer granularity into a data access layer and a business logic layer (but performance needs to be evaluated, since this may add one more network hop).

High concurrency is indeed a complex, systemic problem. Space is limited, but distributed tracing, full-link stress testing, and flexible transactions are also technical points to consider. In addition, different business scenarios call for different high-concurrency implementations, but the overall design ideas and the solutions that can be borrowed are basically similar.

High-concurrency design must also adhere to the three principles of architecture design: simplicity, appropriateness, and evolution. "Premature optimization is the root of all evil." Do not detach from the actual situation of the business and do not over-design; the appropriate solution is the best one.

I hope this article gives you a more comprehensive understanding of high concurrency. If you have experience or insights worth sharing, please leave a message in the comment area for discussion.

❇ Performance Indicators
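To make the difference between the average and the TP quantiles concrete, here is a minimal sketch that recomputes the article's example of 10,000 requests. The class and method names are hypothetical, and the quantile uses the nearest-rank definition, which is one common convention among several.

```java
import java.util.Arrays;

/** Hypothetical helper for comparing the average with TP quantiles. */
final class LatencyStats {

    /** Arithmetic mean of the samples, in milliseconds. */
    static double average(long[] samplesMs) {
        return Arrays.stream(samplesMs).average().orElse(0.0);
    }

    /**
     * Nearest-rank quantile: sort ascending and take the value at
     * position ceil(p / 100 * n), counting from 1.
     */
    static long topPercentile(long[] samplesMs, double p) {
        if (samplesMs.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        long[] sorted = samplesMs.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        return sorted[Math.max(rank, 1) - 1];
    }

    public static void main(String[] args) {
        // The example from the text: 9,900 requests at 1 ms and 100 requests at 100 ms.
        long[] samples = new long[10_000];
        Arrays.fill(samples, 0, 9_900, 1L);
        Arrays.fill(samples, 9_900, 10_000, 100L);

        System.out.println("AVG   = " + average(samples) + " ms");
        System.out.println("TP90  = " + topPercentile(samples, 90) + " ms");
        System.out.println("TP99  = " + topPercentile(samples, 99) + " ms");
        System.out.println("TP999 = " + topPercentile(samples, 99.9) + " ms");
    }
}
```

For this data the average is 1.99 ms. Because exactly 99% of the requests are fast, TP90 and TP99 by nearest rank are both 1 ms, while TP999 is 100 ms. This is why a target usually pairs AVG with more than one quantile.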

❇ Availability Indicators
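The availability formula given earlier (normal operation time / total operation time) can be turned around to show how much failure time each number of nines allows. The sketch below does that arithmetic; the class name is hypothetical and a 365-day year is assumed.

```java
/** Hypothetical helper: failure time allowed by an availability target. */
final class DowntimeBudget {

    /** Minutes in a 365-day year (leap years ignored): 525,600. */
    static final double MINUTES_PER_YEAR = 365 * 24 * 60;

    /** Minutes of failure time per year allowed at the given availability, e.g. 0.999. */
    static double allowedDowntimeMinutesPerYear(double availability) {
        if (availability < 0.0 || availability > 1.0) {
            throw new IllegalArgumentException("availability must be between 0 and 1");
        }
        return (1.0 - availability) * MINUTES_PER_YEAR;
    }

    public static void main(String[] args) {
        double[] targets = {0.99, 0.999, 0.9999};
        for (double target : targets) {
            System.out.printf("%.2f%% available -> %.1f minutes of downtime per year%n",
                    target * 100, allowedDowntimeMinutesPerYear(target));
        }
    }
}
```

Two nines leave about 5,256 minutes (87.6 hours) of failure time per year, three nines about 525.6 minutes, and four nines about 52.6 minutes.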

❇ Scalability Indicators
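The scalability ratio defined earlier (performance improvement ratio / machine addition ratio) can be computed as in the sketch below. It assumes one reading of that formula, in which both ratios are relative increases over the starting point; the class name and the QPS figures are hypothetical.

```java
/** Hypothetical helper: how much of the added capacity shows up as added throughput. */
final class ScalingEfficiency {

    /**
     * Relative throughput gain divided by relative machine gain.
     * 1.0 means performance grew in step with the machines added.
     */
    static double efficiency(double qpsBefore, double qpsAfter,
                             int machinesBefore, int machinesAfter) {
        if (qpsBefore <= 0 || machinesBefore <= 0 || machinesAfter <= machinesBefore) {
            throw new IllegalArgumentException("need positive baselines and added machines");
        }
        double performanceGain = (qpsAfter - qpsBefore) / qpsBefore;
        double machineGain = (double) (machinesAfter - machinesBefore) / machinesBefore;
        return performanceGain / machineGain;
    }

    public static void main(String[] args) {
        // Assumed figures: doubling 10 machines to 20 lifts throughput from 10,000 to 18,000 QPS.
        double ratio = efficiency(10_000, 18_000, 10, 20);
        System.out.printf("scalability = %.0f%%%n", ratio * 100);
    }
}
```

With these assumed figures the ratio is 80%, which is above the 70% guideline.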


❇ Vertical expansion (scale-up)

Because the performance of a single machine always has a limit, horizontal expansion must eventually be introduced, further improving concurrent processing capacity through cluster deployment. It includes the following two directions:

❇ High-performance practical solution
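Cluster deployment relies on load balancing to spread requests across machines. The sketch below shows the simplest strategy, round robin, as an illustration only: the class name and node names are hypothetical, and in practice this job is usually done by Nginx, LVS, or a service governance framework rather than hand-written code.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical round-robin balancer over a fixed list of service nodes. */
final class RoundRobinBalancer {

    private final List<String> nodes;
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinBalancer(List<String> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("at least one node is required");
        }
        this.nodes = List.copyOf(nodes);
    }

    /** Returns the next node in rotation; safe to call from multiple threads. */
    String next() {
        // floorMod keeps the index valid after the counter overflows to negative values.
        int index = Math.floorMod(counter.getAndIncrement(), nodes.size());
        return nodes.get(index);
    }

    public static void main(String[] args) {
        RoundRobinBalancer balancer =
                new RoundRobinBalancer(List.of("node-a", "node-b", "node-c"));
        for (int i = 0; i < 6; i++) {
            System.out.println("request " + i + " -> " + balancer.next());
        }
    }
}
```

Round robin assumes the nodes are stateless and roughly equal in capacity; weighted or least-connections strategies are common alternatives when they are not.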

❇ High-availability practical solution
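Two availability measures from the article, interface timeouts and failover between peer nodes, can be combined in a small sketch. Everything here is illustrative: `FailoverCaller`, the node names, and the timeout value are assumptions, and production systems normally leave this to Nginx or a service governance framework.

```java
import java.time.Duration;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Function;

/** Hypothetical caller that tries peer nodes in order, bounding each attempt by a timeout. */
final class FailoverCaller {

    static <T> T callWithFailover(List<String> nodes, Function<String, T> call, Duration timeout) {
        RuntimeException last = new IllegalStateException("no nodes configured");
        for (String node : nodes) {
            CompletableFuture<T> future = CompletableFuture.supplyAsync(() -> call.apply(node));
            try {
                // Wait at most `timeout` so a slow node cannot block the caller indefinitely.
                return future.get(timeout.toMillis(), TimeUnit.MILLISECONDS);
            } catch (TimeoutException | ExecutionException e) {
                // Give up on this node and move on to the next peer.
                // cancel() only marks the future; it does not interrupt the running task.
                future.cancel(true);
                last = new IllegalStateException("call to " + node + " failed", e);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while calling " + node, e);
            }
        }
        throw last;
    }

    public static void main(String[] args) {
        // Assumed peers: node-a always fails, node-b answers.
        Function<String, String> call = node -> {
            if (node.equals("node-a")) {
                throw new IllegalStateException("node-a is down");
            }
            return "response from " + node;
        };
        System.out.println(
                callWithFailover(List.of("node-a", "node-b"), call, Duration.ofSeconds(1)));
    }
}
```

The timeout would normally be chosen from the measured performance of the dependent service, as the article suggests, for example somewhat above its TP99.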

❇ Highly scalable practical solution
