IT Home reported on July 11 that Wang Xiaochuan's company Baichuan Intelligence today released the Baichuan-13B large model, billed as "13 billion parameters, open source and commercially available".

According to the official introduction, Baichuan-13B is an open-source, commercially usable large language model with 13 billion parameters, developed by Baichuan Intelligence as the successor to Baichuan-7B. The company says it achieves the best results among models of the same size on both Chinese and English benchmarks. This release includes two versions: a pre-trained model (Baichuan-13B-Base) and an aligned model (Baichuan-13B-Chat).

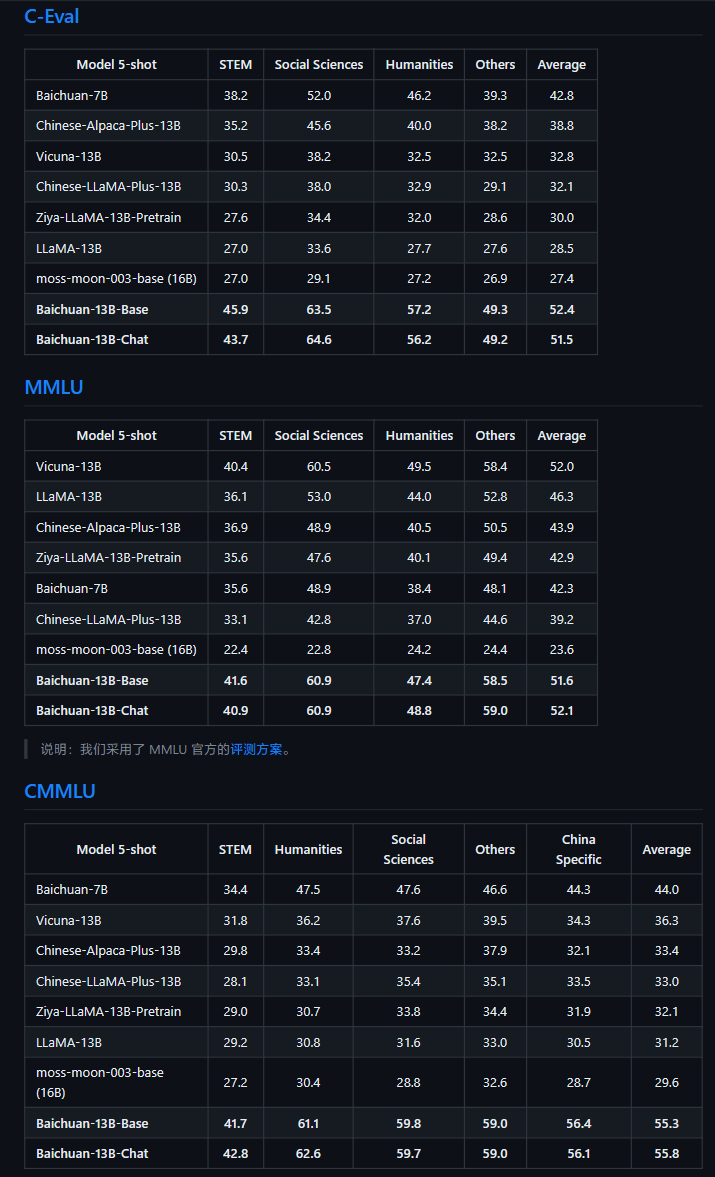

▲ Image source: Baichuan-13B GitHub page

According to the company, Baichuan-13B has the following features:

- Larger size, more data: Building on Baichuan-7B, Baichuan-13B expands the parameter count to 13 billion and is trained on 1.4 trillion tokens of high-quality corpus, 40% more than LLaMA-13B, which the company says makes it the open-source 13B model with the most training data to date. It supports both Chinese and English, uses ALiBi position encoding, and has a context window of 4,096 tokens.

- Pre-trained and aligned models open-sourced together: The pre-trained model serves as a "base" for developers, while most ordinary users need an aligned model with dialogue capabilities. The project therefore also releases an aligned model (Baichuan-13B-Chat) with strong conversational abilities; it works out of the box and can be deployed with just a few lines of code.

- More efficient inference: To reach a wider range of users, the project has also open-sourced int8 and int4 quantized versions. Compared with the non-quantized version, they greatly lower the hardware required for deployment with almost no loss in quality, and can run on consumer-grade graphics cards such as the NVIDIA RTX 3090.

- Open source, free for commercial use: Baichuan-13B is fully open for academic research, and developers can also use it commercially free of charge after applying by email and obtaining an official commercial license.
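For readers unfamiliar with ALiBi (Attention with Linear Biases): instead of adding position embeddings to the input tokens, it adds a fixed penalty to each attention score that grows linearly with the distance between the query and the key, with a different slope for each attention head. The Python sketch below follows the formulation in the original ALiBi paper for a power-of-two head count. It is only an illustration, not Baichuan's code; the function names and the head count used are hypothetical, and the article does not describe Baichuan-13B's exact implementation.

```python
import math


def alibi_slopes(num_heads: int) -> list[float]:
    """Per-head slopes as in the ALiBi paper: head k (1-indexed) gets 2 ** (-8k / n).

    This simplified sketch only handles power-of-two head counts; the paper
    uses an interpolation scheme for other counts, which is omitted here.
    """
    if num_heads <= 0 or (num_heads & (num_heads - 1)) != 0:
        raise ValueError("this sketch supports only power-of-two head counts")
    return [2 ** (-8 * k / num_heads) for k in range(1, num_heads + 1)]


def alibi_bias(seq_len: int, slope: float) -> list[list[float]]:
    """Causal ALiBi bias for one head, added to the attention logits before softmax.

    For keys at or before the query (j <= i) the bias is slope * (j - i),
    i.e. a penalty proportional to the distance i - j; future keys (j > i)
    are masked out with -inf.
    """
    return [
        [slope * (j - i) if j <= i else -math.inf for j in range(seq_len)]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    slopes = alibi_slopes(8)  # hypothetical head count, chosen for illustration
    for row in alibi_bias(4, slopes[0]):
        print(row)
```

Because the bias depends only on relative distance, no learned position table caps the sequence length, which is the property the ALiBi paper highlights.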
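A rough calculation shows why quantization matters for a card like the RTX 3090, which has 24 GB of memory. The sketch below counts only the memory needed to store 13 billion weights, assuming the non-quantized model is stored in 16-bit floating point (the article does not say which format it uses); activations, the KV cache, and framework overhead come on top of this. The names are hypothetical.

```python
# Back-of-the-envelope estimate of weight storage only.
# Ignores activations, KV cache, and framework overhead, which add more.
NUM_PARAMS = 13_000_000_000  # "13 billion parameters", as stated in the article

# Assumed storage cost per weight for each format.
BYTES_PER_PARAM = {
    "fp16": 2.0,  # assumed format of the non-quantized model
    "int8": 1.0,  # 8-bit quantized weights
    "int4": 0.5,  # 4-bit quantized weights (two weights per byte)
}


def weight_memory_gib(num_params: int, bytes_per_param: float) -> float:
    """Return the memory needed for the raw weights, in GiB."""
    return num_params * bytes_per_param / 2**30


if __name__ == "__main__":
    for fmt, nbytes in BYTES_PER_PARAM.items():
        print(f"{fmt}: {weight_memory_gib(NUM_PARAMS, nbytes):.1f} GiB")
```

Under these assumptions the fp16 weights alone (about 24.2 GiB) already exceed the card's memory, while the int8 (about 12.1 GiB) and int4 (about 6.1 GiB) versions leave room to spare.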

The model is currently available on Hugging Face, GitHub, and ModelScope. Interested IT Home readers can learn more there.