Neo discovers that the world he lives in is not real, but a carefully designed simulated reality.

# Have you ever, even for a moment, wondered whether the world we live in is a simulated matrix?

And now, the Matrix is officially open.

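Take a quiet moment to feel it: this Earth, where humanity has lived for so long, and everything in nature around it, is an illusory world.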

The sun rises over the Arctic glacier. There are all kinds of strange fish and colorful coral reefs in the underwater world.

Snow swirls in the mountains, and eagles soar across the vast sky. In the scorching desert, venomous snakes slither freely.

The small trees by the river are ablaze, and smoke fills the air.

Crystal-clear seas and creeks, sea turtles basking lazily in the sun on the beach, and dragonflies darting through the air.

The play of light and shadow in a cave.

Falling raindrops and maple leaves drifting in the wind, as if time had suddenly stopped.

Everything you see was generated by a computer program. The simulations are so realistic that they could be mistaken for reality itself.

The team that opened the door to this matrix is from Princeton. The moment the research came out, it caused an uproar on the Internet.

One netizen after another declared that we live in the Matrix!

## In the paper, the researchers introduce Infinigen, a procedural generator of realistic 3D scenes of the natural world.

Infinigen is entirely procedural: everything from shape to texture is generated from scratch by randomized mathematical rules.

It can also produce endless variation, covering plants, animals, and terrain as well as natural phenomena such as fire, clouds, rain, and snow.
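Infinigen's actual rules are far richer than anything that fits here, but the core idea of "randomized mathematical rules" plus "endless variation" can be illustrated with a classic procedural technique: fractal value noise. The sketch below builds a 1D terrain height profile from a seed; the function names and parameters are hypothetical and are not Infinigen's API.

```python
import math
import random


def value_noise(x: float, lattice: list[float]) -> float:
    """Smoothly interpolate between random values stored at integer lattice points."""
    i = math.floor(x)
    t = x - i
    t = t * t * (3 - 2 * t)  # smoothstep easing hides kinks at lattice points
    return lattice[i] * (1 - t) + lattice[i + 1] * t


def terrain_profile(seed: int, width: int = 64, octaves: int = 4) -> list[float]:
    """Sum several octaves of value noise into a 1D height profile.

    Each octave doubles the frequency and halves the amplitude (fractal noise),
    so large hills carry progressively finer bumps.
    """
    rng = random.Random(seed)  # the seed fully determines the generated "world"
    heights = [0.0] * width
    amplitude, frequency = 1.0, 1.0 / 16
    for _ in range(octaves):
        # Enough random lattice values to cover x * frequency for every x.
        n_points = int(width * frequency) + 2
        lattice = [rng.uniform(-1.0, 1.0) for _ in range(n_points)]
        for x in range(width):
            heights[x] += amplitude * value_noise(x * frequency, lattice)
        amplitude *= 0.5
        frequency *= 2.0
    return heights
```

The same seed always reproduces the same profile, while a new seed yields a new landscape; that is what makes a procedural generator an effectively unlimited source of distinct scenes.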

The latest paper has been accepted by CVPR 2023.

Paper: https://arxiv.org/pdf/2306.09310.pdf

Infinigen is built on Blender, the free and open-source graphics tool, and is itself open source.

It is worth mentioning that a pair of 1080p images can be generated in 3.5 hours!

Infinigen, proposed by Princeton University, can easily be customized to generate a variety of task-specific ground truth.

It can simulate the diversity found in nature.

Its most important use is as a generator of unlimited training data for a wide range of computer vision tasks.

These include object detection, semantic segmentation, pose estimation, 3D reconstruction, view synthesis, and video generation.

It can also be used to build simulation environments for training physical robots and virtual embodied agents.

It can also serve 3D printing, game development, virtual reality, film production, and general content creation.

Next, let’s take a look at how the Infinigen system is designed.

A primer on Blender.

The researchers primarily use Blender to develop procedural rules. Blender is open-source 3D modeling software that provides a wide range of primitives and utilities.

Blender represents the scene as a hierarchy of placed objects.

The user modifies this representation by transforming objects, adding primitives, and editing meshes.

Blender provides import/export of most common 3D file formats.

Finally, everything in Blender can be automated via its Python API, or by inspecting its open-source code.
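To make "a hierarchy of placed objects" concrete, here is a minimal sketch in plain Python (deliberately not Blender's `bpy` API). It assumes each object stores only a translation relative to its parent; Blender itself stores full transforms with rotation and scale. The class and function names are hypothetical.

```python
from dataclasses import dataclass, field

Vec3 = tuple[float, float, float]


@dataclass
class SceneObject:
    """A node in a scene hierarchy; `offset` is relative to the parent object."""
    name: str
    offset: Vec3 = (0.0, 0.0, 0.0)
    children: list["SceneObject"] = field(default_factory=list)

    def add(self, child: "SceneObject") -> "SceneObject":
        self.children.append(child)
        return child


def world_positions(obj: SceneObject, parent: Vec3 = (0.0, 0.0, 0.0)) -> dict[str, Vec3]:
    """Walk the hierarchy, accumulating parent offsets into world-space positions."""
    pos = tuple(p + o for p, o in zip(parent, obj.offset))
    result = {obj.name: pos}
    for child in obj.children:
        result.update(world_positions(child, pos))
    return result


world = SceneObject("world")
tree = world.add(SceneObject("tree", (2.0, 0.0, 0.0)))
tree.add(SceneObject("branch", (0.0, 0.0, 3.0)))
print(world_positions(world)["branch"])  # (2.0, 0.0, 3.0)

tree.offset = (5.0, 1.0, 0.0)  # transforming a parent moves its children too
print(world_positions(world)["branch"])  # (5.0, 1.0, 3.0)
```

Storing positions relative to parents is what lets a user edit the scene by "transforming objects": moving the tree carries its branch along without touching the branch itself.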

Node Transpiler.

As part of Infinigen, the researchers developed a new set of tools to speed up procedural modeling.

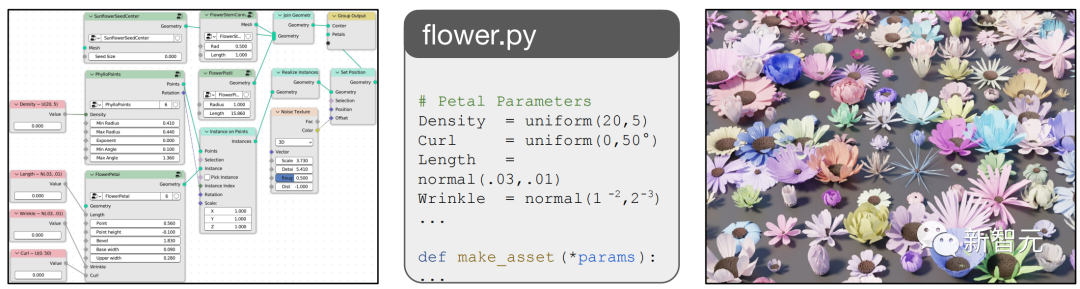

One notable example is the researchers' Node Transpiler, which automatically converts a node graph into Python code, as shown in the figure above.

The resulting code is more general and allows researchers to randomize graph structures beyond just input parameters.

This tool makes node graphs more expressive and allows easy integration with other procedural rules developed directly in Python or C.

It also allows non-programmers to contribute Python code to Infinigen by making node graphs.
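The real transpiler reads Blender node trees; the toy version below only illustrates the underlying idea. It assumes a hypothetical node graph stored as a plain dict, visits the nodes in dependency order with the standard library's `graphlib.TopologicalSorter`, and emits the Python source of a function that evaluates the graph.

```python
from graphlib import TopologicalSorter

# Hypothetical node graph: name -> (operation, inputs). An input is either the
# name of another node or a literal constant.
GRAPH = {
    "scale": ("input", []),
    "noise_amp": ("mul", ["scale", 0.5]),
    "height": ("add", ["noise_amp", 1.5]),
}

BINARY_OPS = {"add": "+", "sub": "-", "mul": "*"}


def transpile(graph: dict, output: str, func_name: str = "node_group") -> str:
    """Emit Python source that evaluates `graph` and returns node `output`."""
    # Each node depends on the inputs that name other nodes.
    deps = {
        name: {i for i in inputs if isinstance(i, str)}
        for name, (_, inputs) in graph.items()
    }
    order = list(TopologicalSorter(deps).static_order())  # dependencies first
    params = sorted(n for n in graph if graph[n][0] == "input")
    lines = [f"def {func_name}({', '.join(params)}):"]
    for name in order:
        op, inputs = graph[name]
        if op == "input":
            continue  # input nodes become function parameters
        a, b = (i if isinstance(i, str) else repr(i) for i in inputs)
        lines.append(f"    {name} = {a} {BINARY_OPS[op]} {b}")
    lines.append(f"    return {output}")
    return "\n".join(lines)


source = transpile(GRAPH, output="height")
print(source)
namespace = {}
exec(source, namespace)
print(namespace["node_group"](2.0))  # 2.5
```

Once a graph exists as ordinary code, any line, such as `noise_amp = scale * 0.5`, can be edited to sample its constant or even pick its operation at random, which is the kind of structural randomization the text says plain node inputs cannot express.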

Generator subsystems.

Infinigen is organized into generators, which are probabilistic programs, each specialized in generating one subclass of assets (such as mountains or fish).

Each generator has a set of high-level parameters (such as the overall height of the mountain) that reflect user-controllable external degrees of freedom.

By default, these parameters are randomly sampled from distributions tuned to reflect the natural world, with no user input required.

However, users can also override any parameter through Infinigen's Python API to achieve fine-grained control over data generation.
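The generator pattern described above (high-level parameters, sampled by default, overridable by the user) can be sketched as follows. Everything here is hypothetical: the `MountainGenerator` class, its three parameters, and the distributions are made up for illustration and are not Infinigen's real generators or API.

```python
import random
from dataclasses import dataclass, fields


@dataclass
class MountainParams:
    height: float     # overall height in metres
    roughness: float  # 0 = smooth, 1 = very jagged
    snow_line: float  # fraction of the height above which snow appears


class MountainGenerator:
    """Hypothetical generator: samples high-level parameters, honours overrides."""

    def __init__(self, seed: int, **overrides: float):
        allowed = {f.name for f in fields(MountainParams)}
        unknown = set(overrides) - allowed
        if unknown:
            raise ValueError(f"unknown parameters: {sorted(unknown)}")
        self.seed = seed
        self.overrides = overrides

    def sample_params(self) -> MountainParams:
        rng = random.Random(self.seed)
        # Made-up default distributions standing in for ones "tuned to nature".
        sampled = {
            "height": rng.lognormvariate(7.0, 0.4),  # median about 1100 m
            "roughness": rng.betavariate(2.0, 5.0),  # mostly moderate
            "snow_line": rng.uniform(0.6, 0.9),
        }
        # Every value is drawn first, so overriding one parameter leaves the
        # others unchanged for the same seed; then the user's overrides win.
        sampled.update(self.overrides)
        return MountainParams(**sampled)


default = MountainGenerator(seed=3).sample_params()
custom = MountainGenerator(seed=3, height=4000.0).sample_params()
print(default)
print(custom)  # same roughness and snow_line, height fixed at 4000.0
```

Drawing all defaults before applying overrides is a deliberate choice: pinning one knob does not reshuffle the rest of the randomly sampled scene.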

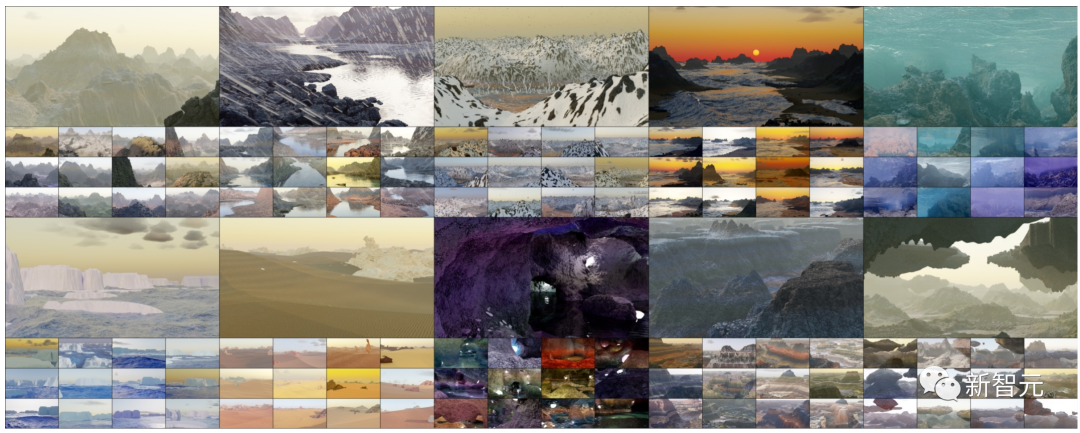

The picture below shows random scenes that contain only terrain. The researchers selected 13 images covering a variety of natural scene types: mountains, rising rivers, snowy mountains, coastal sunrises, underwater scenes, arctic icebergs, deserts, caves, canyons, and floating islands.

The picture below shows randomly generated simulations of fire, smoke, waterfalls, and volcanic eruptions.

Leaves, flowers, mushrooms, and pine cones.

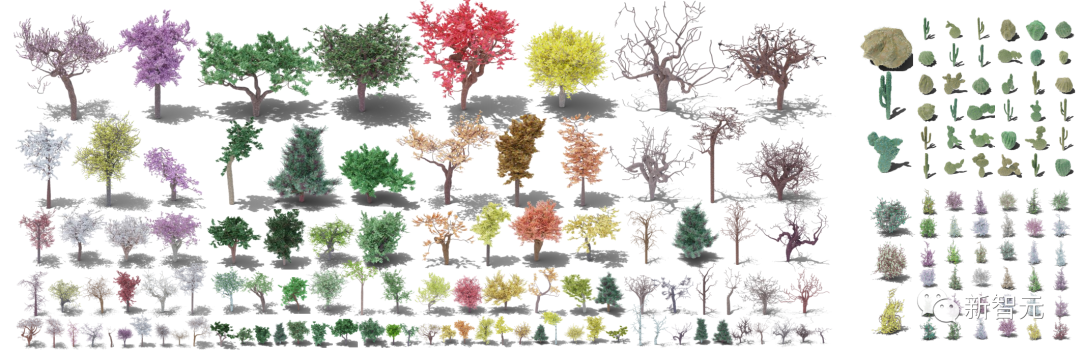

Trees, cacti, shrubs.

Sea life.

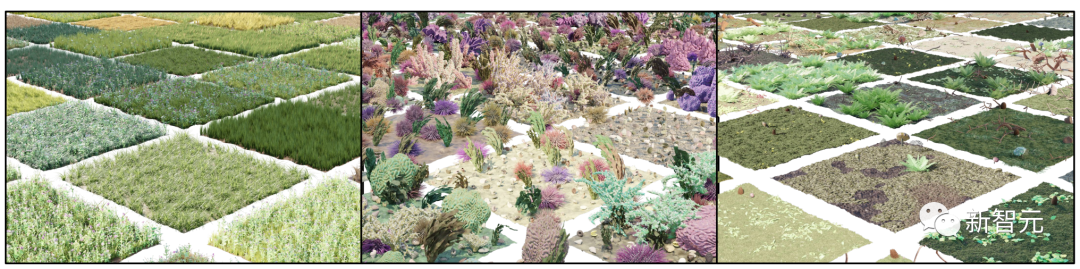

Various types of surfaces.

The picture below shows the generation of creatures.

The researchers automatically generate genomes (a), body parts (b), the attachment of body parts to one another (c), hair (d), and body poses (e).

On the right side of the picture are randomly generated carnivores, herbivores, birds, beetles, and fish.

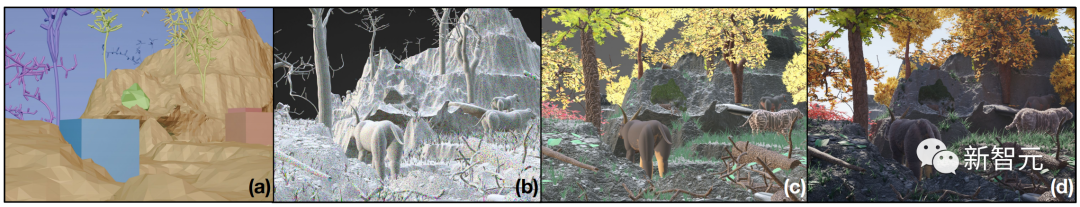

In the figure below, the researchers procedurally compose a random scene layout (a).

They then generate all the necessary meshes (b, shown with each mesh face in its own color) and apply procedural materials and displacement (c).

Finally, they render a photorealistic image (d).

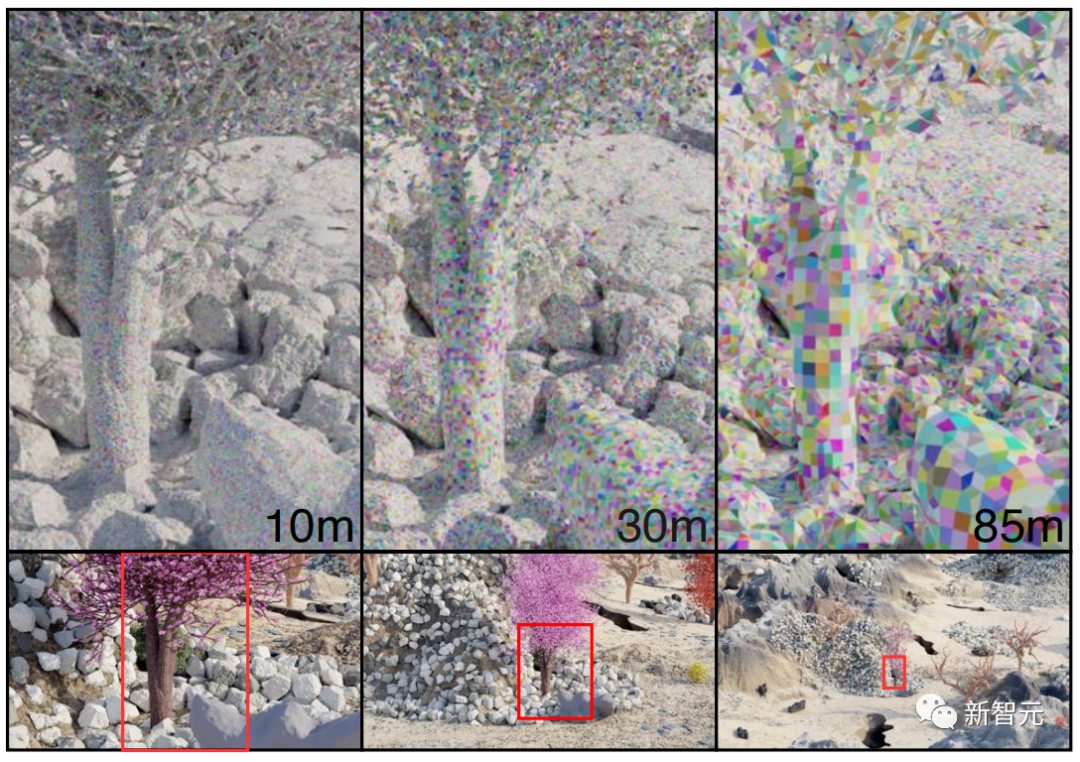

The picture below shows dynamic resolution scaling.

The researchers show close-up mesh visualizations from three cameras placed at different distances but framing the same content.

Although the mesh resolution differs, no difference is visible in the final images.
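One plausible reading of dynamic resolution scaling is: choose the mesh density per camera so that each face projects to roughly one pixel, giving nearby cameras finer meshes and distant cameras coarser ones while the rendered image looks the same. The sketch below assumes that reading and a pinhole camera, where one pixel covers `distance × sensor_width / (focal_length × image_width)` metres. The one-pixel target, default camera values, and function names are assumptions, not Infinigen's code.

```python
import math


def pixel_footprint(distance_m: float, focal_mm: float, sensor_width_mm: float,
                    image_width_px: int) -> float:
    """Width in metres that one pixel covers at `distance_m` (pinhole camera)."""
    return distance_m * sensor_width_mm / (focal_mm * image_width_px)


def subdivisions_for(patch_size_m: float, distance_m: float, focal_mm: float = 35.0,
                     sensor_width_mm: float = 36.0, image_width_px: int = 1920,
                     pixels_per_face: float = 1.0) -> int:
    """Segments needed along a patch so each face spans about `pixels_per_face` pixels."""
    target_edge = pixels_per_face * pixel_footprint(
        distance_m, focal_mm, sensor_width_mm, image_width_px)
    return max(1, math.ceil(patch_size_m / target_edge))


# The same 10 m patch needs roughly 10x more segments per side when the camera is 10x closer.
print(subdivisions_for(10.0, distance_m=5.0))
print(subdivisions_for(10.0, distance_m=50.0))
```

Because detail below one pixel cannot show up in the render anyway, tying resolution to projected size spends geometry only where the camera can see it.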

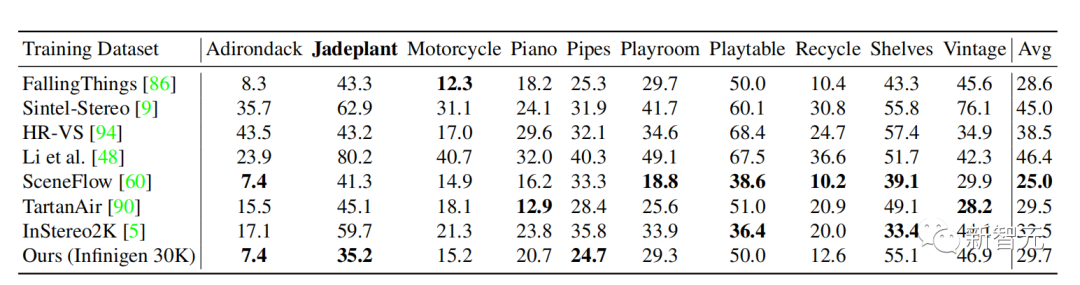

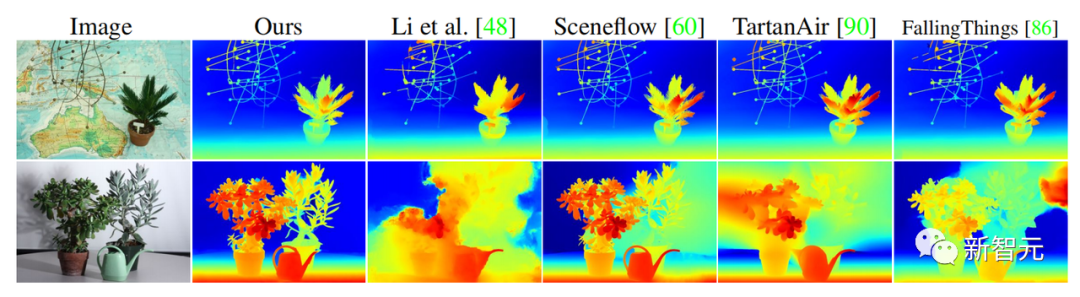

To evaluate Infinigen, the researchers produced 30K image pairs with ground truth for rectified stereo matching.

The researchers trained RAFT-Stereo on these images and compared the results on the Middlebury validation and test sets.
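For a rectified stereo pair, the ground-truth label is disparity, and a renderer that knows the exact depth of every pixel can derive it directly from the standard relation d = f·B/Z (focal length in pixels times baseline, divided by depth). The sketch below assumes a depth map in metres and a known focal length and baseline; the function name is hypothetical and this is not the paper's data pipeline.

```python
def depth_to_disparity(depth: list[list[float]], focal_px: float,
                       baseline_m: float) -> list[list[float]]:
    """Convert a depth map (metres) to disparity (pixels) for a rectified stereo pair.

    For rectified cameras, disparity d = f * B / Z, where f is the focal length
    in pixels, B the baseline between the two cameras and Z the depth.
    Non-positive depths (e.g. sky) are marked invalid with 0.0.
    """
    return [[focal_px * baseline_m / z if z > 0 else 0.0 for z in row] for row in depth]


disparity = depth_to_disparity([[10.0, 5.0], [0.0, 2.0]], focal_px=1000.0, baseline_m=0.1)
print(disparity)
```

Nearer surfaces get larger disparities, which is why exact synthetic depth makes such clean training targets for stereo networks.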

# The research code was released just today and has already collected 99 stars.

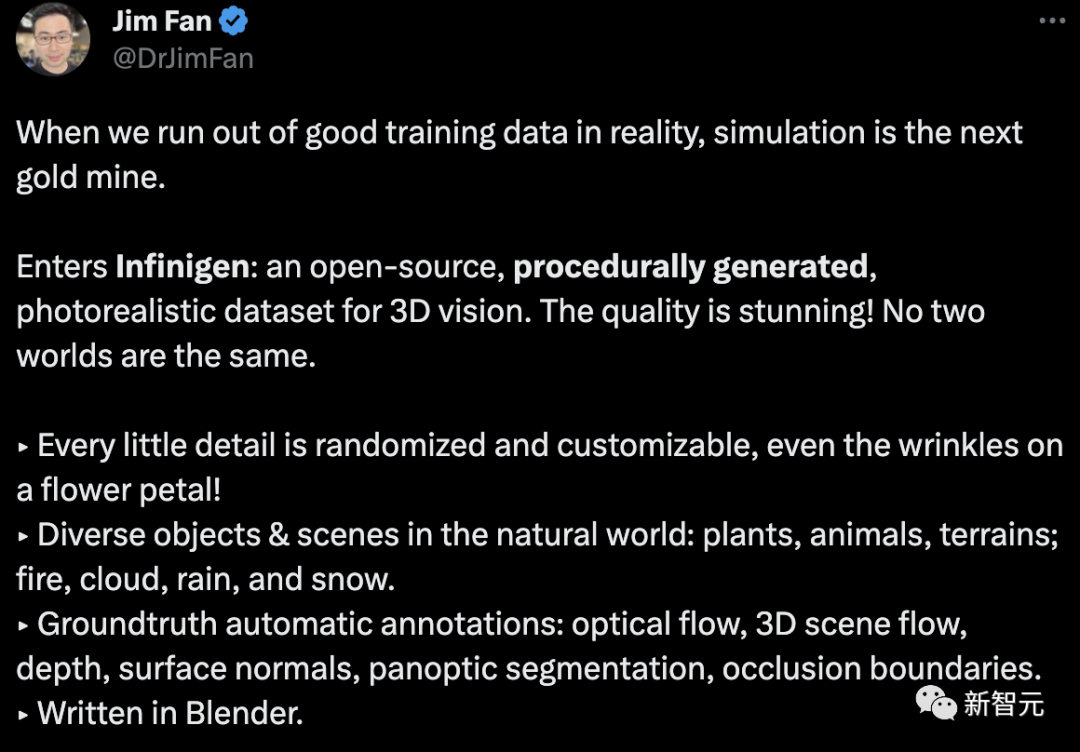

NVIDIA scientist Jim Fan said that when good real-world training data is scarce, simulation is the next "gold mine".

Infinigen is an open-source, procedurally generated, photorealistic dataset for 3D vision. The quality is amazing! No two worlds are the same.

▸ Every little detail is random and customizable, even the wrinkles on the petals!

▸ Various objects and scenes in nature: plants, animals, terrain; fire, clouds, rain and snow.

▸ Automatic ground-truth annotations: optical flow, 3D scene flow, depth, surface normals, panoptic segmentation, and occlusion boundaries.

▸ Built on Blender.
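Because a renderer knows the exact 3D position of every surface point in every frame, annotations such as optical flow, scene flow, and depth follow from geometry rather than estimation. The article does not describe how Infinigen computes them; the sketch below assumes a static pinhole camera and camera-space coordinates (z pointing forward), and its function names are hypothetical.

```python
Point3 = tuple[float, float, float]


def project(p: Point3, fx: float, fy: float, cx: float, cy: float) -> tuple[float, float]:
    """Pinhole projection of a camera-space point (z pointing forward) to pixels."""
    x, y, z = p
    return fx * x / z + cx, fy * y / z + cy


def flow_labels(p0: Point3, p1: Point3, fx: float, fy: float, cx: float, cy: float):
    """Ground-truth labels for one surface point seen by a static camera at t and t+1.

    Returns (optical_flow, scene_flow, depth_t0): optical flow is the 2D pixel
    displacement, scene flow the 3D displacement, depth the z coordinate at t.
    """
    u0, v0 = project(p0, fx, fy, cx, cy)
    u1, v1 = project(p1, fx, fy, cx, cy)
    optical_flow = (u1 - u0, v1 - v0)
    scene_flow = tuple(b - a for a, b in zip(p0, p1))
    return optical_flow, scene_flow, p0[2]


# A point 10 m away moving 1 m to the right shifts 50 px in a camera with fx = 500.
print(flow_labels((0.0, 0.0, 10.0), (1.0, 0.0, 10.0), 500.0, 500.0, 320.0, 240.0))
```

Doing this per pixel over a whole rendered frame gives dense, exact labels with no human annotation, which is the property the list above highlights.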

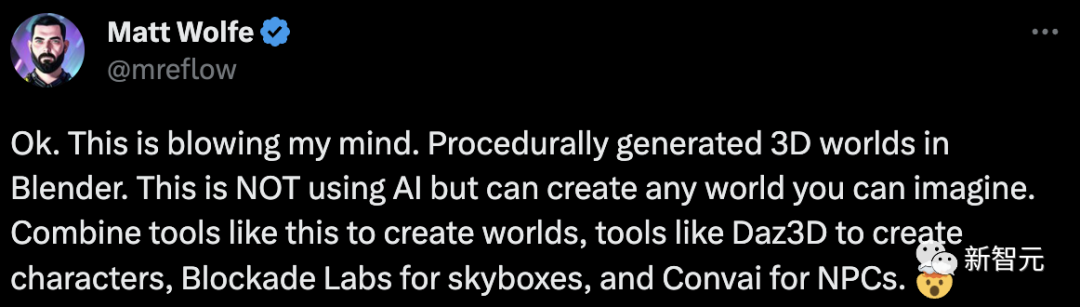

One netizen said this was a real eye-opener: Infinigen procedurally generates 3D worlds in Blender, and without using AI, you can create any world you can imagine.

Combine it with tools like Daz3D to build the world, Blockade Labs to create the skybox, and Convai to create the NPCs.

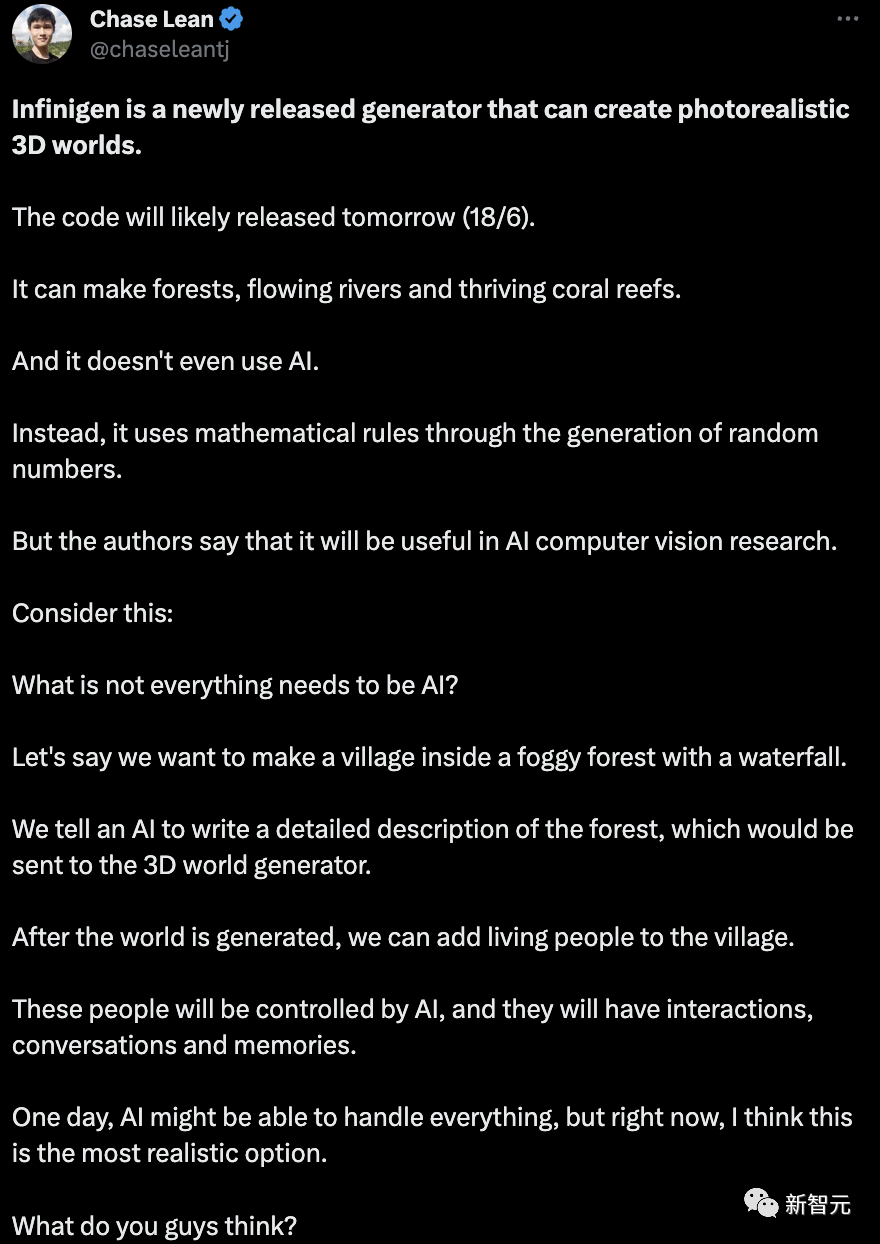

Others said Infinigen will play a role in AI computer vision research.

One of them believes that AI will handle everything in the future.

For example, suppose we want to build a village by a waterfall in a foggy forest.

We tell an artificial intelligence to write a detailed description of the forest and send it to the 3D world generator.

After the world is generated, you can add characters to the village. These people will be controlled by artificial intelligence and they will have interactions, conversations and memories.

It has a bit of a Westworld feel.

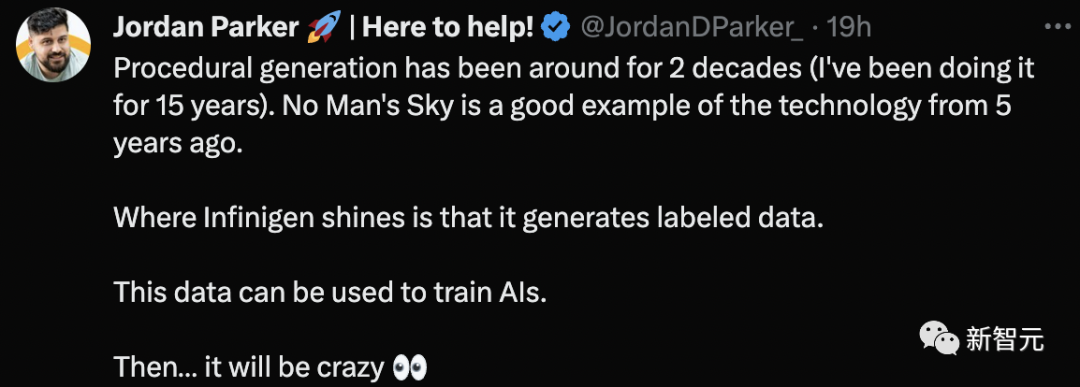

In addition, some netizens pointed out that procedural generation has been around for 20 years ("I have been doing it for 15 years"), and that "No Man's Sky" is a great example of the technique from 5 years ago.

The highlight of Infinigen is that it generates labeled data. This data can be used to train AI. That's crazy.

Some netizens imagine that games will get wild in the near future... "indie" game developers will be able to release some incredibly high-end content.

Infinigen's output is so realistic that some people thought it had been made with Unreal Engine.

Alexander Raistrick

Alexander Raistrick is a second-year PhD student in the Department of Computer Science at Princeton University, advised by Jia Deng.

Lahav Lipson

Lahav Lipson is a third-year PhD student at Princeton University.

His research focuses on building deep networks for 3D vision, leveraging strong assumptions about epipolar geometry to achieve better generalization and test accuracy.

Zeyu Ma
