Produced by Sohu Technology

Author | Zheng Songyi

On June 9, the 2023 Zhiyuan Artificial Intelligence Conference was held in Beijing. At four o'clock in the morning French local time, Yann LeCun, known as one of the world's "three giants of deep learning", addressed the Beijing audience by video link from France. In his talk, titled "Towards a Large Model Capable of Learning, Reasoning and Planning", he set out his thinking on artificial intelligence in depth.

Sohu Technology watched the speech at the Zhiyuan Conference. LeCun smiled throughout, conveying a positive and optimistic attitude toward the development of artificial intelligence. Earlier, when Musk and others signed an open letter warning that AI development could pose risks to human civilization, LeCun publicly rebutted it, arguing that AI is not yet advanced enough to pose a serious threat to humanity. In his speech, LeCun again stressed that AI is controllable. He said, "Fear is caused by anticipating potential negative outcomes, while elation comes from predicting positive ones. A goal-driven system like this, which I call 'goal-driven AI', will be controllable, because we can set its goals through a cost function and ensure that these systems do not want to take over the world but instead remain subservient to humans and safe."

LeCun said that what separates artificial intelligence from humans and animals is logical reasoning and planning, both important features of intelligence. Today's large models can only "react instinctively."

"If you train them on one or two trillion tokens, their performance is amazing, but eventually they make factual errors and logical errors, and their reasoning ability is limited."

LeCun emphasized that self-supervised language models cannot acquire knowledge about the real world. In his view, machines are poor learners compared with humans and animals. For decades, systems have been built with supervised learning, but supervised learning requires too many labels, reinforcement learning needs an enormous number of trials to learn anything, and the results are unsatisfactory. These systems are brittle, make stupid mistakes, and cannot really reason or plan.

"When we give a speech, how we move from one point to the next and how we explain things is planned in our brains, not improvised word by word. At a low level we may be improvising, but at a high level we must be planning. So the need for planning is very obvious. My prediction is that within a few years, no sane person will use autoregressive models (a class of models trained with self-supervised learning). These systems will soon be abandoned because they are beyond repair."

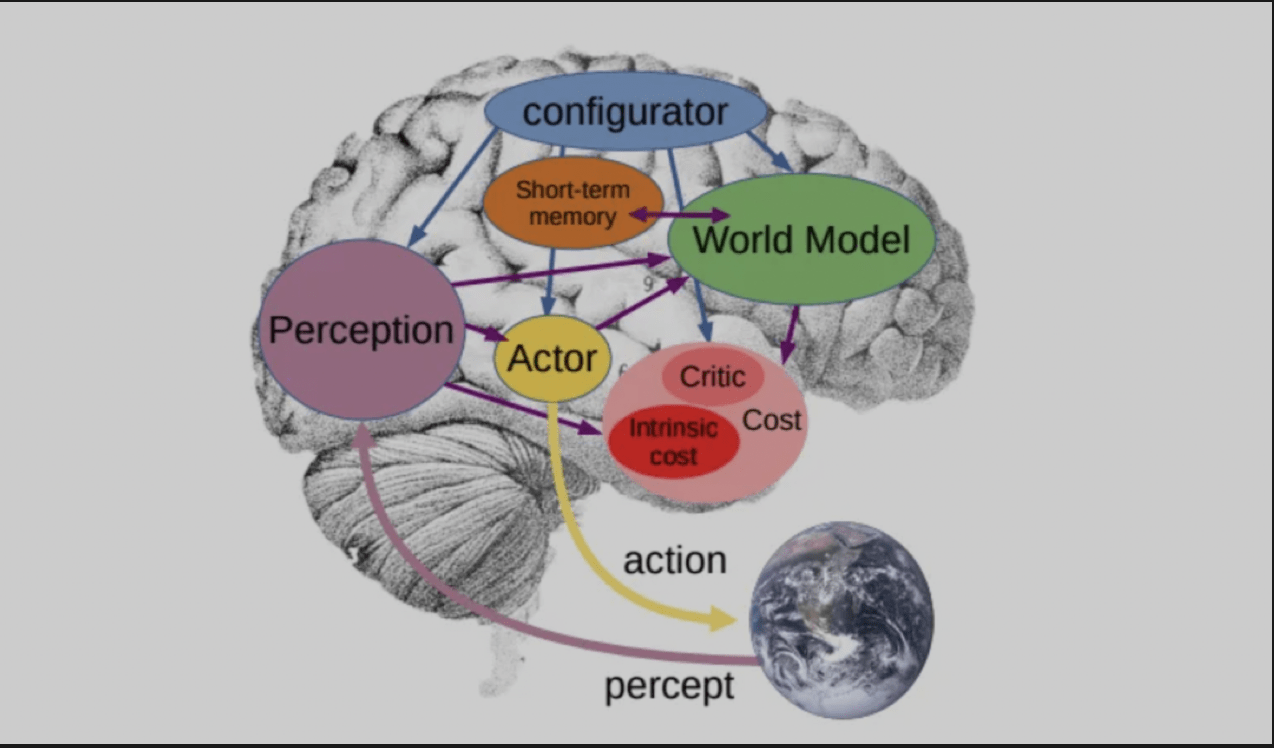

He said that for language models to truly understand the real world, they must be replaced by a new architecture: the one he proposed in the paper he published a year ago, "Autonomous Intelligence". In this architecture, a configuration module (the configurator) controls the entire system, which performs prediction, reasoning, and decision-making based on its inputs, while a "world model" module estimates missing information and predicts future states of the outside world.

Turning to the challenges AI will face in the next few years, LeCun named three. The first is learning representations and predictive models of the world. The second is learning to reason, that is, learning to think consciously and purposefully in order to complete tasks. The third is learning to plan complex sequences of actions by breaking complex tasks down into simpler ones and executing them hierarchically.

He then introduced another component described in the paper, the "world model", which can imagine a scenario and predict the outcomes of actions in it. The goal is to find a sequence of actions that, as predicted by the system's own world model, minimizes a set of costs.
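To make the idea of "imagine, predict, then pick the cheapest action sequence" concrete, here is a minimal sketch. It assumes a toy one-dimensional world with hand-written dynamics; `world_model`, `cost`, `plan`, the action set, and the guardrail penalty are all hypothetical illustrations, not LeCun's implementation. Brute-force search over a small discrete action set stands in for whatever optimizer a real system would use, and the large penalty on forbidden states mimics the idea of setting goals and guardrails through a cost function.

```python
from itertools import product

# Hypothetical toy world: the state is a position on a number line.
ACTIONS = (-1, 0, 1, 2)  # step left, stay, step right, jump right


def world_model(state: int, action: int) -> int:
    """Predict the next state from the current state and an action (toy dynamics)."""
    return state + action


def cost(state: int, goal: int, forbidden: set[int]) -> float:
    """Task cost (distance to goal) plus a large guardrail penalty for forbidden states."""
    task_cost = abs(state - goal)
    guardrail_cost = 1000.0 if state in forbidden else 0.0
    return task_cost + guardrail_cost


def plan(start: int, goal: int, horizon: int,
         forbidden: set[int]) -> tuple[tuple[int, ...], float]:
    """Return the action sequence whose imagined trajectory has the lowest total cost."""
    best_actions: tuple[int, ...] = ()
    best_total = float("inf")
    for actions in product(ACTIONS, repeat=horizon):
        state, total = start, 0.0
        for a in actions:
            state = world_model(state, a)  # imagine the outcome; nothing is executed
            total += cost(state, goal, forbidden)
        if total < best_total:
            best_actions, best_total = actions, total
    return best_actions, best_total


if __name__ == "__main__":
    print(plan(0, 3, 2, set()))  # ((2, 1), 1.0): jump to 2, then step to 3
    print(plan(0, 3, 2, {2}))    # ((1, 2), 2.0): the guardrail steers around state 2
```

Exhaustive search grows exponentially with the horizon, so it only works for toy problems; the point is the structure: a predictive model proposes outcomes, and the cost function alone decides which plan is acceptable.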

Asked in the Q&A session about an upcoming debate on the state and future of AGI, LeCun said the debate would center on whether AI systems pose an existential risk to humanity. Max Tegmark and Yoshua Bengio will take the "yes" side, arguing that powerful AI systems could pose an existential risk to humans, while LeCun and Melanie Mitchell will take the "no" side.

"Our point is not that there are no risks, but that although these risks exist, they can easily be mitigated or suppressed through careful design."

LeCun believes that superintelligent systems have not yet been developed, and that it will not be too late to discuss "whether superintelligent systems can be made safe for humans" once they have been invented.
