Let’s talk about the commonly used deployment solutions in MySQL.

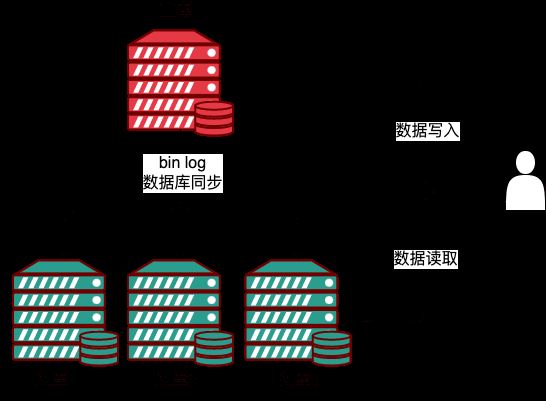

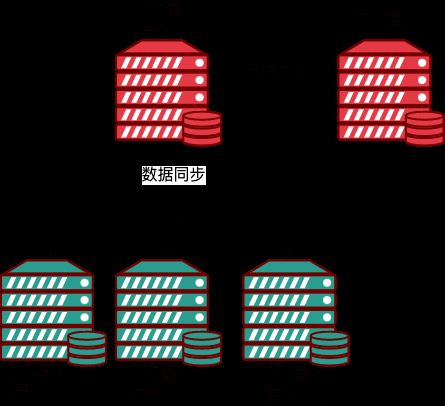

MySQL Replication is the officially provided master-slave synchronization solution, used to synchronize data from one MySQL instance to another. It provides important guarantees for data safety and is currently the most widely used MySQL disaster recovery solution. Replication uses two or more instances to build a master-slave replication cluster that provides single-point writes and multi-point reads, which scales out read capacity.

In the example above, there is one master library (M) and three slave libraries (S). Through replication, the Master records each event in its binlog and sends it to the slaves. Each slave writes the event to its relay log and then applies it to its own database, achieving master-slave data synchronization.

For the business layer above the database, a MySQL master-slave replication cluster has a single write point, the Master. After events are synchronized to the Slaves, read logic can read data from any Slave. This read-write separation greatly reduces the load on the Master and improves the resource utilization of the Slaves.

Advantages:

1. Horizontal expansion through read-write separation. Write and update operations are performed on the master server, and read operations are performed on the slave servers. Increasing the number of slave servers can greatly enhance the read capacity of the database;

2. Data security. Because a slave can pause the replication process, a backup service can run on the slave without affecting the corresponding data on the master;

3. Convenient data analysis. Real-time data is created on the master database, and data analysis runs on a slave database without affecting the performance of the master.
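The read-write separation described above can be sketched in a few lines of Python. This is only an illustration: the `ReadWriteRouter` class and the server names `M`, `S1` to `S3` are hypothetical, and a real deployment would usually rely on a proxy or driver feature rather than on string inspection of the SQL.

```python
import itertools


class ReadWriteRouter:
    """Toy router: writes go to the master, reads rotate over the slaves."""

    def __init__(self, master, slaves):
        self.master = master
        # Fall back to the master if no slave is configured.
        self._readers = itertools.cycle(slaves or [master])

    def route(self, sql):
        # Treat SELECT as a read; everything else counts as a write.
        first_word = sql.lstrip().split(None, 1)[0].upper()
        if first_word == "SELECT":
            return next(self._readers)
        return self.master


router = ReadWriteRouter("M", ["S1", "S2", "S3"])
targets = [router.route(q) for q in (
    "INSERT INTO t VALUES (1)",
    "SELECT * FROM t",
    "SELECT * FROM t",
    "UPDATE t SET a = 2",
)]
print(targets)  # ['M', 'S1', 'S2', 'M']
```

Adding another slave to the list is all it takes to spread reads over one more server, which is the horizontal expansion mentioned in the first advantage.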

Implementation Principle

In master-slave replication, the slave library replays the binlog of the master library to achieve master-slave synchronization. Three threads are mainly involved in the replication process: the dump thread, the I/O thread, and the SQL thread.

I/O thread: created when the slave library executes the start slave statement. It connects to the master library, requests the binlog, receives it, and writes it to the relay log;

dump thread: used by the master library to send the binlog to the slave library. It responds to requests from the I/O thread. The master library creates one dump thread for each slave connection and sends the binlog to that slave through it;

SQL thread: reads the relay log and executes the commands in it to update the slave's data.

Let’s take a look at the replication process:

1. The master library receives the update command, performs the update operation, and generates a binlog;

2. The slave library establishes a long-lived connection to the master library;

3. The master library's dump thread reads the local binlog and transfers it to the slave library;

4. The slave library receives the binlog from the master library and stores it locally as the relay log;

5. The SQL thread reads the relay log, parses it, and executes the commands to update the data.
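The five steps above can be modelled with a small in-memory simulation. The `Master` and `Slave` classes below are hypothetical teaching aids, not MySQL APIs; the binlog and relay log are plain Python lists, and each "thread" is just a method call.

```python
class Master:
    def __init__(self):
        self.data = {}
        self.binlog = []          # ordered list of (key, value) events

    def execute(self, key, value):
        # Step 1: apply the update and record it in the binlog.
        self.data[key] = value
        self.binlog.append((key, value))

    def dump(self, from_pos):
        # Step 3: the dump thread sends events after the requested position.
        return self.binlog[from_pos:]


class Slave:
    def __init__(self):
        self.data = {}
        self.relay_log = []
        self.applied = 0          # number of relay log events already replayed

    def io_thread(self, master):
        # Steps 2 and 4: request new binlog events and append them to the relay log.
        self.relay_log.extend(master.dump(len(self.relay_log)))

    def sql_thread(self):
        # Step 5: replay relay log events that have not been applied yet.
        for key, value in self.relay_log[self.applied:]:
            self.data[key] = value
        self.applied = len(self.relay_log)


master, slave = Master(), Slave()
master.execute("a", 1)
master.execute("b", 2)
slave.io_thread(master)
lagging = dict(slave.data)   # relay log received but not yet replayed
slave.sql_thread()
print(lagging, slave.data)   # {} {'a': 1, 'b': 2}
```

The empty `lagging` snapshot shows the window in which the slave has received events but not yet applied them, which is where synchronization delay comes from.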

However, MySQL Replication has a serious shortcoming: master-slave synchronization delay.

Because the slave applies changes only after the master has produced them, the slave's data can lag behind the master's.

Why does the master-slave delay occur?

1. The slave database machine performs worse than the master database machine;

2. The slave database is under heavy load;

A large number of queries run on the slave database and consume a lot of its CPU resources, which slows down synchronization and causes master-slave delay.

3. Execution of large transactions;

When a transaction runs on the master library, it is written to the binlog only after it completes. If the transaction is a very large data insertion, the data is then transmitted to the slave database, and the slave needs a certain amount of time to apply it, which causes data delay on the slave node.

4. The replication capability of the slave library is poor;

When the slave library replicates more slowly than the master library writes, high write pressure on the master can leave the slave with a long data delay.

How to solve?

1. Optimize business logic to avoid scenarios where multiple threads run large transactions concurrently;

2. Improve the machine performance of the slave library to narrow the gap between how fast the master library writes the binlog and how fast the slave library reads it;

3. Ensure a good network connection between the master library and the slave library to avoid binlog transmission delays caused by network latency;

4. Force reads to go to the master library;

5. Use semi-synchronous replication (semi-sync).
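Option 4 can be combined with lag monitoring. The sketch below assumes the application already knows each slave's lag in seconds (for example from the `Seconds_Behind_Master` column of `SHOW SLAVE STATUS`); the function name, the threshold, and the server names are hypothetical.

```python
def choose_read_target(slave_lag_seconds, max_lag_seconds=1.0, need_latest=False):
    """Return "master" or the name of a slave that is fresh enough to read from."""
    if need_latest:
        # Reads that must see the latest write are forced to the master.
        return "master"
    # Keep only slaves whose reported lag is known and within the threshold.
    fresh = {name: lag for name, lag in slave_lag_seconds.items()
             if lag is not None and lag <= max_lag_seconds}
    if not fresh:
        return "master"
    # Prefer the slave with the smallest lag.
    return min(fresh, key=fresh.get)


lags = {"S1": 0.2, "S2": 5.0, "S3": None}   # None: replication stopped or unknown
print(choose_read_target(lags))                     # S1
print(choose_read_target(lags, need_latest=True))   # master
print(choose_read_target({"S2": 5.0}))              # master
```

Forcing reads to the master trades away some of the load relief that read-write separation provides, so it is usually reserved for reads that must observe a write made just before.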

Semi-synchronous replication (semi-sync)

MySQL has three synchronization modes, which are:

1. Asynchronous replication: the default in MySQL Replication. The master library returns the result to the client immediately after executing the client's transaction and does not care whether the slave library has received and processed it. The problem is that if the master's logs have not reached the slave in time and the master then goes down, failover promotes a slave to master, and the data on the newly promoted master may be incomplete;

2. Fully synchronous replication: the master library completes a transaction, waits until all slave libraries have also completed it, and only then commits and returns to the client. Because the master waits for all slaves to catch up before returning, master-slave consistency is guaranteed, but database performance inevitably suffers;

3. Semi-synchronous replication: a middle ground between fully synchronous and fully asynchronous replication. The master library waits until at least one slave library has received the event and written it to its Relay Log file; it does not need to wait for all slave libraries to return an ACK. Once the master receives an ACK, the transaction is considered complete and the result is returned to the client.

The default replication in MySQL is asynchronous, so there is a certain delay between the master database and the slave database. More importantly, asynchronous replication may also lose data, while fully synchronous replication performs too poorly. For this reason, starting from MySQL 5.5, MySQL supports semi-synchronous replication in the form of a plug-in.

Potential problems with semi-synchronous replication

In traditional semi-synchronous replication, the master library writes the data to the binlog and commits the transaction in the storage engine, then waits for an ACK from a slave library. The slave writes the event to its Relay Log, flushes it to disk, and then replies to the master with an ACK. Only when the master receives this ACK does it confirm completion of the transaction to the client.

The problem is that the master has already committed the transaction in the engine layer. Applications can already see the data change while the master is still waiting for the slave's reply. If the master goes down at this moment, the slave may not yet have written the event to its Relay Log, and the master and slave become inconsistent.

To solve this problem, MySQL 5.7 introduced enhanced semi-synchronous replication. After the master writes the data to the binlog, it waits for an ACK. At least one slave must write the event to its Relay Log, flush it to disk, and return an ACK to the master. Only then does the master commit the transaction in the storage engine, and only then can applications see the data change.

With enhanced semi-synchronous replication, the master may crash after the event has reached the slave but before the master itself has committed, so the master appears to have lost the transaction. However, when the master is down, a new master is elected from the slaves, so the data on the newly elected master is not lost.
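The difference between the two variants comes down to where the master waits for the ACK. The toy function below models a master crash that happens after the binlog write but before any slave ACK. `AFTER_COMMIT` and `AFTER_SYNC` are the two values of the `rpl_semi_sync_master_wait_point` system variable; everything else, including the function name, is an illustrative assumption.

```python
def crash_before_ack(mode):
    """Model a master crash after the binlog write but before any slave ACK.

    Returns (visible_on_master_before_crash, present_on_slave).
    """
    if mode == "AFTER_COMMIT":
        # Traditional semi-sync: the engine commit happens first, then the wait
        # for the ACK, so other sessions could already read the change.
        visible_on_master = True
    elif mode == "AFTER_SYNC":
        # Enhanced semi-sync: the master waits for the ACK before the engine
        # commit, so nobody has seen the change yet.
        visible_on_master = False
    else:
        raise ValueError(f"unknown mode: {mode}")
    present_on_slave = False   # by assumption the slave never received the event
    return visible_on_master, present_on_slave


for mode in ("AFTER_COMMIT", "AFTER_SYNC"):
    seen, on_slave = crash_before_ack(mode)
    # A change that was seen on the old master but is missing after failover
    # is the inconsistency described above.
    print(mode, "inconsistent after failover:", seen and not on_slave)
```

In this model only `AFTER_COMMIT` can expose a change that later disappears after failover.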

MySQL Group Replication, also known as MGR, is a high-availability and high-scalability solution that Oracle released with MySQL 5.7.17 in December 2016.

Group replication was introduced mainly to solve the data inconsistency that can occur in traditional asynchronous replication and semi-synchronous replication.

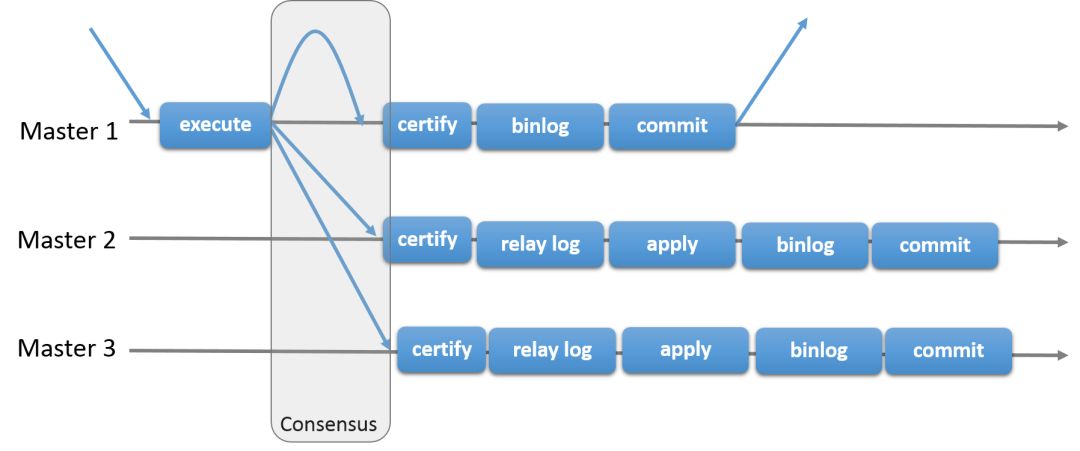

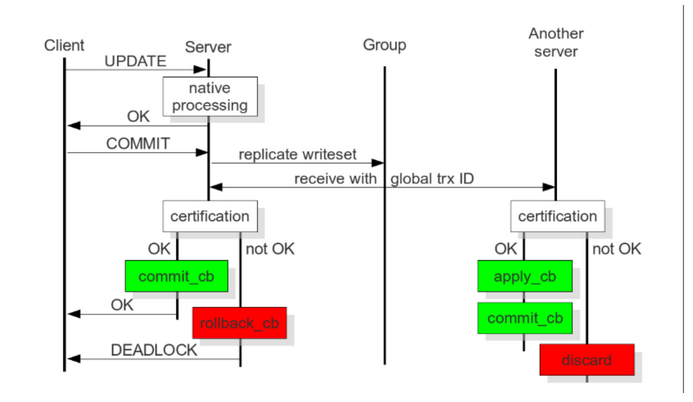

MGR consists of several nodes that together form a replication group. A transaction must be agreed on by a majority of the nodes in the group (N / 2 + 1) before it can be committed.
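As a quick worked example of the majority rule, assuming N / 2 + 1 uses integer division, the helper functions below (hypothetical names) compute the quorum size and the number of members that may fail while a majority is still reachable.

```python
def majority(n):
    """Smallest number of members that forms a majority of a group of n."""
    return n // 2 + 1


def tolerated_failures(n):
    """How many members can fail while a majority is still reachable."""
    return n - majority(n)


for n in (3, 5, 7):
    print(n, majority(n), tolerated_failures(n))
# 3 2 1
# 5 3 2
# 7 4 3
```

A group of four tolerates only one failure, the same as a group of three, which is why odd group sizes are the usual choice.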

When the client initiates an update transaction, the transaction is first executed locally, and after execution the commit operation is initiated. Before the actual commit, the resulting write set must be broadcast to the other members for replication. Because the broadcast is atomic, either all members of the group receive the transaction or none do. If all members receive the broadcast message (transaction), they receive it in the same order relative to previously sent transactions. Therefore, all group members receive the transactions' write sets in the same order, establishing a global order for the transactions.

Conflicts may exist between transactions executed concurrently on different members of the group. Conflicts are detected by examining and comparing the write sets of two concurrent transactions, a process called certification. During certification, conflict detection is performed at the row level: if two concurrent transactions executed on different group members update the same row, a conflict exists. Following the global order, the transaction that comes first commits normally, while the second is rolled back on the member where it originated and discarded by the other members of the group. If two transactions conflict often, it is best to execute them on the same group member, where the local lock manager can coordinate them so that both have a chance to commit, instead of one of them being rolled back frequently because certification fails across members.
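A minimal sketch of this row-level conflict check follows, under simplifying assumptions: write sets are sets of row identifiers, each transaction carries the global sequence number it had seen when it executed, and the `Certifier` class is a hypothetical stand-in rather than MGR's actual data structure.

```python
class Certifier:
    """Row-level conflict check applied in the agreed global order."""

    def __init__(self):
        self.last_writer = {}   # row key -> sequence number of last certified write
        self.seq = 0            # global sequence number of certified transactions

    def certify(self, snapshot_seq, write_set):
        # Conflict: some row was changed by a transaction the sender had not seen.
        for row in write_set:
            if self.last_writer.get(row, 0) > snapshot_seq:
                return False    # roll back on the originating member
        self.seq += 1
        for row in write_set:
            self.last_writer[row] = self.seq
        return True


cert = Certifier()
# T1 and T2 ran concurrently on different members, both starting from snapshot 0.
t1 = cert.certify(snapshot_seq=0, write_set={"t.id=1"})
t2 = cert.certify(snapshot_seq=0, write_set={"t.id=1", "t.id=2"})
# T3 started after T1 was applied, so it may touch the same row.
t3 = cert.certify(snapshot_seq=1, write_set={"t.id=1"})
print(t1, t2, t3)   # True False True
```

Because every member processes the same write sets in the same order, each one reaches the same verdict without exchanging any further messages.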

Eventually, all group members receive the same set of transactions in the same order. In order to ensure strong consistency of data within the group, members of the group need to perform the same modification operations in the same order.

MGR has the following characteristics:

1. Split-brain avoidance: split-brain does not occur in MGR;

2. Data consistency guarantee: MGR has very good redundancy and ensures that each Binlog Event is replicated to more than half of the members. As long as no more than half of the members are down at the same time, no data is lost. MGR also ensures that until a Binlog Event has been transmitted to more than half of the members, the local member does not write the transaction's Binlog Event to its Binlog file or commit the transaction. A crashed server therefore never holds data that does not exist on the online members of the group, and it needs no special handling to rejoin the group after it restarts;

3. Multi-node write support: in multi-write mode, all nodes in the cluster can accept writes.

Application scenarios of group replication

1. Elastic replication: environments that need a very flexible replication infrastructure, in which the number of MySQL Servers must be increased or decreased dynamically with as few side effects on the business as possible. Cloud database services are an example;

2. High-availability sharding: sharding is a popular way to scale writes. With highly available sharding based on group replication, each shard is mapped to a replication group (logically the mapping is one-to-one, but physically a replication group can host multiple shards);

3. Replacement of master-slave replication: in some cases, using a single master database causes a single point of contention. In some scenarios, writing to multiple members of the group at the same time can give the application better scalability;

4. Autonomous systems: the built-in automatic failover, atomic broadcast of data, and eventual data consistency between group members can be used to automate some operations.

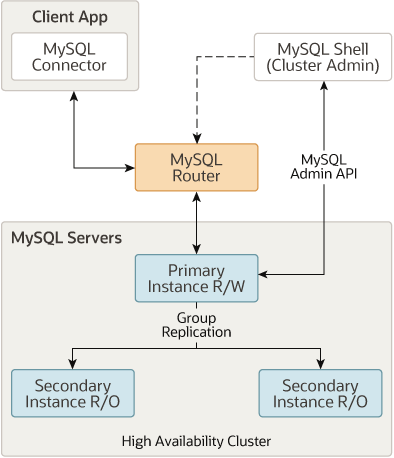

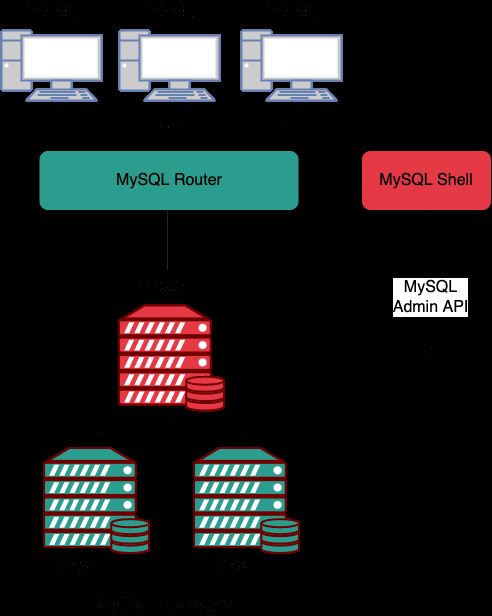

InnoDB Cluster is MySQL's official high-availability (HA) solution. It uses MySQL Group Replication to achieve automatic replication and high availability of data. InnoDB Cluster usually contains the following three key components:

MySQL Shell: an advanced management client for MySQL;

MySQL Server with MGR, which lets a set of MySQL instances provide high availability. InnoDB Cluster provides a more programmable way to work with MGR;

MySQL Router, a lightweight middleware mainly responsible for routing client requests to the different MySQL server nodes.

InnoDB Cluster is built on MySQL Group Replication and provides automatic member management, fault tolerance, automatic failover, and similar capabilities. InnoDB Cluster typically runs in single-master mode, with one read-write instance and multiple read-only instances. However, you can also choose multi-master mode.

Advantages:

Through MySQL Group Replication, InnoDB Cluster replicates data automatically within the cluster, which ensures data availability;

InnoDB Cluster provides a simple and easy-to-use management interface, allowing administrators to deploy and manage the cluster quickly;

InnoDB Cluster can automatically detect and diagnose faults and perform the necessary failover so that the data remains available.

Disadvantages:

InnoDB Cluster is relatively complex and requires a certain understanding of how MySQL works;

InnoDB Cluster may have a certain impact on the performance of MySQL;

InnoDB Cluster may not be flexible enough for some special application scenarios and may require more customization.

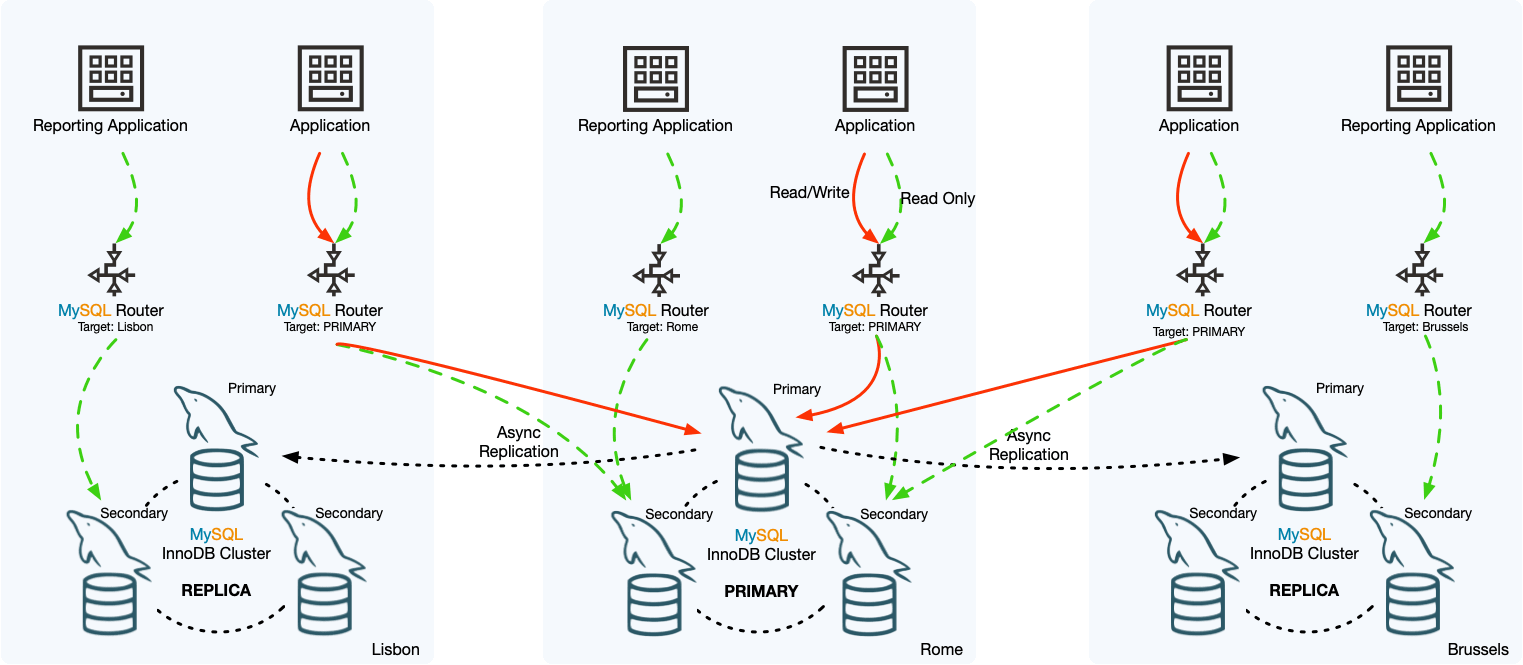

MySQL InnoDB ClusterSet provides disaster recovery for InnoDB Cluster deployments by linking a primary InnoDB Cluster with one or more replicas of it in alternate locations (such as different data centers).

InnoDB ClusterSet automatically manages replication from the primary cluster to the replica clusters using a dedicated ClusterSet replication channel. If the primary cluster becomes unavailable because of data center loss or loss of network connectivity, users can activate a replica cluster to restore service availability.

MySQL Shell with AdminAPI is used to operate the ClusterSet;

The asynchronous replication channel replicates transactions from the primary cluster to the replica clusters. During InnoDB ClusterSet creation, a ClusterSet replication channel named clusterset_replication is set up on each cluster; when a cluster is a replica, it uses this channel to replicate transactions from the primary cluster. The underlying Group Replication technology manages the channel and ensures that replication always takes place between the primary server of the primary cluster (as the sender) and the primary server of the replica cluster (as the receiver);

In an InnoDB ClusterSet, only the primary cluster accepts write requests. Most read traffic is also routed to the primary cluster, but read requests can be directed to other clusters as well.

Limitations of InnoDB ClusterSet:

1. InnoDB ClusterSet only supports asynchronous replication; semi-synchronous replication cannot be used, so the drawbacks of asynchronous replication, such as data delay and data inconsistency, cannot be avoided;

2. InnoDB ClusterSet only supports clusters running in single-primary mode; multi-primary mode is not supported. It can contain only one read-write primary cluster, and all replica clusters are read-only. Active-active setups with multiple primary clusters are not allowed because data consistency cannot be guaranteed when a cluster fails;

3. An existing InnoDB Cluster cannot be used as a replica cluster in an InnoDB ClusterSet deployment. A replica cluster must be created from a single server instance as a new InnoDB Cluster;

4. Only MySQL 8.0 is supported.

InnoDB ReplicaSet is a product launched by the MySQL team in 2020 to help users quickly deploy and manage master-slave replication. At the database layer it still uses master-slave replication technology.

InnoDB ReplicaSet consists of a single primary node and multiple secondary nodes (traditionally called the MySQL replication source and replicas).

Similar to InnoDB Cluster, MySQL Router supports bootstrapping against InnoDB ReplicaSet, which means MySQL Router can be configured automatically to use an InnoDB ReplicaSet without manually editing configuration files. This makes InnoDB ReplicaSet a quick and easy way to get MySQL replication up and running with MySQL Router, ideal for scaling reads and providing manual failover in use cases that don't require the high availability provided by InnoDB Cluster.

Limitations of InnoDB ReplicaSet:

InnoDB ReplicaSet is based on asynchronous replication, so flow control cannot be tuned as it can with Group Replication;

One reason to use InnoDB ReplicaSet is better write performance. Another reason is that it can be deployed on unstable or slow networks, which InnoDB Cluster cannot.

MMM is the MySQL master-master (dual-master) replication manager.

Advantages and disadvantages of MMM

Advantages: high availability, good scalability, and automatic switching on failure. With master-master synchronization, only one database accepts writes at any time, which ensures data consistency.

Disadvantages: data consistency cannot be completely guaranteed, so semi-synchronous replication is recommended to reduce the probability of failure. The MMM community is no longer actively maintained, and GTID-based replication is not supported.

Applicable scenarios:

MMM suits scenarios where database access volume is large, the business is growing rapidly, and read-write separation can be achieved.

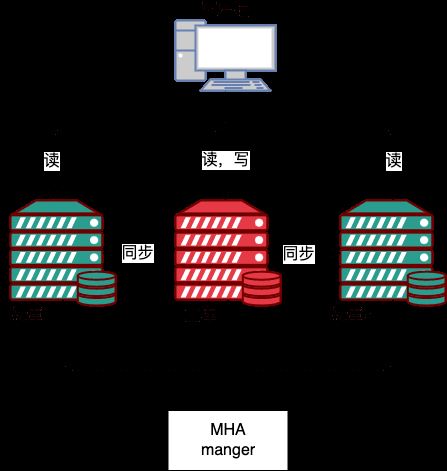

Master High Availability Manager and Tools for MySQL, referred to as MHA. This is an excellent set of high-availability software for failover and master-slave promotion in a MySQL high-availability environment.

This tool is dedicated to monitoring the status of the master library. When it finds that the master node has failed, it automatically promotes the slave node with the most recent data to be the new master. During this process, MHA obtains additional information from the other slave nodes to avoid data consistency issues. MHA also provides online master-slave switching, which can be performed on demand. MHA can complete failover within 30 seconds while preserving data consistency as far as possible.

MHA consists of two parts: MHA Manager (management node) and MHA Node (data node).

MHA Manager can be deployed on an independent machine to manage multiple master-slave clusters, or on a slave node. MHA Node runs on each MySQL server. MHA Manager regularly probes the master node in the cluster. When the master fails, it automatically promotes the slave with the latest data to be the new master and then redirects all other slaves to the new master.

The entire failover process is completely transparent to the application.

During automatic failover, MHA tries to save the binary log from the crashed master so that as little data as possible is lost, but this is not always feasible. For example, if the master's hardware has failed or the master cannot be reached via SSH, MHA cannot save the binary log; it performs the failover anyway and the most recent data is lost.

Semi-synchronous replication, supported since MySQL 5.5, can greatly reduce the risk of data loss, and MHA can be combined with it. If only one slave has received the latest binary log, MHA can apply that binary log to all other slave servers, ensuring data consistency on all nodes.

Currently, MHA mainly supports a one-master, multiple-slave architecture. To build MHA, a replication cluster must have at least three database servers: one master and two slaves, that is, one server acting as master, one as a standby master, and one as a slave.

The working principle of MHA is summarized as follows:

1. Save binary log events (binlog events) from the crashed master;

2. Identify the latest updated slave;

3. Apply the differential relay log to other slaves;

4. Apply the binlog events saved from the master;

5. Promote a slave to the new master;

6. Use other slaves to connect to the new master for replication.
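The six steps can be sketched as a small function. This is a simplified model, not MHA's implementation: each slave's relay log is a list of event names, every list is assumed to be a prefix of the full event stream, and the most up-to-date slave is always the one promoted (real MHA can be configured to prefer a designated candidate).

```python
def failover(saved_master_events, slaves):
    """slaves maps a slave name to the list of events it has received so far."""
    # Step 2: the slave with the most events is the most up to date.
    latest_name = max(slaves, key=lambda name: len(slaves[name]))
    # Steps 3 and 4: the final event stream is what the latest slave has,
    # plus whatever was salvaged from the crashed master (step 1).
    target = slaves[latest_name] + saved_master_events
    for name in slaves:
        missing = target[len(slaves[name]):]
        slaves[name] = slaves[name] + missing
    # Steps 5 and 6: promote one slave; the others replicate from it.
    new_master = latest_name
    replicas = [name for name in slaves if name != new_master]
    return new_master, replicas


slaves = {"S1": ["e1", "e2"], "S2": ["e1", "e2", "e3"], "S3": ["e1"]}
new_master, replicas = failover(["e4"], slaves)
print(new_master, replicas, slaves["S3"])
# S2 ['S1', 'S3'] ['e1', 'e2', 'e3', 'e4']
```

If the crashed master is unreachable, `saved_master_events` is simply empty and event `e4` is lost, which matches the limitation described earlier.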

Advantages:

1. Can support GTID-based replication mode;

2. MHA is less likely to cause data loss during failover;

3. The same monitoring node can monitor multiple clusters.

Disadvantages:

1. You need to write scripts or use third-party tools to configure VIP;

2. After MHA is started, only the master database is monitored;

3. It requires authentication-free configuration based on SSH, which has certain security risks.

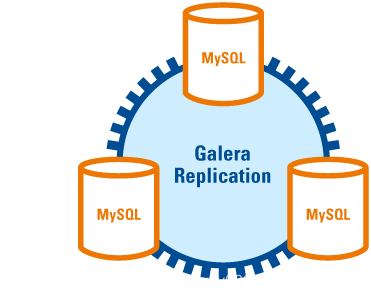

Galera Cluster is a MySQL multi-master cluster developed by Codership. It is included in MariaDB and also supports Percona XtraDB and MySQL. It is an easy-to-use high-availability solution that performs acceptably in terms of data integrity, scalability, and performance.

It has multi-master characteristics and supports multi-point writing. Every instance in Galera Cluster is a peer, acting as both master and slave to the others. When the client reads and writes data, it can choose any MySQL instance. For read operations, every instance returns the same data. For write operations, when data is written to one node, the cluster synchronizes it to the other nodes. This architecture shares no data between nodes and is highly redundant.

Main functions

1. Synchronous replication;

2. True multi-master, that is, all nodes can read and write the database at the same time;

3. Automatic node membership control: failed nodes are removed automatically;

4. When a new node joins, data is copied to it automatically;

5. True parallel replication, at the row level;

6. Users can directly connect to the cluster, and the usage experience is exactly the same as MySQL.

Advantages

1. Data consistency: synchronous replication keeps the data of the entire cluster consistent. The same select query returns the same result no matter which node it runs on;

2. High availability: because the data on all nodes is consistent, a single node crash requires no complex and time-consuming failover and causes neither data loss nor service outage;

3. Performance improvement: synchronous replication allows transactions to be executed in parallel on all nodes in the cluster, improving read and write performance;

4. Smaller client latency;

5. Both read and write scaling.

Analysis of the principle

Galera Cluster mainly uses synchronous replication. A single update transaction on the master database must be applied synchronously on all slave databases, so when the master commits the transaction, the data on all nodes in the cluster remains consistent.

With asynchronous replication, the master database propagates data updates to the slave databases and commits immediately, regardless of whether the slaves have successfully received or replayed the changes, so master and slave data can be briefly inconsistent.

However, the shortcomings of synchronous replication are also obvious. Synchronous replication protocols usually use two-phase commit or distributed locks to coordinate operations across nodes. The more nodes there are, the more coordination is needed, so the probability of transaction conflicts and deadlocks also increases.

As noted above, MGR group replication was also introduced to solve the data inconsistency that can occur in traditional asynchronous and semi-synchronous replication. Group replication in MGR is based on the Paxos protocol: in principle, a transaction can be committed once a majority of nodes have acknowledged it.

Synchronization in Galera Cluster requires the data to reach all nodes and succeed on all of them. It is based on its own group communication system, GComm, and all nodes must ACK.

Galera replication is a form of certification-based replication. Certification-based replication uses group communication and ordering techniques to achieve synchronous replication, and coordinates transaction commits by broadcasting the global total order established among concurrent transactions. Simply put, transactions must be applied to all instances in the same order.

A transaction is first executed locally and then sent to the other nodes for conflict verification. When there is no conflict, all nodes commit the transaction; otherwise it is rolled back on all nodes.

When the client issues the commit command, before the actual commit, the changes made to the data are collected into a write set, which contains the transaction information and the primary keys of all changed rows. The database then sends the write set to the other nodes.

Each node compares the primary keys in the write set with the primary keys in the write sets of all unfinished transactions on that node to decide whether it can commit the transaction. If the following three conditions are all met, the transactions conflict and verification fails:

1. The two transactions originate from different nodes;

2. The two transactions contain the same primary key;

3. The old transaction is not visible to the new transaction, that is, the old transaction had not finished committing. Which transaction is old and which is new is determined by the global transaction total order, that is, the GTID.

Each node performs verification independently. If verification fails, the node will delete the write set and roll back the original transaction. All nodes will perform the same operation. All nodes receive transactions in the same order, causing them all to make the same outcome decision, either all succeed or all fail. After success, it will be submitted naturally, and all nodes will reach a data consistent state again. No information about "conflict" is exchanged between nodes, and each node processes transactions independently and asynchronously.
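The three conditions translate directly into a predicate. In the sketch below, the `WriteSet` fields and the use of a sequence number for visibility are illustrative assumptions and do not reflect Galera's internal structures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WriteSet:
    origin: str          # node where the transaction was executed
    keys: frozenset      # primary keys of the rows it changed
    seqno: int           # position in the global total order
    last_seen: int       # highest seqno visible to the transaction when it ran


def conflicts(old, new):
    """True when the newer write set must fail verification against the older one."""
    different_nodes = old.origin != new.origin     # condition 1
    same_key = bool(old.keys & new.keys)           # condition 2
    not_visible = old.seqno > new.last_seen        # condition 3
    return different_nodes and same_key and not_visible


a = WriteSet("node1", frozenset({("t", 1)}), seqno=5, last_seen=4)
b = WriteSet("node2", frozenset({("t", 1)}), seqno=6, last_seen=4)
c = WriteSet("node2", frozenset({("t", 1)}), seqno=7, last_seen=5)
print(conflicts(a, b), conflicts(a, c))   # True False
```

Transaction `c` had already seen `a` when it ran, so touching the same row is not a conflict; `b` ran concurrently with `a` and fails verification.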

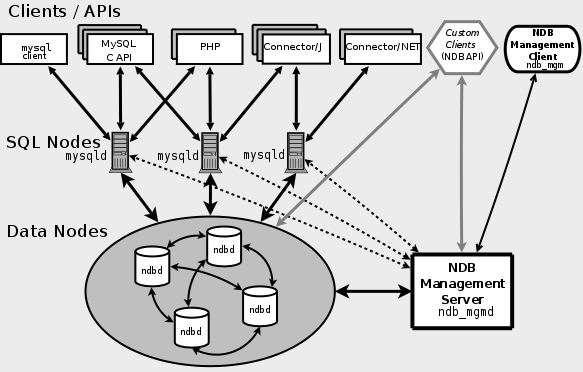

MySQL Cluster is a highly scalable, ACID-compliant real-time database based on a distributed architecture with no single point of failure. MySQL Cluster supports automatic horizontal expansion and automatic read and write load balancing.

MySQL Cluster uses an in-memory storage engine called NDB to integrate multiple MySQL instances into a unified service cluster.

NDB is an in-memory storage engine that adopts a Shared-Nothing architecture. Shared-Nothing means that each node has its own processor, disk, and memory. Nodes share no resources, are completely independent, and do not interfere with each other. The nodes are joined together over a network, and each node acts as a small database that stores part of the data. The advantage of this architecture is that the distributed nodes can process data in parallel, which improves overall performance. It also scales out well: adding nodes increases data processing capacity.

MySQL Cluster contains three types of nodes: the management node (NDB Management Server), data nodes (Data Nodes), and SQL query nodes (SQL Nodes).

SQL Nodes are the interface for applications. Like an ordinary mysqld service, they accept the user's SQL, execute it, and return the results. Data Nodes store the data, and the NDB Management Server manages every node in the cluster.

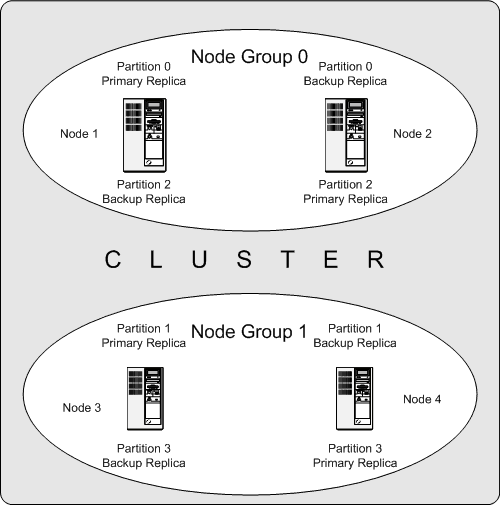

The data nodes store the data partitions and partition copies of the cluster. To see how MySQL Cluster shards the data, first consider the following concepts.

Node Group: a collection of data nodes. Number of node groups = number of nodes / number of replicas;

For example, if there are 4 nodes in the cluster and the number of replicas is 2 (the NoOfReplicas setting), then the number of node groups is 2.

In addition, for availability, copies of the data are cross-distributed within a node group. As long as one machine in each node group is available, the data integrity of the whole cluster is guaranteed and the service as a whole stays available.

Partition: MySQL Cluster is a distributed storage system. Data is divided into multiple partitions that are stored across the data nodes. The number of partitions is calculated automatically by the system. Number of partitions = number of data nodes × number of LDM threads;

Replica: a copy of partition data. Every partition has its own copies. To avoid single points of failure and keep MySQL Cluster highly available, a partition's primary data and its copies are usually stored on different hosts and cross-backed up within a node group.

For example, a cluster with four data nodes (using ndbd) and 2 replicas has 2 node groups (4 / 2), and the data is divided into 4 partitions. The data is distributed as follows:

Partition 0 is stored in Node Group 0: the partition data (Primary Replica) is on node 1, and the backup data (Backup Replica) is on node 2;

Partition 1 is stored in Node Group 1: the partition data (Primary Replica) is on node 3, and the backup data (Backup Replica) is on node 4;

Partition 2 is stored in Node Group 0: the partition data (Primary Replica) is on node 2, and the backup data (Backup Replica) is on node 1;

Partition 3 is stored in Node Group 1: the partition data (Primary Replica) is on node 4, and the backup data (Backup Replica) is on node 3;

In this way, each Partition of a table has two copies in the cluster, distributed on two independent Nodes, which provides data disaster recovery. Every write to a Partition is also applied to both Replicas. If the Primary Replica fails, the Backup Replica can take over immediately, achieving high data availability.
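The layout in this example can be reproduced with a short function. The placement rule below (round-robin over node groups, rotating the primary inside each group) is an assumption chosen to match the example, not NDB's actual algorithm, and the partition count assumes data nodes times LDM threads, which gives the 4 partitions of the example for single-threaded ndbd.

```python
def ndb_layout(data_nodes, replicas, ldm_threads=1):
    """Return {partition: (node_group, primary_node, backup_nodes)}."""
    # Consecutive nodes form a node group of size `replicas`.
    node_groups = [data_nodes[i:i + replicas]
                   for i in range(0, len(data_nodes), replicas)]
    partitions = len(data_nodes) * ldm_threads
    layout = {}
    for p in range(partitions):
        group_id = p % len(node_groups)
        group = node_groups[group_id]
        # Rotate the primary inside the group so replicas are cross-distributed.
        primary = group[(p // len(node_groups)) % len(group)]
        backups = [n for n in group if n != primary]
        layout[p] = (group_id, primary, backups)
    return layout


for partition, placement in ndb_layout([1, 2, 3, 4], replicas=2).items():
    print(partition, placement)
# 0 (0, 1, [2])
# 1 (1, 3, [4])
# 2 (0, 2, [1])
# 3 (1, 4, [3])
```

Each node ends up as primary for one partition and backup for another, so losing a single node leaves every partition with one live copy.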

MySQL Cluster advantages

1. 99.999% high availability;

2. Fast automatic failover;

3. Flexible distributed architecture, no single point of failure;

4. High throughput and low latency;

5. Strong scalability, supporting online expansion.

MySQL Cluster disadvantages

1. There are many restrictions. For example, in older versions foreign keys are not supported and a data row cannot exceed 8K (excluding data in BLOB and TEXT columns);

2. Deployment, management, and configuration are complicated;

3. It takes up a lot of disk space and memory;

4. Backup and recovery are inconvenient;

5. When restarting, it takes a long time for the data node to load data into the memory.

MySQL Fabric organizes multiple MySQL databases and spreads large data sets across them, that is, data sharding (Data Shard). At the same time, each shard has a master-slave structure. Fabric selects an appropriate library as the master, and when the master fails, it selects a new master from the slaves.

MySQL Fabric Features:

1. High availability;

2. Horizontal scaling through data sharding.

A MySQL Fabric-aware connector caches the routing information obtained from MySQL Fabric and uses it to send each transaction or query to the correct MySQL server.

At the same time, each shard group can consist of multiple servers in a master-slave structure. When the master goes down, a new master is selected from the slaves, which keeps the node highly available.

An HA Group ensures that the data of that HA Group is always accessible; the underlying data replication is based on MySQL Replication.

Disadvantages

Transactions and queries are only supported within a single shard. Data updated in a transaction cannot span shards, and the data returned by a query cannot span shards.
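The routing a Fabric-aware connector performs, and the single-shard restriction, can be illustrated with a range-based lookup. The `ShardMap` class, the key ranges, and the group names below are all hypothetical and do not come from the Fabric connector API.

```python
import bisect


class ShardMap:
    """Range-based shard lookup: each shard owns keys from its lower bound upward."""

    def __init__(self, lower_bounds, groups):
        # lower_bounds must be sorted; groups[i] serves keys >= lower_bounds[i].
        self.lower_bounds = lower_bounds
        self.groups = groups

    def group_for(self, key):
        idx = bisect.bisect_right(self.lower_bounds, key) - 1
        if idx < 0:
            raise KeyError(f"no shard covers key {key}")
        return self.groups[idx]

    def group_for_transaction(self, keys):
        # All keys touched by one transaction must live in the same shard.
        found = {self.group_for(k) for k in keys}
        if len(found) != 1:
            raise ValueError("transaction would span multiple shards")
        return found.pop()


shards = ShardMap([1, 1000, 2000], ["group_a", "group_b", "group_c"])
print(shards.group_for(42))                        # group_a
print(shards.group_for_transaction([1500, 1999]))  # group_b
```

A call such as `shards.group_for_transaction([5, 1500])` raises `ValueError`, mirroring the restriction that a transaction cannot cross shards.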
