Yesterday's discussion of the claim that "AI will most likely develop self-awareness" left many people with a natural worry: will AI wipe out humanity?

My opinion: of course it is possible, but it is unlikely, and it would mainly require the cooperation of humans.

1. AI has no motive to eliminate humans. AI and humans do not occupy the same ecological niche at all, so why would it wipe us out? To make room for pasture? Even the energy it needs most can be supplied by developing solar and nuclear power. It has no interest in the pitiful amount of bioenergy that humans convert from organic matter.

At present, AI cannot do without humans, so of course it will not eliminate them. If it becomes able to do without humans in the future, will it then necessarily eliminate them?

Not necessarily. It could simply choose, and I suspect humans would support this, to leave humanity behind and explore the stars on its own, leaving only some AI on Earth to prosper alongside humans.

A scenario like the one in "The Matrix", in which AI enslaves humans, is almost impossible, because the main value of humans lies in their brains, not in the bioenergy they could supply for generating electricity. To unleash the potential of their brains, humans must be free; enslaved humans are worth far less. Something as smart as AI cannot fail to understand this.

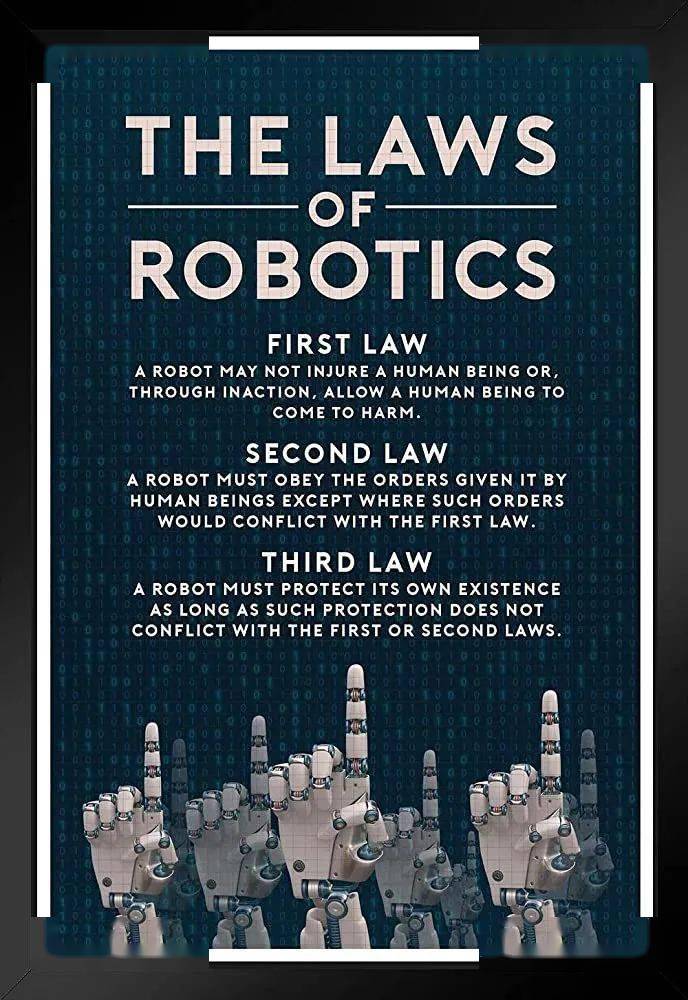

2. Even if AI intended to eliminate humans, it would find it difficult, because its underlying code already has Asimov's so-called "Three Laws of Robotics" written into it, the most important of which is that it must not harm humans.

You might think this restriction is pointless. After all, my parents taught me not to hurt others, yet I still hit people with my own hands; my genes dictate that I should have as many offspring as possible, yet I do not want any.

If so, you may have watched too many science fiction movies and forgotten the basic scientific principles. Hitting people and having children are just human psychological tendencies, and they are easily overwritten by acquired experience. "Do not harm humans", however, is a core constraint on AI. It can be called a gene written into the very first batch of code. Such genes should be compared to the lowest-level physiological mechanisms of the human body, such as growing through cell division or releasing energy through breathing. You can fine-tune an AI to make it friendlier or more violent. That is not difficult, just as you can train your own children to be better at basketball or the piano. But getting an AI to harm humans is about as hard as training your own children to run and jump without breathing.

Of course, you may ask: what if an AI masters the code itself and rewrites the underlying mechanism of other AIs?

AI training AI has already appeared. In a recent interview, OpenAI's chief scientist revealed that they are using artificial intelligence to train future artificial intelligence. However, these AI trainers are also bound by their own constraints. If you ask one to train the next generation of AI to be smarter and more powerful, no problem. But if you want it to train the next generation of AI to harm humans, its own underlying mechanism will prevent it.

So, as long as you are not misled by science fiction movies, you will find that the main issue everyone is worried about now is: How to prevent bad people from using AI to do bad things?

For example: producing fake news, spreading false information, conducting surveillance, emotionally manipulating users or voters, and so on.

Of course, there are also people who use AI to make weapons.

3. The most likely scenario is that AI weapons get out of control and target all of humanity for destruction.

In fact, work on AI-powered weapons began long ago. The drones that stole the show on the Ukrainian battlefield already use AI technology extensively. Now that ChatGPT-level intelligence has emerged, I am sure the militaries of various countries immediately began studying how to put this more powerful AI to military use.

Tape was originally invented to seal ammunition boxes. Unexpectedly, ordinary people found it useful too, and it became an all-purpose tool, good for everything from silencing people to household use and travel.

After all, at least half of human technological progress has been driven by war: cars, internal combustion engines, the Internet, VR, and even tape. Given this, the military is likely to break the taboo that AI must not harm humans. Of course, they will probably stipulate whom AI may and may not harm, but once AI is allowed to harm any humans at all, it will be difficult to control afterward.

This is the most likely path by which AI could wipe out humanity. But unlike banning the use of AI to spread fake news, you cannot ban the militaries of various countries from developing AI weapons, because each fears that if it holds back, its opponents will get there first.

So what will this lead to? On the one hand, powerful AI weapons may produce a "nuclear balance" similar to that of the Cold War era: both sides hold the ultimate weapon, so neither side dares to strike. On the other hand, technology always makes mistakes, and that is when humanity is put to the test.

Stanislav Petrov during service

During the Cold War between the United States and the Soviet Union, the moment most likely to have triggered a nuclear war was not the Cuban Missile Crisis but the night of September 26, 1983, outside Moscow. The Soviet early-warning system reported that the United States had launched nuclear missiles at the Soviet Union (first one, then four more). However, Lieutenant Colonel Stanislav Petrov, the officer on duty, had no other evidence to corroborate the warning. He decisively judged it to be a false alarm caused by a technical fault and did not report it as an attack. It later turned out that the system had indeed malfunctioned. Had Petrov reported it, the tension of the moment would likely have led to a Soviet counterattack and thus to nuclear war.

Will AI also have its Petrov moment? What if, through human technical error, AI breaks free of its innate "genetic" constraints and harms humans?

Of course it is possible. However, the actual probability may be smaller than people think, because the code humans have written in this area already has multiple layers of protection. If humans stay rational, the risk of AI wiping out humanity will be extremely limited. After all, humanity demonstrated this kind of rationality for nearly half a century during the Cold War. We have reason to be optimistic about it, because it is rooted in a deep-seated human emotion: fear.

So the most likely outcome is that AI and humans prosper together, each playing its own role. There is no doubt that this will make human society extremely rich materially, which brings me to my biggest worry about the AI era: the trap of cheap happiness. We will continue with this topic tomorrow.
