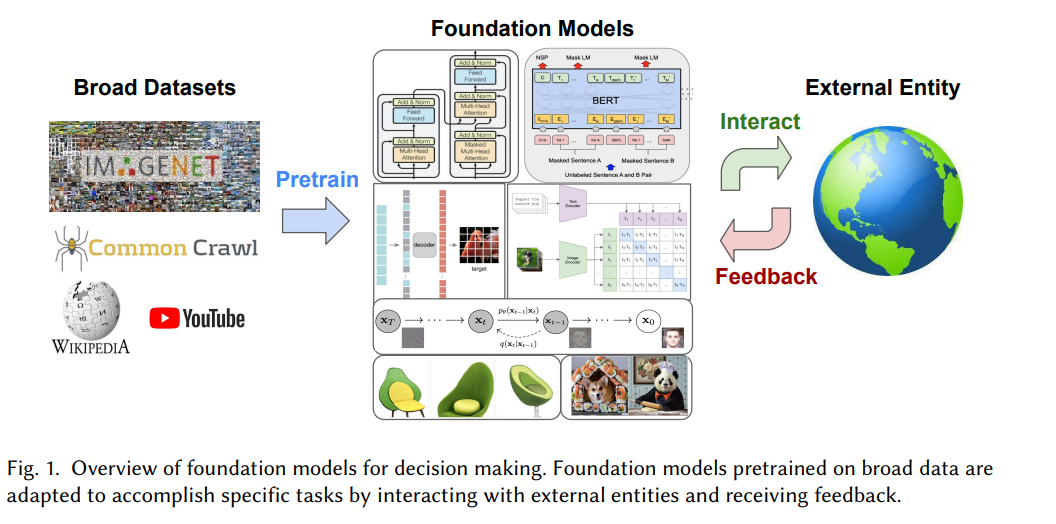

Pre-trained foundation models, trained with self-supervised learning on broad datasets, have shown a strong ability to transfer knowledge to diverse downstream tasks. As a result, these models are increasingly applied to more complex problems such as long-horizon reasoning, control, search, and planning, and deployed in applications such as dialogue, autonomous driving, healthcare, and robotics. Going forward, they will also interface with external entities and agents. For example, in dialogue applications a language model converses with a person over multiple turns; in robotics, a perception-control model executes actions in a real environment.

These scenarios pose new challenges for foundation models, including: 1) how to learn from feedback given by external entities (such as human ratings of conversation quality), 2) how to adapt to modalities that are uncommon in large-scale language or vision datasets (such as robot actions), and 3) how to reason and plan over long horizons.

These questions have long been at the core of sequential decision making in the traditional sense, which spans reinforcement learning, imitation learning, planning, search, and optimal control. In contrast to the foundation model paradigm, where pre-training uses broad datasets with billions of image and text tokens, prior work on sequential decision making has mainly focused on task-specific or tabula rasa settings with limited prior knowledge.

Although having little or no prior knowledge makes sequential decision making look difficult, research in the area has surpassed human performance on several tasks, such as playing board games and Atari video games, and operating robots to complete navigation and manipulation tasks.

However, because these methods learn to solve tasks from scratch, without broad knowledge from vision, language, or other datasets, they often generalize poorly and are sample-inefficient. For example, solving a single Atari game takes 7 GPUs running for a day. Intuitively, broad datasets similar to those used for foundation models should also be useful for sequential decision-making models. For example, the Internet holds countless articles and videos about how to play Atari games. Similarly, broad knowledge about the properties of objects and scenes is useful for robots, and knowledge about human desires and emotions can improve dialogue models.

Although research on foundation models and research on sequential decision making have been largely disjoint because of their different applications and focuses, the two are increasingly intersecting. On the foundation model side, with the emergence of large language models, target applications have expanded from simple zero-shot or few-shot tasks to problems that require long-horizon reasoning or multiple interactions. Conversely, in sequential decision making, inspired by the success of large-scale vision and language models, researchers have begun to curate ever larger datasets for learning multimodal, multi-task, general-purpose interactive agents.

The boundary between the two fields is becoming increasingly blurred. Some recent work has studied using pre-trained foundation models (such as CLIP and ViT) to bootstrap the training of interactive agents in visual environments, while other work has studied foundation models as dialogue agents optimized through reinforcement learning with human feedback. There is also work on adapting large language models to interact with external tools such as search engines, calculators, translation tools, MuJoCo simulators, and program interpreters.

Recently, researchers from the Google Brain team, UC Berkeley, and MIT argued in a paper that research on foundation models and research on interactive decision making stand to benefit from each other. On the one hand, applying foundation models to tasks involving external entities can benefit from interactive feedback and long-horizon planning. On the other hand, sequential decision making can exploit the world knowledge in foundation models to solve tasks faster and generalize better.

Paper address: https://arxiv.org/pdf/2303.04129v1.pdf

To stimulate further research at the intersection of the two fields, the researchers scope out the problem space of foundation models for decision making. The paper also provides technical tools for understanding current research, reviews open challenges and unresolved questions, and anticipates potential solutions and promising approaches to address these challenges.

The paper is divided into the following five main chapters.

Chapter 2 reviews the relevant background on sequential decision making and gives example scenarios where foundation models and decision making are best considered together. It then describes how the different components of a decision-making system can be built around foundation models.
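As a rough illustration of the sequential decision-making setting reviewed there, the sketch below runs an agent-environment loop and accumulates a discounted return. It is not taken from the paper: `ChainEnv`, `rollout`, `always_right`, and `random_policy` are hypothetical names invented for this example, and the toy chain environment is an assumption chosen only to keep the code self-contained.

```python
import random
from dataclasses import dataclass


@dataclass
class ChainEnv:
    """Hypothetical toy environment: walk right along a chain to reach the goal."""
    length: int = 5
    position: int = 0

    def reset(self) -> int:
        # Start every episode at the left end of the chain.
        self.position = 0
        return self.position

    def step(self, action: int):
        # action 1 moves right; any other action moves left.
        move = 1 if action == 1 else -1
        self.position = max(0, min(self.length, self.position + move))
        done = self.position == self.length
        reward = 1.0 if done else 0.0  # sparse reward, only at the goal
        return self.position, reward, done


def rollout(env, policy, gamma=0.99, max_steps=100):
    """Run one episode and return the discounted return sum_t gamma^t * r_t."""
    state = env.reset()
    total, discount = 0.0, 1.0
    for _ in range(max_steps):
        action = policy(state)                  # the agent picks an action
        state, reward, done = env.step(action)  # the environment responds
        total += discount * reward
        discount *= gamma
        if done:
            break
    return total


def always_right(state: int) -> int:
    """A hand-written policy that always moves toward the goal."""
    return 1


def random_policy(state: int) -> int:
    """A policy with no prior knowledge: it acts uniformly at random."""
    return random.choice([0, 1])
```

Calling `rollout(ChainEnv(), always_right)` and `rollout(ChainEnv(), random_policy)` contrasts a policy that already "knows" the task with one that starts from scratch. In the framing of the article, a foundation model could stand in for the `policy` callable or for parts of the environment, although how that is done is the subject of the later chapters and is not shown here.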

Chapter 3 explores how foundation models can be used as generative models of behavior (e.g. skill discovery) and generative models of the environment (e.g. model-based rollouts).

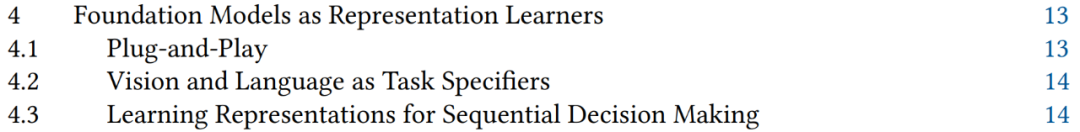

Chapter 4 explores how foundation models can serve as representation learners for states, actions, rewards, and transition dynamics (e.g. plug-and-play vision-language models, model-based representation learning).

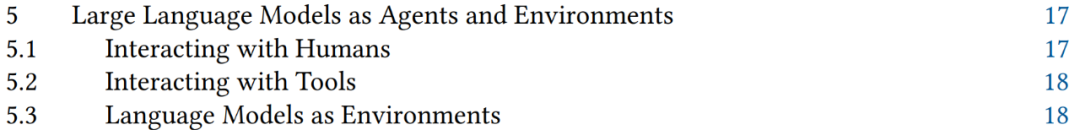

Chapter 5 explores how language foundation models can serve as interactive agents and environments, which makes it possible to consider new problems and applications (language model reasoning, dialogue, tool use) under the sequential decision-making framework.

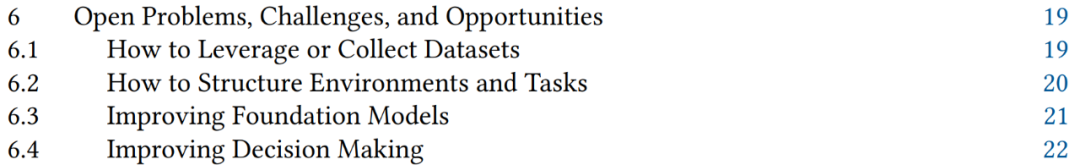

In the last chapter, the researchers outline open problems and challenges and propose potential solutions (for example, how to leverage broad data, how to structure environments, and which aspects of foundation models and sequential decision making can be improved).

Please refer to the original paper for more details.
