Author | Wu Feining Li Shuiqing

Editor | Xin Yuan

In recent days, new AI scams have exploded across the country.

First came AI-fabricated papers and news stories. Then livestream sellers appeared wearing what were suspected to be AI-swapped faces of Yang Mi and Dilraba. Yesterday, the story of an "AI telecom fraud that took 4.3 million yuan in 10 minutes" in Baotou, Inner Mongolia, trended on Weibo and drew national attention.

Just this morning, a set of pictures showing an explosion at the Pentagon, which had triggered a sudden drop in US stocks, was officially confirmed to be fake and is suspected to have been synthesized by AI.

As AI technology enters a new wave of real-world deployment, AI deepfake content has spread virally around the world, causing serious concern in the industry.

As early as 2019, Zhidongxi investigated and reported on the black market for AI face-swapping deepfakes ("AI face-swapping black market: 100 yuan buys a package of 200 face-swapped pornographic videos, and 5 photos are enough to customize a video"). In the years since, the contest between deepfake and anti-deepfake technology has continued, and the relevant authorities have gradually improved the laws and regulations. Now, with the iteration of large AI models represented by GPT-4, deepfake content is making a comeback and causing even greater disruption around the world.

From text to pictures, from human voices to moving video, AI can now reproduce the real thing with high fidelity, which lets fraudsters deceive the public in all kinds of ways. Fraud schemes keep multiplying and are hard to guard against. Even many young people struggle to tell whether AI-forged content is authentic, and the elderly and children are more easily deceived. Once criminals exploit that trust, the consequences can be disastrous.

An artificial intelligence expert told Zhidongxi that it is very difficult for ordinary people to identify, with the naked eye, the audio and video content generated by current AI deepfake technology.

Traditional scams have put on a "new coat" of AI, making them better hidden and harder to detect. How do these new AI scams work? How can hard-to-distinguish AI fakes be detected? What are the remedies for the chaos in the industry? This article sorts through and analyzes the new AI scams now in circulation and discusses these questions in depth.

1. A 10-minute face-swapped video call cost a victim 4.3 million yuan: be wary of these four new AI scams

In March this year, an open letter titled "Pause Giant AI Experiments" was published on the website of the Future of Life Institute. Thousands of scientists and business executives, including Tesla CEO Elon Musk and Apple co-founder Steve Wozniak, signed it, calling for a pause on the training of AI systems more powerful than GPT-4. They all pointed to the possible negative uses of powerful AI technology.

The negative uses of AI are exploding much faster than we imagined. As AI capabilities iterate and reach deployment ever faster, forgery becomes easier and the results more realistic. With this has come an endless stream of fake news and fake pictures, and malicious incidents involving voice and face swaps have caused public concern. Many people feel that the common-sense notions that "a picture is proof" and "seeing is believing" have suffered their most violent shock in history.

1. Face-swapping video: defrauded 4.3 million in 10 minutes

Perhaps many vicious events caused by AI face-changing have already happened around us, but we haven’t noticed it yet.

Recently, the cyber investigation unit of the Public Security Bureau of Baotou, Inner Mongolia Autonomous Region, cracked a telecom fraud case that used AI. Posing as a friend who urgently needed money, the criminal contacted the victim, Mr. Guo, through a WeChat video call.

Trusting his friend, and having seemingly verified the other party's identity through the video chat, Mr. Guo let his guard down and transferred 4.3 million yuan to the criminal's bank card in two remittances. He never expected that the "friend" in the WeChat video call had been produced with AI face-swapping technology. At present, 3.3684 million yuan has been intercepted and recovered by the police, while 931,600 yuan had already been transferred away.

AI face-swapping is the fraud method that most easily wins the victim's trust. Scammers use AI to put on the face of someone the victim knows and then call the victim, so that the identity appears to have been confirmed.

Faced with this new type of fraud, you should first protect your personal information: do not casually give others anything involving your privacy, and do not click on unfamiliar links or download unfamiliar software. Second, you should verify the other party's identity through several channels and stay alert to potential risks.

A function closely related to "AI face-changing" is "AI undressing". In March this year, a news story about "a woman's subway photo being undressed by AI with one click and circulated" became a trending search. A photo of a woman riding the subway was "undressed" with one click using AI software and maliciously circulated, and the perpetrators also widely spread false information to slander her.

Such "one-click undressing" technology appeared abroad as early as 2019, but amid widespread public controversy and condemnation the project was quickly shut down and taken offline. The trend has resurfaced in China during this year's AI craze. Searching the Internet with keyword combinations such as “AI”, “dress-up”, and “software” still turns up many well-hidden related tools. People with ill intent may even use them to produce and spread pornographic videos. AI video "dress-up" services have become a black-market industry with a complete supply chain.

"AI face-changing" is widely used in derivative edits of films and TV dramas, spoof videos, and meme production, but the legal risks and infringement disputes behind it cannot be ignored. Recently, according to information on the Qichacha website, Shanghai Houttuynia Information Technology Co., Ltd. was sued by a number of influencer bloggers over one of its face-swapping mobile apps.

▲There are nearly a hundred “AI face-changing” applications in the App Store

Earlier, a video blogger named "Special Effects Synthesizer Hong Liang" used AI face-swapping in his videos to replace the face of Yang Yang, the male lead of the TV series "You Are My Glory", with his own. He was eventually hounded by the lead actor's fans, which attracted the attention of many netizens.

▲ Some AI synthesized face-changing videos uploaded in the account of "Special Effects Synthesizer Hong Liang"

The blogger used face-swaps with male stars as a gimmick, but his real aim was to drive traffic to his paid course on film and television special effects. His behavior nonetheless infringed on the rights of actors who appeared in the series, such as Dilraba Dilmurat and Yang Yang. After "Kurst" became popular, many fans likewise made derivative edits by swapping its clips with those from the TV series version of "Journey to the West". These edits helped the show's reach and buzz, but they also caused some trouble for its actors.

Such traffic-chasing behavior has harmed and troubled both the celebrities whose faces were swapped and the members of the public who are curious about the technology. The boundary between entertainment and technology should not be blurred to this degree.

2. Sound synthesis: replicating the human voice to conceal the truth

First "AI Stefanie Sun" went viral, and then AI voice cloning broke into the dubbing industry. AI voice synthesis has been heavily hyped, and it has also spawned many malicious incidents and victims.

One netizen used clips of songs by the well-known American singer Frank Ocean to train a model and generate songs imitating his musical style. He posted these "works" on underground music forums and passed them off as versions accidentally leaked during recording. The ruse worked, and the songs sold for nearly $970 (about 6,700 yuan).

Not long ago, Peabody Films, a film production company in Spain, was also targeted by an AI phone scam. The scammer pretended to be the famous actor Benedict Cumberbatch and claimed that he was very interested in the company's film and intended to cooperate, but said the company first needed to transfer a deposit of 200,000 pounds (about 1.7 million yuan) before the details of the cooperation could be discussed.

The film company realized that something was wrong, and after checking with the actor's agency, they discovered that it was an AI scam from beginning to end.

There are many overseas reports of AI-cloned voices being used for fraud and extortion, and some cases involve amounts as high as one million US dollars. Scammers of this kind often extract someone's voice from phone recordings and similar material, synthesize it, and then use the forged voice to deceive the person's acquaintances. The public therefore needs to be wary of unfamiliar calls, and to remember that even an acquaintance's voice may be forged.

In its latest episode on May 22, the US CBS television news magazine program 60 Minutes demonstrated live how hackers cloned voices to deceive colleagues and successfully steal passport information.

▲60 Minutes uploaded the full video on Twitter

In just 5 minutes, the hacker cloned Sharyn's voice with AI and used a tool to spoof the name shown on the phone's caller ID. He successfully deceived her colleague and easily obtained her name, passport number, and other private information.

3. Falsified photos: Trump was "arrested" and the Pope wore a down jacket on the catwalk

While AI face-swapped videos are spreading everywhere, AI-faked photos are even more widespread.

On May 22, Eastern Time, a picture of an explosion near the Pentagon went viral on social media. The wide circulation of this fake photo even caused the S&P 500 index to briefly fall about 0.3% to an intraday low, turning a gain into a loss.

A spokesman for the US Department of Defense promptly confirmed that this was a fake picture generated by AI. The picture showed obvious signs of AI generation, such as slightly crooked street lights and a fence growing out of the sidewalk.

The photos of couples in the 1990s generated by Midjourney have made it difficult for netizens to tell whether they are real or fake. In March, several photos of former US President Trump being arrested went viral on Twitter.

In the photos, Trump is surrounded by police and pushed to the ground, looking embarrassed and panicked. He hides his face and weeps at a hearing, and even wears a prison uniform in a damp, dark cell. The images were created with Midjourney by Eliot Higgins, founder of the citizen journalism website Bellingcat. After the photos were posted, his account was blocked.

▲A set of Trump "arrested" images generated by Midjourney

Not long after the "Trump arrest pictures" were released, a photo of Pope Francis wearing a Balenciaga down jacket caused an uproar on the Internet. It drew more than 28 million views soon after it was posted, and many netizens believed it was real.

▲The Pope wore a Balenciaga down jacket on the catwalk

Creator Pablo Xavier said that he had no idea that this set of pictures would become popular. He just suddenly thought of Balenciaga's down jackets, the streets of Paris or Rome, etc.

In the Midjourney section of the Reddit forum, a set of images titled "The 2001 Cascadia magnitude 9.1 earthquake caused a tsunami that devastated Oregon" circulated widely. The disaster scenes in the photos are chaotic: relief workers, journalists, government officials and others appear one after another, and people look worried, frightened and panicked. In fact, this is an AI-made "disaster" that never happened. With their complete details and realistic colors, the pictures make it hard to tell real from fake.

4. Fabricated news: AI becomes a rumor mill

On May 7, the Cyber Security Brigade of the Public Security Bureau of Pingliang City, Gansu Province detected a case of AI concocting false information.

According to reports, the suspect used ChatGPT to modify and edit trending social news he had collected from across the Internet, and fabricated a fake story that "a train hit road construction workers in Gansu this morning, killing 9 people", in order to gain traffic and attention that he could monetize.

This news received over 15,000 views as soon as it was released. Fortunately, the police intervened in the investigation before public opinion further fermented, and confirmed that the suspect used AI technology to fabricate news.

It is not only readers who must spot AI fake news on phishing websites; established media are sometimes deceived by AI fake news as well.

On April 24, the British "Daily Mail" published a story saying that "a 22-year-old Canadian man had 12 plastic surgeries in order to debut as an idol in South Korea and eventually died."

▲ According to reports, in order to play Jimin from the K-pop star BTS group in an upcoming American drama, the 22-year-old Canadian actor spent US$22,000 on 12 plastic surgeries and eventually died

This news attracted the attention of many media around the world. TV stations such as South Korea's YTN News Channel quickly followed up with reports. Netizens also expressed their sympathy and regret.

▲Netizens expressed regret at the news of his death

However, the story reversed completely after a reporter in South Korea and the American radio station iHeartRadio raised questions: the man might not exist at all. Netizens ran his photos through an AI content detection website and found a 75% probability that the photos were generated by AI. When South Korea's MBC TV station checked with the police, it was told that "no similar death case reports have been received." The story was promptly labeled "AI fake news."

But the matter is not over yet. At the beginning of this month, the man's family decided to sue the media that reported "fake news" and the agency to which the man belonged, as well as the first reporter who claimed that the man was dead. The implication is that the photo was not generated by AI, and the man did die from surgical complications caused by excessive plastic surgery.

Without verifying his identity or holding any substantive evidence, many media outlets claimed that the man's identity and information were fabricated, turning a farce attributed to AI into a tragedy.

Such AI-compiled news not only misleads the public, but also leaves passers-by who lack the time and patience to follow the whole story unable to tell what is true.

2. Celebrities get "AI-ed": "AI Stefanie Sun" becomes a hit with covers, and "AI Yang Mi" sells goods with a swapped face

When AI technology runs rampant, entertainment stars are the first and hardest hit.

Stefanie Sun, who has become popular recently, posted a long post last night in response to the emergence of "AI Stefanie Sun".

▲Stefanie Sun responds to the emergence of "AI Stefanie Sun" (Chinese translation version)

She took a fairly tolerant attitude toward the emergence of "AI Stefanie Sun" and did not pursue legal action. She said: "It is impossible to compare with a 'human' who can release a new album in just a few minutes." She believes that although it (AI) has neither emotions nor variations in pitch, humans, however fast, cannot outpace it.

It is not difficult to see from the response that although Stefanie Sun does not appreciate the music generated by AI, it is indeed difficult for humans to catch up with the learning ability of robots in a short time. She chose to treat these hot song cover videos indifferently.

Singer Stefanie Sun was the first to re-enter the public eye because of AI covers. Her distinctive timbre and singing style adapt well to many popular songs. Using AI technology, netizens have had her "cover" songs such as "Hair Like Snow", "Rainy Day" and "I Remember", each of which has more than one million views.

▲The most played songs of "AI Stefanie Sun"

Stefanie Sun is not the only singer to be "covered" by AI. AI Jay Chou, AI Cyndi Wang, AI Tengger and others have appeared one after another.

Although Stefanie Sun chooses not to pursue legal responsibility, when AI technology has been abused to the extent of harming commercial interests and touching legal red lines, she still needs to safeguard her legitimate rights and interests in a timely manner.

According to China News Network, many livestream rooms have recently begun using AI to put celebrities' faces on their hosts. Female celebrities such as Yang Mi, Dilraba and Angelababy have become prime face-swapping targets. Hosts wearing celebrity faces draw more traffic and save on advertising costs, while consumers and fans may be deceived into buying because of the celebrity effect.

According to a website that provides face-swapping services, a buyer with a ready-made model and enough source material can synthesize several hours of video in only half an hour to a few hours. A full set of models costs 35,000 yuan, far below the fee for inviting a celebrity to sell goods in a livestream.

On several major e-commerce platforms, keywords such as "AI face-changing" and "video face-changing" are blocked, but many related products can still be found by varying the search keywords or using other workarounds.

AI face-changing technology is not a recent development. As early as 2017, a netizen named DeepFake changed the face of the heroine in an erotic movie into Hollywood star Gal Gadot. This was the first time that AI face-changing technology appeared in the public eye. Later, due to the dissatisfaction of a large number of complainants, his account was officially banned, and the subsequent AI face-changing technology was named "DeepFake" after him.

AI face-swapping first became widely popular in China at the beginning of 2019, when a video in which the face of Zhu Yin, who played Huang Rong in "The Legend of the Condor Heroes", was replaced with Yang Mi's through AI technology went viral on the Internet. Some netizens said the swap looked seamless, while others suggested that it might infringe on the original actor's portrait rights. The producer, Mr. Xiao, responded that the video was mainly intended for technical exchange and would not be used for profit.

▲Netizens replaced the role of "Huang Rong" played by actor Zhu Yin in "The Legend of the Condor Heroes" with the face of actor Yang Mi

It also marked an early start to the domestic use of AI for derivative edits of films and TV dramas.

AI technology has long been targeted by criminals and used in various face-changing tricks. Not only celebrities in the entertainment industry, but also many ordinary people have been blackmailed by so-called "private photos". Several police officers from the cybersecurity department said that the lack of supervision of AI deep synthesis technology has caused many information security risks and chaos in network communication, and even indirectly led to the occurrence of criminal cases.

3. A large-scale AI law popularization movement has emerged. What behaviors are infringing and illegal?

Many people's likenesses and voices have been spread without permission, audio and video works have been used for profit without any copyright agreement, and, worse still, criminals have used forged information to commit property fraud or even kidnapping and extortion, harming people's personal and property rights.

Faced with these more severe situations, people need to take up legal weapons to protect themselves. What will follow will be a large-scale AI legal popularization movement that is beneficial to industrial development.

With face and voice forgery rampant, the first problem is the infringement of personal privacy. According to Article 1019 of the Civil Code: "No organization or individual may infringe upon the portrait rights of others by defaming or defacing their portraits, or by forging them through information technology or other means. Without the consent of the portrait rights holder, no one may produce, use, or disclose the holder's portrait, except as otherwise provided by law." At the same time, "for the protection of natural persons' voices, refer to the relevant regulations applicable to the protection of portrait rights." What worries people even more is that if forged information falls into the hands of lawbreakers, trust may be further exploited to infringe on people's property rights and even their right to health.

Beyond personal rights, copyright infringement involving the audio and video materials used in AI-forged content has also attracted much attention. Singer Stefanie Sun recently wrote that she was indifferent to "AI Stefanie", but more people who have been impersonated have chosen to take up legal weapons to protect themselves. For example, according to media reports, Shanghai Houttuynia Information Technology Co., Ltd. was sued by a number of influencer bloggers over one of its face-swapping mobile apps.

You Yunting, a senior partner and intellectual property lawyer at Shanghai Dabang Law Firm, wrote that under intellectual property law, using a real person's voice to train artificial intelligence and generate songs will most likely not infringe if it is not for commercial purposes. Before publishing online, however, one should first obtain authorization for the relevant lyrics and music copyrights, the accompaniment, and the video materials. Works generated by artificial intelligence have inherent copyright defects and should not enjoy copyright or command licensing fees. If works that users generate with artificial intelligence are infringed by others, the users can protect their rights under the Anti-Unfair Competition Law.

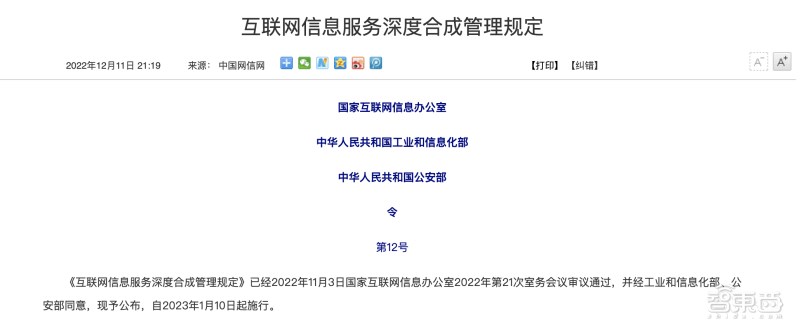

In fact, China already has some regulations specific to artificial intelligence. In December 2022, the Cyberspace Administration of China and other departments promulgated the "Regulations on the Management of Deep Synthesis of Internet Information Services", whose 25 articles set out rules for the use of deep synthesis technology. These include a prohibition on using deep synthesis services to infringe on the legitimate rights and interests of others, a requirement that app distribution platforms carry out listing reviews, and a requirement that service providers and technical supporters strengthen technical management.

On April 11, the Cyberspace Administration of China followed quickly with the draft "Measures for the Management of Generative Artificial Intelligence Services (Draft for Comments)". The generative artificial intelligence it covers includes technologies that generate text, images, sound, video, code and other content based on algorithms, models, and rules, so current forms of AI forgery can be said to fall comprehensively within its scope. ("Breaking news! The first national AIGC regulatory document, Generative AI Service Management Measures Released for Comments")

In addition, the "Cybersecurity Law of the People's Republic of China", the "Data Security Law of the People's Republic of China", the "Personal Information Protection Law of the People's Republic of China", the "Internet Information Services Management Measures" and other laws and administrative regulations promulgated by China all contain relevant provisions, which can serve as a basis for safeguarding legitimate rights and interests.

4. Ordinary people have “defense skills” in AI content recognition, and platforms must take responsibility

"Counterfeiting" and "anti-counterfeiting" did not begin with AI, but today's AI technology makes counterfeiting easier and the fakes harder to distinguish. Faced with the flood of AI-forged content, do people have the skills to identify it themselves?

Xiao Zihao, co-founder and algorithm scientist of RealAI, a well-known security company, told Zhidongxi that deepfake technology is constantly evolving and that the generated audio and video are becoming more and more realistic, making them very difficult for ordinary people to identify with the naked eye.

"If ordinary people encounter this kind of situation, they can deliberately ask the other party to perform some actions during the video call, such as shaking their head or opening their mouth. If the scammer's technique is weak, we may spot flaws along the edges of the face or in the teeth, and so recognize the AI face swap. However, this method still struggles to expose 'high-level' fraudsters. In addition, you can ask about several pieces of private information that only you and the person asking to borrow money would know, to verify the other party's identity."

"While guarding against being deceived, you should also protect your own images and try to avoid posting large numbers of your photos and videos on public platforms, which only makes things easier for criminals. The raw materials for deepfake videos are personal pictures and videos, and the more of this data there is, the more realistic and harder to identify the resulting videos will be," Xiao Zihao added.

AI makes scams and misinformation more difficult to detect, and the review and control of technology companies and content distribution platforms becomes more important.

Apple co-founder Steve Wozniak warned in a recent interview that AI content should be clearly labeled and that the industry needs to be regulated. Whoever publishes AI-generated content should be held responsible for it, but this does not mean that large technology companies can escape legal sanctions. Wozniak believes that regulators should hold large technology companies accountable.

According to China's "Regulations on the Management of Deep Synthesis of Internet Information Services", content providers must label generated pictures, videos and other content as required. Domestic social platforms such as Xiaohongshu have already begun flagging suspected AI-generated content, which can play a preventive role. But the last line of defense against AI-generated content is the users themselves; media platforms can only act as a filter.

Conclusion: Resist negative applications, AI offensive and defensive battle begins

Evolving AI technology makes generating text, images, and videos easier and the results more realistic. At the same time, deepfake content is running rampant. At best it confuses people and is exploited for profit; at worst, criminals use it to commit fraud or even blackmail. This has drawn urgent attention to the supervision of AI-generated content.

As new methods of AI fraud cause social problems, users, technology providers and operators, content distribution platforms and content producers in the industry all need to strengthen self-discipline. In fact, our country has promulgated a series of relevant laws and regulations in advance. The exposure of negative AI application issues is expected to lead to a nationwide technology legalization movement, thus promoting healthier development of the industry.
