Translator | Bugatti

Reviewer | Sun Shujuan

The past ten years have been the era of deep learning. We have been thrilled by a series of milestones, from AlphaGo to DALL-E 2. Countless products and services driven by artificial intelligence (AI) have entered daily life, including Alexa devices, ad recommendations, warehouse robots, and self-driving cars.

In recent years, the size of deep learning models has grown exponentially. This is nothing new: the Wu Dao 2.0 model contains 1.75 trillion parameters, and training GPT-3 takes about 25 days on 240 ml.p4d.24xlarge instances of the SageMaker training platform.
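To put these figures in perspective, here is a back-of-the-envelope calculation. It is only a rough sketch under stated assumptions: each ml.p4d.24xlarge instance is taken to have 8 NVIDIA A100 GPUs, every GPU is assumed busy for the full 25 days, and the Wu Dao 2.0 estimate assumes 2 bytes per parameter (FP16/BF16) for the weights alone, ignoring gradients, optimizer state, and activations.

```python
# Back-of-the-envelope scale estimates for the figures quoted above.
# Assumptions (not from the article): 8 GPUs per ml.p4d.24xlarge instance,
# every GPU busy for the whole run, and 2 bytes per FP16/BF16 weight.

GPUS_PER_P4D = 8          # NVIDIA A100 GPUs in one ml.p4d.24xlarge instance
INSTANCES = 240           # instances used to train GPT-3 (from the text)
TRAINING_DAYS = 25        # approximate training time (from the text)

WUDAO_PARAMS = 1.75e12    # Wu Dao 2.0 parameter count (from the text)
BYTES_PER_PARAM = 2       # weights only: no gradients, optimizer state, activations


def gpu_hours(instances: int, gpus_per_instance: int, days: float) -> float:
    """Total GPU-hours if every GPU runs for the whole training period."""
    return instances * gpus_per_instance * days * 24


def weight_memory_tb(params: float, bytes_per_param: int) -> float:
    """Memory needed just to store the weights, in terabytes (10**12 bytes)."""
    return params * bytes_per_param / 1e12


print(f"GPUs: {INSTANCES * GPUS_PER_P4D}")                                    # GPUs: 1920
print(f"GPU-hours: {gpu_hours(INSTANCES, GPUS_PER_P4D, TRAINING_DAYS):,.0f}")  # GPU-hours: 1,152,000
print(f"Wu Dao 2.0 weights: {weight_memory_tb(WUDAO_PARAMS, BYTES_PER_PARAM):.1f} TB")  # 3.5 TB
```

Under these assumptions, GPT-3 training consumes roughly 1.15 million GPU-hours, and the Wu Dao 2.0 weights alone need about 3.5 TB, far more than any single GPU's memory, which is why both scalability and efficiency matter.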

But as deep learning evolves, training and deploying models becomes more and more challenging, and scalability and efficiency are the two major challenges.

This article will summarize the five major types of machine learning (ML) accelerators.

Before surveying ML accelerators in detail, it helps to look at the ML life cycle.

The ML life cycle is the life cycle of data and models. Data is the root of ML and determines the quality of the model. Every stage of the life cycle offers opportunities for acceleration.

MLOps can automate the process of ML model deployment. However, because it is operational in nature, it is limited to the horizontal process of the AI workflow and cannot fundamentally improve training and deployment.

AI engineering goes far beyond the scope of MLOps. It designs the ML workflow as well as the training and deployment architecture as a whole (both horizontally and vertically), and it accelerates training and deployment through efficient orchestration of the entire ML life cycle.

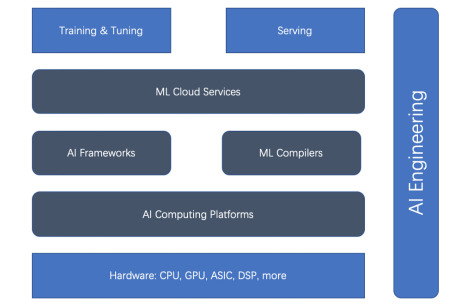

Based on the holistic ML life cycle and AI engineering, there are five main types of ML accelerators (or acceleration aspects): hardware accelerators, AI computing platforms, AI frameworks, ML compilers, and cloud services. First look at the relationship diagram below.

Figure 1. The relationship between training and deployment accelerator

As Figure 1 shows, hardware accelerators and AI frameworks are the mainstream means of acceleration. Recently, however, ML compilers, AI computing platforms, and ML cloud services have become increasingly important.

Each of these is introduced below.

When it comes to accelerating ML training and deployment, choosing the right AI framework is unavoidable. Unfortunately, there is no one-size-fits-all AI framework. Three frameworks widely used in research and production are TensorFlow, PyTorch, and JAX, and each excels in different respects, such as ease of use, product maturity, and scalability.

TensorFlow: TensorFlow is Google's flagship AI framework and has dominated the open-source deep learning community from the beginning. TensorFlow Serving is a well-defined, mature serving platform, and for the web and IoT, TensorFlow.js and TensorFlow Lite are also mature.

But because of the limits of early deep learning exploration, TensorFlow 1.x was designed to build static graphs in a non-Pythonic way. This became a barrier to immediate evaluation in "eager" mode, which allowed PyTorch to gain ground rapidly in research. TensorFlow 2.x tries to catch up, but unfortunately, upgrading from TensorFlow 1.x to 2.x is cumbersome.
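The difference between building a static graph and evaluating eagerly can be sketched in plain Python. The `Node`, `placeholder`, and `run` names below are hypothetical: they loosely mimic the TensorFlow 1.x idea of describing a computation first and executing it later, and do not use any real TensorFlow or PyTorch API.

```python
# Toy contrast between define-then-run (static graph, TensorFlow 1.x style)
# and eager execution (the default in PyTorch and TensorFlow 2.x).
# Node, placeholder, and run are hypothetical names, not a real framework API.
from typing import Callable, Dict, Optional, Tuple


class Node:
    """A deferred computation: building a Node evaluates nothing."""

    def __init__(self, op: Optional[Callable[..., float]] = None,
                 inputs: Tuple["Node", ...] = (), name: str = "") -> None:
        self.op = op          # None for placeholders
        self.inputs = inputs
        self.name = name      # set only for placeholders

    def __add__(self, other: "Node") -> "Node":
        return Node(lambda a, b: a + b, (self, other))

    def __mul__(self, other: "Node") -> "Node":
        return Node(lambda a, b: a * b, (self, other))


def placeholder(name: str) -> Node:
    """An input whose value is supplied only when the graph is run."""
    return Node(name=name)


def run(node: Node, feed: Dict[str, float]) -> float:
    """Evaluate the graph recursively, reading placeholder values from feed."""
    if node.op is None:
        return feed[node.name]
    return node.op(*(run(child, feed) for child in node.inputs))


# Static style: describe the whole computation first, then execute it.
x = placeholder("x")
w = placeholder("w")
y_graph = x * w + x                      # a Node, not a number
static_result = run(y_graph, {"x": 3.0, "w": 2.0})

# Eager style: each line produces a concrete value immediately.
x_val, w_val = 3.0, 2.0
y_eager = x_val * w_val + x_val

print(static_result, y_eager)            # 9.0 9.0
```

Both styles compute the same value. The static version hands a framework the whole computation up front, which makes graph-level optimization possible, but intermediate values cannot be inspected until `run` is called; the eager version can be debugged line by line like ordinary Python.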

TensorFlow also introduced Keras to make the framework easier to use overall, and XLA (Accelerated Linear Algebra), an optimizing compiler, to speed up the lower layers.

PyTorch: With its eager mode and Pythonic approach, PyTorch is a workhorse of today's deep learning community and is used for everything from research to production. Besides TorchServe, PyTorch also integrates with framework-agnostic platforms such as Kubeflow. Its popularity is also inseparable from the success of Hugging Face's Transformers library.

JAX: Google introduced JAX, which is built on device-accelerated NumPy and JIT (just-in-time) compilation. Much as PyTorch did a few years ago, this more native deep learning framework is quickly gaining popularity in the research community. However, as Google itself states, it is not yet an "official" Google product.

There is no doubt that NVIDIA GPUs can accelerate deep learning training, even though they were originally designed for graphics.

After general-purpose GPUs emerged, graphics cards became extremely popular for neural network training. These general-purpose GPUs can execute arbitrary code, not just rendering subroutines, and NVIDIA's CUDA provides a way to write such code in a C-like language. With a relatively convenient programming model, massive parallelism, and high memory bandwidth, general-purpose GPUs now provide an ideal platform for neural network programming.
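The data-parallel model that makes GPUs attractive can be illustrated without a GPU. The sketch below simulates, in sequential Python, how a CUDA-style kernel launch splits an element-wise vector addition across a grid of blocks and threads: each thread derives a global index as `blockIdx.x * blockDim.x + threadIdx.x` and handles one element. The function names are hypothetical, and on real hardware the threads run concurrently rather than in nested loops.

```python
# Sequential simulation of a CUDA-style 1-D kernel launch (illustration only).
# On real hardware every (block, thread) pair below runs concurrently.
from typing import List


def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: List[float], b: List[float], out: List[float]) -> None:
    """Body executed by ONE thread: compute a single output element."""
    i = block_idx * block_dim + thread_idx   # global thread index
    if i < len(out):                         # guard: the last block may overhang
        out[i] = a[i] + b[i]


def launch(n: int, block_dim: int, a: List[float], b: List[float]) -> List[float]:
    """Choose enough blocks to cover n elements, then 'run' every thread."""
    grid_dim = (n + block_dim - 1) // block_dim   # ceiling division
    out = [0.0] * n
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out)
    return out


a = [float(i) for i in range(10)]
b = [10.0] * 10
print(launch(10, 4, a, b))  # 3 blocks of 4 threads; 2 threads fall outside the data
```

Because each output element depends only on its own inputs, thousands of such threads can run at once, which is exactly the kind of work that dominates neural network layers.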

Today, NVIDIA offers GPUs for desktops, laptops, workstations, mobile workstations, game consoles, and data centers.

Following the great success of NVIDIA GPUs, there has been no shortage of challengers, such as AMD's GPUs and Google's TPU ASIC.

As mentioned earlier, the speed of ML training and deployment depends largely on hardware such as GPUs and TPUs, so the platforms that drive this hardware (AI computing platforms) are critical to performance. Two well-known AI computing platforms are CUDA and OpenCL.

CUDA: CUDA (Compute Unified Device Architecture) is a parallel programming platform released by NVIDIA in 2007 for general-purpose computing on GPUs. It is a proprietary API that runs only on NVIDIA GPUs, beginning with the Tesla-architecture generation; early CUDA-capable cards included the GeForce 8 series, Tesla, and Quadro.

OpenCL: OpenCL (Open Computing Language) was originally developed by Apple and is now maintained by the Khronos Group as a standard for heterogeneous computing across CPUs, GPUs, DSPs, and other types of processors. This portable language is designed to deliver high performance across many hardware platforms, including NVIDIA GPUs.

NVIDIA is now OpenCL 3.0 conformant, starting with its R465 drivers. With the OpenCL API, one can launch compute kernels, written in a restricted subset of the C programming language, on the GPU.

ML compilers play a vital role in accelerating training and deployment and can significantly improve the efficiency of large-scale model deployment. Popular compilers include Apache TVM, LLVM, Google MLIR, TensorFlow XLA, Meta Glow, PyTorch nvFuser, and Intel PlaidML.
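One optimization ML compilers commonly apply is operator fusion: several element-wise operations are merged into one kernel so that intermediate results are not written to memory and read back. The pure-Python sketch below illustrates the idea for `relu(x * scale + bias)` by counting element reads and writes; it is not the API or the actual algorithm of any compiler listed above, and the `scale`/`bias` parameters are hypothetical.

```python
# Illustration of operator fusion for y = relu(x * scale + bias).
# Not the API of TVM, XLA, nvFuser, etc.; it only shows why fusing helps.
from typing import List, Tuple


def unfused(x: List[float], scale: float, bias: float) -> Tuple[List[float], int]:
    """Three separate 'kernels'; each reads and writes a full array."""
    traffic = 0
    t1 = [v * scale for v in x]          # kernel 1: read x, write t1
    traffic += 2 * len(x)
    t2 = [v + bias for v in t1]          # kernel 2: read t1, write t2
    traffic += 2 * len(x)
    y = [max(v, 0.0) for v in t2]        # kernel 3: read t2, write y
    traffic += 2 * len(x)
    return y, traffic


def fused(x: List[float], scale: float, bias: float) -> Tuple[List[float], int]:
    """One fused 'kernel': each element is read once and written once."""
    y = [max(v * scale + bias, 0.0) for v in x]
    return y, 2 * len(x)                 # read x, write y


x = [-2.0, -0.5, 0.0, 1.5]
print(unfused(x, 2.0, 1.0))  # ([0.0, 0.0, 1.0, 4.0], 24)
print(fused(x, 2.0, 1.0))    # ([0.0, 0.0, 1.0, 4.0], 8)
```

Both versions give the same result, but the fused one moves a third as much data. Many ML operators are limited by memory bandwidth rather than arithmetic, so this kind of reduction is often where compiler speedups come from.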

ML cloud platforms and services run and manage ML workloads in the cloud, and they can be optimized in several ways to increase efficiency.

Take Amazon SageMaker, a leading ML cloud platform service, as an example. SageMaker provides a wide range of features across the ML life cycle, from data preparation and model building through training/tuning to deployment/management.

SageMaker includes many optimizations that improve training and deployment efficiency, such as multi-model endpoints on GPUs, cost-effective training with heterogeneous clusters, and AWS's own Graviton processors, which are suited to CPU-based ML inference.
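The idea behind a multi-model endpoint is that many models share one serving instance: a model is loaded the first time a request names it, and models that have not been used recently are unloaded when capacity runs short. The sketch below is a hypothetical, framework-free illustration of that caching behavior. It assumes a simple least-recently-used (LRU) eviction policy, and `MultiModelHost`, `capacity`, and `toy_loader` are illustrative names, not the SageMaker API.

```python
# Hypothetical sketch of a multi-model endpoint's model cache (LRU policy assumed).
# Not the SageMaker API: class name, capacity, and loader are illustrative only.
from collections import OrderedDict
from typing import Callable, Dict, List

Model = Callable[[float], float]


class MultiModelHost:
    def __init__(self, capacity: int, loader: Callable[[str], Model]) -> None:
        self.capacity = capacity            # max models resident at once
        self.loader = loader                # would fetch weights from storage
        self.loaded: "OrderedDict[str, Model]" = OrderedDict()
        self.load_log: List[str] = []       # records each cold load

    def invoke(self, model_name: str, x: float) -> float:
        if model_name in self.loaded:
            self.loaded.move_to_end(model_name)       # mark as most recently used
        else:
            if len(self.loaded) >= self.capacity:
                self.loaded.popitem(last=False)       # evict least recently used
            self.loaded[model_name] = self.loader(model_name)
            self.load_log.append(model_name)
        return self.loaded[model_name](x)


# Toy "models": each multiplies its input by a per-model factor.
FACTORS: Dict[str, float] = {"a": 1.0, "b": 2.0, "c": 3.0}


def toy_loader(name: str) -> Model:
    factor = FACTORS[name]
    return lambda x: x * factor


host = MultiModelHost(capacity=2, loader=toy_loader)
for name in ["a", "b", "a", "c", "b"]:
    host.invoke(name, 10.0)
print(host.load_log)        # ['a', 'b', 'c', 'b']
print(list(host.loaded))    # ['c', 'b']
```

Sharing one instance this way trades occasional cold-load latency (here, `b` is reloaded after being evicted) for much higher hardware utilization when many models receive sporadic traffic.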

As deep learning training and deployment continue to scale up, the challenges keep growing, and improving their efficiency is complex. Based on the ML life cycle, five aspects can accelerate ML training and deployment: AI frameworks, hardware accelerators, computing platforms, ML compilers, and cloud services. AI engineering can coordinate all of these and apply engineering principles to improve efficiency across the board.

Original title: 5 Types of ML Accelerators, author: Luhui Hu
