Imagine that you have a huge problem to solve and you are alone. You need to calculate the square roots of eight different numbers. What do you do? You don't have much choice. Start with the first number and calculate the result. Then you move on to other people.

What if you have three friends who are good at math and are willing to help you? Each of them will calculate the square root of two numbers, and your job will be easier because the workload is evenly distributed among your friends. This means your issue will be resolved faster.

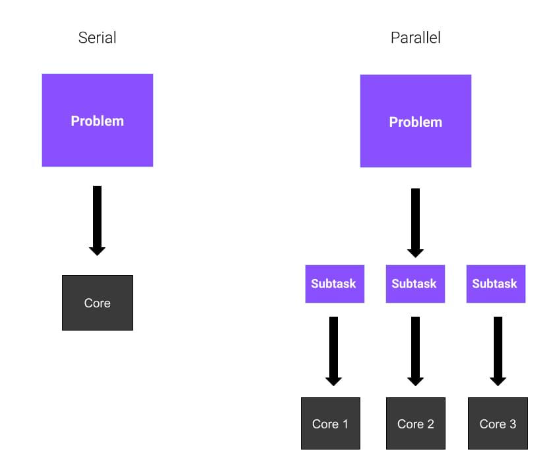

Okay, is everything clear? In these examples, each friend represents a core of the CPU. In the first example, the entire task is solved by you sequentially. This is called Serial Computation. In the second example, since you are using a total of four cores, you are using Parallel Computing. Parallel computing involves the use of parallel processes or processes divided among multiple cores of a processor.

We’ve established what parallel programming is, but how do we use it? We said before that parallel computing involves executing multiple tasks across multiple cores of a processor, meaning that these tasks are executed simultaneously. Before proceeding with parallelization, you should consider several issues. For example, are there other optimizations that can speed up our calculations?

Now, let us take it for granted that parallelization is the most suitable solution. There are three main modes of parallel computing:

completely parallel. Tasks can run independently and do not need to communicate with each other.

Shared Memory Parallel. Processes (or threads) need to communicate, so they share a global address space.

Message passing. Processes need to share messages when needed.

In this article, we will explain the first model, which is also the simplest.

One way to achieve parallelism in Python is to use the multiprocessing module. multiprocessing The module allows you to create multiple processes, each with its own Python interpreter. Therefore, Python multiprocessing implements process-based parallelism.

You may have heard of other libraries, such as threading, which are also built into Python, but there are important differences between them. The multiprocessing module creates new processes, while threading creates new threads.

You may ask, "Why choose multi-process?" Multi-process can significantly improve the efficiency of a program by running multiple tasks in parallel instead of sequentially. A similar term is multithreading, but they are different.

A process is a program that is loaded into memory and does not share its memory with other processes. A thread is an execution unit in a process. Multiple threads run in a process and share the process's memory space with each other.

Python's Global Interpreter Lock (GIL) only allows one thread to run under the interpreter at a time, which means that if you need the Python interpreter, you will not enjoy the performance benefits of multi-threading. This is why multi-processing is more advantageous than threading in Python. Multiple processes can run in parallel because each process has its own interpreter that executes the instructions assigned to it. Additionally, the operating system will look at your program in multiple processes and schedule them separately, i.e., your program will have a larger share of the total computer resources. Therefore, when a program is CPU bound, multi-processing is faster. In situations where there is a lot of I/O in a program, threads may be more efficient because most of the time, the program is waiting for the I/O to complete. However, multiple processes are usually more efficient because they run simultaneously.

Here are some benefits of multi-processing:

Better use of CPU when handling highly CPU-intensive tasks

More control over child threads compared to threads

Easy to code

The first advantage is related to performance. Since multiprocessing creates new processes, you can better utilize the CPU's computing power by dividing tasks among other cores. Most processors these days are multi-core and if you optimize your code you can save time through parallel computing.

The second advantage is an alternative to multi-threading. Threads are not processes, and this has its consequences. If you create a thread, it is dangerous to terminate it like a normal process or even interrupt it. Since the comparison between multi-processing and multi-threading is beyond the scope of this article, I will write a separate article later to talk about the difference between multi-processing and multi-threading.

The third advantage of multiprocessing is that it is easy to implement because the task you are trying to handle is suitable for parallel programming.

We are finally ready to write some Python code!

We will start with a very basic example that we will use to illustrate core aspects of Python multiprocessing. In this example, we will have two processes:

parentoften. There is only one parent process and it can have multiple child processes.

childprocess. This is generated by the parent process. Each child process can also have new child processes.

We will use the child procedure to execute a certain function. In this way, parent can continue execution.

This is the code we will use for this example:

from multiprocessing import Process

def bubble_sort(array):

check = True

while check == True:

check = False

for i in range(0, len(array)-1):

if array[i] > array[i+1]:

check = True

temp = array[i]

array[i] = array[i+1]

array[i+1] = temp

print("Array sorted: ", array)

if __name__ == '__main__':

p = Process(target=bubble_sort, args=([1,9,4,5,2,6,8,4],))

p.start()

p.join()In this snippet, we define a process named bubble_sort(array). This function is a very simple implementation of the bubble sort algorithm. If you don't know what it is, don't worry because it's not important. The key thing to know is that it's a function that does something.

From multiprocessing, we import class Process. This class represents activities that will run in a separate process. In fact, you can see that we have passed some parameters:

target=bubble_sort, meaning that our new process will run that bubble_sortFunction

args=([1,9,4,52,6,8,4],), this is passed as a parameter to the target function An array of

Once we have created an instance of the Process class, we just need to start the process. This is done by writing p.start(). At this point, the process begins.

We need to wait for the child process to complete its calculations before we exit. The join() method waits for the process to terminate.

In this example, we only create one child process. As you might guess, we can create more child processes by creating more instances in the Process class.

What if we need to create multiple processes to handle more CPU-intensive tasks? Do we always need to explicitly start and wait for termination? The solution here is to use the Pool class. The

Pool class allows you to create a pool of worker processes, in the following example we will look at how to use it. Here is our new example:

from multiprocessing import Pool

import time

import math

N = 5000000

def cube(x):

return math.sqrt(x)

if __name__ == "__main__":

with Pool() as pool:

result = pool.map(cube, range(10,N))

print("Program finished!") In this code snippet, we have a cube(x) function that takes just an integer and returns its square root. Pretty simple, right?

Then, we create an instance of the Pool class without specifying any properties. By default, the Pool class creates one process per CPU core. Next, we run the map method with a few parameters. The

map method applies the cube function to each element of the iterable we provide - in this case, it's from 10# A list of each number from ## to N.

joblib is a set of tools that make parallel computing easier. It is a general-purpose third-party library for multi-process. It also provides caching and serialization capabilities. To install the joblib package, use the following command in the terminal:

pip install joblib

joblib:

from joblib import Parallel, delayed

def cube(x):

return x**3

start_time = time.perf_counter()

result = Parallel(n_jobs=3)(delayed(cube)(i) for i in range(1,1000))

finish_time = time.perf_counter()

print(f"Program finished in {finish_time-start_time} seconds")

print(result)delayed()A function is a wrapper around another function that generates a "delayed" version of a function call. This means it does not execute the function immediately when called.

然后,我们多次调用delayed函数,并传递不同的参数集。例如,当我们将整数1赋予cube函数的延迟版本时,我们不计算结果,而是分别为函数对象、位置参数和关键字参数生成元组(cube, (1,), {})。

我们使用Parallel()创建了引擎实例。当它像一个以元组列表作为参数的函数一样被调用时,它将实际并行执行每个元组指定的作业,并在所有作业完成后收集结果作为列表。在这里,我们创建了n_jobs=3的Parallel()实例,因此将有三个进程并行运行。

我们也可以直接编写元组。因此,上面的代码可以重写为:

result = Parallel(n_jobs=3)((cube, (i,), {}) for i in range(1,1000))使用joblib的好处是,我们可以通过简单地添加一个附加参数在多线程中运行代码:

result = Parallel(n_jobs=3, prefer="threads")(delayed(cube)(i) for i in range(1,1000))

这隐藏了并行运行函数的所有细节。我们只是使用与普通列表理解没有太大区别的语法。

创建多个进程并进行并行计算不一定比串行计算更有效。对于 CPU 密集度较低的任务,串行计算比并行计算快。因此,了解何时应该使用多进程非常重要——这取决于你正在执行的任务。

为了让你相信这一点,让我们看一个简单的例子:

from multiprocessing import Pool

import time

import math

N = 5000000

def cube(x):

return math.sqrt(x)

if __name__ == "__main__":

# first way, using multiprocessing

start_time = time.perf_counter()

with Pool() as pool:

result = pool.map(cube, range(10,N))

finish_time = time.perf_counter()

print("Program finished in {} seconds - using multiprocessing".format(finish_time-start_time))

print("---")

# second way, serial computation

start_time = time.perf_counter()

result = []

for x in range(10,N):

result.append(cube(x))

finish_time = time.perf_counter()

print("Program finished in {} seconds".format(finish_time-start_time))此代码段基于前面的示例。我们正在解决同样的问题,即计算N个数的平方根,但有两种方法。第一个涉及 Python 进程的使用,而第二个不涉及。我们使用time库中的perf_counter()方法来测量时间性能。

在我的电脑上,我得到了这个结果:

> python code.py Program finished in 1.6385094 seconds - using multiprocessing --- Program finished in 2.7373942999999996 seconds

如你所见,相差不止一秒。所以在这种情况下,多进程更好。

让我们更改代码中的某些内容,例如N的值。 让我们把它降低到N=10000,看看会发生什么。

这就是我现在得到的:

> python code.py Program finished in 0.3756742 seconds - using multiprocessing --- Program finished in 0.005098400000000003 seconds

发生了什么?现在看来,多进程是一个糟糕的选择。为什么?

与解决的任务相比,在进程之间拆分计算所带来的开销太大了。你可以看到在时间性能方面有多大差异。

The above is the detailed content of How to apply Python multi-process. For more information, please follow other related articles on the PHP Chinese website!