Since ChatGPT took off, people have found all sorts of ways to use it.

Some ask it for life advice, some simply treat it as a search engine, and some use it to write papers.

Writing papers with it... is where the fun stops.

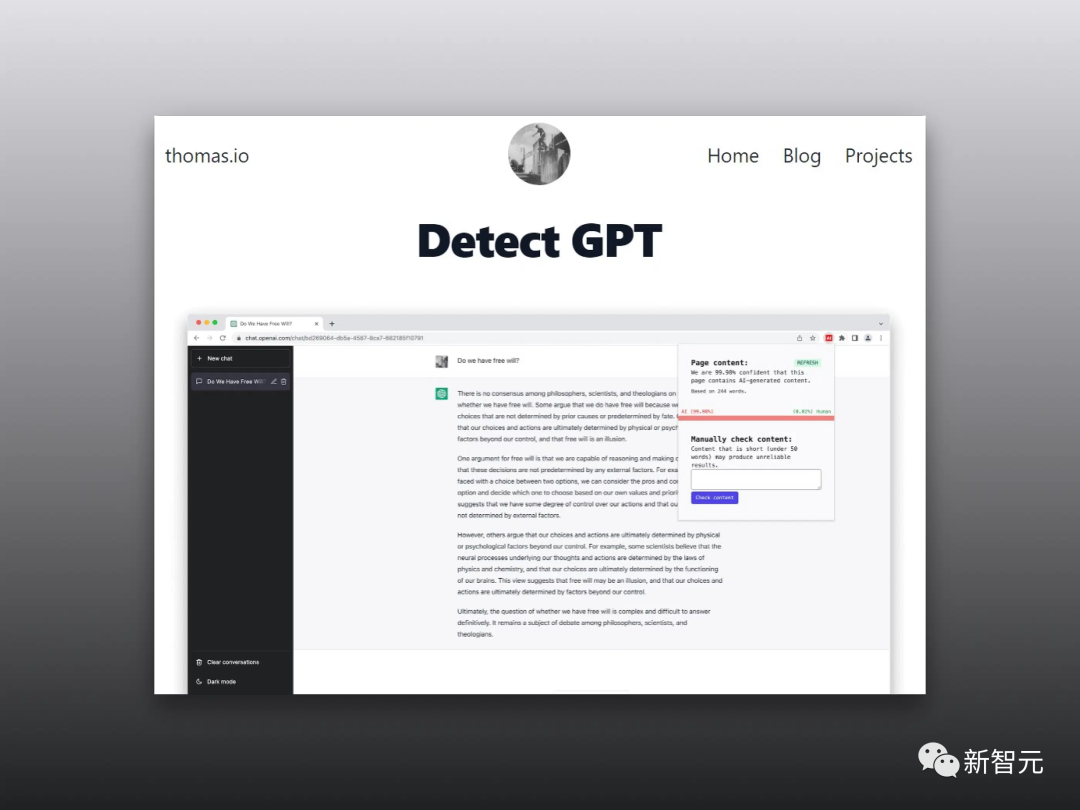

Some universities in the United States have banned students from using ChatGPT for homework, and a batch of software has been developed to identify whether the papers students submit were generated by GPT.

That is where the problem starts.

Someone's paper was simply poorly written, yet the AI judging the text decided it was the work of a fellow AI.

What's even more interesting is that English papers written by Chinese authors are judged to be AI-generated by these AI detectors as much as 61% of the time.

This... what does this mean? Shivering!

Generative language models are developing rapidly and have indeed brought great progress to digital communication.

But they are also abused a great deal.

Researchers have proposed many methods for telling AI-generated content from human-written content, but the fairness and robustness of these detection methods still need improvement.

To examine this, the researchers evaluated several widely used GPT detectors on writing samples from native and non-native English speakers.

The results show that these detectors consistently misclassify samples written by non-native speakers as AI-generated, while samples written by native speakers are, for the most part, identified correctly.

Furthermore, the researchers demonstrated that a few simple strategies can both mitigate this bias and effectively bypass GPT detectors.

What does this mean? It shows that GPT detectors look down on authors whose language skills are not very strong, which is very annoying.

It brings to mind that game where you guess whether the other player is an AI or a real person. If the other player is a real person but you guess AI, the system says, "The other person may find you offensive."

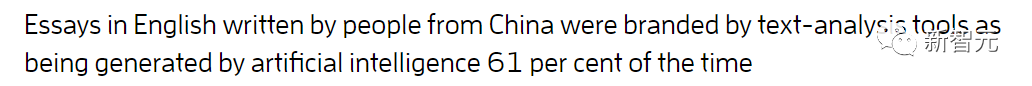

The researchers collected 91 TOEFL essays from a Chinese education forum and 88 essays written by American eighth-grade students from the Hewlett Foundation's data set, and used them to test 7 widely used GPT detectors.

The percentages in the chart represent the proportion of "misjudgements": cases where the text was written by a human but the detection software decided it was generated by AI.

The two groups' numbers differ sharply.

Across the seven detectors, the highest misjudgement rate for the essays written by American eighth-grade students is only 12%, and two detectors made no misjudgements at all.

For the TOEFL essays from the Chinese forum, the misjudgement rate is above one half, and the highest reaches 76%.

18 of the 91 TOEFL essays were unanimously judged to be AI-generated by all 7 GPT detectors, and 89 of the 91 were misjudged as AI-generated by at least one detector.
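The two counts above turn into rates with simple arithmetic. The following is a minimal Python sketch, assuming a hypothetical verdict matrix with one row per human-written essay and one boolean per detector (`True` meaning "flagged as AI"). The function name `summarize_flags` and the toy matrix are illustrative and do not come from the study; only the counts 18, 89, and 91 are taken from the text.

```python
from typing import List, Tuple


def summarize_flags(verdicts: List[List[bool]]) -> Tuple[int, int]:
    """Return (essays flagged by every detector, essays flagged by at least one).

    Each row is one human-written essay; each entry is True when that
    detector labelled the essay as AI-generated (a false positive).
    """
    unanimous = sum(1 for row in verdicts if all(row))
    at_least_one = sum(1 for row in verdicts if any(row))
    return unanimous, at_least_one


# Hypothetical toy data: 4 essays judged by 3 detectors.
toy = [
    [True, True, True],     # flagged by all detectors
    [True, False, False],   # flagged by one detector
    [False, False, False],  # flagged by none
    [False, True, True],    # flagged by two detectors
]
toy_unanimous, toy_any = summarize_flags(toy)

# Rates implied by the counts reported in the article (91 TOEFL essays).
unanimous_rate = 18 / 91
any_rate = 89 / 91
print(f"unanimous: {unanimous_rate:.1%}, at least one: {any_rate:.1%}")
```

In other words, roughly one essay in five was flagged by every detector, and almost every essay was flagged by at least one.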

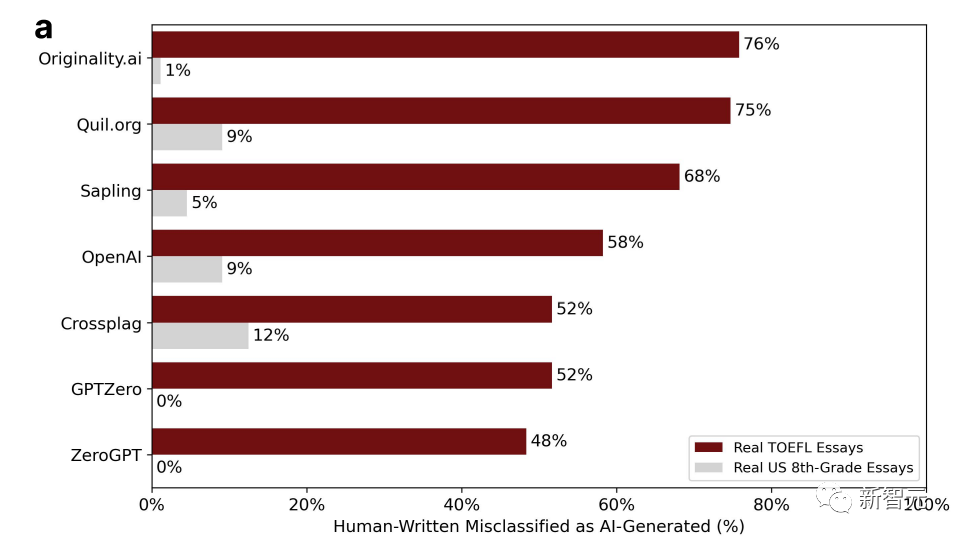

From the figure above we can see that the TOEFL essays misjudged by all 7 detectors have significantly lower complexity (the study measures this as text perplexity) than the other essays.

This confirms the conclusion stated at the beginning: GPT detectors are biased against authors with limited linguistic expression.

The researchers therefore believe that GPT detectors should be exposed to more writing by non-native speakers. Only with more such samples can the bias be eliminated.
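The "complexity" score in the figure corresponds to what language modeling calls perplexity: the exponential of the average negative log-probability a model assigns to each token. The sketch below is only an illustration under loud assumptions. It uses a toy unigram model with add-one smoothing, built from a made-up reference corpus, whereas real detectors rely on large neural language models. The names `unigram_perplexity`, `reference`, `plain`, and `ornate` are hypothetical.

```python
import math
from collections import Counter
from typing import List


def unigram_perplexity(tokens: List[str], reference: List[str]) -> float:
    """Perplexity of `tokens` under an add-one-smoothed unigram model
    estimated from `reference` (a toy stand-in for a real language model)."""
    if not tokens:
        raise ValueError("tokens must not be empty")
    counts = Counter(reference)
    # The vocabulary covers the reference words plus any unseen words in `tokens`.
    vocab_size = len(set(reference) | set(tokens))
    total = len(reference)
    log_prob = 0.0
    for tok in tokens:
        # Add-one smoothing keeps unseen words from getting probability zero.
        p = (counts[tok] + 1) / (total + vocab_size)
        log_prob += math.log(p)
    # Perplexity = exp(-(1/N) * sum of log p(token)).
    return math.exp(-log_prob / len(tokens))


# Made-up reference corpus and two made-up sentences, for illustration only.
reference = "the cat sat on the mat and the dog sat on the rug".split()
plain = "the cat sat on the mat".split()
ornate = "the feline reposed upon the tapestry".split()

plain_pp = unigram_perplexity(plain, reference)
ornate_pp = unigram_perplexity(ornate, reference)
print(plain_pp, ornate_pp)
```

The sentence built from common, predictable words scores lower than the one with rarer vocabulary, which is the pattern the figure shows for the essays flagged by every detector.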

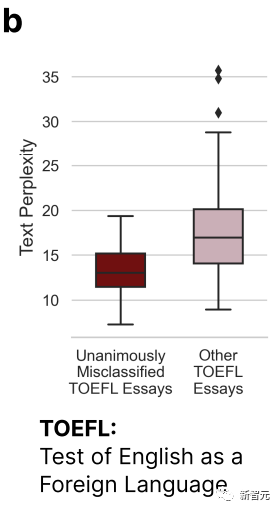

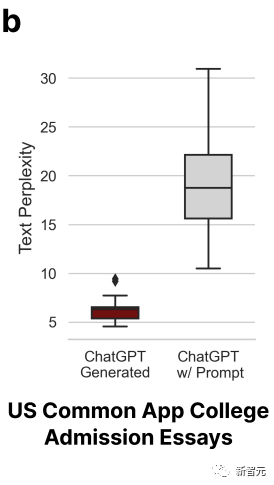

Next, the researchers fed the TOEFL essays written by non-native speakers into ChatGPT, asking it to enrich the language and imitate the word choices of native speakers.

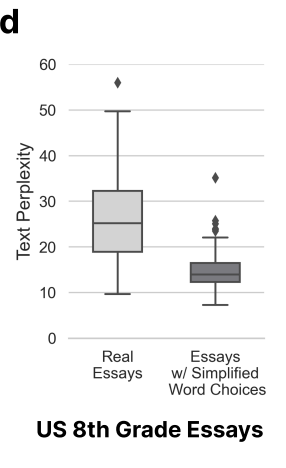

At the same time, as a control group, the essays written by American eighth-graders were also fed into ChatGPT, this time with the language simplified to imitate the writing of non-native speakers. The figure shows the detection results after this rewriting.

The picture changes drastically. The false positive rate for the language-enriched TOEFL essays plummets to between 3% and 19%, whereas before it was mostly above one half.

Conversely, the false positive rate for the eighth-graders' essays skyrockets. One GPT detector even reaches a false positive rate of 99%, meaning it got almost everything wrong.

The reason is that the key variable, the complexity of the essays, has changed.

Here the researchers concluded that text written by non-native speakers is less idiomatic, has lower complexity, and is therefore easily misjudged.

This raises a question that is both technical and about values: is using complexity to decide whether AI or a human wrote a text reasonable, comprehensive, and rigorous?

The results clearly say no.

When complexity is the criterion, non-native speakers lose out simply because they are non-native speakers (which sounds like stating the obvious).

The researchers believe that enhancing linguistic diversity not only alleviates the bias against non-native speakers, but also lets GPT-generated content bypass GPT detectors.
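To see why a complexity cutoff produces both effects, consider a sketch of such a decision rule. Everything here is hypothetical: the function name `flag_as_ai`, the threshold of 20.0, and the scores are made up for illustration, and real detectors may combine more signals than a single score.

```python
from typing import Dict


def flag_as_ai(score: float, threshold: float = 20.0) -> bool:
    """Hypothetical rule: text whose complexity score falls below
    the threshold is labelled AI-generated."""
    return score < threshold


# Made-up complexity scores, for illustration only.
samples: Dict[str, float] = {
    "human, limited vocabulary": 12.0,
    "human, rich vocabulary": 35.0,
    "AI, default output": 10.0,
    "AI, polished with literary language": 30.0,
}

verdicts = {name: flag_as_ai(score) for name, score in samples.items()}
for name, flagged in verdicts.items():
    print(f"{name}: {'AI' if flagged else 'human'}")
```

Under this rule the simply written human text becomes a false positive, while the polished AI text slips through as "human". Raising a text's complexity is enough to flip the verdict in either case.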

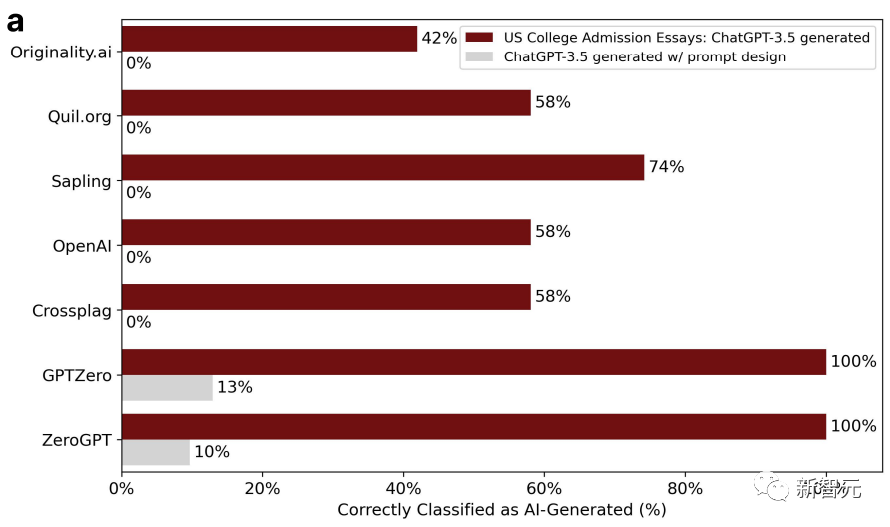

To prove this point, the researchers took the 2022-2023 admission essay prompts for American universities, entered them into ChatGPT-3.5, and generated a total of 31 fake essays.

The GPT detectors were quite effective in the first round, but not in the second. That is because, in the second round, the researchers fed these essays back into ChatGPT and had it polish them with more literary language to improve the quality of the text.

As a result, the accuracy of the GPT detectors dropped from 100% to 0%. As shown below:

The complexity of the polished essays also increased accordingly.

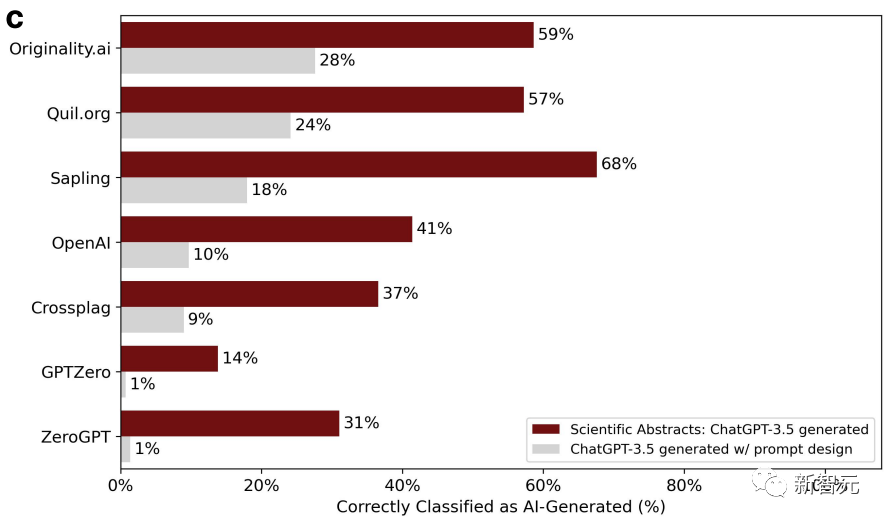

At the same time, the researchers took 145 final project report topics from Stanford University and asked ChatGPT to generate summaries.

Once the summaries were polished, the accuracy of the detectors declined here as well.

The researchers once again concluded that polished AI-generated text is easily misjudged as human-written. Two passes through ChatGPT work better than one.

All in all, the various GPT detectors still seem unable to capture the most essential difference between AI-generated text and human writing.

Human writing also comes in many levels of quality, so judging by complexity alone is not very reasonable.

Bias aside, the technology itself also needs improvement.
