Ultra-high resolution has been embraced by many researchers as a standard for recording and displaying high-quality images and videos. Compared with lower resolutions (1K HD format), scenes captured at high resolution usually show very clear details, with pixel information magnified into small patches. However, applying this technology to image processing and computer vision still poses many challenges.

In this article, researchers from Alibaba focus on the task of novel view synthesis and propose a framework called 4K-NeRF, whose NeRF-based volume rendering achieves high-fidelity view synthesis at 4K ultra-high resolution.

Paper address: https://arxiv.org/abs/2212.04701

Project homepage: https://github.com/frozoul/4K-NeRF

Without further ado, let’s first look at the results (the videos below have been downsampled; for the original 4K videos, please refer to the project homepage).

Method

Next, let’s take a look at how this research was implemented.

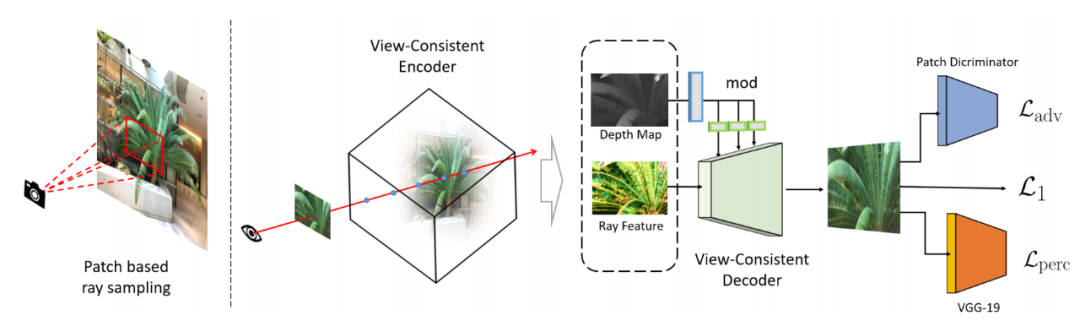

The 4K-NeRF pipeline (shown below) uses patch-based ray sampling to jointly train a View-Consistent Encoder (VC-Encoder), based on DVGO, which encodes three-dimensional geometric information in a lower-resolution space, and a VC-Decoder, which then performs high-frequency, fine-grained, high-quality rendering with enhanced view consistency.

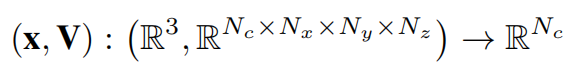

This study instantiates the encoder following the formulation defined in DVGO [32], in which a learned voxel-grid-based representation explicitly encodes the geometry:

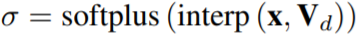

For each sampling point, the density estimate obtained by trilinear interpolation is passed through a softplus activation to produce the volume density value:

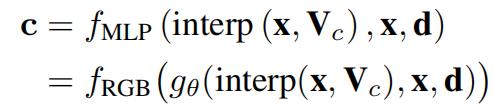
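The density lookup can be sketched in plain Python. This is a minimal illustration, assuming a single scalar density grid stored as a nested list and query points already expressed in voxel coordinates; the function names and the optional `shift` bias are hypothetical, and a real implementation would run batched on the GPU.

```python
import math

def trilinear(grid, x, y, z):
    """Trilinearly interpolate a scalar voxel grid at continuous coordinates.

    grid is a nested list indexed grid[i][j][k] with at least 2 voxels per axis;
    (x, y, z) are in voxel units and must lie inside the grid.
    """
    nx, ny, nz = len(grid), len(grid[0]), len(grid[0][0])
    # Lower corner of the enclosing cell, clamped so that index + 1 stays valid.
    i0 = max(0, min(int(math.floor(x)), nx - 2))
    j0 = max(0, min(int(math.floor(y)), ny - 2))
    k0 = max(0, min(int(math.floor(z)), nz - 2))
    dx, dy, dz = x - i0, y - j0, z - k0
    value = 0.0
    # Blend the 8 corner values, each weighted by its opposite sub-volume.
    for di in (0, 1):
        for dj in (0, 1):
            for dk in (0, 1):
                w = ((dx if di else 1.0 - dx)
                     * (dy if dj else 1.0 - dy)
                     * (dz if dk else 1.0 - dz))
                value += w * grid[i0 + di][j0 + dj][k0 + dk]
    return value

def softplus(v):
    """Numerically stable log(1 + exp(v)); always positive."""
    return max(v, 0.0) + math.log1p(math.exp(-abs(v)))

def density(grid, point, shift=0.0):
    """Raw interpolated grid value -> non-negative volume density.

    `shift` is a hypothetical bias added before the activation.
    """
    return softplus(trilinear(grid, *point) + shift)

# Usage: a 2x2x2 grid holding the linear field i + 2j + 4k.
grid = [[[i + 2 * j + 4 * k for k in range(2)] for j in range(2)] for i in range(2)]
print(trilinear(grid, 0.5, 0.5, 0.5))  # 3.5: interpolation is exact for linear fields
print(density(grid, (0.0, 0.0, 0.0)))
```

Softplus is used rather than ReLU because it keeps the density strictly positive and smooth, so gradients still reach voxels whose raw value is negative.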

Color is estimated using a small MLP:

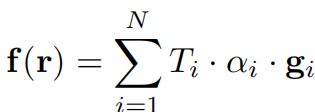
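A small color MLP of this kind can be sketched as follows. The input layout (interpolated voxel feature concatenated with a 3D view direction), the hidden width of 32, ReLU/sigmoid activations, and the random initialization are all assumptions for illustration, not details taken from the paper.

```python
import math
import random

def linear(weights, bias, v):
    """Dense layer: weights is a list of out_dim rows, each of length in_dim."""
    return [sum(w * a for w, a in zip(row, v)) + b for row, b in zip(weights, bias)]

def init_layer(in_dim, out_dim, rng):
    """Small random weights (scaled by 1/sqrt(in_dim)) and zero biases."""
    scale = 1.0 / math.sqrt(in_dim)
    weights = [[rng.uniform(-scale, scale) for _ in range(in_dim)] for _ in range(out_dim)]
    return weights, [0.0] * out_dim

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

class ColorMLP:
    """Two-layer MLP mapping [voxel feature, view direction] to RGB in (0, 1)."""

    def __init__(self, feat_dim, hidden=32, seed=0):
        rng = random.Random(seed)
        self.hidden_layer = init_layer(feat_dim + 3, hidden, rng)
        self.out_layer = init_layer(hidden, 3, rng)

    def __call__(self, feature, view_dir):
        x = list(feature) + list(view_dir)  # concatenate inputs
        h = [max(0.0, a) for a in linear(*self.hidden_layer, x)]  # ReLU
        return [sigmoid(a) for a in linear(*self.out_layer, h)]  # squash to (0, 1)

mlp = ColorMLP(feat_dim=4)
rgb = mlp([0.1, 0.2, 0.3, 0.4], [0.0, 0.0, 1.0])
print(rgb)
```

Keeping the MLP shallow is what makes voxel-grid methods fast: most of the scene content lives in the grid, so each sample needs only a cheap lookup plus a tiny network.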

In this way, the feature value of each ray (or pixel) is obtained by accumulating the features of the sampling points along ray r:

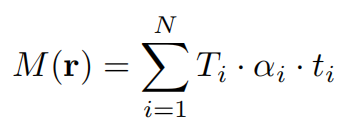
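The accumulation follows the standard NeRF/DVGO alpha-compositing rule: each sample gets weight w_i = T_i · α_i, where α_i = 1 − exp(−σ_i δ_i) and T_i is the transmittance left after the earlier samples. The sketch below applies those weights to arbitrary per-sample feature vectors; the function names are illustrative.

```python
import math

def render_weights(sigmas, deltas):
    """Per-sample weights w_i = T_i * alpha_i along one ray, front to back."""
    weights = []
    transmittance = 1.0  # T_i: fraction of light not yet absorbed
    for sigma, delta in zip(sigmas, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights

def accumulate(weights, features):
    """Weighted sum of per-sample feature vectors -> one feature per ray."""
    dim = len(features[0])
    out = [0.0] * dim
    for w, f in zip(weights, features):
        for c in range(dim):
            out[c] += w * f[c]
    return out

weights = render_weights([0.5, 2.0, 8.0], [0.25, 0.25, 0.25])
ray_feature = accumulate(weights, [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print(weights, ray_feature)
```

Because the weights never sum to more than 1, the ray feature is a convex-like blend of sample features, dominated by the first high-density samples the ray hits.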

To better exploit the geometric properties embedded in the VC-Encoder, this study also generates a depth map by estimating the depth of each ray r along the sampled ray axis. The estimated depth map provides strong guidance on the three-dimensional structure of the scene produced by the encoder.

The VC-Decoder is built from blocks that use neither non-parametric normalization nor downsampling operations, interleaved with upsampling operations. In particular, instead of simply concatenating the feature F and the depth map M, this study extracts the depth signal from the depth map and injects it into each block through a learned transformation that modulates the block activations.
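The text does not give the exact formulas for the depth estimate or the learned transformation. A minimal sketch, assuming the common choice of taking depth as the rendering-weight-averaged sample distance and a FiLM/SFT-style per-channel scale-and-shift for the modulation; `gamma_fn` and `beta_fn` are hypothetical stand-ins for the learned layers, and the sketch handles a single pixel rather than a full depth map:

```python
def expected_depth(weights, t_values):
    """Depth of one ray as the rendering-weight-averaged sample distance."""
    return sum(w * t for w, t in zip(weights, t_values))

def modulate(activations, depth, gamma_fn, beta_fn):
    """Scale-and-shift block activations with a depth-conditioned signal.

    gamma_fn / beta_fn are hypothetical learned mappings from a depth value to
    one scale and one shift per channel.
    """
    gamma = gamma_fn(depth)
    beta = beta_fn(depth)
    return [a * (1.0 + g) + b for a, g, b in zip(activations, gamma, beta)]

d = expected_depth([0.2, 0.8], [1.0, 2.0])
out = modulate([1.0, 2.0], 0.5, lambda z: [z, z], lambda z: [0.1, -0.1])
print(d, out)
```

Modulating activations this way lets depth steer every decoder block, whereas plain concatenation would only feed depth into the first layer.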

Unlike the pixel-level mechanism of traditional NeRF methods, this study's method aims to capture spatial information across rays (pixels), so NeRF's strategy of randomly sampling individual rays is not suitable here. Instead, the study proposes a patch-based ray sampling training strategy that makes it easier to capture spatial dependencies between ray features. During training, each training-view image is first divided into patches p of size N_p×N_p, which keeps the sampling probability uniform across pixels. When the image dimensions are not exact multiples of the patch size, the patches are truncated at the image edge to obtain the set of training patches. Then one (or more) patches are selected at random from this set, and the rays of the pixels in those patches form the mini-batch for each iteration.
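The sampling procedure described above can be sketched as below. This assumes patches are tiled row-major from the top-left corner and that each mini-batch simply returns (row, column) pixel coordinates; the names and the tiling order are illustrative choices.

```python
import random

def make_patches(height, width, patch):
    """Tile an H x W image into patch x patch windows.

    Windows on the bottom/right border are truncated at the image edge when
    H or W is not a multiple of the patch size.
    Each entry is (row0, col0, row1, col1) with exclusive ends.
    """
    patches = []
    for r in range(0, height, patch):
        for c in range(0, width, patch):
            patches.append((r, c, min(r + patch, height), min(c + patch, width)))
    return patches

def sample_batch(patches, num_patches, rng):
    """Pick random patches; return pixel coordinates of all rays inside them."""
    chosen = rng.sample(patches, num_patches)
    return [(y, x)
            for (r0, c0, r1, c1) in chosen
            for y in range(r0, r1)
            for x in range(c0, c1)]

patches = make_patches(5, 7, 3)
batch = sample_batch(patches, 2, random.Random(0))
print(len(patches), len(batch))
```

Since the tiles partition the image, every pixel belongs to exactly one patch, and picking patches uniformly gives each pixel an equal chance of appearing in a batch (up to the smaller truncated border patches).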

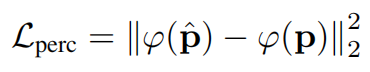

To counter blurry or over-smoothed rendering of fine details, this research adds an adversarial loss and a perceptual loss to regularize fine-detail synthesis. The perceptual loss estimates the similarity between the predicted patch and the ground-truth patch p in the feature space of a pre-trained 19-layer VGG network:

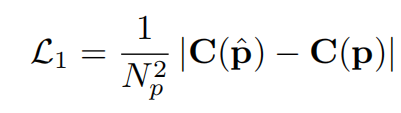
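The structure of a perceptual loss can be shown without a real network. In this sketch the VGG-19 activations are replaced by hypothetical toy feature extractors (raw pixels and horizontal gradients), and the per-layer distance is assumed to be a mean absolute difference; in practice the extractors would be intermediate layers of a pre-trained VGG-19, such as the one shipped with torchvision.

```python
def flatten(img):
    """Toy 'layer' 1: the raw pixel values."""
    return [v for row in img for v in row]

def horizontal_gradients(img):
    """Toy 'layer' 2: differences between horizontally adjacent pixels."""
    return [row[i + 1] - row[i] for row in img for i in range(len(row) - 1)]

def perceptual_loss(pred, target, feature_layers):
    """Sum over layers of the mean absolute difference of feature maps."""
    total = 0.0
    for phi in feature_layers:
        fp, ft = phi(pred), phi(target)
        total += sum(abs(a - b) for a, b in zip(fp, ft)) / len(fp)
    return total

layers = [flatten, horizontal_gradients]
sharp = [[0.0, 1.0], [0.0, 1.0]]
blurry = [[0.5, 0.5], [0.5, 0.5]]
print(perceptual_loss(blurry, sharp, layers))
```

Comparing in feature space penalizes missing structure (here, the lost edge shows up in the gradient layer) rather than only per-pixel intensity error.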

This study uses an L1 loss instead of MSE to supervise the reconstruction of high-frequency details:

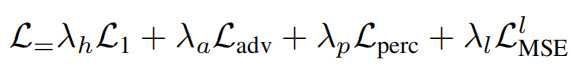
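Assuming the loss used in place of MSE is the L1 (mean absolute error) loss, the usual choice for this purpose, the sketch below shows one common explanation for preferring it: MSE's gradient shrinks with the residual, so small errors on fine details receive only a weak correction, whereas L1's gradient magnitude stays constant. Function names are illustrative.

```python
def l1_loss(pred, target):
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)

def mse_loss(pred, target):
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def l1_grad(pred, target):
    """d(L1)/d(pred): sign of the residual divided by n."""
    n = len(pred)
    return [(1.0 if p > t else -1.0 if p < t else 0.0) / n for p, t in zip(pred, target)]

def mse_grad(pred, target):
    """d(MSE)/d(pred): 2 * residual / n, which vanishes as the residual shrinks."""
    n = len(pred)
    return [2.0 * (p - t) / n for p, t in zip(pred, target)]

pred, target = [0.1] * 4, [0.0] * 4  # small residual of 0.1 on every pixel
print(l1_loss(pred, target), mse_loss(pred, target))
print(l1_grad(pred, target)[0], mse_grad(pred, target)[0])
```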

In addition, the study adds an auxiliary MSE loss, and the final total loss function combines all of these terms.
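How the terms might be combined can be sketched as a weighted sum. The exact weighting and the adversarial formulation are not given in the text, so this assumes a non-saturating generator loss, −log D(rendered patch), and uses hypothetical placeholder weights.

```python
import math

def generator_adv_loss(disc_scores):
    """Non-saturating GAN loss for the renderer: mean of -log D(fake).

    disc_scores are discriminator probabilities in (0, 1) for rendered patches.
    """
    return sum(-math.log(s) for s in disc_scores) / len(disc_scores)

def total_loss(recon, mse, perc, adv, w_mse=1.0, w_perc=1.0, w_adv=1.0):
    """Weighted sum of the loss terms; every weight here is a placeholder."""
    return recon + w_mse * mse + w_perc * perc + w_adv * adv

adv = generator_adv_loss([0.5, 0.5])
loss = total_loss(1.0, 2.0, 3.0, 4.0, w_mse=0.5, w_perc=0.1, w_adv=0.01)
print(adv, loss)
```

Adversarial weights are typically kept small relative to the reconstruction term so that the discriminator sharpens textures without letting the renderer drift from the ground truth.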

Qualitative analysis

The experiments compare 4K-NeRF with other models. Methods based on ordinary NeRF show varying degrees of detail loss and blurring, whereas 4K-NeRF delivers high-quality, photorealistic rendering of these complex, high-frequency details, even in scenes with a limited training field of view.

Quantitative analysis

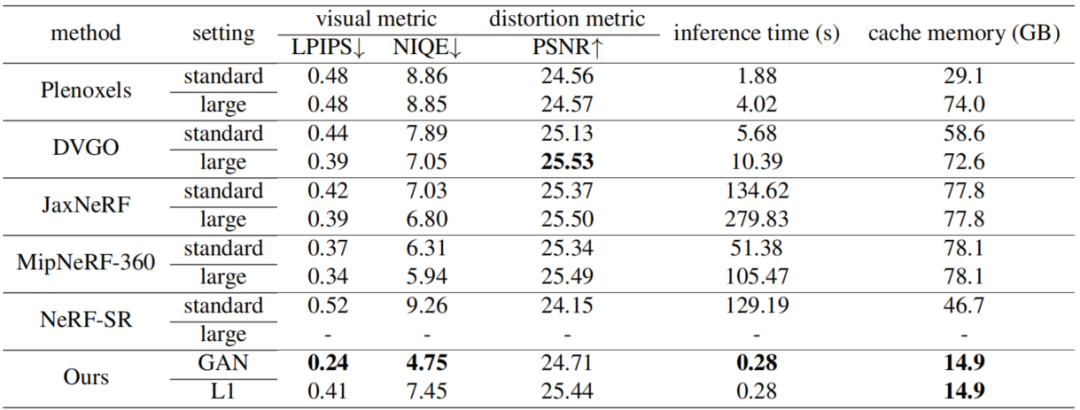

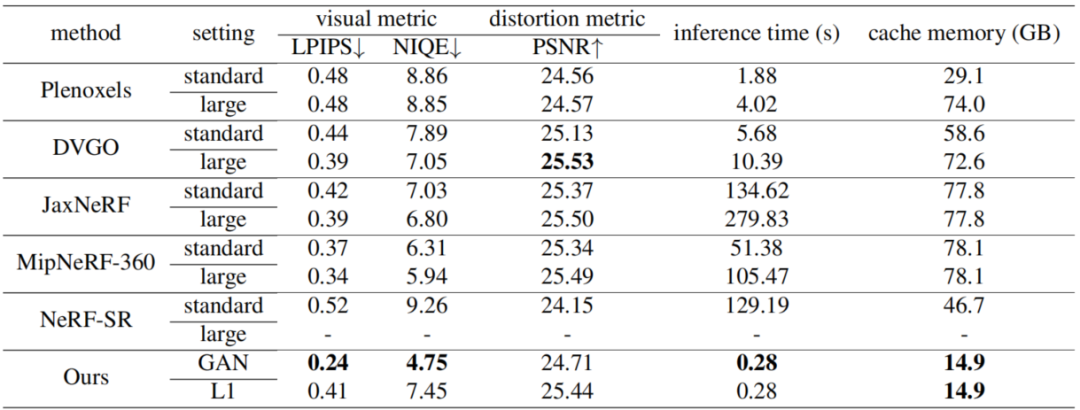

On 4K data, the study compares against several current methods, including Plenoxels, DVGO, JaxNeRF, MipNeRF-360, and NeRF-SR. Besides standard image-restoration metrics, the comparison also reports inference time and cache memory for a more comprehensive evaluation. The results are as follows:

Although 4K-NeRF's scores are close to those of some methods on certain metrics, its voxel-based design gives it strong inference performance in terms of both efficiency and memory cost, allowing a 4K image to be rendered in 300 ms.
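To put the 300 ms figure in perspective, assume a 3840 × 2160 UHD frame (the exact 4K resolution of the test data is not stated here) and one ray per pixel:

```latex
3840 \times 2160 = 8\,294\,400 \text{ rays per frame}, \qquad
\frac{8\,294\,400 \text{ rays}}{0.3\ \text{s}} \approx 2.76 \times 10^{7} \text{ rays/s}
```

That is roughly 3.3 frames per second at full 4K resolution.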

This study explores NeRF's capability for modeling fine details and proposes a novel framework that improves its ability to recover view-consistent fine details in scenes at extremely high resolution. It also introduces a pair of geometry-consistent encoder-decoder modules that efficiently model geometric properties in a lower-resolution space and exploit local correlations between geometry-aware features to enhance view consistency in full-scale space. The patch-based sampling training framework also allows the method to integrate supervision from perception-oriented regularization. As future directions, the researchers hope to extend the framework to dynamic scene modeling and other neural rendering tasks.
