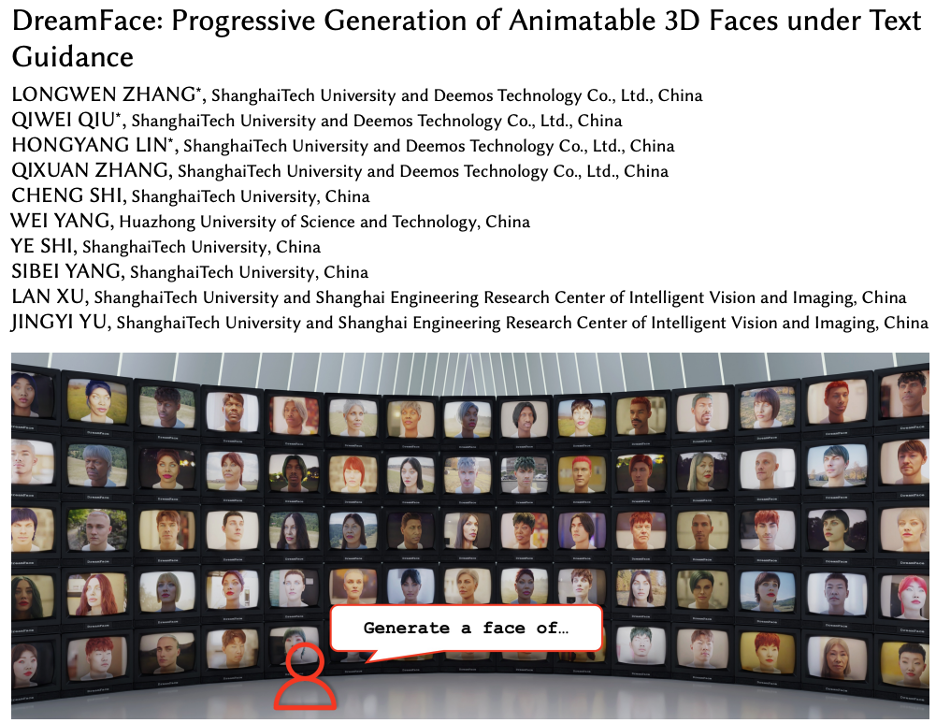

With generative artificial intelligence and computer graphics advancing rapidly, research in both fields is attracting growing attention, and industries such as film and television production and game development face major challenges as well as opportunities. This article introduces DreamFace, a research project in 3D generation. It is the first text-guided progressive 3D generation framework that supports production-ready 3D asset generation, creating hyper-realistic 3D digital humans from text descriptions.

This work has been accepted by ACM Transactions on Graphics, a top international journal in computer graphics, and will be presented at SIGGRAPH 2023, a top international conference on computer graphics.

Project website: https://sites.google.com/view/dreamface

Preprint version of the paper: https://arxiv.org/abs/2304.03117

Web Demo: https://hyperhuman.top

HuggingFace Space: https://huggingface.co/spaces/DEEMOSTECH/ChatAvatar

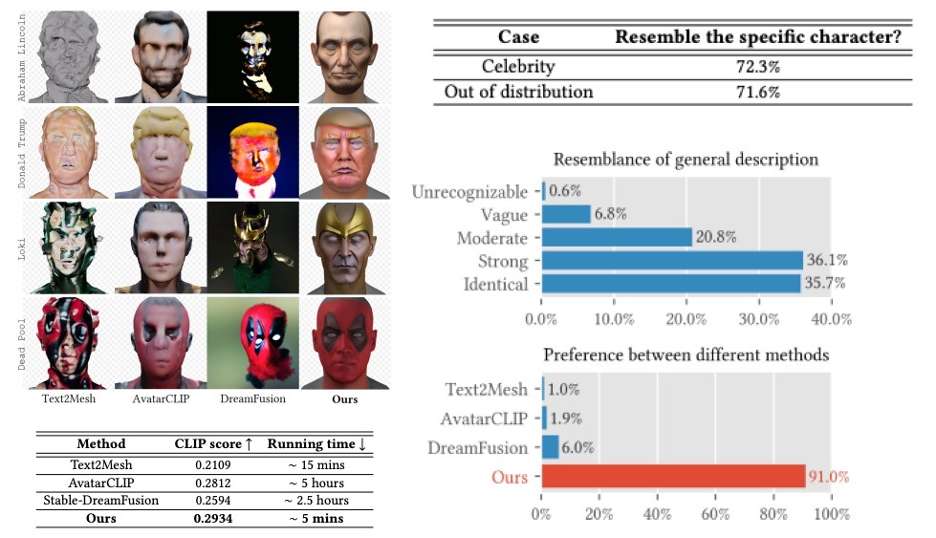

Introduction

Since the major breakthroughs in text and image generation, 3D generation has gradually become a focus of both research and industry. However, the 3D generation technologies currently available still face many challenges, including CG pipeline compatibility, accuracy, and running speed.

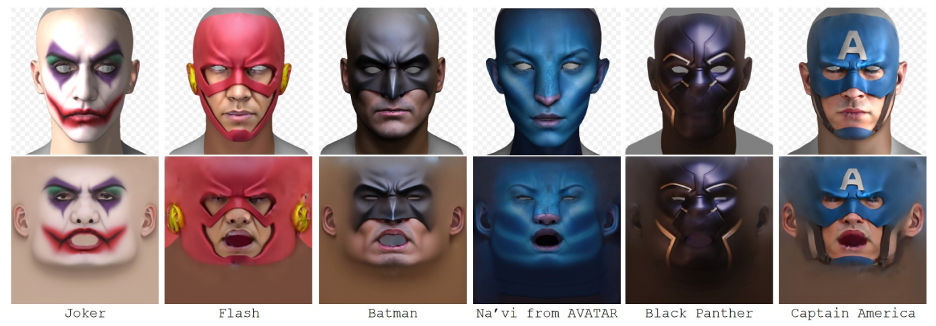

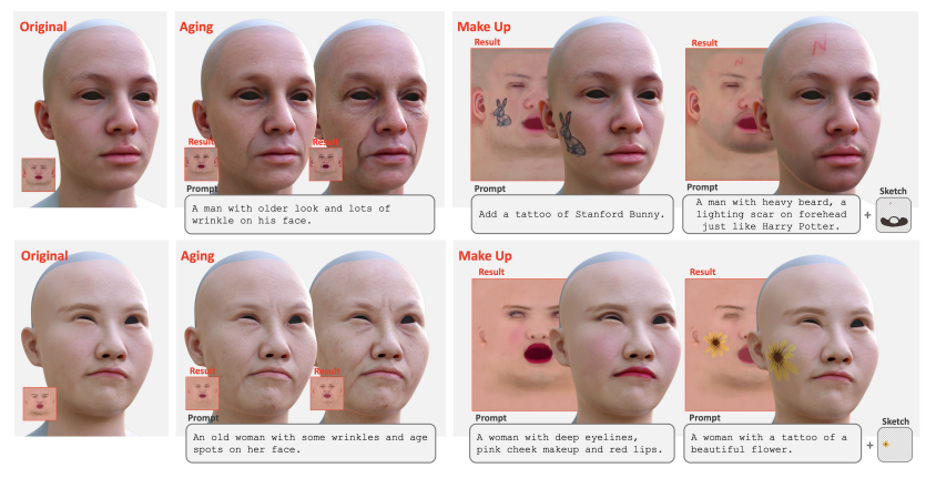

To address these problems, the R&D team from Deemos Technology and ShanghaiTech University proposed DreamFace, a text-guided progressive 3D generation framework. The framework directly generates 3D assets that comply with CG production standards, with higher accuracy, faster running speed, and better CG pipeline compatibility. This article describes the main functions of DreamFace and explores its application prospects in film and television production, game development, and other industries.

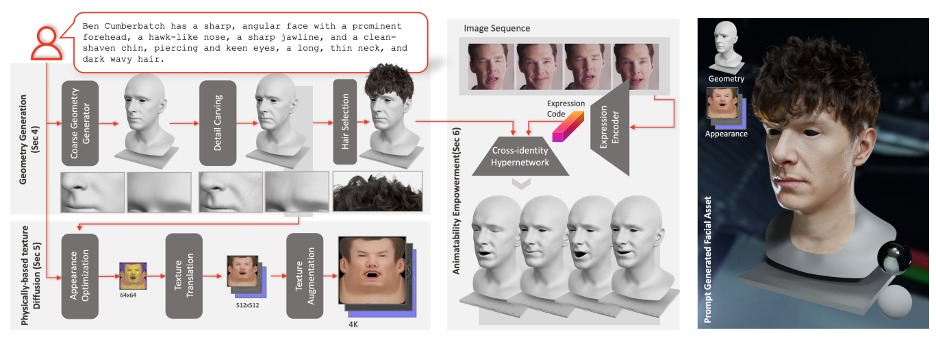

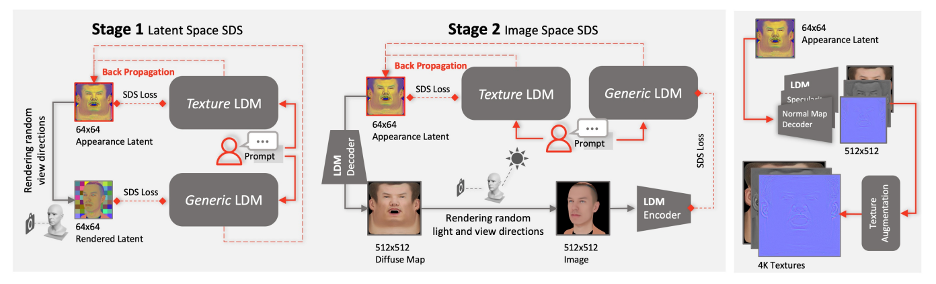

DreamFace Framework Overview

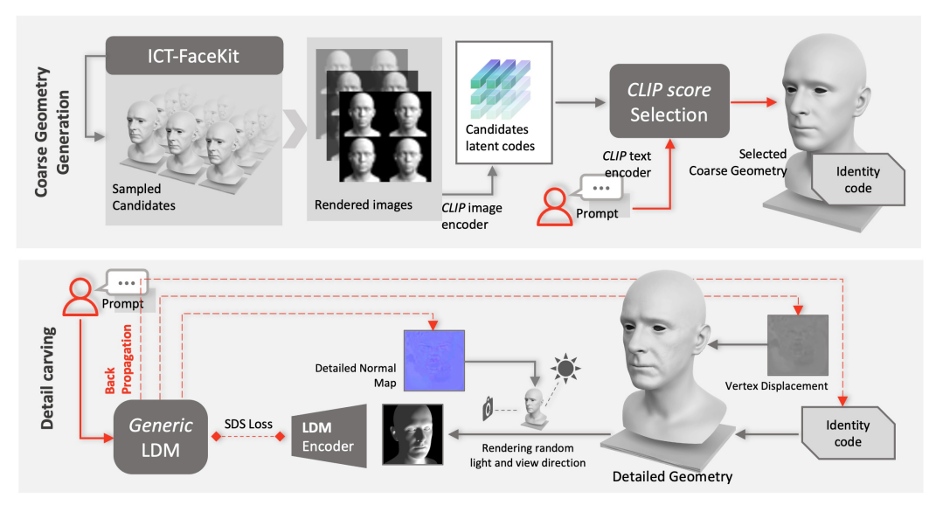

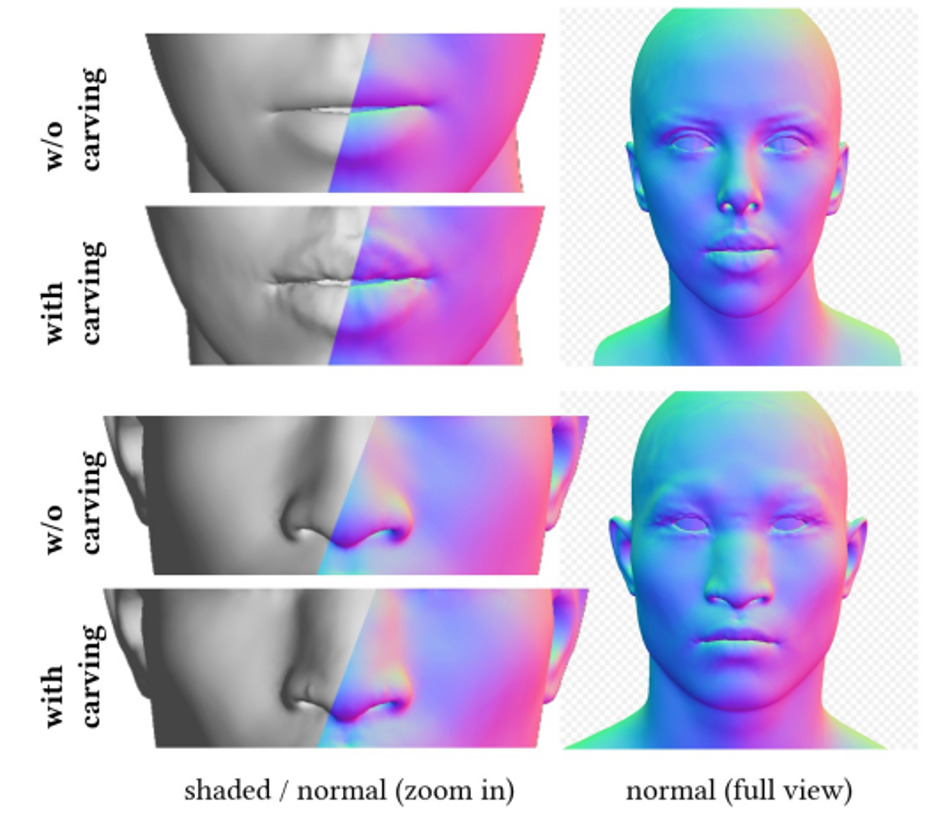

Geometry generation

To ensure that the generated texture maps contain no undesirable features or baked-in lighting while still remaining diverse, the team designed a prompt learning strategy. Two methods are used to generate high-quality diffuse maps:

(1) Prompt tuning. Instead of relying on hand-crafted, domain-specific text prompts, DreamFace combines two domain-specific continuous text prompts, Cd and Cu, with the corresponding text prompts. These continuous prompts are optimized during U-Net denoiser training, which avoids the instability and time cost of writing prompts by hand.

(2) Masking of non-face areas. The LDM (latent diffusion model) denoising process is additionally constrained by a non-face area mask, so that the resulting diffuse map does not contain any unwanted elements.
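The two ideas above can be illustrated with a small toy sketch. It is not DreamFace's implementation: the function names, the halving "denoiser", and the choice to re-apply the mask after every step are all assumptions made for illustration. The learned context vectors merely stand in for continuous prompts such as Cd and Cu, and plain Python lists stand in for tensors.

```python
def build_conditioning(learned_context, text_embeddings):
    """Prepend learnable continuous prompt vectors to the embedded text prompt.

    In prompt tuning, `learned_context` would be trainable parameters updated
    together with the denoiser; here it is just a list of vectors.
    """
    return [list(v) for v in learned_context] + [list(v) for v in text_embeddings]


def toy_denoise_step(latent, conditioning):
    """Hypothetical stand-in for one U-Net denoising step.

    A real step would predict noise from the latent and the conditioning;
    this toy version ignores the conditioning and simply halves every value.
    """
    return [[0.5 * x for x in row] for row in latent]


def apply_face_mask(latent, background, mask):
    """Keep latent values where mask == 1, reset to background where mask == 0."""
    return [
        [m * x + (1 - m) * b for x, b, m in zip(row_x, row_b, row_m)]
        for row_x, row_b, row_m in zip(latent, background, mask)
    ]


def masked_denoise(latent, background, mask, conditioning, steps=4):
    """Run the toy denoiser, constraining non-face areas after every step."""
    for _ in range(steps):
        latent = toy_denoise_step(latent, conditioning)
        latent = apply_face_mask(latent, background, mask)
    return latent


if __name__ == "__main__":
    context = [[0.1, 0.2], [0.3, 0.4]]   # stands in for learned prompts
    text = [[1.0, 1.0]]                  # stands in for an embedded text prompt
    cond = build_conditioning(context, text)

    noisy = [[8.0, 8.0], [8.0, 8.0]]
    blank = [[0.0, 0.0], [0.0, 0.0]]
    face_mask = [[1, 0], [0, 1]]         # 1 = face area, 0 = non-face area
    print(masked_denoise(noisy, blank, face_mask, cond, steps=3))
```

Re-applying the mask at every step, rather than once at the end, keeps non-face regions from influencing later steps; whether DreamFace constrains the process in exactly this way is not stated in the article.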

Finally, 4K physically based textures are generated via the super-resolution module for high-quality rendering.

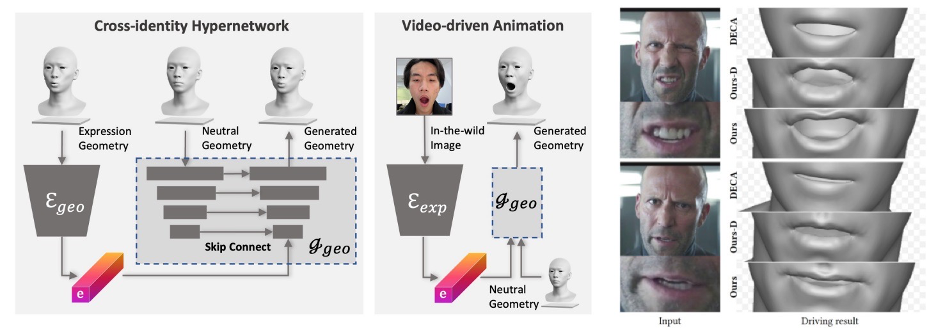

Animation ability generation

Applications and Outlook
