How to configure TCP load balancing in an Nginx server

1. Install nginx

1. Download nginx

```shell
# wget http://nginx.org/download/nginx-1.2.4.tar.gz
```

2. Download the TCP proxy module (it includes the nginx patch)

```shell
# wget https://github.com/yaoweibin/nginx_tcp_proxy_module/tarball/master
```

Source code homepage: https://github.com/yaoweibin/nginx_tcp_proxy_module

3. Patch, build, and install nginx

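```shell
# tar xvf nginx-1.2.4.tar.gz
# tar xvf yaoweibin-nginx_tcp_proxy_module-v0.4-45-ga40c99a.tar.gz
# cd nginx-1.2.4
# patch -p1 < ../yaoweibin-nginx_tcp_proxy_module-a40c99a/tcp.patch
# ./configure --prefix=/usr/local/nginx --with-pcre=../pcre-8.30 --add-module=../yaoweibin-nginx_tcp_proxy_module-a40c99a/
# make
# make install
```

The module's archive and directory names contain the commit hash of the snapshot you downloaded, so adjust them to match; the `patch` path and the `--add-module` path must point to the same extracted directory. `--with-pcre` expects the PCRE source tree, so pcre-8.30 must already be unpacked next to the nginx source directory.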

2. Modify the configuration file

Modify the nginx.conf configuration file

```shell
# cd /usr/local/nginx/conf
# vim nginx.conf
```

```nginx
worker_processes 1;

events {
    worker_connections 1024;
}

tcp {
    upstream mssql {
        server 10.0.1.201:1433;
        server 10.0.1.202:1433;
        check interval=3000 rise=2 fall=5 timeout=1000;
    }

    server {
        listen 1433;
        server_name 10.0.1.212;
        proxy_pass mssql;
    }
}
```

3. Start nginx

```shell
# cd /usr/local/nginx/sbin/
# ./nginx
```

Check that nginx is listening on port 1433:

```shell
# lsof -i :1433
```

4. Test

```shell
# telnet 10.0.1.201 1433
```
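If telnet is not installed, the same reachability check can be sketched in Python. This is a minimal sketch, assuming Python 3 on the test host; `tcp_port_open` is a hypothetical helper name. Like telnet, it only proves that a TCP handshake succeeds, not that SQL Server is answering correctly.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port completes within `timeout` seconds."""
    try:
        # create_connection performs the TCP handshake, just as telnet does.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, host unreachable, or timed out (socket.timeout is an OSError).
        return False
```

For example, `tcp_port_open("10.0.1.201", 1433)` checks a backend directly, while pointing it at the nginx host (presumably 10.0.1.212, the `server_name` above) checks the proxied path.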

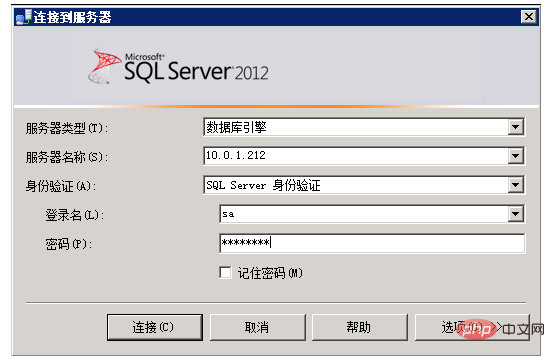

5. Test with a SQL Server client tool

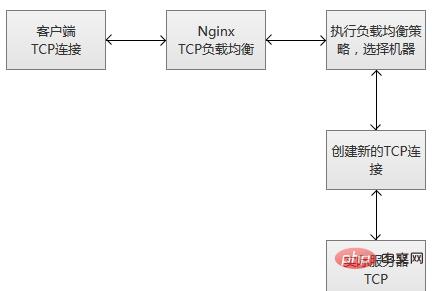

6. How TCP load balancing works

When nginx accepts a new client connection on the listening port, it immediately runs the scheduling algorithm to choose a backend server, then opens a new upstream connection to that server and relays data between the two connections.
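To make that flow concrete, here is a minimal asyncio sketch of the same idea, assuming plain round-robin scheduling. It is not how nginx is implemented: `pipe` and `make_handler` are hypothetical names, and there is no health checking or failover (a failed upstream connect simply drops the client).

```python
import asyncio
import itertools


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until EOF, then half-close the destination."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    finally:
        if not writer.is_closing() and writer.can_write_eof():
            writer.write_eof()


def make_handler(backends):
    """Build a client handler that spreads new connections over (host, port) backends round-robin."""
    next_backend = itertools.cycle(backends)

    async def handle(client_r: asyncio.StreamReader, client_w: asyncio.StreamWriter) -> None:
        host, port = next(next_backend)  # 1. scheduling decision for this new client connection
        try:
            up_r, up_w = await asyncio.open_connection(host, port)  # 2. new upstream connection
        except OSError:
            client_w.close()  # no failover in this sketch
            return
        try:
            # 3. relay bytes in both directions until each side sends EOF
            await asyncio.gather(pipe(client_r, up_w), pipe(up_r, client_w))
        finally:
            up_w.close()
            client_w.close()

    return handle
```

For example, `await asyncio.start_server(make_handler([("10.0.1.201", 1433), ("10.0.1.202", 1433)]), "0.0.0.0", 1433)` would mirror the `mssql` upstream from the configuration above.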

PS: Upstream health monitoring

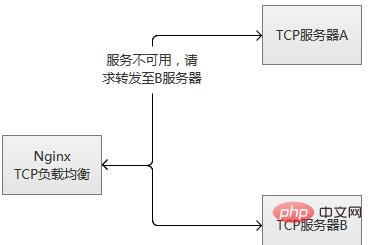
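The `check interval=3000 rise=2 fall=5 timeout=1000;` line in the upstream block above turns on nginx_tcp_proxy_module's active health checks: each server is probed every `interval` milliseconds (3 s), a probe that takes longer than `timeout` (1 s) counts as failed, `fall=5` consecutive failures mark a server down, and `rise=2` consecutive successes bring it back. The sketch below models only that up/down bookkeeping; the class name and the assumption that a server starts in the up state are illustrative, not taken from the module.

```python
from dataclasses import dataclass, field


@dataclass
class CheckState:
    """Up/down state of one upstream server under rise/fall counting (illustrative sketch)."""

    rise: int = 2
    fall: int = 5
    up: bool = True  # assumption: servers start as up in this sketch
    _ok_streak: int = field(default=0, init=False, repr=False)
    _fail_streak: int = field(default=0, init=False, repr=False)

    def record(self, ok: bool) -> bool:
        """Record one probe result and return whether the server is now considered up."""
        if ok:
            self._ok_streak += 1
            self._fail_streak = 0  # a success breaks any failure streak
            if not self.up and self._ok_streak >= self.rise:
                self.up = True
        else:
            self._fail_streak += 1
            self._ok_streak = 0  # a failure breaks any success streak
            if self.up and self._fail_streak >= self.fall:
                self.up = False
        return self.up
```

With the values above, four failed probes leave the server in rotation, the fifth takes it out, and it returns only after two successful probes in a row.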


The TCP load balancing module has built-in health monitoring. If an upstream server does not accept a TCP connection within the time set by proxy_connect_timeout, it is considered failed. nginx then immediately tries another healthy server in the upstream group and records the connection failure in the nginx error log.
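A minimal asyncio sketch of that failover rule, assuming the servers are tried in list order and `connect_timeout` plays the role of proxy_connect_timeout. `connect_with_failover` is a hypothetical helper, and Python's `logging` stands in for the nginx error log.

```python
import asyncio
import logging

log = logging.getLogger("tcp_lb_sketch")


async def connect_with_failover(backends, connect_timeout: float):
    """Try (host, port) pairs in order; return (reader, writer, address) for the first that connects."""
    for host, port in backends:
        try:
            reader, writer = await asyncio.wait_for(
                asyncio.open_connection(host, port), timeout=connect_timeout
            )
            return reader, writer, (host, port)
        except (OSError, asyncio.TimeoutError) as exc:
            # nginx would write this to its error log; logging stands in for that here.
            log.warning("upstream %s:%s failed: %r", host, port, exc)
    raise ConnectionError("no upstream server accepted the connection")
```

Because each attempt is bounded by `connect_timeout`, a dead server costs at most one timeout before the next server in the group is tried.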

If a server fails repeatedly (exceeding the limits configured by max_fails or fail_timeout), nginx also removes it from the group. Starting 60 seconds after removal, nginx periodically tries to reconnect to it to check whether it has recovered. Once it has, nginx adds it back to the upstream group and gradually increases its share of connections.
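The bookkeeping in this paragraph can be sketched as a small state object. This is an illustration under stated assumptions, not nginx's implementation: failures are counted in a sliding window of `fail_timeout` seconds, reaching `max_fails` takes the server out, and `probe_after` is a hypothetical name for the 60-second delay before a trial connection is allowed again. Timestamps are passed in explicitly so the logic is easy to test.

```python
from __future__ import annotations


class PassiveFailures:
    """Track connection failures for one upstream server (illustrative sketch)."""

    def __init__(self, max_fails: int = 1, fail_timeout: float = 10.0, probe_after: float = 60.0):
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.probe_after = probe_after  # hypothetical: delay before retrying a removed server
        self._recent: list[float] = []  # timestamps of failures inside the window
        self._removed_at: float | None = None

    def available(self, now: float) -> bool:
        """True if new (or, after removal, trial) connections may go to this server."""
        return self._removed_at is None or now - self._removed_at >= self.probe_after

    def record_failure(self, now: float) -> None:
        # Forget failures older than fail_timeout, then count this one.
        self._recent = [t for t in self._recent if now - t < self.fail_timeout]
        self._recent.append(now)
        if len(self._recent) >= self.max_fails:
            self._removed_at = now  # take the server out of rotation
            self._recent.clear()

    def record_success(self) -> None:
        # A successful trial connection puts the server back in the group.
        self._removed_at = None
        self._recent.clear()
```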

The ramp-up is gradual because most services have "hot data": 80% or more of requests are typically served from a hot-data cache, and only a small fraction are actually processed in full. When a machine has just started, that cache has not been built yet, so a sudden burst of forwarded requests can overwhelm the machine and bring it down again. Taking MySQL as an example, more than 95% of our queries are usually served from the in-memory cache, and only a few are actually executed.

In fact, a single machine and a cluster face the same risk whenever they are restarted or switched over under high concurrency. There are two main ways to address it:

(1) Increase the request load gradually, so that hot data accumulates in the cache until the service reaches its normal operating state.

(2) Load commonly used data in advance to actively "warm up" the service, and open the server to traffic only after the warm-up is complete.
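Approach (1) is what the gradual ramp-up described above does. A minimal sketch, assuming a linear ramp over a configurable period (the function names and the linear shape are illustrative assumptions, not nginx's documented algorithm), shows how a recovered server's share of new connections grows:

```python
def ramp_weight(nominal: float, elapsed: float, ramp_seconds: float) -> float:
    """Effective weight of a server `elapsed` seconds after it rejoined the group (linear ramp)."""
    if ramp_seconds <= 0 or elapsed >= ramp_seconds:
        return nominal
    return nominal * max(elapsed, 0.0) / ramp_seconds


def connection_shares(weights: dict[str, float]) -> dict[str, float]:
    """Fraction of new connections each server would receive under weighted balancing."""
    total = sum(weights.values())
    if total == 0:
        return {name: 0.0 for name in weights}
    return {name: w / total for name, w in weights.items()}
```

For example, 15 seconds into a 60-second ramp, a recovered server with weight 10 has an effective weight of 2.5, so next to a healthy peer of weight 10 it receives about 20% of new connections instead of 50%, giving its cache time to fill.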

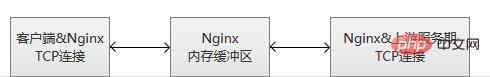

TCP load balancing works on the same principle as LVS: it forwards connections at the transport layer without parsing application data, so it is considerably cheaper than HTTP load balancing. It will still not outperform LVS, because LVS runs as a kernel module while nginx runs in user space and is comparatively heavyweight. One regrettable point: the built-in health monitoring described in this section is a paid feature of the commercial NGINX Plus, whereas the nginx_tcp_proxy_module used earlier in this article is a free third-party module.
