Douyin has long been known for going all out on special-effects features. Recently, a "Cartoon Face" effect has been in the spotlight: men, women and children alike come out looking as lively and cute as characters from a Disney animation. Once launched, "Cartoon Face" spread quickly on Douyin and became a favorite with users. Related trending topics such as "One-click transformation into a tall and sweet cartoon face", "All the fugitive princesses on Douyin are here", "Show off your baby with cartoon face style", "Prince and Princess Sprinkling Sugar Gesture Dance" and "Capturing the Moment of Fairy Tale Magic Failure" keep growing. Two of them, "All the fugitive princesses on Douyin are here" and "Capturing the Moment of Fairy Tale Magic Failure", even appeared on Douyin's national hot list. The effect has now been used by more than 9 million users.

"Cartoon Face" is a 3D-style effect. Effects of this type are hard to develop for several reasons: diverse CG training data is hard to obtain, vivid expressions are hard to reproduce, realistic and three-dimensional skin lighting is hard to achieve, and the exaggerated, strongly stylized deformation of facial features is hard for a GAN to learn. To address this, ByteDance's Intelligent Creation team focused on breakthrough optimizations for 3D stylization, which solved the problems above and also produced a general-purpose, reusable technical solution.

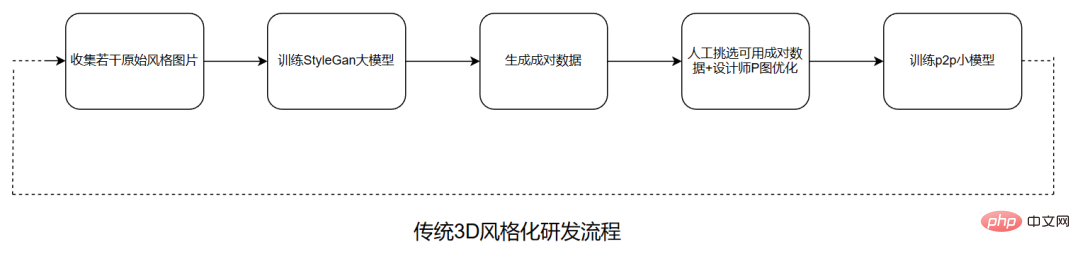

In the past, a complete 3D stylization R&D process was divided into the following stages:

Collect a set of original style images -> train a large StyleGAN model -> generate paired data -> manually select the usable pairs -> have designers retouch the images -> train a small pix2pix (p2p) model, and then repeat.

The problems with this traditional R&D process are obvious: the iteration cycle is long, designers have little involvement, and the results are hard to accumulate and reuse.

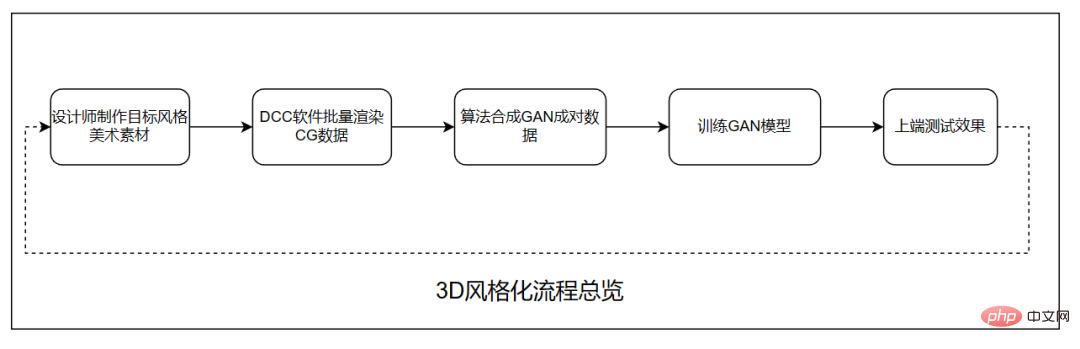

In the research and development of the "cartoon face" special effect, the ByteDance intelligent creation team adopted an innovative research and development process:

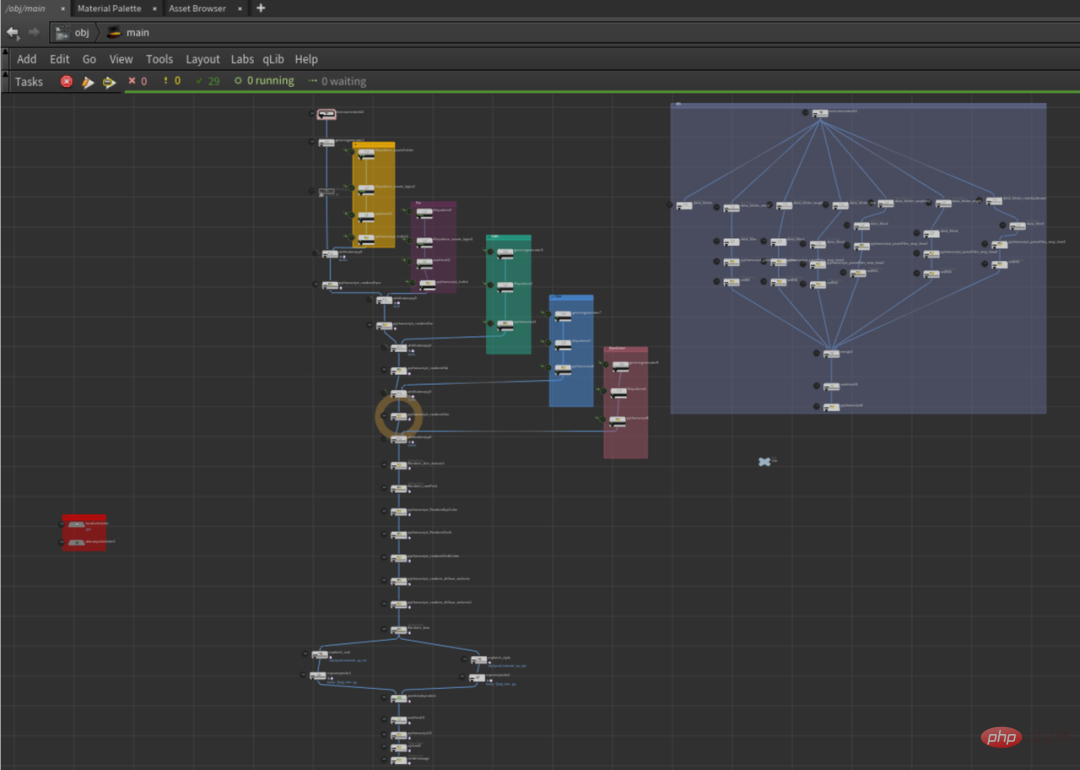

The process starts with the designers producing the target style. The designers provide 3D art assets according to requirements agreed with the algorithm team. The ByteDance Intelligent Creation team then uses DCC software to batch-render a diverse set of CG data. During this process, the technical team introduced the currently popular AIGC technology for the first time to augment the data, then used a GAN to synthesize the paired data required for training, and finally trained its self-developed deformation pix2pix model to obtain the final effect.

R&D flow chart of “Cartoon Face” by ByteDance Intelligent Creation Team

As the pipeline shows, this approach greatly shortens the iteration cycle, raises the degree of automation, and gives designers more involvement. In practice, the new engineering pipeline cut the iteration cycle from 6 months to 1 month, and the solution is easier to accumulate and reuse.

Transformation effects are increasingly common on social media, and people care more and more about their aesthetics and accuracy. To help users achieve a better stylized transformation, Douyin's effects designers studied the field carefully and, drawing on popular animation styles, designed an original set of cartoon-face effects. Users get an animation-like, lively character style while also looking more beautiful or handsome.

Douyin's effects designers studied the transformation effects already on the market in depth and found problems such as an insufficiently distinctive style, expressions that are not exaggerated enough, and lighting that is not realistic enough. They therefore redesigned the cartoon-face style around domestic aesthetics, exaggerating the facial proportions of men and women: "girls" get cute round faces with lively features, and "boys" get strong, long faces with handsome features. The designers kept the user's own hair, enhanced its fluffiness and gloss, and blended it more naturally with the cartoon face. The cartoon-textured skin also incorporates details of the user's own skin, so the effect looks more natural and better preserves the user's individual characteristics.

In addition, the designers defined how light and shadow should look under different lighting conditions so that lighting can be reproduced in complex scenes. This makes the cartoon face more three-dimensional and natural, so it blends into everyday selfies without looking out of place. Finally, the designers created exaggerated, iconic facial expressions, used facial capture to generate expression CG data for the digital human assets, and kept improving the training data and algorithms to produce expressions that show the user's personality more vividly.

Training data for 3D-style effects depends on high-quality CG renders, and the data distribution needs to be highly diverse. Manually modeling 3D assets is also very labor-intensive, and the assets are rarely reusable: a project often spends expensive manpower and time producing a batch of 3D assets that is abandoned entirely once the project ends.

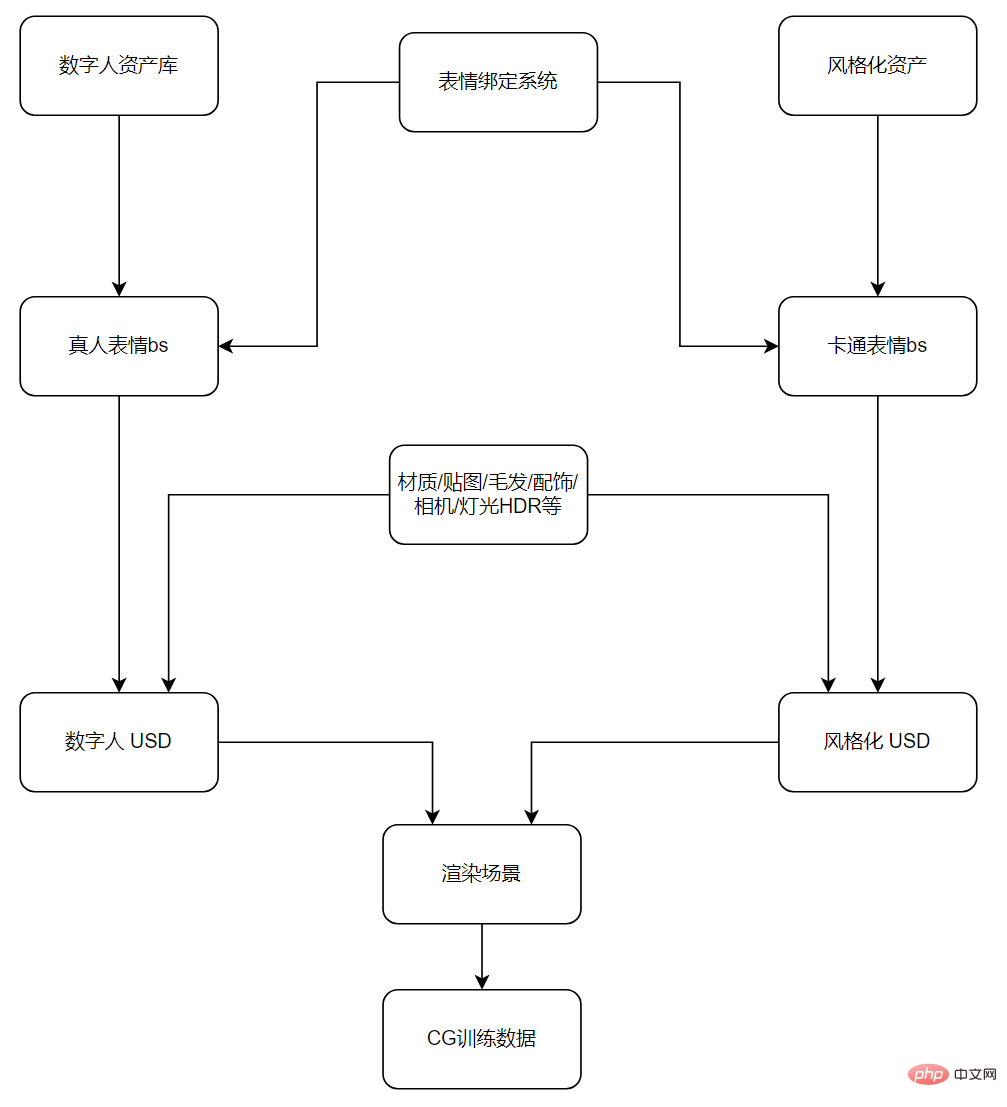

This time, ByteDance's Intelligent Creation team built a general-purpose, easily extensible workflow for CG synthetic data.

Flowchart of the ByteDance Intelligent Creation team's CG synthetic-data workflow

The synthetic-data workflow is as follows:

1. Procedurally generate digital assets in Houdini: procedurally sculpt faces, rig skeletons, adjust weights, and so on, to build a library of realistic digital human assets.

Diverse 3D digital assets

2. Build a USD template with Houdini's Solaris, and import assets such as hair, fur, head models, clothing and expression coefficients by USD reference.

Skin map sample

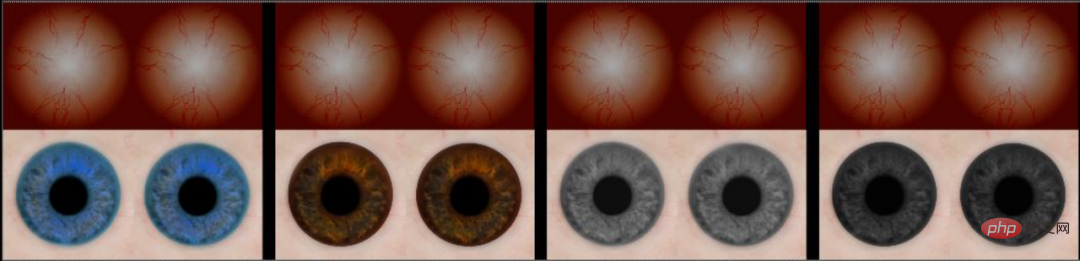

Iris map sample

3. Use Houdini's PDG to randomly combine assets, camera angles, lighting environments, and so on, and use PDG work items to precisely control the data distribution.

Automated PDG node graph
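The random combination in step 3 can be illustrated with a minimal Python sketch. It does not use the Houdini or PDG API; the asset names and the `build_work_items` helper are hypothetical. It only shows the idea of fixing the count per camera angle up front so that the resulting distribution is exact, while the other attributes are drawn at random.

```python
import random
from collections import Counter

# Hypothetical asset pools; a real pipeline would reference USD assets instead.
HEADS = ["head_a", "head_b", "head_c"]
LIGHTS = ["indoor_warm", "outdoor_sun", "backlit"]


def build_work_items(camera_counts, seed=0):
    """Return one render config per work item.

    camera_counts maps a camera angle to the exact number of items wanted
    for it, so the camera distribution is controlled rather than sampled.
    """
    rng = random.Random(seed)  # seeded so a batch can be reproduced
    items = []
    for camera, count in camera_counts.items():
        for _ in range(count):
            items.append({
                "camera": camera,
                "head": rng.choice(HEADS),    # random asset
                "light": rng.choice(LIGHTS),  # random lighting environment
            })
    rng.shuffle(items)  # avoid rendering all items of one angle in a row
    return items


items = build_work_items({"front": 5, "left_30": 3, "up_20": 2})
camera_histogram = Counter(item["camera"] for item in items)
```

Here `camera_histogram` matches the requested counts exactly, which is the property the work-item control in step 3 is after.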

Because R&D requires frequently rendering large amounts of data for each iteration, both the compute cost and the rendering wait time are high. Previously, for Douyin's "Magic Transformation" effect, the team spent millions on external render farms. For "Cartoon Face", the team relied on the solid infrastructure of Volcano Engine, ByteDance's cloud platform, to greatly reduce compute costs.

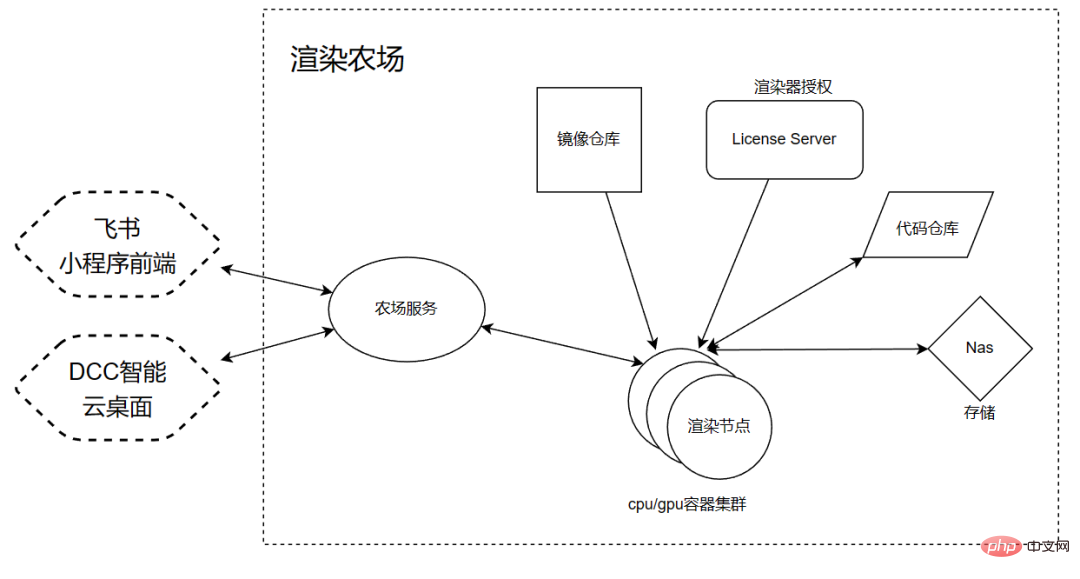

Drawing on film and television industry workflows, ByteDance's Intelligent Creation team built its own render farm platform, which can split an offline task across multiple render machines for parallel processing. It uses Volcano Engine's container image platform for image hosting, its resource pooling platform to request and release resources, CPU/GPU clusters to scale containers up and down dynamically, and NAS for asset management. As a result, the render farm can scale out to thousands of render nodes with one click and compute efficiently.

On top of this, the team customized the processing logic for a single task, including pre-processing, engine rendering, post-processing and other steps, and scales the cluster up or down dynamically as needed to make the most of computing resources.
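As a rough illustration of splitting one offline task into chunks that run in parallel, each going through pre-processing, rendering and post-processing, here is a minimal sketch using Python's standard `concurrent.futures`. Threads on one machine stand in for render nodes, and the stage functions are hypothetical placeholders; the team's actual farm logic is not described in this article.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(frames, n_workers):
    """Split a list of frame numbers into at most n_workers contiguous chunks."""
    if not frames:
        return []
    size = -(-len(frames) // n_workers)  # ceiling division
    return [frames[i:i + size] for i in range(0, len(frames), size)]


def preprocess(chunk):
    # Placeholder: e.g. resolve assets for this chunk.
    return {"frames": chunk}


def render(job):
    # Placeholder: pretend each frame produces one image file.
    return {**job, "images": [f"frame_{f:04d}.png" for f in job["frames"]]}


def postprocess(job):
    # Placeholder: e.g. crop or convert; here we just return the file names.
    return job["images"]


def run_task(chunk):
    """Single-task logic: pre-processing, rendering, post-processing."""
    return postprocess(render(preprocess(chunk)))


def run_farm(frames, n_workers):
    chunks = split_into_chunks(frames, n_workers)
    if not chunks:
        return []
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # map() yields results in the same order as the input chunks.
        results = list(pool.map(run_task, chunks))
    return [image for chunk_images in results for image in chunk_images]


rendered = run_farm(list(range(1, 11)), n_workers=3)
```

Changing `n_workers` changes only how the work is partitioned, not the result, which is what makes scaling the cluster up or down transparent to the task logic.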

To improve efficiency further and make it easier for designers to take part in tuning the effect, the technical team also built a Feishu mini program for designers. Through Feishu, designers can trigger automated cloud workflows to iterate on the art; when the cloud task finishes, a message is sent back to Feishu for them to review, which greatly improves their efficiency.

At the same time, the ByteDance Intelligent Creation team built a custom event trigger (EventTrigger) and APIs to connect the render farm, the Feishu platform and the cloud desktop platform, realizing the all-in-one concept as fully as possible. This lets designers and engineers collaborate on R&D more conveniently through Feishu and the cloud desktop.
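The event-driven connection described above can be pictured with a small publish/subscribe sketch. This is not the team's EventTrigger and does not call any Feishu API; the class, event name and payload fields are all hypothetical, and the "notification" is just a string appended to a list.

```python
class EventTrigger:
    """Minimal in-process event dispatcher (illustrative only)."""

    def __init__(self):
        self._handlers = {}

    def on(self, event_name, handler):
        """Register a handler to be called when event_name is emitted."""
        self._handlers.setdefault(event_name, []).append(handler)

    def emit(self, event_name, payload):
        """Call every handler registered for event_name, in order."""
        return [handler(payload) for handler in self._handlers.get(event_name, [])]


sent_messages = []  # stands in for messages pushed to a chat platform


def notify_designer(payload):
    message = f"Task {payload['task_id']} finished: {payload['preview']}"
    sent_messages.append(message)
    return message


trigger = EventTrigger()
trigger.on("render_finished", notify_designer)

# The farm would emit this when a cloud task completes.
trigger.emit("render_finished", {"task_id": 42, "preview": "preview_42.png"})
```

Keeping the farm and the notification side decoupled behind an event name means either side can change without the other needing to know.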

Self-developed rendering farm platform

After DALL・E appeared, the ByteDance Intelligent Creation team began tracking and planning for related technologies in early 2021. Building on the open-source Stable Diffusion model, the team constructed a dataset at the one-billion scale and trained two models: a general-purpose diffusion model that can generate images in styles such as oil painting and ink painting, and an anime-style diffusion model.

Not long ago, the "AI painting" effect supported by the ByteDance Intelligent Creation team became popular on Douyin using this new technology. For Douyin's "Cartoon Face", the technical team further explored the diffusion model's ability to generate 3D cartoon styles and adopted an image-to-image strategy: first add noise to an image, then use the trained text-to-image model to denoise it under text guidance. Starting from a pre-trained Stable Diffusion model, the input is the GAN-generated target 3D-style image that matches the real-person photo, and a set of carefully tuned text keywords steers the style toward the desired direction. The Stable Diffusion output is used as the final data and handed to the subsequent GAN model for learning.
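The "add noise first" half of the image-to-image strategy can be sketched with the standard DDPM forward process, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. The sketch below is an illustration under assumptions: it uses a plain linear beta schedule and operates on a short list of raw values rather than on Stable Diffusion latents, the `strength` parameter and function names are hypothetical, and the text-guided denoising step is omitted because it requires a trained model.

```python
import math
import random


def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    alpha_bars = []
    product = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars


def add_noise(pixels, strength, num_steps=1000, seed=0):
    """Noise `pixels` up to the timestep implied by strength in (0, 1].

    A higher strength starts denoising from a noisier image, so less of the
    input survives and the text prompt has more influence.
    """
    rng = random.Random(seed)
    alpha_bars = alpha_bar_schedule(num_steps)
    t = max(1, min(num_steps, int(strength * num_steps))) - 1
    a = alpha_bars[t]
    noisy = [
        math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
        for x in pixels
    ]
    return noisy, t


# Values scaled to [-1, 1], as is common for diffusion model inputs.
noisy_pixels, start_step = add_noise([0.5, -0.5, 0.0], strength=0.5)
```

Denoising would then run from `start_step` back to step 0 with the text prompt as conditioning, which is where the tuned keywords mentioned above take effect.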

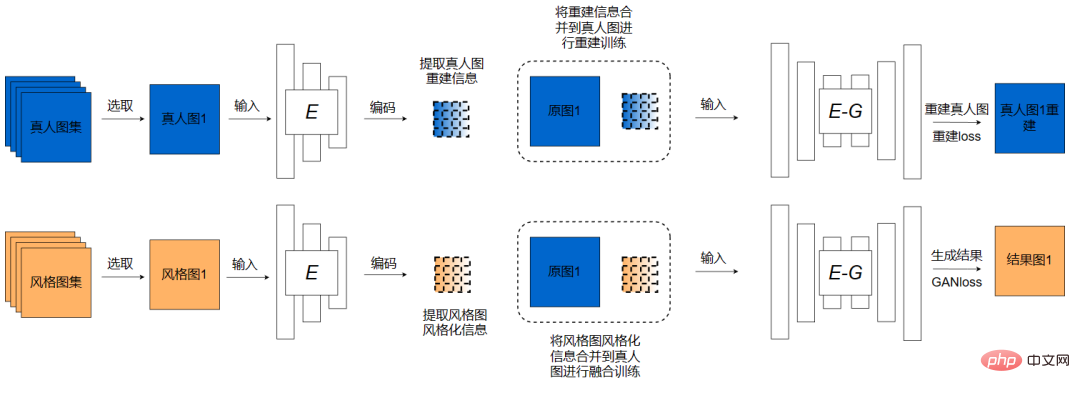

Because the target style of Douyin's "Cartoon Face" deforms the original portrait considerably, the traditional p2p (pix2pix) framework struggles to produce high-quality results when used directly. ByteDance's Intelligent Creation team therefore developed its own p2p deformation GAN training framework, which works well for cartoon targets with large deformation and strong style. The framework consists of two parts:

1. Preliminary stylization training to extract the cartoon-face style information. The technical team built an unpaired training framework in which style information is fused interactively. Feeding real-person and cartoon-face datasets into the framework extracts the cartoon-face style information. The framework is trained end to end and includes style feature encoding, feature fusion, reconstruction training and preliminary stylization training. When training finishes, the resulting cartoon-face style information is passed to the next stage for refinement.

2. Fuse the cartoon-face style information and run refined training. The style information obtained in the first step includes both style and deformation. This information is fused into the real-person image for refined training, using p2p-style strongly supervised losses on paired data. Once training converges, the cartoon-face model is obtained.
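The article does not spell out the strongly supervised loss used in the second stage. For reference, the standard pix2pix objective, which combines a conditional GAN loss with an L1 loss against the paired target, is shown below; whether the team's framework uses exactly this form is an assumption. Here $x$ is the real-person image, $y$ the paired cartoon target, $G$ the generator, $D$ the discriminator and $\lambda$ a weighting hyperparameter (the generator's noise input is omitted for brevity).

```latex
\begin{aligned}
\mathcal{L}_{\mathrm{cGAN}}(G, D) &= \mathbb{E}_{x,y}\big[\log D(x, y)\big]
  + \mathbb{E}_{x}\big[\log\big(1 - D(x, G(x))\big)\big] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y}\big[\lVert y - G(x) \rVert_{1}\big] \\
G^{*} &= \arg\min_{G}\max_{D}\; \mathcal{L}_{\mathrm{cGAN}}(G, D) + \lambda\,\mathcal{L}_{L1}(G)
\end{aligned}
```

The L1 term is what makes the supervision "strong": every generated pixel is compared directly with the paired target, which is only possible because the earlier stages produce paired data.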

With the technical solutions above, Douyin's "Cartoon Face" simplifies the engineering pipeline and greatly improves iteration efficiency. It also shows clear improvements in handling large head angles, rich expressions, faithful style reproduction, consistent light and shadow, and matching of multiple skin tones. The ByteDance Intelligent Creation team responsible for "Cartoon Face" has reportedly been focusing on breakthrough optimizations for 3D stylization since 2021, and this technical solution has already supported a variety of popular 3D-style effects on the platform.

About the ByteDance Intelligent Creation Team:

The Intelligent Creation Team is ByteDance's AI and multimedia technology middle platform. It supports many of the company's product lines, such as Douyin, Jianying and Toutiao, by building leading technologies in computer vision, audio and video editing, and special effects processing. Through Volcano Engine, it also provides external ToB partners with industry-leading intelligent creation capabilities and industry solutions.
