Over the past year, both startups and established companies have been slow to announce, launch, and deploy artificial intelligence (AI) and machine learning (ML) accelerators. This is not unreasonable: many companies that have published accelerator reports spent three to four years researching, analyzing, designing, validating, and weighing design trade-offs for the accelerator, and building the technology stack needed to program it. Companies that have released upgraded versions of their accelerators report shorter development cycles, but those cycles are still at least two to three years. These accelerators still focus on accelerating deep neural network (DNN) models, with applications ranging from extremely low-power embedded speech recognition and image classification to large-model training in data centers. Competition continues in the typical market and application areas, and it is an important part of the shift by industrial and technology companies from traditional computing to machine learning solutions.

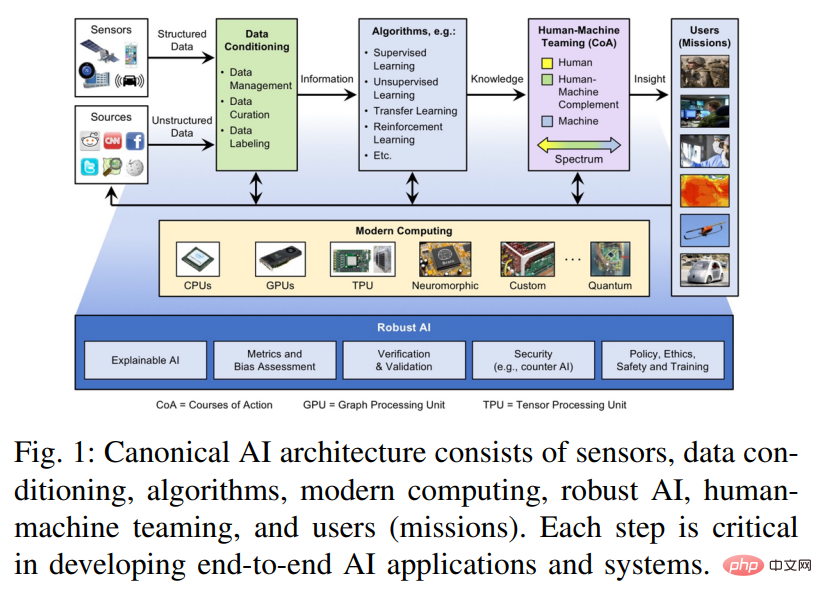

The AI ecosystem brings together components of edge computing, traditional high-performance computing (HPC), and high-performance data analytics (HPDA), which must work together to effectively empower decision-makers, frontline staff, and analysts. Figure 1 shows an architectural overview of this end-to-end AI solution and its components.

Raw data must first go through data wrangling. In this step, the data is fused, aggregated, structured, accumulated, and converted into information. That information serves as input to supervised or unsupervised algorithms, such as neural networks, that extract patterns, fill in missing data, find similarities between data sets, and make predictions, thereby converting the input information into actionable knowledge. This actionable knowledge is then passed to humans and used in decision-making during the human-machine collaboration phase, which provides users with useful and important insights and turns knowledge into actionable intelligence.

Underpinning this system are modern computing systems. The trend described by Moore's Law has ended, and so have many related laws and trends, such as Dennard scaling (power density), clock frequency, core count, instructions per clock cycle, and instructions per joule (Koomey's Law). Following the system-on-chip (SoC) trend that first appeared in automotive applications, robotics, and smartphones, innovation continues through the development and integration of accelerators for commonly used kernels, methods, or functions. These accelerators strike different balances between performance and functional flexibility, and they include an explosion of innovation in deep learning processors and accelerators. Drawing on a large number of related papers, this article explores the relative advantages of these technologies, which are particularly important when applying AI to embedded systems and data centers with extreme requirements on size, weight, and power.

This article is an update to the IEEE-HPEC survey papers from the past three years. As in past years, it continues to focus on accelerators and processors for deep neural networks (DNNs) and convolutional neural networks (CNNs), which are extremely computationally intensive. It concentrates on accelerators and processors for inference, because many AI/ML edge applications rely heavily on inference. The article covers all numeric precision types supported by the accelerators, but most accelerators achieve their best inference performance at int8 or fp16/bf16 (IEEE 16-bit floating point or Google's 16-bit brain float).

Paper link: https://arxiv.org/pdf/2210.04055.pdf

Many papers already discuss AI accelerators. For example, the first paper in this series of surveys discusses the peak performance of FPGAs for certain AI models. Because previous surveys have covered FPGAs in depth, they are no longer included in this one. This ongoing survey effort aims to collect a comprehensive list of AI accelerators, including their computational capability, energy efficiency, and computational efficiency in embedded and data center applications. The article mainly compares neural network accelerators for government and industrial sensor and data processing applications. Some accelerators and processors included in previous years' papers have been excluded from this year's survey because they have been replaced by newer accelerators from the same company, are no longer maintained, or are no longer relevant to the topic.

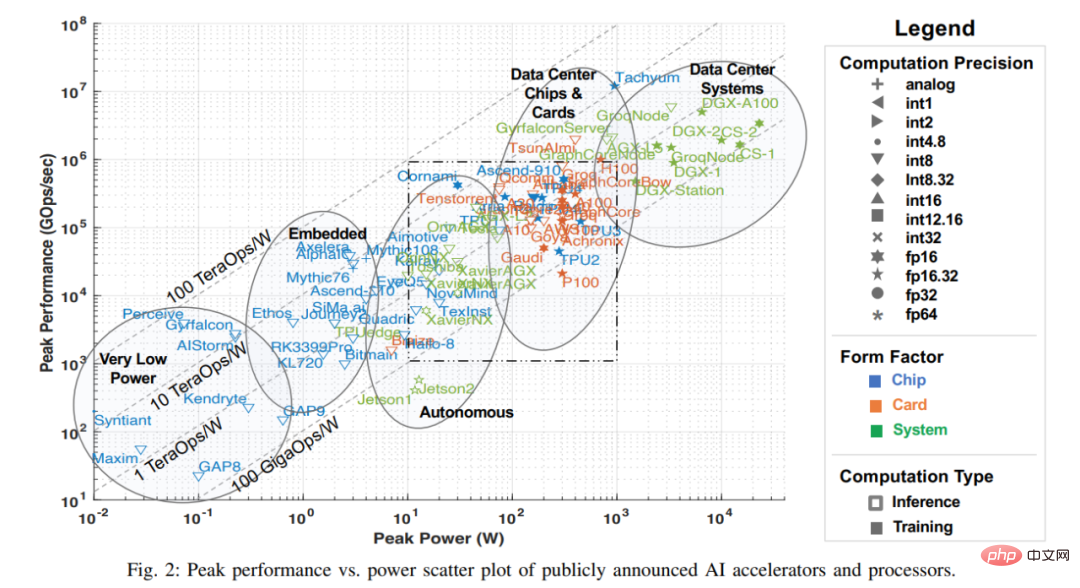

Many of the latest advances in artificial intelligence are due in part to improvements in hardware performance, which make it possible to run machine learning algorithms that require huge amounts of computing power, especially networks such as DNNs. The survey gathers information from publicly available materials, including research papers, technical journals, and company-published benchmarks. There are other ways to obtain information on companies and startups (including those in stealth mode), but this article omits such information as of the time of the survey; that data will be included once it becomes public. Key metrics from the public data are shown in the chart below, which plots the latest processors' peak performance against power consumption (as of July 2022).

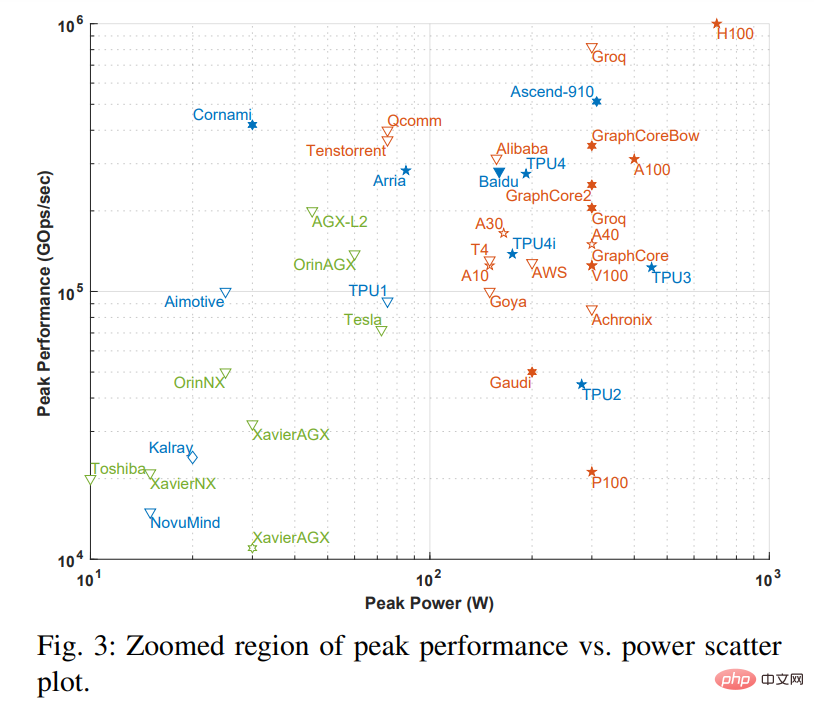

Note: The dotted box in Figure 2 corresponds to Figure 3 below, which is an enlarged view of that region.

The x-axis shows peak power, and the y-axis shows peak giga-operations per second (GOps/s); both axes use a logarithmic scale. The numeric precision of the processing capability is indicated by the marker shape, ranging from int1 to int32 and from fp16 to fp64. Where two precisions are shown, the number on the left is the precision of the multiplication and the number on the right is the precision of the accumulation/addition (for example, fp16.32 means fp16 multiplication with fp32 accumulation/addition). Color indicates the form factor: blue is a single chip, orange is a card, and green is a complete system (single-node desktop and server systems). The survey is limited to single-motherboard, single-memory systems. Hollow markers show the peak performance of accelerators that perform inference only, while solid markers show accelerators that perform both training and inference.
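The precision labels described above follow a simple pattern, so they can be split into a multiply precision and an accumulate precision mechanically. The following is a minimal sketch, not code from the survey: the `Precision` type and `parse_precision` function are hypothetical names, and treating a single-number label such as `int8` as using the same width for both operations is an assumption made here for illustration.

```python
import re
from typing import NamedTuple


class Precision(NamedTuple):
    kind: str             # "int", "fp", or "bf"
    multiply_bits: int    # left-hand number in the label
    accumulate_bits: int  # right-hand number in the label


# Matches labels such as "int8", "fp16", "bf16", or "fp16.32".
_LABEL = re.compile(r"^(int|fp|bf)(\d+)(?:\.(\d+))?$")


def parse_precision(label: str) -> Precision:
    """Split a label like 'fp16.32' into multiply and accumulate widths."""
    match = _LABEL.match(label.strip().lower())
    if match is None:
        raise ValueError(f"unrecognized precision label: {label!r}")
    kind, multiply, accumulate = match.groups()
    multiply_bits = int(multiply)
    # Assumption: a label with one number uses that width for both operations.
    accumulate_bits = int(accumulate) if accumulate is not None else multiply_bits
    return Precision(kind, multiply_bits, accumulate_bits)


if __name__ == "__main__":
    for label in ("int8", "fp16.32", "bf16.32"):
        print(label, parse_precision(label))
```

Keeping the two widths separate matters when comparing accelerators, because two chips that both advertise fp16 multiplication may accumulate at different precisions.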

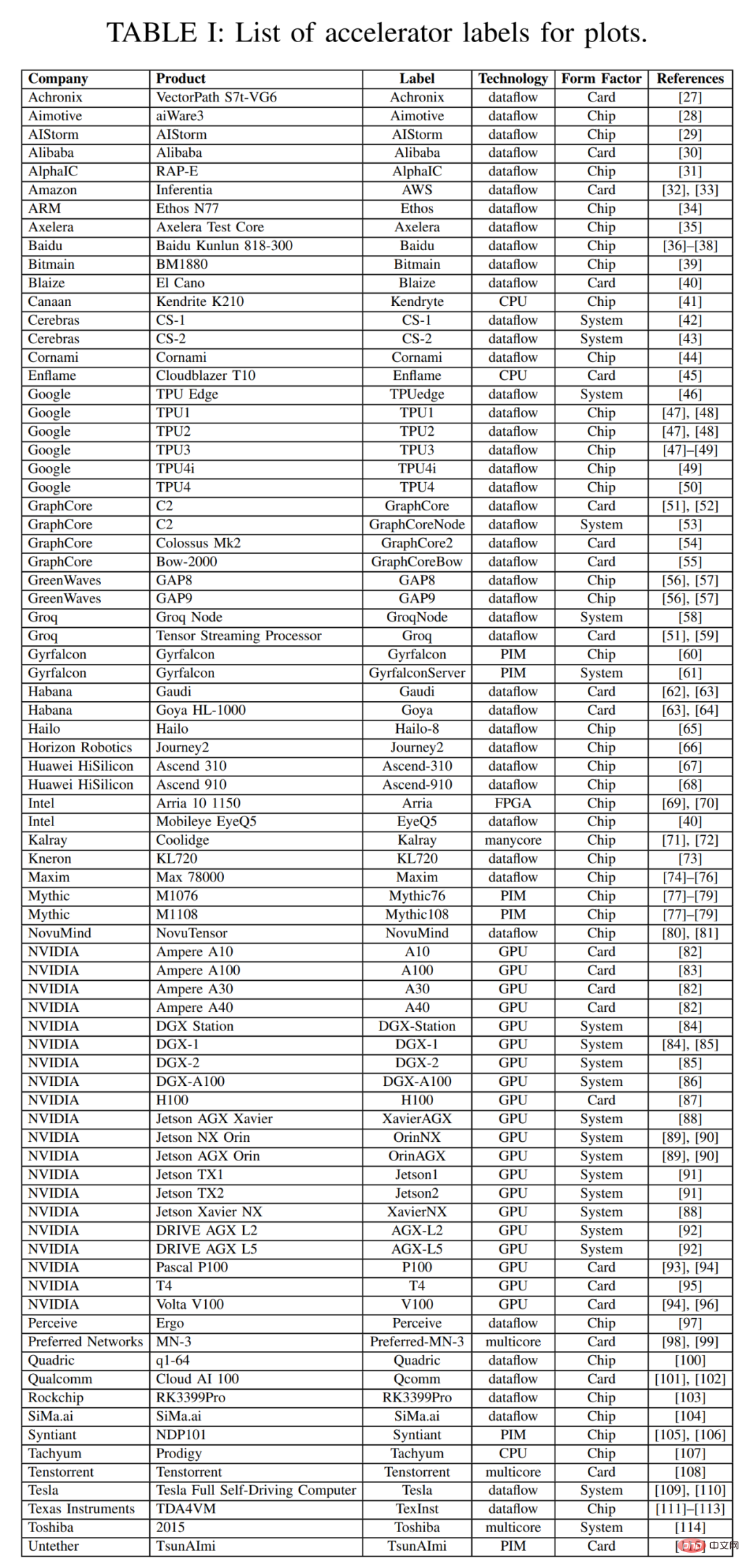

This article begins with a scatter plot of survey data from the past three years. Table 1 below summarizes some important metadata for the accelerators, cards, and complete systems, including the label for each point in Figure 2; many of the points are carried over from last year's survey. Most columns and entries in Table 1 are self-explanatory, but two technology entries may not be: dataflow and PIM. Dataflow processors are customized for neural network inference and training. Because the computations in neural network training and inference are laid out fully deterministically, they are well suited to dataflow processing, in which computation, memory accesses, and inter-ALU communication are explicitly/statically programmed or placed-and-routed onto the compute hardware. Processor-in-memory (PIM) accelerators integrate processing elements with memory technology. Among them are accelerators based on analog computing technology that augments flash memory circuits with in-place analog multiply-add capability. See the Mythic and Gyrfalcon accelerator materials for more details on this technology.

This article categorizes accelerators according to their intended applications. Figure 2 uses ellipses to identify five classes of accelerators by performance and power consumption: very low power for speech processing and very small sensors; embedded cameras, small drones, and robots; driver assistance systems, autonomous driving, and autonomous robots; chips and cards for data centers; and data center systems.

The performance, functions, and other metrics of most accelerators have not changed; see the papers from the past two years for details. The following accelerators were not included in past articles.

Dutch embedded systems startup Axelera says it has produced an embedded test chip as part of a design with both digital and analog capabilities; this test chip was built to test the extent of the digital design capabilities. The company hopes to add analog (and possibly flash) design elements in future work.

Maxim Integrated has released a system-on-chip (SoC) called the MAX78000 for ultra-low-power applications. It includes ARM CPU cores, RISC-V CPU cores, and AI accelerators. The ARM core is used for rapid prototyping and code reuse, while the RISC-V core is optimized for lowest power consumption. The AI accelerator has 64 parallel processors supporting 1-bit, 2-bit, 4-bit and 8-bit integer operations. The SoC operates at a maximum power of 30mW, making it suitable for low-latency, battery-powered applications.

Tachyum recently released an all-in-one processor called Prodigy. Each Prodigy core integrates the functions of a CPU and a GPU, and the chip is designed for HPC and machine learning applications. It has 128 high-performance unified cores operating at 5.7GHz.

NVIDIA released its next-generation GPU, Hopper (H100), in March 2022. Hopper integrates more Streaming Multiprocessors (SIMD and Tensor cores) and 50% more memory bandwidth, and the SXM mezzanine card instance has a power of 700W (PCIe card power is 450W).

NVIDIA has released a series of system platforms over the past few years for deploying Ampere-architecture GPUs in automotive, robotics, and other embedded applications. For automotive applications, the DRIVE AGX platform adds two new systems: DRIVE AGX L2 enables Level 2 autonomous driving in the 45W power range, and DRIVE AGX L5 enables Level 5 autonomous driving in the 800W power range. Jetson AGX Orin and Jetson NX Orin also use Ampere-architecture GPUs, target robotics, factory automation, and similar applications, and have maximum peak power of 60W and 25W, respectively.

Graphcore released its second-generation accelerator chip, the GC200, which is deployed on a PCIe card and has a peak power of approximately 300W. Last year, Graphcore also launched the Bow accelerator, the first wafer-on-wafer processor, designed in partnership with TSMC. The accelerator itself is the same as the GC200 mentioned above, but it is paired with a second die that greatly improves power and clock distribution across the entire GC200 chip. The result is a 40% performance improvement and a 16% performance-per-watt improvement.
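The two Bow figures can be combined to see what they imply about power draw. Performance per watt is performance divided by power, so the ratio of new to old power equals the performance ratio divided by the performance-per-watt ratio. The sketch below works through that arithmetic; `implied_power_ratio` is a hypothetical helper, and the result assumes both percentages refer to the same workload and the same baseline, which the article does not state.

```python
def implied_power_ratio(perf_gain: float, perf_per_watt_gain: float) -> float:
    """Return new_power / old_power implied by two relative gains.

    Gains are fractions (0.40 means +40%). Because
    perf_per_watt = perf / power, it follows that
    power_new / power_old = (1 + perf_gain) / (1 + perf_per_watt_gain).
    """
    if perf_per_watt_gain <= -1.0:
        raise ValueError("perf_per_watt_gain must be greater than -1.0")
    return (1.0 + perf_gain) / (1.0 + perf_per_watt_gain)


if __name__ == "__main__":
    # Figures quoted in the article: +40% performance, +16% performance per watt.
    ratio = implied_power_ratio(0.40, 0.16)
    print(f"implied power ratio: {ratio:.3f}")  # 1.40 / 1.16, about 1.207
```

Under that assumption, the quoted gains would correspond to roughly 21% more power for 40% more performance. This is an inference from the two percentages only, not a published power figure.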

In June 2021, Google announced details of its fourth-generation inference-only accelerator, TPUv4i. Nearly a year later, Google shared details of its fourth-generation training accelerator, TPUv4. Although the official announcement has few details, Google did share peak power and related performance figures. Like previous TPU versions, TPUv4 is available through Google Compute Cloud and is used for internal operations.

The following accelerators do not appear in Figure 2. Each has released some benchmark results, but some lack peak performance figures and others have not published peak power.

SambaNova released some benchmark results for its reconfigurable AI accelerator technology last year. This year it also announced a number of related technologies and published application papers in cooperation with Argonne National Laboratory. However, SambaNova has not provided any details from which the peak performance or power consumption of its solution could be estimated.

In May this year, Intel Habana Labs announced the second generation of its Goya inference accelerator and Gaudi training accelerator, named Greco and Gaudi2 respectively. Both perform several times better than the previous versions. Greco is a 75W single-wide PCIe card, while Gaudi2 is a 650W double-wide PCIe card (probably on a PCIe 5.0 slot). Habana published some benchmark comparisons of Gaudi2 against the NVIDIA A100 GPU, but did not disclose peak performance figures for either accelerator.

Esperanto has produced some demo chips for Samsung and other partners to evaluate. The chip is a 1000-core RISC-V processor with an AI tensor accelerator per core. Esperanto has released some performance figures, but it has not disclosed peak power or peak performance.

At Tesla AI Day, Tesla introduced its custom Dojo accelerator and some details of the system. The chips have a peak FP32 performance of 22.6 TF, but the peak power consumption of each chip has not been announced; those details may be revealed at a later date.

Last year Centaur Technology launched an x86 CPU with an integrated AI accelerator, which has a 4096-byte-wide SIMD unit and very competitive performance. However, Centaur's parent company, VIA Technologies, appears to have ended development of CNS processors after selling its U.S.-based processor engineering team to Intel.

There are several observations worth mentioning in Figure 2, as follows.

Int8 remains the default numeric precision for embedded, autonomous, and data center inference applications. This precision is sufficient for most AI/ML applications with a reasonable number of classes. Some accelerators also use fp16 or bf16. The survey also reports integer representations being used for model training.
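To illustrate why int8 is often sufficient for inference, the sketch below quantizes a list of floating-point weights to 8-bit integers and back. It uses symmetric per-tensor quantization, which is one common scheme chosen here purely for illustration; the article does not describe how any particular accelerator quantizes its models, and the function names `quantize_int8` and `dequantize` are hypothetical.

```python
from typing import List, Sequence, Tuple


def quantize_int8(values: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to integers in [-127, 127].

    Returns the quantized integers and the scale needed to recover
    approximate floating-point values.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    # Avoid dividing by zero when every value is zero (or the input is empty).
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: Sequence[int], scale: float) -> List[float]:
    """Map quantized integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.1, -0.75, 0.33, 2.0, -2.0]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("quantized:", q)
    print("scale:", scale)
    print("worst-case error:", worst)
```

With this scheme the rounding error per value is bounded by about half the scale, which is small relative to the largest weight. That bound is a property of this sketch, not a claim about any accelerator in the survey.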

For the very low-power chips, Figure 2 does not capture the features they offer beyond the machine learning accelerator itself. In the very low-power and embedded categories, it is common to release system-on-chip (SoC) solutions that include low-power CPU cores, audio and video analog-to-digital converters (ADCs), cryptographic engines, network interfaces, and so on. These additional features do not change the peak performance metrics, but they do directly affect the peak power reported for the chip, which matters when comparing them.

The embedded segment has not changed much, which suggests that its computing performance and peak power are sufficient to meet the needs of applications in this field.

Over the past few years, several companies, including Texas Instruments, have launched AI accelerators, and NVIDIA has released better-performing systems for automotive and robotics applications, as mentioned earlier. In the data center, the PCIe v5 specification is eagerly awaited as a way to break through the 300W power limit of PCIe v4.

Finally, high-end training systems are not only posting impressive performance numbers; the companies behind them are also releasing highly scalable interconnect technology that connects thousands of cards together. This is especially important for dataflow accelerators such as Cerebras, Graphcore, Groq, Tesla Dojo, and SambaNova, which are programmed via explicit/static programming or place-and-route onto the compute hardware. Such interconnects allow these accelerators to fit very large models such as transformers.

Please refer to the original text for more details.
