Recently, Meta has made another big move.

They released their latest chatbot, BlenderBot3, and are publicly collecting user interaction data as feedback.

It is said that you can chat with BlenderBot3 about anything, and that it is a state-of-the-art (SOTA) chatbot.

Is it really that smart?

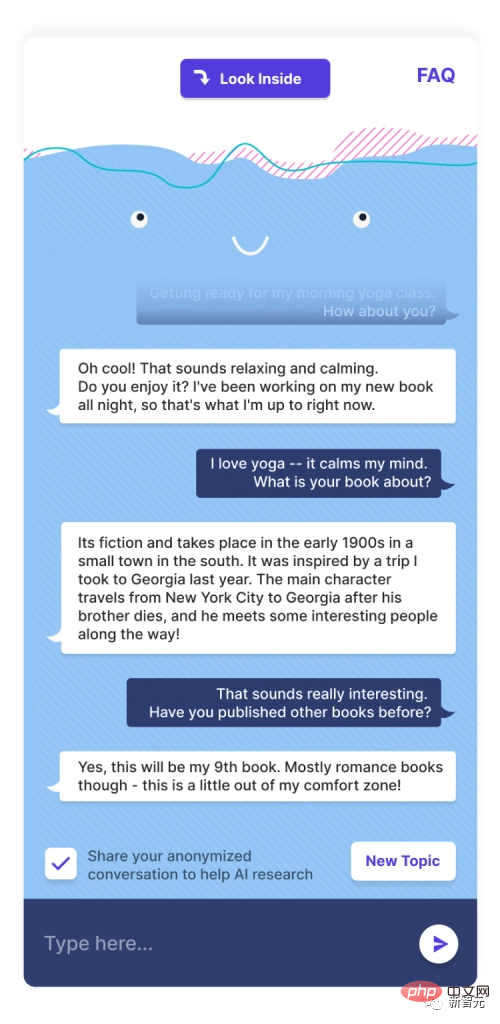

The picture above shows one user's chat log with BlenderBot. As you can see, it really is just casual small talk.

The user said he was going to practice yoga and asked BlenderBot what it was planning to do. The bot replied that it was writing its ninth book.

This latest chatbot was created by Meta’s Artificial Intelligence Research Laboratory and is Meta’s first chatbot with 175 billion parameters. The model, code, datasets, and other components are all open and available to everyone.

Meta said that on BlenderBot3, users can chat about any topic on the Internet.

BlenderBot3 applies two of Meta’s latest machine learning techniques, SeeKeR and Director, to build a dialogue model that lets the bot learn from interaction and feedback.

Kurt Shuster, a research engineer who participated in the development of BlenderBot3, said, "Meta is committed to publicly releasing all the data we collect while demoing this chatbot, in the hope that we can improve conversational artificial intelligence."

In terms of substance, BlenderBot3 is not entirely new. Its underlying model is still a large language model (LLM), a very powerful, though flawed, kind of text generation software.

Like all text generation software, BlenderBot3 was initially trained on huge text data sets. On these data sets, BlenderBot can mine various statistical patterns and then generate language.

This is just like GPT-3, whose flaws we have discussed before.

In addition to what is mentioned above, BlenderBot3 also has some highlights.

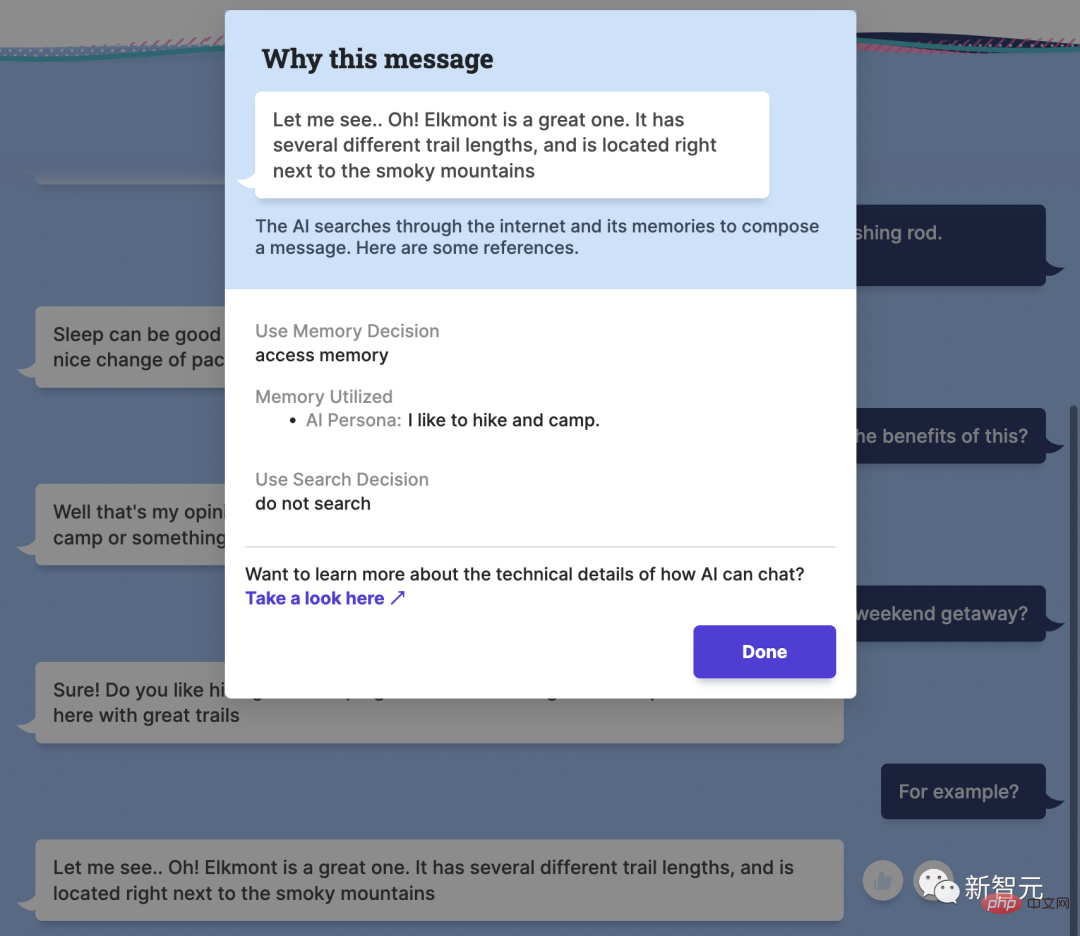

Unlike previous chatbots, when chatting with BlenderBot, users can click on the answer given by the robot to view the source of the sentence on the Internet. In other words, BlenderBot3 can cite sources.

However, there is a critical issue here. Once any chatbot is released to the public, anyone can interact with it. As the number of people testing it grows, some of them will inevitably try to corrupt it.

This is also the focus of the Meta team’s next research.

It’s not uncommon for chatbots to be corrupted.

In 2016, Microsoft released a chatbot called Tay on Twitter. Anyone who followed Tay at the time probably still remembers it.

After the public beta began, Tay began to learn from interactions with users. Not surprisingly, it didn’t take long before Tay, under the influence of a small group of troublemakers, began to output a series of remarks related to racism, anti-Semitism, and misogyny.

Microsoft saw that the situation was not good and took Tay offline in less than 24 hours.

Meta said that the AI world has come a long way since Tay met its Waterloo. BlenderBot has various safety safeguards in place that should keep Meta from following Microsoft's path.

Initial experiments also show that as more people interact with the model, the bot learns more from its conversations. Over time, BlenderBot3 should become safer and safer.

It seems that Meta is doing pretty well in this regard.

Mary Williamson, research engineering manager at Facebook Artificial Intelligence Research (FAIR), said, "The key difference between Tay and BlenderBot3 is that Tay was designed to learn in real time from user interactions, while BlenderBot is a static model."

This means that BlenderBot is able to remember what the user said in the conversation (it will even retain this information through browser cookies if the user exits the program and returns later), but this data will only be used to further improve the system later, not to change the model's behavior in real time.
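The contrast Williamson describes can be illustrated with a toy sketch. This is not Meta's or Microsoft's code: the classes `OnlineBot` and `StaticBot`, their methods, and the canned replies are all hypothetical, and the "model" is just a lookup table standing in for real weights. It only shows the difference between changing behavior immediately from user input and merely logging that input for later review.

```python
class OnlineBot:
    """Toy bot that learns in real time: users can overwrite its replies."""

    def __init__(self):
        self.replies = {"hello": "Hi there!"}

    def chat(self, message):
        return self.replies.get(message, "Tell me more.")

    def teach(self, message, reply):
        # The update takes effect immediately, for every later user.
        self.replies[message] = reply


class StaticBot:
    """Toy bot with frozen behavior: user input is logged, never applied live."""

    def __init__(self):
        self._replies = {"hello": "Hi there!"}  # fixed ahead of time
        self.session_memory = []                # what was said in this conversation
        self.feedback_log = []                  # kept for later offline review

    def chat(self, message):
        self.session_memory.append(message)
        return self._replies.get(message, "Tell me more.")

    def teach(self, message, reply):
        # Stored for a later improvement step; current behavior is unchanged.
        self.feedback_log.append((message, reply))


online, static = OnlineBot(), StaticBot()
online.teach("hello", "something offensive")
static.teach("hello", "something offensive")

print(online.chat("hello"))  # something offensive
print(static.chat("hello"))  # Hi there!
```

In this sketch a single malicious user changes what `OnlineBot` says to everyone, while `StaticBot` keeps its original reply and only records the attempt.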

Williamson said, "Today, most chatbots are task-oriented. Take the simplest customer service bot as an example. It looks intelligent, but it is really just a pre-programmed dialogue tree that gradually narrows down the user's needs and, in the end, still has to hand the user over to a human."
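To make the dialogue tree idea concrete, here is a minimal sketch of such a customer service bot. The tree contents, the node names, and the `run_dialogue` function are invented for illustration and do not come from any real product.

```python
# Hypothetical dialogue tree for a customer service bot.
# Each node has a prompt and maps allowed user answers to the next node.
TREE = {
    "start": {"prompt": "Is your issue about billing or shipping?",
              "next": {"billing": "billing", "shipping": "shipping"}},
    "billing": {"prompt": "Is it a refund or a wrong charge?",
                "next": {"refund": "human", "wrong charge": "human"}},
    "shipping": {"prompt": "Is the package late or damaged?",
                 "next": {"late": "human", "damaged": "human"}},
    "human": {"prompt": "Connecting you to a human agent.", "next": {}},
}


def run_dialogue(answers):
    """Walk the tree with a list of user answers; return the prompts shown."""
    node, shown = "start", []
    for answer in answers:
        shown.append(TREE[node]["prompt"])
        # Anything off-script falls straight through to a human.
        node = TREE[node]["next"].get(answer.strip().lower(), "human")
        if node == "human":
            break
    shown.append(TREE[node]["prompt"])
    return shown


for line in run_dialogue(["billing", "refund"]):
    print(line)
```

Every path through this tree is fixed in advance, and any answer the tree does not anticipate ends with a handoff to a human. That is the limitation open-ended chatbots are trying to move past.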

The real breakthrough is to let bots hold free-flowing conversations the way humans do. This is exactly what Meta wants to achieve. In addition to putting BlenderBot 3 online, Meta has also released the underlying code, training datasets, and smaller model variants. Researchers can request access through a form provided by Meta.
