3D reconstruction from 2D images has long been a central topic in computer vision.

Many models have been developed to tackle this problem.

Today, scholars from the National University of Singapore jointly published a paper and developed a new framework, Anything-3D, to solve this long-standing problem.

Paper address: https://arxiv.org/pdf/2304.10261.pdf

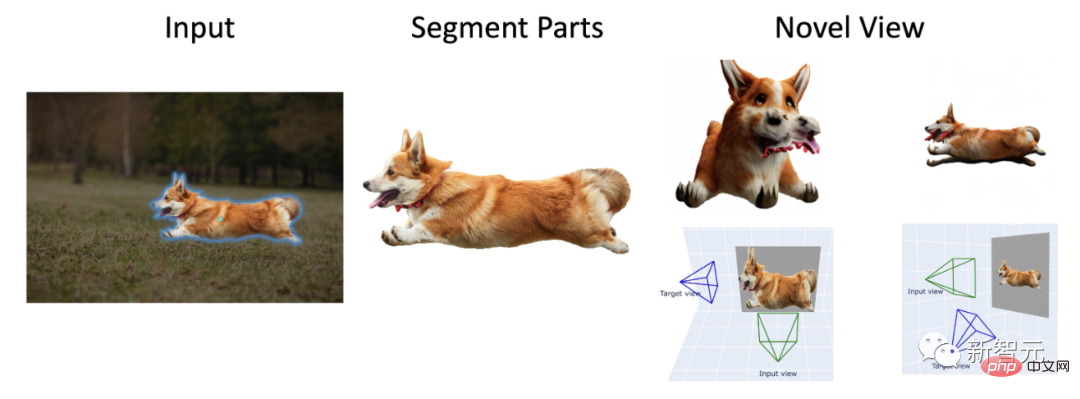

With the help of Meta's Segment Anything Model (SAM), Anything-3D directly brings any segmented object to life.

In addition, by using the Zero-1-to-3 model, you can get corgis from different angles.

Even 3D reconstruction of characters can be performed.

It can be said that this one is a real breakthrough.

Anything-3D!

In the real world, objects and environments are diverse and complex. Therefore, without restrictions, 3D reconstruction from a single RGB image faces many difficulties.

Here, researchers from the National University of Singapore combined a series of vision-language models with the SAM (Segment Anything) object segmentation model to build a versatile and reliable system: Anything-3D.

The purpose is to complete the task of 3D reconstruction under the condition of a single perspective.

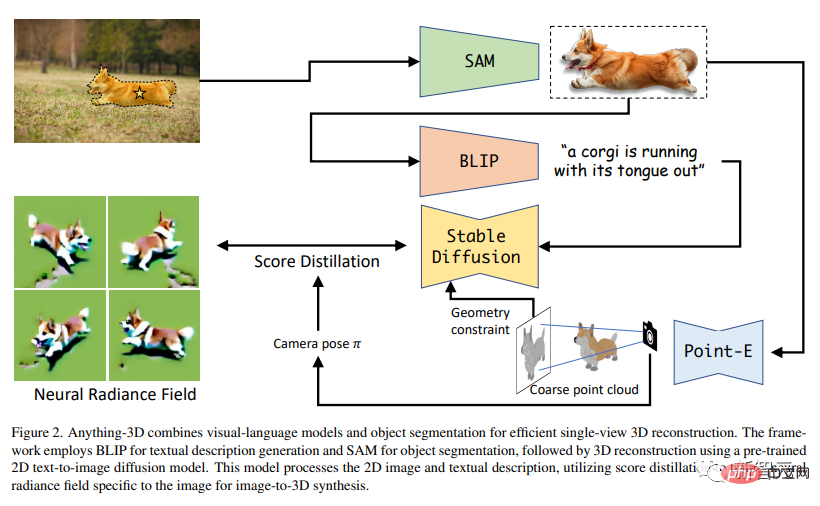

They use the BLIP model to generate a text description, use the SAM model to extract the object from the image, and then use the text-to-image diffusion model Stable Diffusion to lift the object into a NeRF (neural radiance field).

In subsequent experiments, Anything-3D demonstrated strong 3D reconstruction capabilities. Not only is it accurate, it also applies to a wide range of scenarios.

Anything-3D clearly addresses the limitations of existing methods. The researchers demonstrated the advantages of the new framework through testing and evaluation on various datasets.

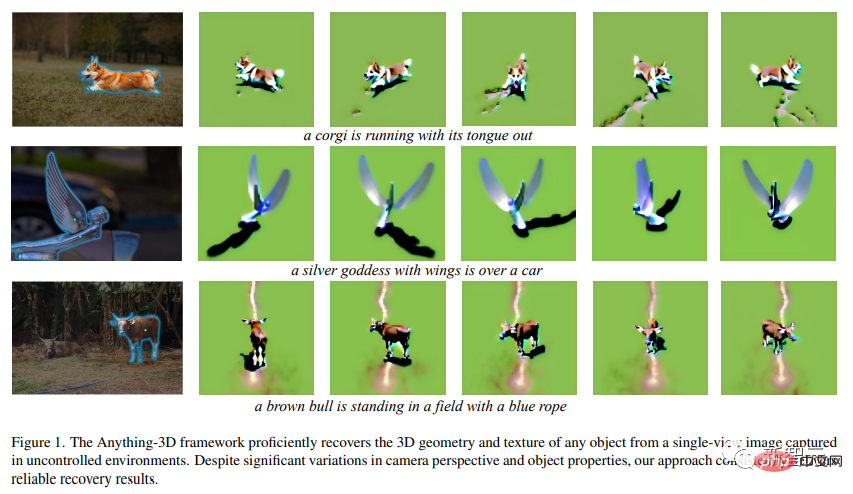

In the picture above, we can see "a corgi running with its tongue out", "a silver-winged goddess figure mounted on a luxury car", and "a brown cow in a field wearing a blue rope on its head."

This is a preliminary demonstration that the Anything-3D framework can skillfully restore single-view images taken in any environment into a 3D form and generate textures.

This new framework consistently provides highly accurate results despite large changes in camera perspective and object properties.

Reconstructing 3D objects from 2D images is a core topic in computer vision, with major implications for robotics, autonomous driving, augmented reality, virtual reality, and 3D printing.

Although good progress has been made in recent years, single-image object reconstruction in unstructured environments remains a compelling open problem that urgently needs solving.

Currently, the task is to generate a 3D representation of one or more objects from a single 2D image, such as a point cloud, a mesh, or a volumetric representation.

However, this problem is fundamentally ill-posed.

It is impossible to unambiguously determine the three-dimensional structure of an object due to the inherent ambiguity produced by two-dimensional projection.
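The ambiguity can be seen with a minimal pinhole-camera sketch. The `project` function and the sample points below are assumptions made for illustration and do not come from the paper: two different 3D points on the same viewing ray land on exactly the same 2D pixel, so depth cannot be recovered from the projection alone.

```python
def project(point, focal_length=1.0):
    """Pinhole projection of a camera-space 3D point (X, Y, Z) to 2D (x, y)."""
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    return (focal_length * X / Z, focal_length * Y / Z)


# Two distinct 3D points on the same ray through the camera center.
near = (1.0, 2.0, 4.0)
far = (2.0, 4.0, 8.0)  # twice as far away, scaled by the same factor

print(project(near))  # (0.25, 0.5)
print(project(far))   # (0.25, 0.5)
```

Any point of the form `(s, 2s, 4s)` with `s > 0` gives the same result, which is why a single view needs additional priors to pin down 3D structure.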

Coupled with the huge differences in shape, size, texture and appearance, reconstructing objects in their natural environment is very complex. In addition, objects in real-world images are often occluded, which hinders accurate reconstruction of occluded parts.

At the same time, variables such as lighting and shadows can also greatly affect the appearance of objects, and differences in angle and distance can also cause obvious changes in the two-dimensional projection.

Enough about the difficulties, Anything-3D is ready to play.

In the paper, the researchers describe this groundbreaking framework in detail. It integrates a vision-language model and an object segmentation model to turn 2D objects into 3D with ease.

The result is a powerful and highly adaptable system. Single-view reconstruction? Easy.

By combining the two models, the researchers say, it is possible to retrieve and determine the 3D texture and geometry of a given image.

Anything-3D uses the BLIP model (Bootstrapping Language-Image Pre-training) to generate a text description of the image, and then uses the SAM model to identify the region occupied by the object.

Next, the segmented object and the text description are used to perform the 3D reconstruction task.
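To make the hand-off from segmentation to reconstruction concrete, here is a small sketch of how a binary mask could be used to isolate an object before later stages see it. It uses plain nested lists instead of real image tensors, and `extract_object` is a hypothetical helper, not part of SAM or of the Anything-3D code.

```python
def extract_object(image, mask, background=0):
    """Keep pixels where the mask is set and crop to the mask's bounding box."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows or not cols:
        raise ValueError("mask is empty")
    top, bottom = rows[0], rows[-1]
    left, right = cols[0], cols[-1]
    return [
        [image[r][c] if mask[r][c] else background
         for c in range(left, right + 1)]
        for r in range(top, bottom + 1)
    ]


# A tiny 3x4 grayscale "image" and a mask marking the object pixels.
image = [
    [10, 11, 12, 13],
    [20, 21, 22, 23],
    [30, 31, 32, 33],
]
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 0],
]

crop = extract_object(image, mask)
print(crop)  # [[21, 22], [0, 32]]
```

Pixels inside the bounding box but outside the mask are filled with the background value, so only the object itself is passed on.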

In other words, the paper uses a pre-trained 2D text-to-image diffusion model to perform image-to-3D synthesis. In addition, the researchers use score distillation to train a NeRF specific to the image.
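The distillation step mentioned above is commonly known in the literature as score distillation sampling (SDS). As a reference point only, the gradient below is the form introduced with DreamFusion. This article does not state the exact objective used in Anything-3D, so the symbols here are assumptions for illustration:

$$
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}
= \mathbb{E}_{t,\epsilon}\left[\, w(t)\,\big(\hat{\epsilon}_\phi(x_t;\, y,\, t) - \epsilon\big)\,\frac{\partial x}{\partial \theta} \right]
$$

Here $\theta$ denotes the NeRF parameters, $x$ is an image rendered from the NeRF at a random viewpoint, $x_t$ is that image with noise $\epsilon$ added at timestep $t$, $y$ is the text condition, $\hat{\epsilon}_\phi$ is the noise predicted by the frozen diffusion model, and $w(t)$ is a timestep-dependent weight. With a latent diffusion model such as Stable Diffusion, $x$ would typically be replaced by its latent encoding.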

The figure above shows the entire 3D generation process. The 2D original image is in the upper left corner. It first goes through SAM to segment the corgi, then through BLIP to generate a text description, and finally score distillation is used to create a NeRF.
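The order of the stages can be summarized as a short data-flow sketch. Everything here is a placeholder: `reconstruct`, `Reconstruction`, and the three lambda stages are hypothetical stand-ins for the real SAM, BLIP, and distillation components, and only the sequencing reflects the description above.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Reconstruction:
    caption: str  # text description of the segmented object
    nerf: Any     # whatever the distillation stage returns


def reconstruct(image: Any,
                segment: Callable[[Any], Any],
                caption: Callable[[Any], str],
                distill: Callable[[Any, str], Any]) -> Reconstruction:
    """Run the three stages in order: segment, caption, then distill."""
    obj = segment(image)       # stage 1: isolate the object (SAM's role)
    text = caption(obj)        # stage 2: describe the object (BLIP's role)
    nerf = distill(obj, text)  # stage 3: optimize a 3D representation
    return Reconstruction(caption=text, nerf=nerf)


# Placeholder stages so the sketch runs without any model weights.
result = reconstruct(
    "corgi.png",
    segment=lambda img: f"object-from-{img}",
    caption=lambda obj: "a corgi running",
    distill=lambda obj, text: {"object": obj, "prompt": text},
)
print(result.caption)  # a corgi running
```

Passing the stages in as callables keeps the sketch independent of any particular model library; real implementations would be swapped in for the lambdas.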

Through rigorous experiments on different datasets, the researchers demonstrated the effectiveness and adaptability of this approach, which outperforms existing methods in accuracy, robustness, and generalization.

The researchers also conducted a comprehensive and in-depth analysis of existing challenges in the reconstruction of 3D objects in natural environments, and explored how the new framework can solve such problems.

Ultimately, by integrating the zero-shot vision and language understanding capabilities of foundation models, the new framework can reconstruct objects from various real-world images and generate accurate, complex, and widely applicable 3D representations.

It can be said that Anything-3D is a major breakthrough in the field of 3D object reconstruction.

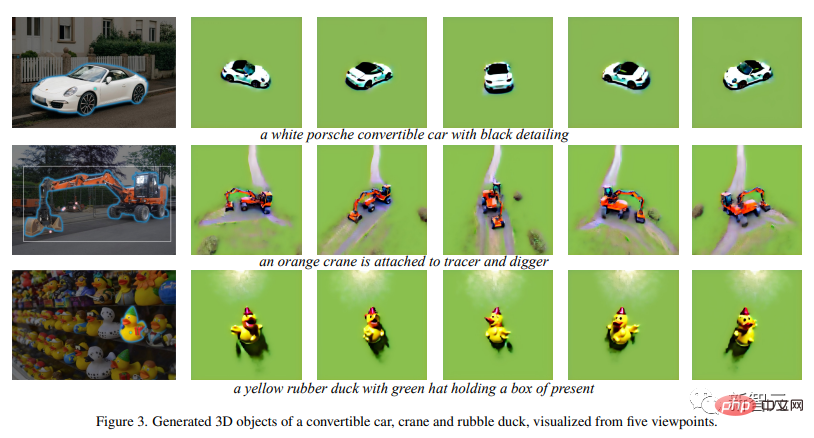

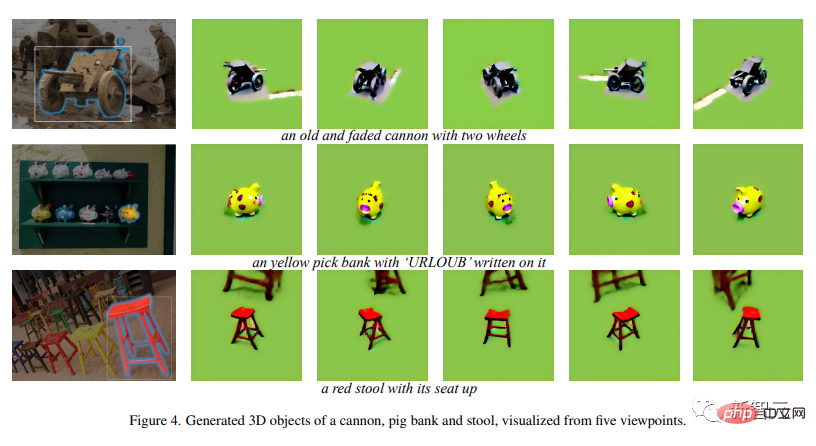

Here are more examples:

Porsche, bright orange excavator crane, little yellow rubber duck with green hat

A weathered old cannon, a cute little mini piggy bank, a cinnabar-red four-legged high stool

This new framework interactively identifies regions in single-view images and represents the 2D object with optimized text embeddings. Ultimately, a 3D-aware score distillation model is used to efficiently generate high-quality 3D objects.

In summary, Anything-3D demonstrates the potential of reconstructing natural 3D objects from single-view images.

The researchers said that the reconstruction quality of the new framework still has room for improvement, and that they are working continuously to improve generation quality.

In addition, the researchers said that quantitative evaluations on 3D datasets, such as novel view synthesis and reconstruction error, are not currently provided, but will be included in future iterations of the work.

Meanwhile, the researchers' ultimate goal is to extend the framework to more practical settings, including object recovery from sparse views.

About the author

Wang is currently a tenure-track assistant professor in the ECE Department of the National University of Singapore (NUS).

Before joining NUS, he was an Assistant Professor in the CS Department of Stevens Institute of Technology. Prior to joining Stevens, he served as a postdoc in Professor Thomas Huang's image formation group at the Beckman Institute at the University of Illinois at Urbana-Champaign.

Wang received his PhD from the Computer Vision Laboratory of the Ecole Polytechnique Fédérale de Lausanne (EPFL), supervised by Professor Pascal Fua, and received his Bachelor of Science with First Class Honors from the Department of Computer Science of the Hong Kong Polytechnic University in 2010.
