Translator | Zhu Xianzhong

Reviewer | Sun Shujuan

Because Python has many open source libraries available, it is an easy choice for developing motion detection. Motion detection already has many commercial applications: for example, it can be used to proctor online exams and for security in stores, banks, and similar places.

Python is an open source programming language with an extremely rich set of supporting libraries. A large number of applications have been built with it, and they serve a large number of users, which is one reason Python's adoption keeps growing. Its advantages are numerous: the syntax is simple, errors are easy to find, and debugging is quick, all of which make it user-friendly.

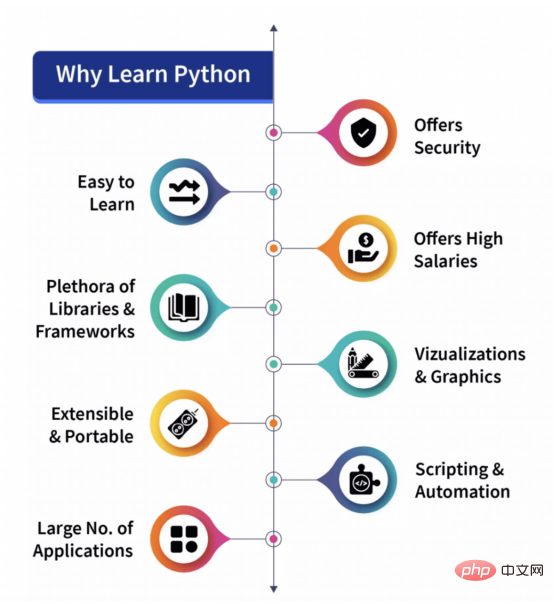

Why is it recommended that you learn Python? We can use the following diagram to explain simply:

Python was created by Guido van Rossum and first released in 1991; today it is maintained under the Python Software Foundation. Many versions have been released since then, of which Python 2 and Python 3 are the best known. Python 3 is now widely used, and its user base is growing rapidly. In this project, we will use Python 3 as the development language.

In physical terms, an object that is stationary and has no velocity is considered to be at rest; conversely, an object that is not completely stationary and is moving in some direction (left or right, forward or backward, up or down) is considered to be in motion. In this article, we will try to detect this behavior of objects.

Motion detection is already applied in many real-life situations, which demonstrates its practical value. A typical application, and the one we implement in this article, uses a webcam as a security guard, for example to invigilate online exams.

In this article, we will implement a script that uses the webcam of a desktop or laptop computer to detect object motion. The idea is to take two frames of video and look for differences between them. If the two frames differ, then something has evidently moved in front of the camera and produced the difference.
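The frame-comparison idea can be sketched without a camera. In the minimal example below, small nested lists of grayscale values stand in for real video frames; the function names `abs_diff`, `threshold`, and `has_motion`, the sample frames, and the `min_changed_pixels` parameter are assumptions made for illustration and are not part of the project code.

```python
def abs_diff(frame_a, frame_b):
    """Per-pixel absolute difference of two equally sized grayscale frames."""
    return [
        [abs(a - b) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


def threshold(frame, limit, max_value=255):
    """Binary threshold: pixels strictly above `limit` become `max_value`, all others 0."""
    return [[max_value if px > limit else 0 for px in row] for row in frame]


def has_motion(reference, current, limit=30, min_changed_pixels=1):
    """Report motion when enough pixels differ from the reference by more than `limit`."""
    mask = threshold(abs_diff(reference, current), limit)
    changed = sum(px > 0 for row in mask for px in row)
    return changed >= min_changed_pixels


# Hypothetical 3x3 frames: a reference, a nearly identical frame, and a changed frame.
reference = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
same_scene = [[12, 9, 10], [10, 11, 10], [10, 10, 13]]
moved = [[10, 10, 10], [10, 200, 210], [10, 10, 10]]

print(has_motion(reference, same_scene))
print(has_motion(reference, moved))
```

Small differences, such as sensor noise, stay below the limit and are ignored, while a large local change is reported as motion.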

Before implementing the code, let's look at the modules and libraries we will use to operate the webcam for motion detection. As mentioned, open source libraries play an important role in Python's popularity. The example project in this article needs two open source libraries: OpenCV and Pandas.

Both OpenCV and Pandas are free and open source libraries for Python; we will use them with Python 3.

OpenCV is a well-known open source library that can be used from several programming languages (such as C++ and Python) and is designed for image and video processing. Combined with Python libraries such as Pandas or NumPy, it lets us make full use of OpenCV's capabilities.

Pandas is an open source Python library that provides rich built-in tools for data analysis, which is why it is widely used in data science and data analytics. Its DataFrame data structure makes it convenient to store and manipulate tabular data in two dimensions.

Neither of these two libraries is built into Python, so we must install them before use. Apart from them, we will also use two other modules in our project: time and datetime.

Both modules are built into Python and do not need to be installed. They handle time-related and date-related functions, respectively.
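As a small illustration of how these two built-in modules work together, the sketch below takes two timestamps and measures the interval between them. The variable names and the 0.05 second pause are assumptions chosen for the example; in the project itself the timestamps come from detected motion.

```python
import time
from datetime import datetime, timedelta

# Take a timestamp, pause briefly, then take a second timestamp.
started = datetime.now()
time.sleep(0.05)
ended = datetime.now()

# Subtracting two datetime objects yields a timedelta.
elapsed = ended - started
print(f"interval: {elapsed.total_seconds():.2f} s")
```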

So far we have seen the libraries our code will use. Next, let's start from the assumption that a video is just a sequence of many still images, or frames, and that playing all those frames in order creates the video.

In this section, we first import the Pandas and OpenCV libraries. Then we import the time module and the datetime class from the datetime module.

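# Import the Pandas library (the package name is lowercase)
import pandas as panda
# Import the OpenCV library
import cv2
# Import the time module
import time
# Import the datetime class from the datetime module
from datetime import datetime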

In this section, we initialize some variables that we will use later. First, we define the initial state as "None", and then we store the tracked motion in another variable, motionTrackList.

In addition, we define a list, "motionTime", to store the times at which motion is detected, and we initialize the DataFrame using the Pandas module.

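# For the initial frame, set the initial state to None in the variable initialState
initialState = None
# List that stores the motion status of the two most recent frames
motionTrackList = [None, None]
# A new "time" list that stores the times at which motion is detected
motionTime = []
# Initialize the DataFrame variable "dataFrame" with Pandas, with Initial and Final columns
dataFrame = panda.DataFrame(columns = ["Initial", "Final"])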
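To see how a two-element list like motionTrackList can detect when motion starts and stops, here is a minimal sketch. The function name `record_transitions`, the sample flags, and the integer stand-ins for timestamps are assumptions made for illustration; they do not appear in the project code.

```python
def record_transitions(motion_flags, timestamps):
    """Return the timestamps at which the motion flag changes between 0 and 1."""
    track = [None, None]  # same starting shape as motionTrackList
    times = []
    for flag, stamp in zip(motion_flags, timestamps):
        # Keep only the previous and the current status.
        track.append(flag)
        track = track[-2:]
        # 0 followed by 1: motion has just started.
        if track[-2] == 0 and track[-1] == 1:
            times.append(stamp)
        # 1 followed by 0: motion has just ended.
        if track[-2] == 1 and track[-1] == 0:
            times.append(stamp)
    return times


# Hypothetical per-frame motion flags; plain integers stand in for datetime values.
flags = [0, 0, 1, 1, 1, 0, 0, 1, 0]
stamps = list(range(len(flags)))
times = record_transitions(flags, stamps)
print(times)
```

One consequence of starting from `[None, None]` is that a start is only recorded after at least one frame without motion has been seen, because `None` never compares equal to 0.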

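In this section, we implement the most critical part of the example project: the motion detection steps. Let's walk through them step by step: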

# Start the webcam with the cv2 module to capture video
video = cv2.VideoCapture(0)

# Capture frames from the video in an infinite loop
while True:
    # Read each image (frame) from the video with read()
    check, cur_frame = video.read()
    # Stop if no frame could be read (for example, the camera is unavailable)
    if not check:
        break
    # Start each frame with the motion variable set to zero (no motion)
    var_motion = 0
    # Create a gray frame from the color image
    gray_image = cv2.cvtColor(cur_frame, cv2.COLOR_BGR2GRAY)
    # Smooth the grayscale image with GaussianBlur so that changes are easier to find
    gray_frame = cv2.GaussianBlur(gray_image, (21, 21), 0)
    # On the first iteration initialState is None,
    # so assign gray_frame to initialState
    if initialState is None:
        initialState = gray_frame
        continue
    # Compute the difference between the static (initial) frame and the current gray frame
    differ_frame = cv2.absdiff(initialState, gray_frame)
    # Highlight the changes between the initial background and the current gray frame
    thresh_frame = cv2.threshold(differ_frame, 30, 255, cv2.THRESH_BINARY)[1]
    thresh_frame = cv2.dilate(thresh_frame, None, iterations = 2)
    # Find the contours of moving objects in the frame
    cont, _ = cv2.findContours(thresh_frame.copy(),
                               cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    for cur in cont:
        # Ignore small contours
        if cv2.contourArea(cur) < 10000:
            continue
        var_motion = 1
        (cur_x, cur_y, cur_w, cur_h) = cv2.boundingRect(cur)
        # Draw a green rectangle around the moving object
        cv2.rectangle(cur_frame, (cur_x, cur_y), (cur_x + cur_w, cur_y + cur_h), (0, 255, 0), 3)
    # Append the motion status of this frame and keep only the last two
    motionTrackList.append(var_motion)
    motionTrackList = motionTrackList[-2:]
    # Record the start time of the motion
    if motionTrackList[-1] == 1 and motionTrackList[-2] == 0:
        motionTime.append(datetime.now())
    # Record the end time of the motion
    if motionTrackList[-1] == 0 and motionTrackList[-2] == 1:
        motionTime.append(datetime.now())
    # Show the captured image in grayscale
    cv2.imshow("The image captured in the Gray Frame is shown below: ", gray_frame)
    # Show the difference between the initial static frame and the current frame
    cv2.imshow("Difference between the initial static frame and the current frame: ", differ_frame)
    # Show the black-and-white threshold image from the video
    cv2.imshow("Threshold Frame created from the PC or Laptop Webcam is: ", thresh_frame)
    # Show the color frame with rectangles around moving objects
    cv2.imshow("From the PC or Laptop webcam, this is one example of the Colour Frame:", cur_frame)
    # Poll the keyboard for a key press
    wait_key = cv2.waitKey(1)
    # Pressing the 'm' key ends the whole process
    if wait_key == ord('m'):
        # If something is moving on screen, record the end time in motionTime
        if var_motion == 1:
            motionTime.append(datetime.now())
        break
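The contour step in the loop relies on OpenCV to find changed regions, discard small ones, and return a bounding rectangle. The sketch below illustrates the same idea in plain Python on a tiny binary mask, assuming 4-connected flood fill and using the pixel count as a stand-in for contour area; this is not how OpenCV computes contours internally, and the `find_regions` function and the sample mask are hypothetical.

```python
from collections import deque


def find_regions(mask, min_area=1):
    """Return (x, y, w, h, area) for each 4-connected nonzero region of at least min_area pixels."""
    height = len(mask)
    width = len(mask[0]) if mask else 0
    seen = [[False] * width for _ in range(height)]
    regions = []
    for y in range(height):
        for x in range(width):
            if mask[y][x] == 0 or seen[y][x]:
                continue
            # Breadth-first flood fill collects every pixel of this region.
            queue = deque([(x, y)])
            seen[y][x] = True
            xs, ys = [], []
            while queue:
                cx, cy = queue.popleft()
                xs.append(cx)
                ys.append(cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    inside = 0 <= nx < width and 0 <= ny < height
                    if inside and mask[ny][nx] != 0 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            area = len(xs)
            # Skip regions that are too small, like the contourArea check in the loop.
            if area < min_area:
                continue
            left, top = min(xs), min(ys)
            regions.append((left, top, max(xs) - left + 1, max(ys) - top + 1, area))
    return regions


# Hypothetical thresholded mask: one 2x2 blob and one isolated noise pixel.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 255, 255, 0, 0, 0],
    [0, 255, 255, 0, 0, 255],
    [0, 0, 0, 0, 0, 0],
]
print(find_regions(mask, min_area=3))
```

With `min_area=3` only the 2x2 blob survives, which mirrors how the area check in the loop filters out small changes before a rectangle is drawn.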

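After the loop ends, we add the data from the motionTime list to the DataFrame and write it to a CSV file, and finally close the video. This part of the code is shown below: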

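# Finally, add the motion times to the DataFrame
# (stopping at len - 1 skips a trailing start time that has no matching end time)
for a in range(0, len(motionTime) - 1, 2):
    # DataFrame.append was removed in pandas 2.0, so each row is added with .loc instead
    dataFrame.loc[len(dataFrame)] = [motionTime[a], motionTime[a + 1]]
# Create a CSV file that records all the motion
dataFrame.to_csv("EachMovement.csv")
# Release the video capture
video.release()
# Close (destroy) all windows opened by OpenCV
cv2.destroyAllWindows()

At this point, we have finished writing all of the code. Now, let's recap the whole process.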
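As an aside on the export step, the pairing of start and end times can also be sketched with only the standard library. The example below assumes a flat list that alternates start and end times, like motionTime; the helper names `pair_intervals` and `intervals_to_csv` and the sample timestamps are hypothetical, and the text is written to an in-memory buffer rather than to EachMovement.csv.

```python
import csv
import io
from datetime import datetime, timedelta


def pair_intervals(times):
    """Group [start, end, start, end, ...] into (start, end) pairs; an unmatched trailing start is dropped."""
    return list(zip(times[0::2], times[1::2]))


def intervals_to_csv(times):
    """Render the paired intervals as CSV text with Initial and Final columns."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Initial", "Final"])
    for start, end in pair_intervals(times):
        writer.writerow([start.isoformat(), end.isoformat()])
    return buffer.getvalue()


# Hypothetical timestamps: one complete interval plus a start with no matching end.
base = datetime(2024, 1, 1, 12, 0, 0)
sample_times = [base, base + timedelta(seconds=3), base + timedelta(seconds=10)]
csv_text = intervals_to_csv(sample_times)
print(csv_text)
```

Dropping an unmatched trailing start avoids an index error when the recording stops before the last motion has ended.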

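First, we capture video with the device's webcam, take the initial frame of the input video as a reference, and check each subsequent frame against it. If a frame differs from the first frame, motion is present, and it is marked with a green rectangle.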

Now, let's put all of the code snippets above together, as shown below:

#导入Pandas库

import Pandas as panda

# 导入OpenCV库

import cv2

#导入时间模块

import time

#从datetime 模块导入datetime 函数

from datetime import datetime

# 对于初始帧,以变量initialState的形式将初始状态指定为None

initialState = None

# 帧中检测到任何运动时存储所有轨迹的列表

motionTrackList= [ None, None ]

# 一个新的“时间”列表,用于存储检测到移动时的时间

motionTime = []

# 使用带有初始列和最终列的Panda库初始化数据帧变量“DataFrame”

dataFrame = panda.DataFrame(columns = ["Initial", "Final"])

# 使用cv2模块启动网络摄像头以捕获视频

video = cv2.VideoCapture(0)

# 使用无限循环从视频中捕获帧

while True:

# 使用read功能从视频中读取每个图像或帧

check, cur_frame = video.read()

#将'motion'变量定义为等于零的初始帧

var_motion = 0

# 从彩色图像创建灰色帧

gray_image = cv2.cvtColor(cur_frame, cv2.COLOR_BGR2GRAY)

# 从灰度图像中使用GaussianBlur函数找到变化部分

gray_frame = cv2.GaussianBlur(gray_image, (21, 21), 0)

# 在第一次迭代时进行条件检查

# 如果为None,则把grayFrame赋值给变量initalState

if initialState is None:

initialState = gray_frame

continue

# 计算静态(或初始)帧与我们创建的灰色帧之间的差异

differ_frame = cv2.absdiff(initialState, gray_frame)

# 静态或初始背景与当前灰色帧之间的变化将突出显示

thresh_frame = cv2.threshold(differ_frame, 30, 255, cv2.THRESH_BINARY)[1]

thresh_frame = cv2.dilate(thresh_frame, None, iterations = 2)

#对于帧中的移动对象,查找轮廓

cont,_ = cv2.findContours(thresh_frame.copy(),

cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

for cur in cont:

if cv2.contourArea(cur) < 10000:

continue

var_motion = 1

(cur_x, cur_y,cur_w, cur_h) = cv2.boundingRect(cur)

# 在移动对象周围创建一个绿色矩形

cv2.rectangle(cur_frame, (cur_x, cur_y), (cur_x + cur_w, cur_y + cur_h), (0, 255, 0), 3)

# 从帧中添加运动状态

motionTrackList.append(var_motion)

motionTrackList = motionTrackList[-2:]

# 添加运动的开始时间

if motionTrackList[-1] == 1 and motionTrackList[-2] == 0:

motionTime.append(datetime.now())

# 添加运动的结束时间

if motionTrackList[-1] == 0 and motionTrackList[-2] == 1:

motionTime.append(datetime.now())

# 在显示捕获图像的灰度级中

cv2.imshow("The image captured in the Gray Frame is shown below: ", gray_frame)

# 显示初始静态帧和当前帧之间的差异

cv2.imshow("Difference between theinital static frame and the current frame: ", differ_frame)

# 在框架屏幕上显示视频中的黑白图像

cv2.imshow("Threshold Frame created from the PC or Laptop Webcam is: ", thresh_frame)

#通过彩色框显示物体的轮廓

cv2.imshow("From the PC or Laptop webcam, this is one example of the Colour Frame:", cur_frame)

# 创建处于等待状态的键盘按键

wait_key = cv2.waitKey(1)

# 借助'm'键结束我们系统的整个进行

if wait_key == ord('m'):

# 当屏幕上有物体运行时把运动变量值添加到列表motiontime中

if var_motion == 1:

motionTime.append(datetime.now())

break

# 最后,我们在数据帧中添加运动时间

for a in range(0, len(motionTime), 2):

dataFrame = dataFrame.append({"Initial" : time[a], "Final" : motionTime[a + 1]}, ignore_index = True)

# 记录下所有运行,并创建到一个CSV文件中

dataFrame.to_csv("EachMovement.csv")

# 释放视频内存

video.release()

#现在,在openCV的帮助下关闭或销毁所有打开的窗口

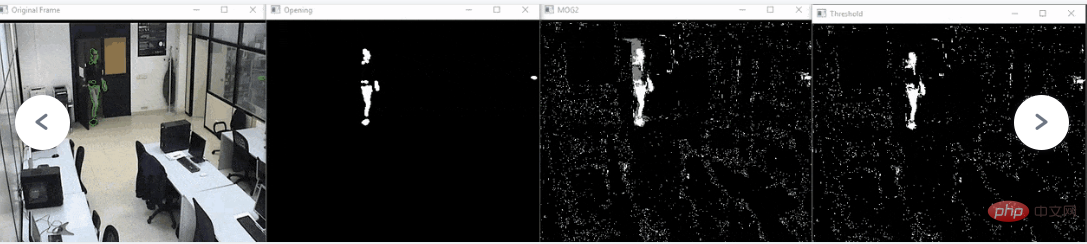

cv2.destroyAllWindows()运行上述代码后得到的结果与下面看到的结果类似。

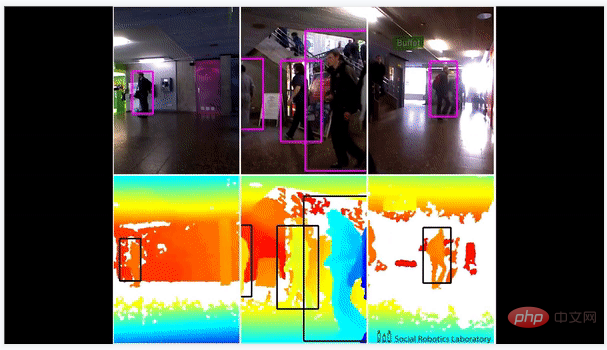

In this animation, we can see that the man's movements in the video have been tracked, and the output changes accordingly.

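In this code, however, tracking is done with a rectangle drawn around the moving object, similar to what the animation below shows. One interesting point is that this video is footage from an actual security camera on which the detection was run.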

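Finally, to summarize: the main idea of the motion detection presented in this article is that every video is just a combination of many still images (called frames), and we detect motion by examining the differences between frames.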

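Zhu Xianzhong is a 51CTO community editor, a 51CTO expert blogger and lecturer, a computer science teacher at a university in Weifang, and a veteran freelance programmer. Early in his career he focused on various Microsoft technologies (he has written three technical books related to ASP.NET AJX and Cocos 2d-X). For more than ten years he has devoted himself to the open source world (he is familiar with popular full-stack web development technologies), and he has a working knowledge of IoT development based on OneNet/AliOS+Arduino/ESP32/Raspberry Pi and of big data development with Scala+Hadoop+Spark+Flink.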

Original title: How to Perform Motion Detection Using Python, by Vaishnavi Amira Yada
