In this article, we introduce decision tree and random forest models in detail. We also show which hyperparameters of decision trees and random forests have a significant impact on their performance, so that we can find a good balance between underfitting and overfitting. After covering the theory behind decision trees and random forests, we implement them using Scikit-Learn.

Decision trees are an important class of algorithms for predictive modeling in machine learning. The classic decision tree algorithm has been around for decades, and modern variants such as random forests are among the most powerful techniques available.

Typically, such algorithms are called "decision trees", but on some platforms such as R, they are called CART (Classification and Regression Trees). The CART algorithm provides the basis for important algorithms such as bagged decision trees, random forests, and boosted decision trees.

Unlike linear models, decision trees are non-parametric models: they are not governed by a fixed mathematical decision function and have no weights or intercepts to optimize. In effect, a decision tree partitions the feature space by splitting on one feature at a time.
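To make the idea of splitting the space concrete, here is a minimal sketch on a made-up one-dimensional dataset. The names `X_toy`, `y_toy`, and `toy_tree` are illustrative assumptions and are not part of the attrition example used later.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier, export_text

# Made-up 1-D data: small values belong to class 0, large values to class 1
X_toy = np.array([[1.0], [2.0], [3.0], [4.0], [6.0], [7.0], [8.0], [9.0]])
y_toy = np.array([0, 0, 0, 0, 1, 1, 1, 1])

toy_tree = DecisionTreeClassifier(random_state=0).fit(X_toy, y_toy)

# The fitted tree stores a split threshold per node; index 0 is the root
root_threshold = toy_tree.tree_.threshold[0]

# Text view of the learned rules: no weights, only "x <= threshold" tests
print(export_text(toy_tree, feature_names=["x"]))
print(f"Root split: x <= {root_threshold:.1f}")
```

The model here is nothing more than a threshold on `x`, which is what "no weights or intercepts" means in practice.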

The representation of the CART model is a binary tree, the same binary tree known from algorithms and data structures. Each internal node, starting with the root, represents a single input variable (x) and a split point on that variable (assuming the variable is numeric).

The leaf nodes of the tree contain an output variable (y), which is used to make predictions. Given a new input, the tree is traversed from the root node: at each node the input is compared against the split point to choose a branch, until a leaf is reached.
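The traversal described above can be sketched with a small hand-built tree. The `Node` class, the `predict_one` helper, and the thresholds below are hypothetical and only illustrate the data structure; Scikit-Learn stores its trees differently.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Node:
    feature: Optional[int] = None      # index of the input variable tested here
    threshold: Optional[float] = None  # split point on that variable
    left: Optional["Node"] = None      # branch taken when x[feature] <= threshold
    right: Optional["Node"] = None     # branch taken otherwise
    value: Optional[str] = None        # prediction; set only on leaf nodes


def predict_one(node: Node, x: Sequence[float]) -> str:
    """Walk from the root to a leaf and return the leaf's prediction."""
    while node.value is None:
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.value


# Hypothetical tree: the root tests feature 0, its right child tests feature 1
toy_root = Node(
    feature=0, threshold=30.0,
    left=Node(value="leaves"),
    right=Node(feature=1, threshold=5000.0,
               left=Node(value="leaves"),
               right=Node(value="stays")),
)

print(predict_one(toy_root, [25.0, 9000.0]))
print(predict_one(toy_root, [40.0, 8000.0]))
```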

Some advantages of decision trees are:

Disadvantages of decision trees include:

Random forest is one of the most popular and powerful machine learning algorithms. It is an ensemble machine learning algorithm built on Bootstrap Aggregation, also called bagging.

To improve the performance of decision trees, we can use many trees with random feature samples.
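As a rough illustration of bagging with random feature samples, the sketch below trains several trees on bootstrap samples and combines them by majority vote. It uses synthetic data from `make_classification`; the names `X_demo`, `y_demo`, `n_trees`, and `bagged_pred` are assumptions made for this example, and `RandomForestClassifier` handles all of this internally.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)
X_demo, y_demo = make_classification(n_samples=300, n_features=8, random_state=0)

n_trees = 25  # odd, so a binary majority vote cannot tie
trees = []
for _ in range(n_trees):
    # Bootstrap sample: draw row indices with replacement
    idx = rng.integers(0, len(X_demo), size=len(X_demo))
    # max_features="sqrt" makes every split consider a random subset of features
    tree = DecisionTreeClassifier(
        max_features="sqrt", random_state=int(rng.integers(0, 2**31 - 1))
    )
    tree.fit(X_demo[idx], y_demo[idx])
    trees.append(tree)

# Majority vote over the 0/1 predictions of all trees
votes = np.stack([tree.predict(X_demo) for tree in trees])  # (n_trees, n_samples)
bagged_pred = (votes.mean(axis=0) >= 0.5).astype(int)

print(f"Accuracy on the training data: {(bagged_pred == y_demo).mean():.3f}")
```

The accuracy printed here is measured on the same data the trees were trained on, so it says nothing about generalization; the point is only to show the mechanics of sampling and voting.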

We will use a decision tree and a random forest to predict employee attrition.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline

sns.set_style("whitegrid")

plt.style.use("fivethirtyeight")

df = pd.read_csv("WA_Fn-UseC_-HR-Employee-Attrition.csv")

from sklearn.preprocessing import LabelEncoder

from sklearn.model_selection import train_test_split

df.drop(['EmployeeCount', 'EmployeeNumber', 'Over18', 'StandardHours'], axis="columns", inplace=True)

categorical_col = []

for column in df.columns:
    # Treat text columns with at most 50 distinct values as categorical
    if df[column].dtype == object and len(df[column].unique()) <= 50:
        categorical_col.append(column)

df['Attrition'] = df.Attrition.astype("category").cat.codes

categorical_col.remove('Attrition')

label = LabelEncoder()

for column in categorical_col:
    df[column] = label.fit_transform(df[column])

X = df.drop('Attrition', axis=1)

y = df.Attrition

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

from sklearn.metrics import accuracy_score, confusion_matrix, classification_report

def print_score(clf, X_train, y_train, X_test, y_test, train=True):
    """Print the accuracy and confusion matrix for the training or the test split."""
    if train:
        pred = clf.predict(X_train)
        print("Train Result:\n================================================")
        print(f"Accuracy Score: {accuracy_score(y_train, pred) * 100:.2f}%")
        print("_______________________________________________")
        print(f"Confusion Matrix: \n {confusion_matrix(y_train, pred)}\n")
    else:
        pred = clf.predict(X_test)
        print("Test Result:\n================================================")
        print(f"Accuracy Score: {accuracy_score(y_test, pred) * 100:.2f}%")
        print("_______________________________________________")
        print(f"Confusion Matrix: \n {confusion_matrix(y_test, pred)}\n")

from sklearn.tree import DecisionTreeClassifier

tree_clf = DecisionTreeClassifier(random_state=42)

tree_clf.fit(X_train, y_train)

print_score(tree_clf, X_train, y_train, X_test, y_test, train=True)

print_score(tree_clf, X_train, y_train, X_test, y_test, train=False)

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import GridSearchCV

params = {
    "criterion": ("gini", "entropy"),
    "splitter": ("best", "random"),
    "max_depth": list(range(1, 20)),
    "min_samples_split": [2, 3, 4],
    "min_samples_leaf": list(range(1, 20)),
}

tree_clf = DecisionTreeClassifier(random_state=42)

tree_cv = GridSearchCV(tree_clf, params, scoring="accuracy", n_jobs=-1, verbose=1, cv=3)

tree_cv.fit(X_train, y_train)

best_params = tree_cv.best_params_

print(f"Best parameters: {best_params}")

tree_clf = DecisionTreeClassifier(**best_params)

tree_clf.fit(X_train, y_train)

print_score(tree_clf, X_train, y_train, X_test, y_test, train=True)

print_score(tree_clf, X_train, y_train, X_test, y_test, train=False)

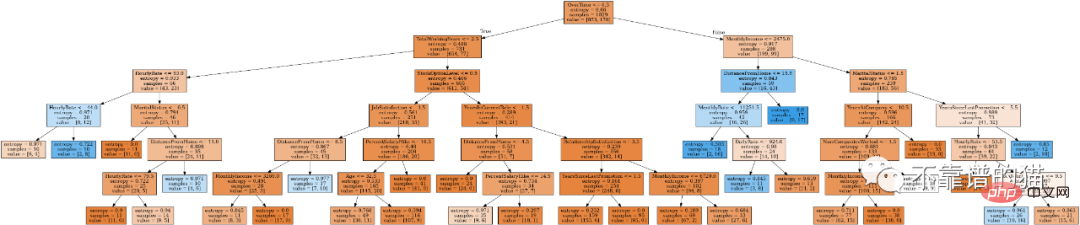

from IPython.display import Image

from io import StringIO  # standard library; the six compatibility package is not needed

from sklearn.tree import export_graphviz

import pydot

features = list(df.columns)

features.remove("Attrition")

dot_data = StringIO()

export_graphviz(tree_clf, out_file=dot_data, feature_names=features, filled=True)

graph = pydot.graph_from_dot_data(dot_data.getvalue())

Image(graph[0].create_png())

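A random forest is a meta-estimator that fits multiple decision tree classifiers on different sub-samples of the dataset and uses averaging to improve predictive accuracy and control overfitting.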
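The averaging step can be checked directly. The sketch below, on synthetic data with illustrative names (`X_demo`, `y_demo`, `forest`), compares the forest's predicted class probabilities with the mean of the probabilities predicted by its individual trees.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X_demo, y_demo = make_classification(n_samples=200, n_features=6, random_state=0)
forest = RandomForestClassifier(n_estimators=20, random_state=0).fit(X_demo, y_demo)

# Class probabilities from every fitted tree: shape (n_trees, n_samples, n_classes)
per_tree = np.stack([tree.predict_proba(X_demo) for tree in forest.estimators_])
manual_avg = per_tree.mean(axis=0)

# The forest's own probabilities should match the average over its trees
print(np.allclose(manual_avg, forest.predict_proba(X_demo)))
```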

Random forest algorithm parameters:

from sklearn.ensemble import RandomForestClassifier

rf_clf = RandomForestClassifier(n_estimators=100)

rf_clf.fit(X_train, y_train)

print_score(rf_clf, X_train, y_train, X_test, y_test, train=True)

print_score(rf_clf, X_train, y_train, X_test, y_test, train=False)

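The main parameter to tune for a random forest is n_estimators. In general, the more trees in the forest, the better the generalization performance, but more trees also slow down fitting and prediction.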
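One way to see this trade-off is to fit forests of different sizes and record both test accuracy and fit time. This is a sketch on synthetic data, not on the attrition dataset; the tree counts and the names `X_tr`, `X_te`, and `results` are arbitrary choices for illustration, and the timings depend on the machine.

```python
import time

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X_demo, y_demo = make_classification(n_samples=500, n_features=10, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.3, random_state=0
)

results = []
for n in (10, 50, 200):
    start = time.perf_counter()
    model = RandomForestClassifier(n_estimators=n, random_state=0).fit(X_tr, y_tr)
    fit_seconds = time.perf_counter() - start
    # Store (number of trees, test accuracy, fit time in seconds)
    results.append((n, model.score(X_te, y_te), fit_seconds))

for n, acc, secs in results:
    print(f"n_estimators={n:4d}  test accuracy={acc:.3f}  fit time={secs:.2f}s")
```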

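We can also tune the parameters that control the depth of each tree in the forest. Two parameters are especially important: max_depth and max_leaf_nodes. In practice, max_depth tends to force more symmetric trees, while max_leaf_nodes limits the maximum number of leaf nodes.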
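The difference between the two limits can be inspected on the fitted trees. The following sketch uses synthetic data and arbitrary limits (`max_depth=3`, `max_leaf_nodes=8`) chosen only for illustration; the names `depth_limited` and `leaf_limited` are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X_demo, y_demo = make_classification(n_samples=500, n_features=10, random_state=0)

depth_limited = RandomForestClassifier(
    n_estimators=20, max_depth=3, random_state=0
).fit(X_demo, y_demo)
leaf_limited = RandomForestClassifier(
    n_estimators=20, max_leaf_nodes=8, random_state=0
).fit(X_demo, y_demo)

# Report the deepest tree and the largest leaf count in each forest
for name, forest in (("max_depth=3", depth_limited),
                     ("max_leaf_nodes=8", leaf_limited)):
    depths = [tree.get_depth() for tree in forest.estimators_]
    leaves = [tree.get_n_leaves() for tree in forest.estimators_]
    print(f"{name}: depth up to {max(depths)}, leaves up to {max(leaves)}")
```

A depth limit of 3 caps each tree at 8 leaves, while a leaf limit of 8 lets a tree grow deeper along the branches where the splits help most.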

n_estimators = [100, 500, 1000, 1500]

# 'auto' was removed in scikit-learn 1.3; for classifiers it was equivalent to 'sqrt'
max_features = ['sqrt', 'log2']

max_depth = [2, 3, 5]

max_depth.append(None)

min_samples_split = [2, 5, 10]

min_samples_leaf = [1, 2, 4, 10]

bootstrap = [True, False]

params_grid = {
    'n_estimators': n_estimators,
    'max_features': max_features,
    'max_depth': max_depth,
    'min_samples_split': min_samples_split,
    'min_samples_leaf': min_samples_leaf,
    'bootstrap': bootstrap,
}

rf_clf = RandomForestClassifier(random_state=42)

rf_cv = GridSearchCV(rf_clf, params_grid, scoring="f1", cv=3, verbose=2, n_jobs=-1)

rf_cv.fit(X_train, y_train)

best_params = rf_cv.best_params_

print(f"Best parameters: {best_params}")

rf_clf = RandomForestClassifier(**best_params)

rf_clf.fit(X_train, y_train)

print_score(rf_clf, X_train, y_train, X_test, y_test, train=True)

print_score(rf_clf, X_train, y_train, X_test, y_test, train=False)

This article mainly covered the following:
