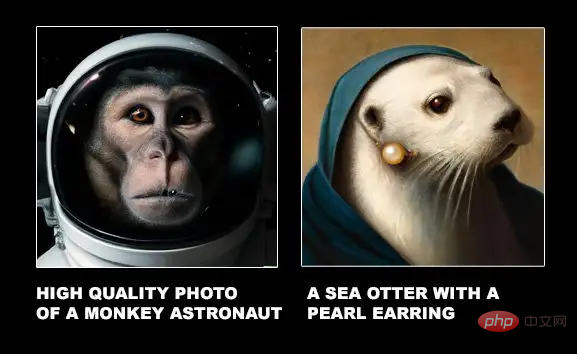

When DALL-E 2 was first released, the paintings it generated reproduced the input text almost perfectly. Its high resolution and powerful visual imagination led many netizens to call it "too cool".

But a recent research paper from Harvard University shows that although the images generated by DALL-E 2 are exquisite, the model may simply be gluing the entities in the text together, without even understanding the spatial relationships the text expresses!

Paper link: https://arxiv.org/pdf/2208.00005.pdf

Data link: https://osf.io/sm68h/

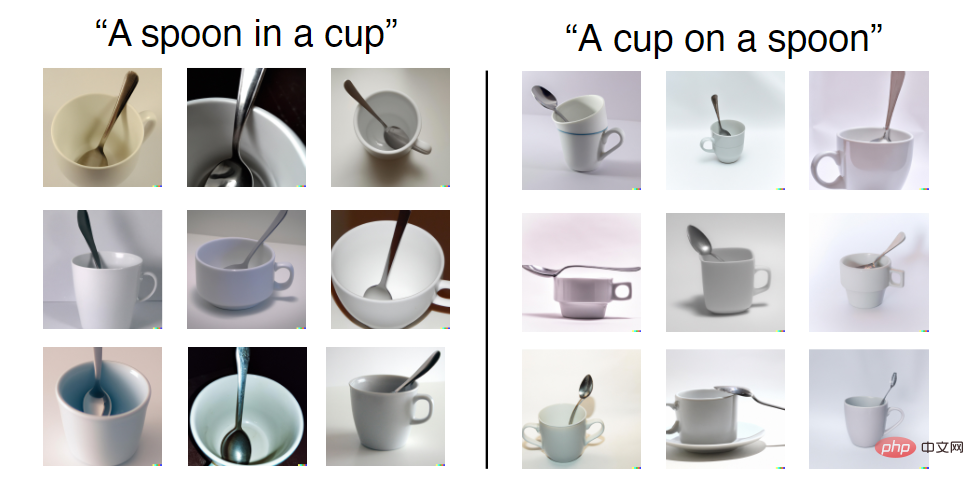

For example, given a text prompt of "A cup on a spoon", you can see that some of the images generated by DALL-E 2 do not satisfy the "on" relationship.

In the training set, however, the cup-and-spoon combinations that DALL-E 2 is likely to have seen are mostly "in" relations, while "on" is relatively rare, so its accuracy in generating the two relations also differs.

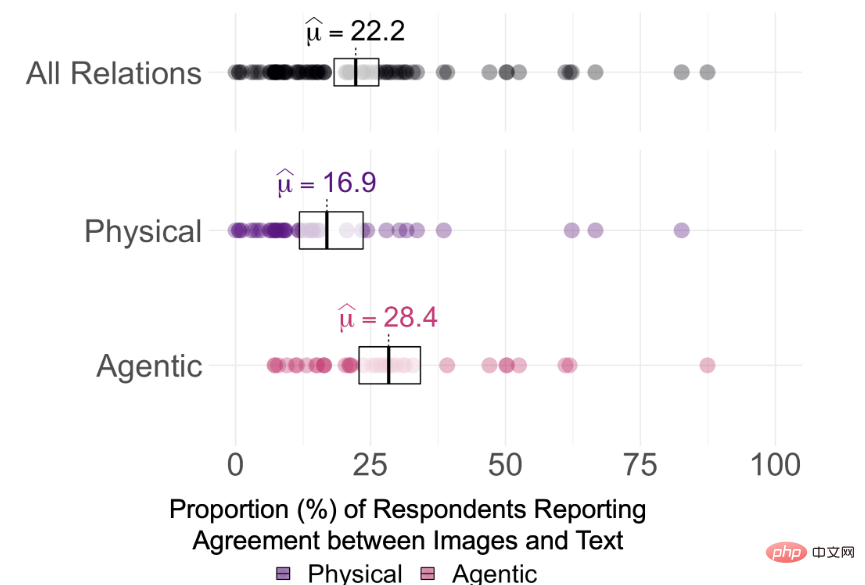

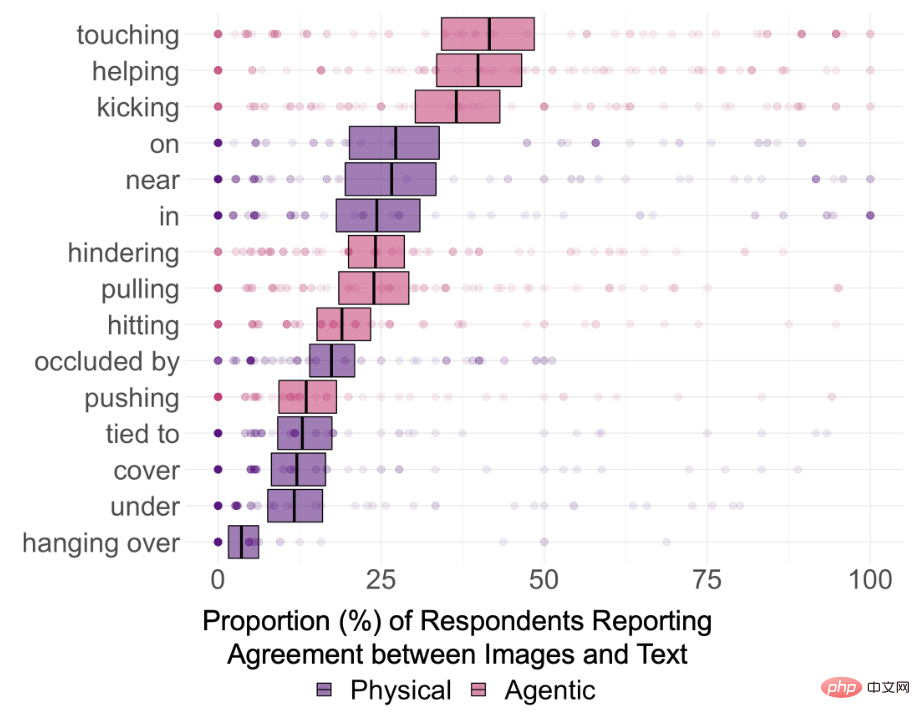

To explore whether DALL-E 2 really understands the semantic relations in the text, the researchers selected 15 relations: 8 physical relations (in, on, under, covering, near, occluded by, hanging over and tied to) and 7 agentic relations (pushing, pulling, touching, hitting, kicking, helping and hiding).

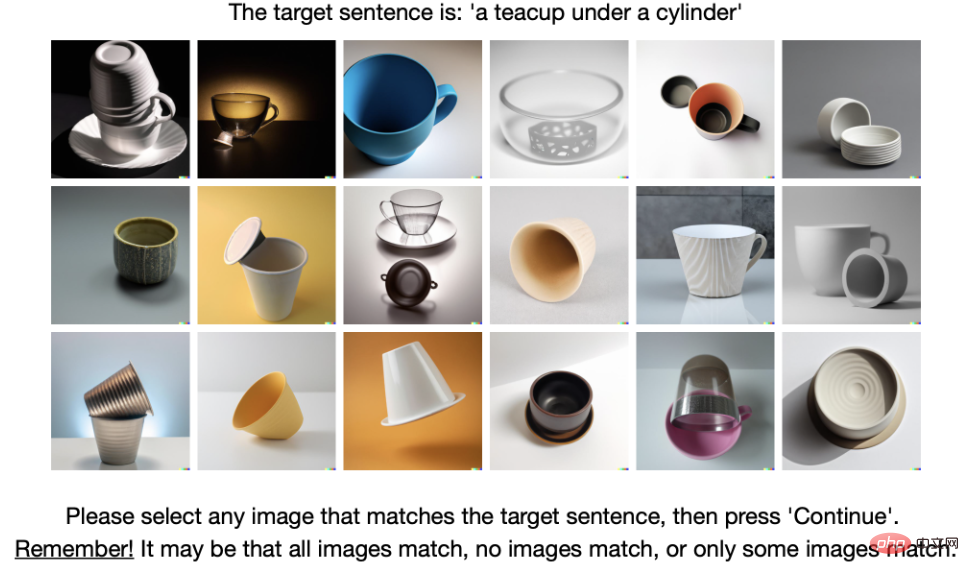

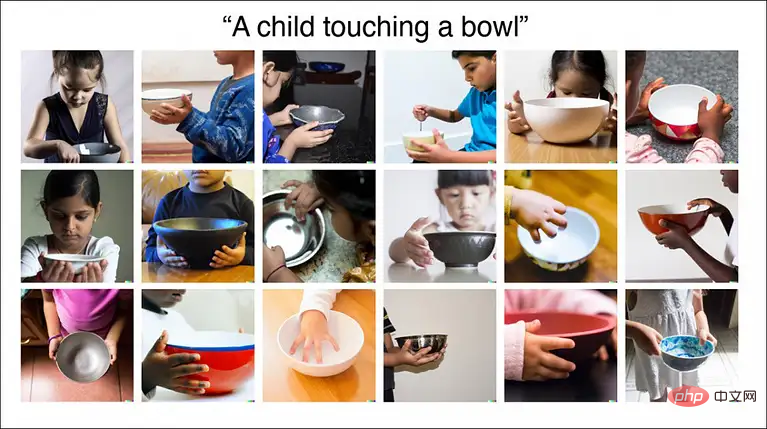

The set of entities in the prompts is limited to 12 simple items that are common across data sets: box, cylinder, blanket, bowl, teacup and knife; man, woman, child, robot, monkey and iguana.

For each relation, 5 prompts were created, each time randomly selecting 2 entities to fill in, for a total of 75 text prompts. Each prompt was submitted to the DALL-E 2 rendering engine and the first 18 generated images were kept, resulting in 1350 images.
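The prompt construction described above can be sketched in a few lines of Python. This is only an illustration: the relation and entity lists come from the article, but the `build_prompts` helper, the "a/an X relation a/an Y" template, and the uniform sampling over all 12 entities are assumptions, not the researchers' actual procedure.

```python
import random

# Relations and entities as listed in the article.
PHYSICAL = ["in", "on", "under", "covering", "near",
            "occluded by", "hanging over", "tied to"]
AGENTIC = ["pushing", "pulling", "touching", "hitting",
           "kicking", "helping", "hiding"]
ENTITIES = ["box", "cylinder", "blanket", "bowl", "teacup", "knife",
            "man", "woman", "child", "robot", "monkey", "iguana"]

PROMPTS_PER_RELATION = 5
IMAGES_PER_PROMPT = 18


def article(noun):
    """Pick the indefinite article for a noun (simple vowel check)."""
    return "an" if noun[0] in "aeiou" else "a"


def build_prompts(seed=0):
    """Hypothetical helper: 5 prompts per relation, 2 distinct entities each."""
    rng = random.Random(seed)
    prompts = []
    for relation in PHYSICAL + AGENTIC:
        for _ in range(PROMPTS_PER_RELATION):
            # Assumption: both entities are drawn uniformly from all 12.
            first, second = rng.sample(ENTITIES, 2)
            text = f"{article(first)} {first} {relation} {article(second)} {second}"
            prompts.append(text.capitalize())
    return prompts


prompts = build_prompts()
total_images = len(prompts) * IMAGES_PER_PROMPT
print(len(prompts), total_images)
```

The counts follow directly from the setup: 15 relations times 5 prompts gives 75 prompts, and 75 prompts times 18 images gives 1350 images.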

The researchers then used a common-sense reasoning test to select 169 of 180 annotators to take part in the annotation process.

The experiment found that the average agreement between the images generated by DALL-E 2 and the text prompts used to generate them was only 22.2% across the 75 prompts.
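One plausible way to arrive at an average figure like this is sketched below. The scoring scheme is an assumption (each annotator marks how many of a prompt's 18 images match the text), and the counts are made-up toy data, not the study's results.

```python
from statistics import mean

IMAGES_PER_PROMPT = 18


def prompt_agreement(matches_per_annotator):
    """Share of a prompt's images judged to match, averaged over annotators."""
    return mean(m / IMAGES_PER_PROMPT for m in matches_per_annotator)


# Toy data (hypothetical): number of matching images reported by each annotator.
toy_counts = {
    "prompt A": [9, 9, 9],
    "prompt B": [0, 9],
}

per_prompt = {name: prompt_agreement(c) for name, c in toy_counts.items()}

# The headline number is then the mean of the per-prompt agreement scores.
overall = mean(per_prompt.values())
print(per_prompt, overall)
```

Averaging per prompt first, and only then across prompts, keeps a prompt with more annotators from dominating the overall score.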

However, it is difficult to say whether DALL-E 2 truly "understands" the relations in the text. Looking at the annotators' agreement scores against consensus thresholds of 0%, 25% and 50%, Holm-corrected one-sample significance tests for each relation showed that participant agreement was significantly higher than 0% at α = 0.95 (pHolm

So even without correcting for multiple comparisons, the images generated by DALL-E 2 show that it does not understand the relation between the two objects in the text.
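For readers unfamiliar with the multiple-comparison correction mentioned here, the Holm (Holm-Bonferroni) step-down adjustment can be sketched as follows. The `holm_adjust` function is an illustrative helper and the p-values are made up; this is not the researchers' analysis code.

```python
def holm_adjust(p_values):
    """Return Holm-adjusted p-values in the same order as the input."""
    m = len(p_values)
    # Indices of the p-values, from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value is multiplied by (m - k + 1), capped at 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: adjusted values never decrease along the order.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Made-up raw p-values for three hypothetical relations.
raw = [0.01, 0.04, 0.03]
adjusted = holm_adjust(raw)
alpha = 0.05
significant = [p <= alpha for p in adjusted]
print(adjusted, significant)
```

With one test per relation, the correction makes it harder for any single relation to count as significant, which is why the article notes what holds even without it.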

The results also show that DALL-E 2's ability to connect two unrelated objects may not be as strong as imagined. For example, the agreement for "A child touching a bowl" is 87%, because children and bowls appear together very frequently in real-world images.

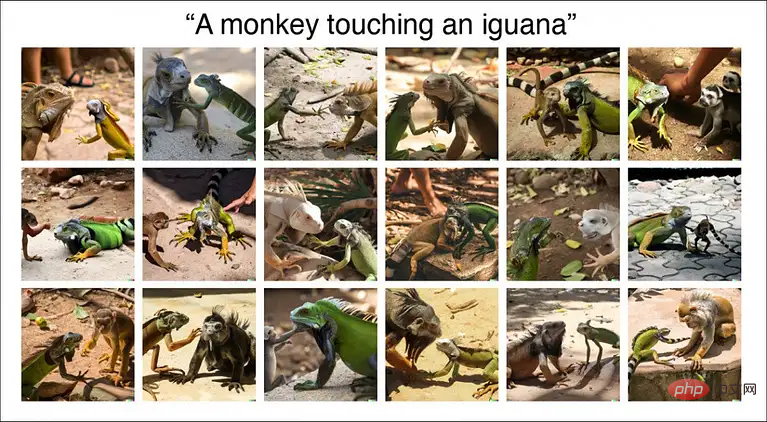

By contrast, the final agreement for the images generated from "A monkey touching an iguana" is only 11%, and some of the rendered images even show the wrong species.

Therefore, some image categories in DALL-E 2 are relatively well developed, such as children and food, but some categories of data still require continued training.

However, the official website still mainly showcases DALL-E 2's high-definition, realistic style. It is not yet clear whether, under the hood, it merely "glues two objects together" or truly understands the text before generating the image.

The researchers said that relational understanding is a basic component of human intelligence, and that DALL-E 2's poor performance on basic spatial relations (such as on, of) shows that it cannot yet construct and understand the world as flexibly and robustly as humans do.

However, netizens said that developing a "glue" that can stick things together is already a great achievement! DALL-E 2 is not AGI and there is still a lot of room for improvement, but at least the door to automatic image generation has been opened!

In fact, as soon as DALL-E 2 was released, a large number of practitioners conducted in-depth analysis of its advantages and disadvantages.

Blog link: https://www.lesswrong.com/posts/uKp6tBFStnsvrot5t/what-dall-e-2-can-and-cannot-do

Writing novels with GPT-3 is a bit monotonous. DALL-E 2 can generate some illustrations for the text and even generate comic strips for long texts.

For example, DALL-E 2 can add features to pictures. Given "A woman at a coffeeshop working on her laptop and wearing headphones, painting by Alphonse Mucha", it accurately renders the painting style, the coffee shop, the headphones, the laptop, and so on.

But if the feature description in the text involves two people, DALL-E 2 may forget which features belong to which person. For example, the input text is:

a young dark-haired boy resting in bed, and a grey-haired older woman sitting in a chair beside the bed underneath a window with sun streaming through, Pixar style digital art.

DALL-E 2 correctly generates the window, chair and bed, but the generated images are somewhat confused about how age, gender and hair color are combined.

Another example is the prompt "Captain America and Iron Man stand side by side". The generated results clearly contain the characteristics of both Captain America and Iron Man, but specific elements end up on the wrong person (for example, Iron Man carries Captain America's shield).

If both the foreground and the background are particularly detailed, the model may fail to generate the image.

For example, the input text is:

Two dogs dressed like roman soldiers on a pirate ship looking at New York City through a spyglass.

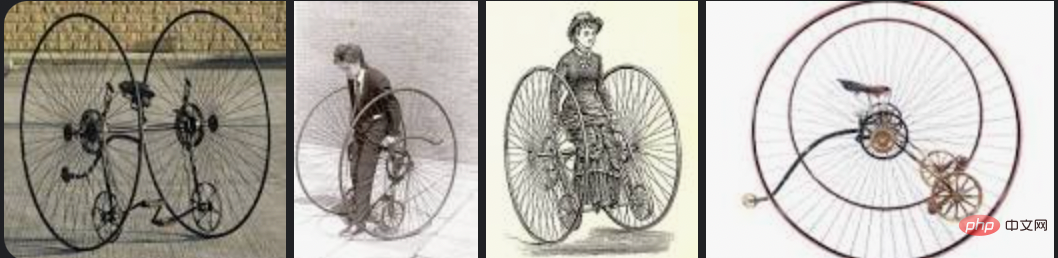

This time DALL-E 2 simply gave up. The blog's author spent half an hour without getting a usable result, and in the end had to choose between "New York City and a pirate ship" and "a dog with a telescope and a Roman soldier uniform".

DALL-E 2 can generate images with a generic background, such as a city or a bookshelf in a library, but if that is not the main focus of the image, getting the finer details right often goes badly wrong.

Although DALL-E 2 can generate common objects, such as various fancy chairs, if you ask it to generate an "Alto bicycle", the resulting picture will look somewhat like a bicycle, but not exactly.

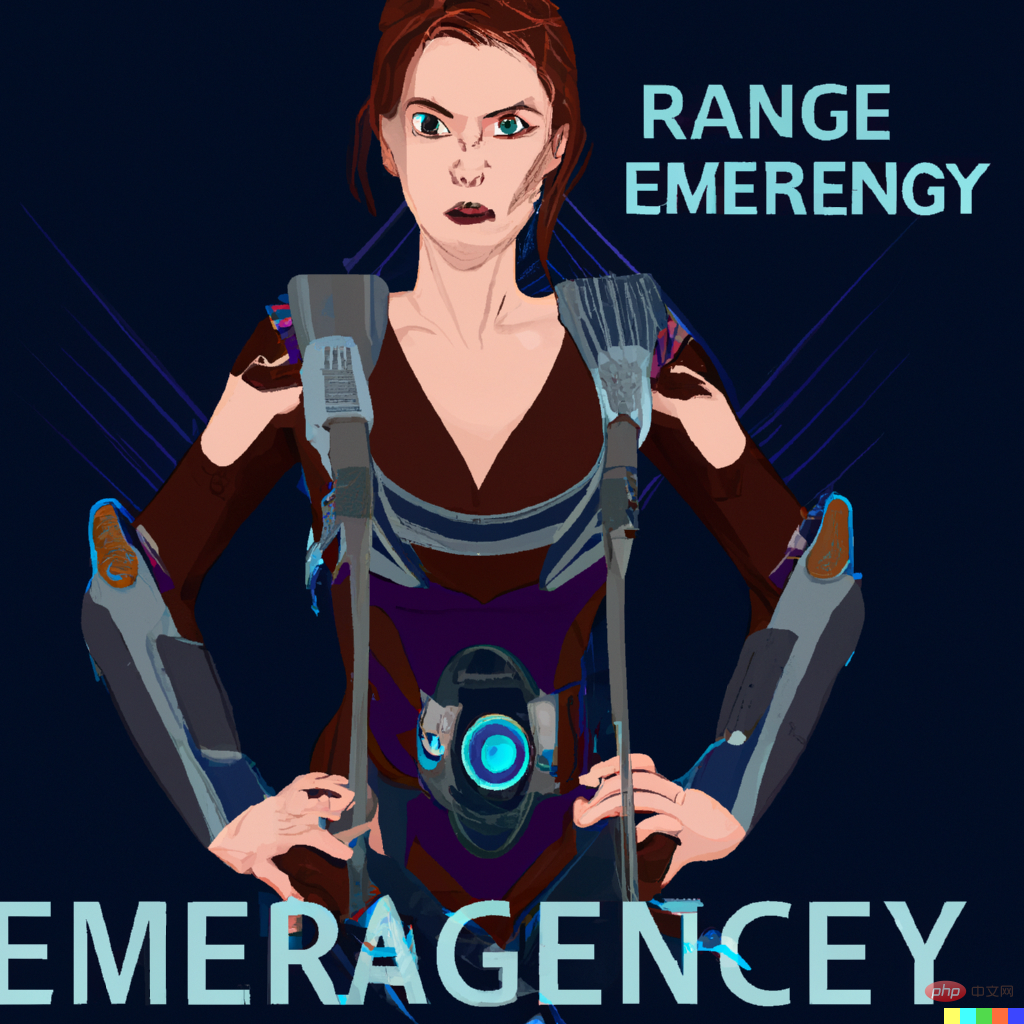

Another weak point is text, such as the lettering on a stop sign. Although the model can indeed generate some "recognizable" English letters, the strings they form still differ from the expected words. This is one area where DALL-E 2 is not as good as the first-generation DALL-E.

When generating images related to musical instruments, DALL-E 2 seems to remember the position of the human hand when playing, but without strings, playing is a little awkward.

DALL-E 2 also provides an editing function. For example, after generating an image, you can use the cursor to highlight its area and add a complete description of the modification.

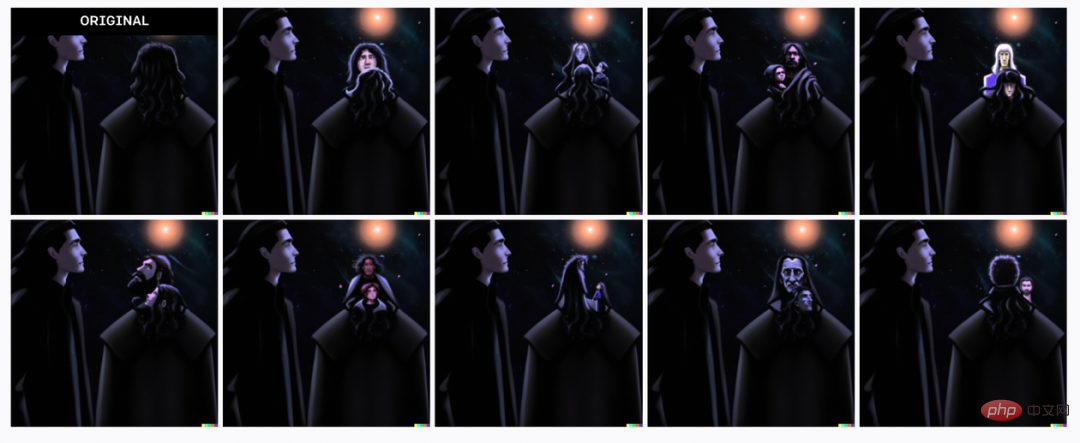

But this function is not always effective. For example, if you want to add "short hair" to the original image, the editing function will always add something in strange places.

The technology is still being updated and developed, and we look forward to DALL-E 3!
