In recent years, scaling up both data and models has become the standard modeling paradigm in AI. In advertising, large models use more parameters and more training data, and their stronger memorization and generalization open up more room to improve ad performance. However, the resources needed to train them have grown exponentially, and the resulting storage and compute pressure is a major challenge for machine learning platforms.

Tencent's Taiji Machine Learning Platform continues to explore ways to reduce cost and improve efficiency. By using co-located (hybrid deployment) resources for offline ad training, it provides Tencent Advertising with 500,000 cores of low-cost co-located resources every day, cutting the cost of offline model training resources by 30%. A series of optimizations also makes co-located resources as stable as ordinary resources.

In recent years, as large models have topped leaderboard after leaderboard in NLP, scaling up data and models has become a standard modeling paradigm in AI, and search, advertising, and recommendation are no exception. Models with hundreds of billions of parameters are now common, and terabyte-scale models have become standard in major prediction scenarios. Large-model capability has also become a focus of the arms race among major technology companies.

In advertising, large models use more parameters and more training data. Their stronger memorization and generalization open up more room to improve ad performance. However, the resources needed to train them have grown exponentially, and the resulting storage and compute pressure is a major challenge for machine learning platforms. At the same time, the number of experiments a platform can support directly affects how quickly algorithms can iterate, so providing more experiment resources at lower cost is a key focus of the platform.

The Taiji Machine Learning Platform aims to let users focus on solving business problems with AI. It is a one-stop solution for the engineering problems algorithm engineers face in AI applications, such as feature processing, model training, and model serving. It currently supports key businesses within the company, including advertising, search, games, Tencent Meeting, and Tencent Cloud.

The Taiji Advertising Platform is a high-performance machine learning platform that Taiji designed for the advertising system, integrating model training and online inference, and it can train and serve models with trillions of parameters. It currently supports training and online inference for dozens of Tencent Advertising models across recall, coarse ranking, and fine ranking. The platform also provides one-stop feature registration, sample backfilling, model training, model evaluation, and online testing, greatly improving developer efficiency.

As the Taiji platform keeps growing, the number and variety of tasks increase daily, and so do resource requirements. To reduce cost and improve efficiency, Taiji improves platform performance to speed up training on the one hand, and looks for cheaper resources to meet the growing demand on the other.

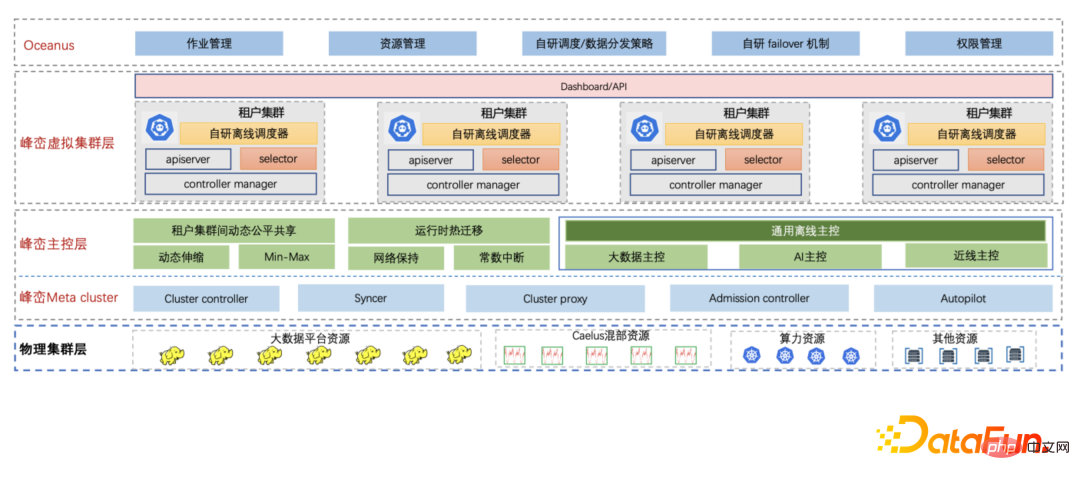

Fengluan, Tencent's internal cloud-native big data platform, uses cloud-native technology to upgrade the company's entire big data architecture. To meet the growing resource demand of big data workloads, Fengluan introduced co-located resources, which both satisfy that demand and greatly reduce resource costs. Fengluan provides a set of solutions for co-located resources in different scenarios, turning unstable co-located resources into stable resources that are transparent to the business. Its co-location capability supports three types of co-located resources: online/offline co-location resources (Caelus), tidal resources, and computing-power resources.

Fengluan also introduces cloud-native virtual cluster technology to hide the fragmentation caused by underlying co-located resources coming from different cities and regions. The Taiji platform connects directly to a Fengluan tenant cluster, which maps to a variety of underlying co-located resources. Because the tenant cluster offers an independent, complete cluster view, Taiji can integrate with it seamlessly.

Fengluan developed Caelus, an in-house full-scenario online/offline co-location solution. By co-locating online and offline jobs on the same machines, it taps the idle resources of online machines, raising their utilization while lowering the resource cost of offline jobs.

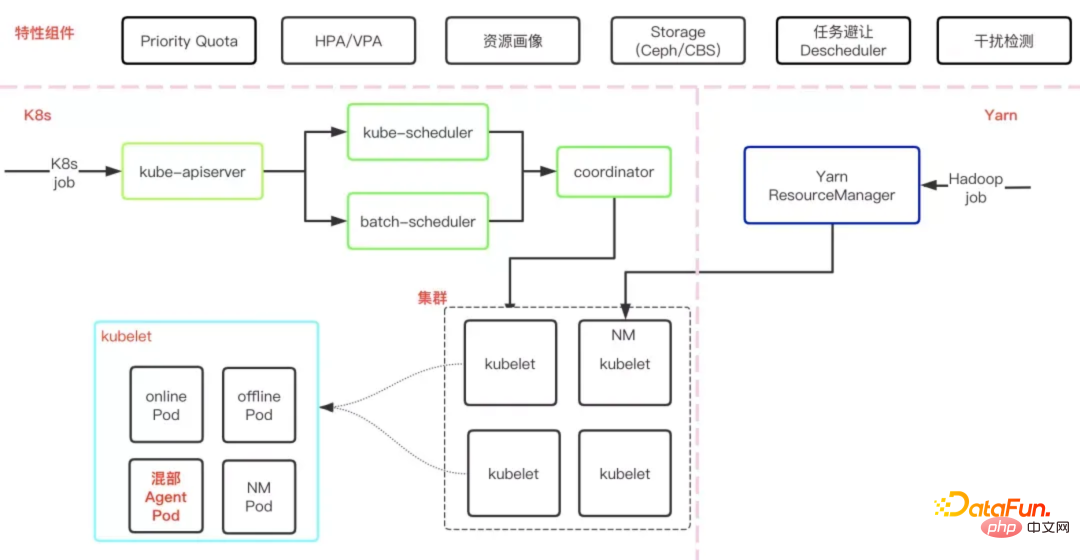

The figure below shows the basic architecture of Caelus. Its components and modules work together to safeguard co-location quality in several ways.

First, Caelus protects the service quality of online jobs in every respect, which is a key prerequisite for co-location. For example, fast interference detection and handling mechanisms actively sense online service quality and respond promptly, and a plug-in mechanism lets businesses add their own interference detection requirements. Full-dimensional resource isolation and flexible resource management policies ensure that online services keep high priority.
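To make the plug-in idea concrete, here is a minimal Python sketch of a pluggable interference-detection registry. The names `InterferenceMonitor`, `ServiceMetrics`, and `offline_cpu_quota`, the metric thresholds, and the quota-halving response are all hypothetical illustrations, not Caelus's actual interfaces or policy; the point is only that each business can register its own detector and that any detection triggers throttling of offline work so online services keep priority.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ServiceMetrics:
    """Hypothetical quality snapshot of one online service on a node."""
    p99_latency_ms: float
    cpu_usage: float  # fraction of node CPU in use, 0.0-1.0


# A detector plug-in returns True when it sees interference.
Detector = Callable[[ServiceMetrics], bool]


class InterferenceMonitor:
    """Registry of pluggable interference detectors (assumed design)."""

    def __init__(self) -> None:
        self._detectors: Dict[str, Detector] = {}

    def register(self, name: str, detector: Detector) -> None:
        # Businesses plug in their own detection rules by name.
        self._detectors[name] = detector

    def check(self, metrics: ServiceMetrics) -> List[str]:
        # Names of every detector that fired, in registration order.
        return [name for name, detect in self._detectors.items() if detect(metrics)]


def offline_cpu_quota(base_quota: float, fired: List[str]) -> float:
    # Hypothetical policy: halve the offline CPU quota per fired detector,
    # so online services keep priority while interference persists.
    return base_quota / (2 ** len(fired))


monitor = InterferenceMonitor()
monitor.register("latency", lambda m: m.p99_latency_ms > 50.0)
monitor.register("cpu", lambda m: m.cpu_usage > 0.9)
fired = monitor.check(ServiceMetrics(p99_latency_ms=80.0, cpu_usage=0.5))
print(fired, offline_cpu_quota(8.0, fired))  # ['latency'] 4.0
```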

Second, Caelus protects the SLOs of offline jobs in several ways. Co-located resource profiles and offline job profiles are used to match jobs with suitable resources and avoid resource contention, and the offline job eviction policy is optimized: eviction follows priority order, supports graceful exit, and is flexible and controllable. Unlike big data offline jobs, which are mostly short (minutes or even seconds), most Taiji jobs run much longer (hours or even days). Long-term resource prediction and job profiles guide scheduling toward suitable resources for jobs with different run times and resource requirements, so that jobs are not evicted after running for hours or days, which would lose job state and waste resources and time. When an offline job must be evicted, runtime live migration is tried first: the job instance moves from one machine to another while its memory state and IP stay unchanged. This has almost no impact on the job and greatly improves job SLOs. Caelus has further capabilities for making better use of co-located resources; for details, see [Caelus full-scenario offline co-location solution](//m.sbmmt.com/link/caaeb10544b465034f389991efc90877).
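The matching and eviction ideas above can be sketched as follows. This is an assumed, simplified model, not Caelus code: `predicted_stable_hours` stands in for the output of long-term resource prediction, `expected_runtime_hours` for the job profile, and the best-fit choice and fallback order are illustrative policies.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cores: int
    predicted_stable_hours: float  # assumed output of long-term resource prediction
    supports_live_migration: bool


@dataclass
class Job:
    name: str
    cores: int
    expected_runtime_hours: float  # assumed output of the job profile


def pick_node(job: Job, nodes: List[Node]) -> Optional[Node]:
    # Keep nodes with enough cores that are also predicted to stay available
    # longer than the job runs, so long jobs are not evicted midway.
    fits = [
        n for n in nodes
        if n.free_cores >= job.cores
        and n.predicted_stable_hours >= job.expected_runtime_hours
    ]
    if not fits:
        return None
    # Best fit: the shortest stable window that still covers the job.
    return min(fits, key=lambda n: n.predicted_stable_hours)


def eviction_action(node: Node, target_available: bool) -> str:
    # Prefer runtime live migration (memory state and IP preserved);
    # otherwise fall back to a graceful exit.
    if node.supports_live_migration and target_available:
        return "live_migrate"
    return "graceful_exit"


nodes = [
    Node("a", 16, 4.0, True),
    Node("b", 32, 48.0, True),
    Node("c", 32, 12.0, False),
]
job = Job("ctr-train", 16, 10.0)
print(pick_node(job, nodes).name)  # c
print(eviction_action(nodes[1], target_available=True))  # live_migrate
```

Picking the node with the shortest stable window that still covers the job keeps the nodes predicted to stay available longest free for the longest-running jobs.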

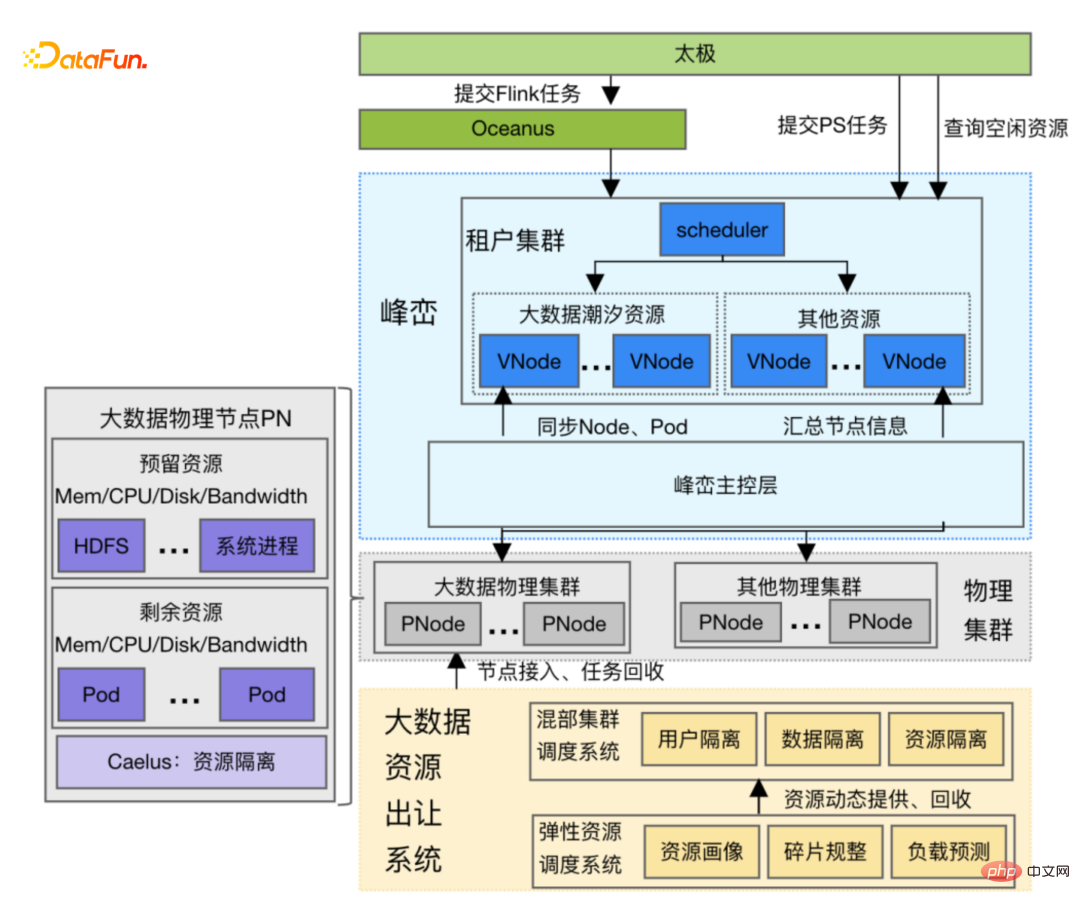

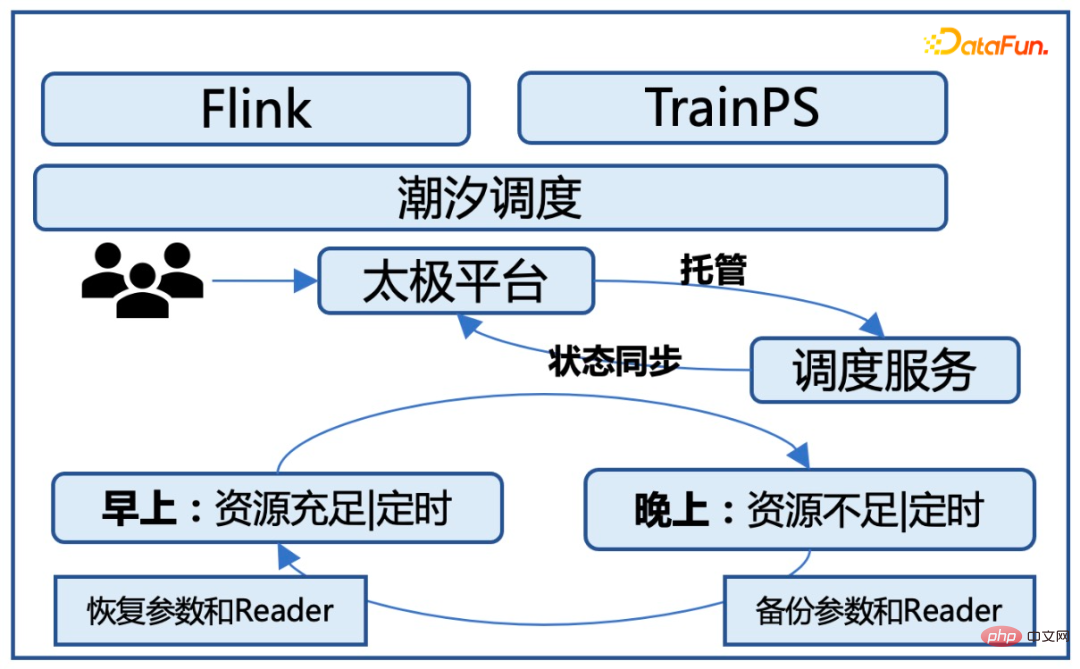

Big data workloads are generally light during the day and heavy at night, so Fengluan lends some of the big data resources that sit idle during the day to the Taiji platform and reclaims them at night. We call these tidal resources. On tidal nodes, big data tasks have almost completely exited, but the big data storage service HDFS still runs, and Taiji jobs must not affect it. To use tidal resources, the Taiji platform follows a protocol agreed with the Fengluan platform. At a fixed time each day, Fengluan pre-selects a batch of nodes based on historical data. Once the big data tasks on those nodes have exited gracefully, Fengluan notifies Taiji that new nodes have joined, and Taiji starts submitting more tasks to the tenant cluster. Before the borrowing period ends, Fengluan notifies Taiji that some nodes need to be reclaimed, and Taiji returns them in an orderly way.
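The lending handshake described above behaves like a small per-node state machine. The sketch below models it under assumptions: the class and method names (`TidalLease`, `node_joined`, `request_reclaim`, `job_stopped`) are hypothetical, and real notifications between Fengluan and Taiji would be asynchronous calls rather than direct method calls. HDFS is not modeled because it keeps running in every state.

```python
from enum import Enum, auto
from typing import Dict, List, Set


class NodeState(Enum):
    BIG_DATA = auto()    # running big data tasks, owned by Fengluan
    LENT = auto()        # lent to Taiji; HDFS keeps running on the node
    RECLAIMING = auto()  # Fengluan asked for it back; Taiji is draining
    RETURNED = auto()    # handed back to big data workloads


class TidalLease:
    """Hypothetical sketch of the Fengluan/Taiji node lending handshake."""

    def __init__(self, candidates: List[str]) -> None:
        # Nodes pre-selected by Fengluan from historical data at a fixed time.
        self.state: Dict[str, NodeState] = {n: NodeState.BIG_DATA for n in candidates}
        self.jobs: Dict[str, Set[str]] = {n: set() for n in candidates}

    def node_joined(self, node: str) -> None:
        # Fengluan: big data tasks exited gracefully; the node joins the tenant cluster.
        if self.state[node] is not NodeState.BIG_DATA:
            raise ValueError(f"{node} cannot be lent in state {self.state[node].name}")
        self.state[node] = NodeState.LENT

    def submit(self, node: str, job: str) -> bool:
        # Taiji: only lent nodes that are not being reclaimed accept new jobs.
        if self.state.get(node) is not NodeState.LENT:
            return False
        self.jobs[node].add(job)
        return True

    def request_reclaim(self, node: str) -> None:
        # Fengluan: the borrowing window is about to close.
        if self.state[node] is NodeState.LENT:
            self.state[node] = NodeState.RECLAIMING
            self._maybe_return(node)

    def job_stopped(self, node: str, job: str) -> None:
        # Taiji: a job was checkpointed and stopped on this node.
        self.jobs[node].discard(job)
        self._maybe_return(node)

    def _maybe_return(self, node: str) -> None:
        # Return the node only once every Taiji job on it has stopped.
        if self.state[node] is NodeState.RECLAIMING and not self.jobs[node]:
            self.state[node] = NodeState.RETURNED


lease = TidalLease(["n1", "n2"])
lease.node_joined("n1")
print(lease.submit("n1", "train-a"))  # True
print(lease.submit("n2", "train-b"))  # False: n2 still runs big data tasks
lease.request_reclaim("n1")
print(lease.submit("n1", "train-c"))  # False: n1 is being reclaimed
lease.job_stopped("n1", "train-a")
print(lease.state["n1"])  # NodeState.RETURNED
```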

As shown in the figure below, mining, managing, and using tidal resources requires several systems to divide the work and cooperate.

Computing-power resources present the business with dedicated CVMs, which is relatively friendly to business users. The challenge is that the CPU of these low-priority CVMs can be suppressed at any time by online CVMs on the same host machine, which makes computing-power resources very unstable.

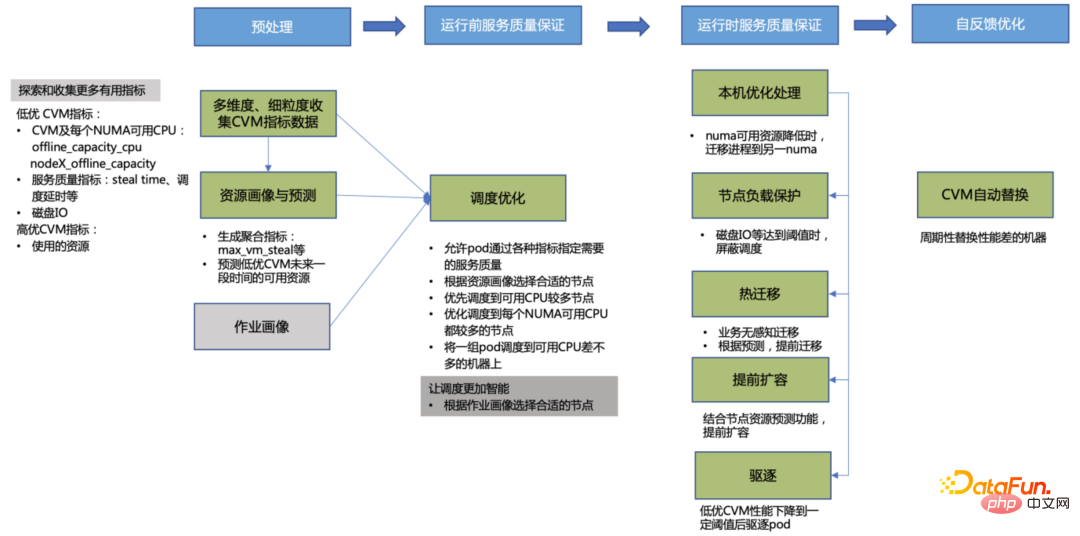

To address this instability, Fengluan extends its control plane with a range of capabilities that optimize computing-power resources from several angles and improve their stability:

① Resource profiling and prediction: collect a wide range of machine performance metrics and aggregate them to predict how many resources each low-priority CVM will have available in the future. The scheduler uses this information to place pods, and the eviction component uses it to evict pods, so that pods' resource requirements are met.

② Scheduling optimization: to protect the service quality of Taiji jobs, the scheduling policy adds further optimizations based on job needs and resource characteristics, improving job performance by more than 2x.

③ Runtime service quality assurance

④ Self-feedback optimization: based on resource profiles, poorly performing machines are periodically replaced. Integration with the underlying platform enables smooth CVM decommissioning, which gives Fengluan the chance to migrate application instances one by one without affecting the business, reducing the impact on instances.

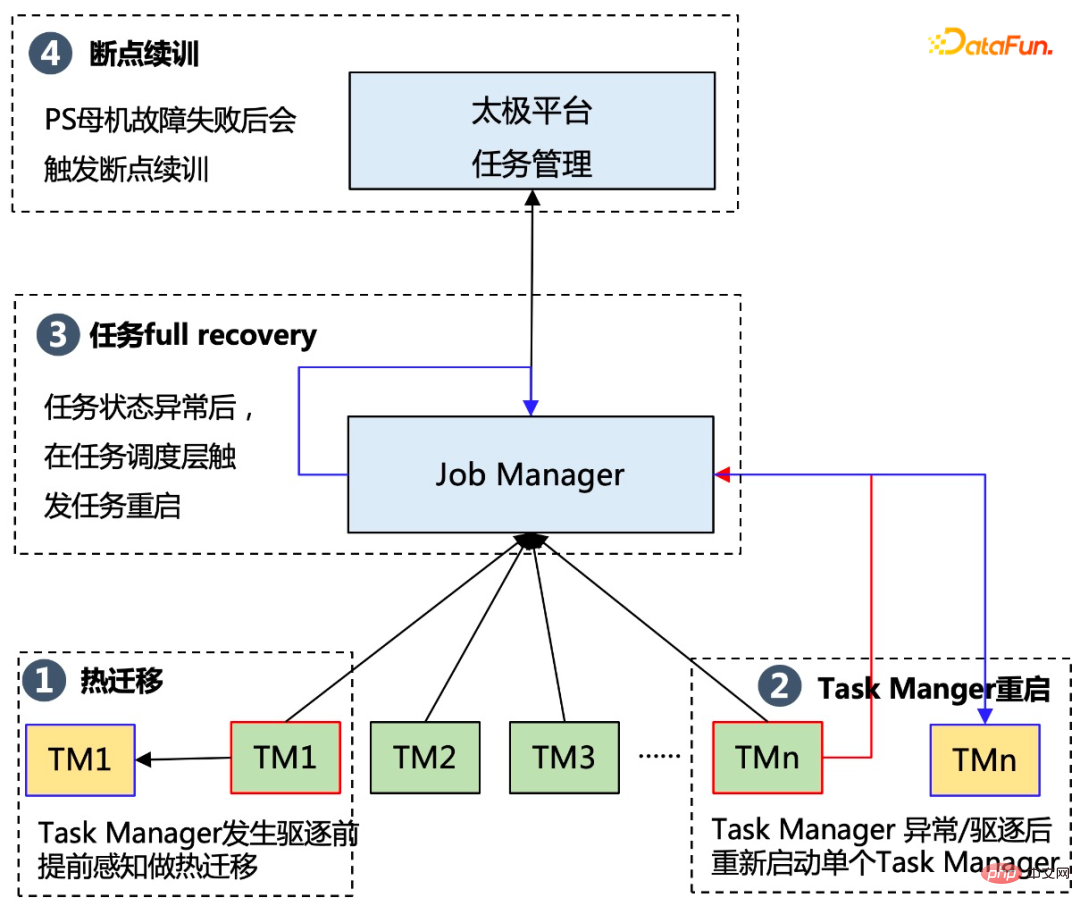

⑤ Improve the disaster recovery capability of the Flink layer and support single point restart and hierarchical scheduling

The single-point restart capability of the TM (TaskManager) keeps a single task failure from failing the entire DAG, which suits the preemptible nature of computing-power resources. Hierarchical scheduling avoids the long waits caused by gang scheduling, as well as the waste of over-requesting TM pods.
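Items ① and ④ can be illustrated with a small Python sketch. The forecast heuristic (mean minus two population standard deviations of recent free-core samples), the largest-first eviction order, and all function names are assumptions chosen for illustration; the actual Fengluan models aggregate many more machine metrics.

```python
import statistics
from typing import Dict, List


def predict_available(history: List[float], k: float = 2.0) -> float:
    # Conservative forecast of free cores on a low-priority CVM:
    # mean minus k population standard deviations, floored at zero (assumed heuristic).
    mean = statistics.mean(history)
    spread = statistics.pstdev(history)
    return max(0.0, mean - k * spread)


def can_schedule(request_cores: float, history: List[float]) -> bool:
    # Scheduler: place a pod only if the forecast covers its request.
    return predict_available(history) >= request_cores


def pods_to_evict(available_now: float, pods: Dict[str, float]) -> List[str]:
    # Eviction: when current free cores drop below total requests, evict the
    # largest pods first (hypothetical policy) until the remainder fits.
    total = sum(pods.values())
    evicted: List[str] = []
    for name, cores in sorted(pods.items(), key=lambda kv: kv[1], reverse=True):
        if total <= available_now:
            break
        evicted.append(name)
        total -= cores
    return evicted


def machines_to_replace(forecasts: Dict[str, float], min_cores: float) -> List[str]:
    # Self-feedback: flag CVMs whose forecast is below a usable floor,
    # worst first, so they can be drained and replaced one by one.
    weak = [(cores, name) for name, cores in forecasts.items() if cores < min_cores]
    return [name for _, name in sorted(weak)]


print(predict_available([4.0, 8.0]))  # 2.0
print(pods_to_evict(10.0, {"a": 4.0, "b": 6.0, "c": 3.0}))  # ['b']
print(machines_to_replace({"cvm-1": 2.0, "cvm-2": 7.5, "cvm-3": 0.5}, 4.0))  # ['cvm-3', 'cvm-1']
```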

A major premise for offline training tasks to use cheap resources is that they must not affect the normal operation of the tasks already running on those resources, so co-located resources present the following key challenges:

To make sure tasks run stably on co-located resources, the platform uses a three-level fault tolerance strategy, as follows:

With business-layer fault tolerance, the stability of tasks running on co-located resources rose from under 90% at the start to 99.5%, essentially the same as for tasks on ordinary dedicated resources.

Because tidal resources are only available to offline training tasks during the day and must be handed back for business use at night, the Taiji platform automatically starts training tasks during the day according to resource availability, and at night makes cold backups of the tasks and stops them. A task management queue manages the scheduling priority of each task: new tasks submitted at night are automatically queued and wait to be started the next morning.
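A minimal sketch of this day/night behavior, assuming a fixed 08:00 to 20:00 lending window and a slot-based capacity (both hypothetical), might look like this. `TidalTaskManager` and its methods are illustrative names, and checkpointing is reduced to recording the task name; a real cold backup would persist model state before stopping the task.

```python
import heapq
from typing import Dict, List, Tuple

# Hypothetical lending window: tidal nodes are available from 08:00 to 20:00.
DAY_START, DAY_END = 8, 20


def in_window(hour: int) -> bool:
    return DAY_START <= hour < DAY_END


class TidalTaskManager:
    """Sketch of day/night start-stop scheduling with a priority queue (assumed design)."""

    def __init__(self) -> None:
        # Min-heap of (-priority, seq, name): highest priority first, FIFO on ties.
        self._queue: List[Tuple[int, int, str]] = []
        self._seq = 0
        self.priority: Dict[str, int] = {}
        self.running: List[str] = []
        self.checkpointed: List[str] = []

    def submit(self, name: str, priority: int) -> None:
        # Every submission is queued; tasks only start in tick() during the window.
        self.priority[name] = priority
        heapq.heappush(self._queue, (-priority, self._seq, name))
        self._seq += 1

    def tick(self, hour: int, capacity: int) -> None:
        if in_window(hour):
            # Daytime: start queued tasks by priority while capacity allows.
            while self._queue and len(self.running) < capacity:
                _, _, name = heapq.heappop(self._queue)
                self.running.append(name)
        else:
            # Night: cold-backup each running task, stop it, and requeue it for the morning.
            for name in self.running:
                self.checkpointed.append(name)  # stands in for saving a checkpoint
                self.submit(name, self.priority[name])
            self.running.clear()


mgr = TidalTaskManager()
mgr.submit("recall", 1)
mgr.submit("fine-rank", 5)
mgr.submit("coarse-rank", 3)
mgr.tick(hour=9, capacity=2)
print(mgr.running)        # ['fine-rank', 'coarse-rank']
mgr.tick(hour=21, capacity=2)
mgr.submit("new-exp", 4)  # submitted at night: waits in the queue
mgr.tick(hour=8, capacity=2)
print(mgr.running)        # ['fine-rank', 'new-exp']
```

Requeuing stopped tasks with their original priority lets interrupted long-running jobs resume ahead of lower-priority work the next morning.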

Through these optimizations, tasks run stably on tidal resources and are largely transparent to the business layer. Task running speed is not greatly affected, and the extra overhead of starting, stopping, and scheduling tasks is kept within 10%.

Taiji's online/offline co-location optimization has been deployed in Tencent Advertising scenarios. Every day it provides 300,000 cores of all-weather co-located resources and 200,000 cores of tidal resources for offline model research and training, supporting model training across recall, coarse ranking, and fine ranking. In terms of cost, for the same computational load, co-located resources cost 70% as much as ordinary resources. After optimization, system stability and task success rate are basically the same as on physical clusters.
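As a quick consistency check on these figures, assuming the 70% ratio applies to the same computational load (with $C$ denoting resource cost): the two daily pools add up to the 500,000 cores quoted at the start, and a 70% relative cost corresponds to the 30% saving.

```latex
\begin{aligned}
\underbrace{300{,}000}_{\text{all-weather}} + \underbrace{200{,}000}_{\text{tidal}} &= 500{,}000 \ \text{cores per day} \\
1 - \frac{C_{\text{co-located}}}{C_{\text{ordinary}}} &= 1 - 0.70 = 0.30
\end{aligned}
```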

In the future, we will keep expanding our use of co-located resources, especially computing-power resources. In addition, as the company's online businesses move to GPUs, we will try to use online GPU resources, not just traditional CPU resources, for offline training in co-location.

That’s it for today’s sharing, thank you all.
