What kind of BERT model can be obtained by training on a consumer-grade GPU in only one day?

Language models have recently made AI a hot topic once again. Because pre-trained language models are trained without supervision, they can learn from massive amounts of data and pick up a great deal of semantic and grammatical knowledge. From classification to question answering, there seems to be no problem AI cannot solve.

However, large models bring not only technological breakthroughs but also ever-growing demands for computing power.

Recently, Jonas Geiping and Tom Goldstein of the University of Maryland took stock of the research on scaling compute up and turned to the opposite question: what improvements are possible when compute is scaled down. Their work has attracted wide attention in the machine learning community.

In this new work, the authors investigated what kind of language model can be trained on a single consumer-grade GPU (an RTX 2080Ti) and obtained encouraging results. Let's look at how they did it:

In natural language processing (NLP), pre-trained models based on the Transformer architecture have become mainstream and have led to many breakthroughs. Much of their strong performance comes from their sheer scale: as the number of parameters and the amount of training data grow, performance keeps improving. As a result, the NLP field has entered a race to build ever larger models.

However, few researchers or practitioners have the resources to train large language models (LLMs); usually only the technology giants of the industry do.

In order to reverse this trend, researchers from the University of Maryland conducted some exploration.

The paper "Cramming: Training a Language Model on a Single GPU in One Day":

Paper link: https://arxiv.org/abs/2212.14034

The question matters to most researchers and practitioners: the results can serve as a reference point for model training costs and may help ease the bottleneck of extremely high LLM training costs. The paper quickly drew attention and discussion on Twitter.

IBM's NLP research expert Leshem Choshen commented on Twitter: "This paper summarizes all the large model training tricks you can think of."

The Maryland researchers argue that if scaled-down pre-training is a viable stand-in for large-scale pre-training, it would open up a range of further academic research on large models that is currently difficult to carry out.

Furthermore, this study attempts to benchmark the overall progress in the NLP field over the past few years, not just the impact of model size.

The study sets up a challenge called "Cramming": learning an entire language model in a single day, much like cramming the day before an exam. The researchers first analyzed each part of the training pipeline to find out which modifications actually improve performance in this small-scale setting. The study shows that even in this constrained environment, model performance strictly follows the scaling laws observed in large-compute settings.

Smaller architectures make each gradient computation faster, but the overall rate at which the model improves over time stays almost the same. The study therefore tries to exploit the scaling laws by finding modifications that make gradient computation more efficient without shrinking the model. In the end, it succeeded in training a model with respectable performance, close to, or even exceeding, BERT on GLUE, at low training cost.

In order to simulate the resource environment of ordinary practitioners and researchers, this study first constructed a resource-limited research environment:

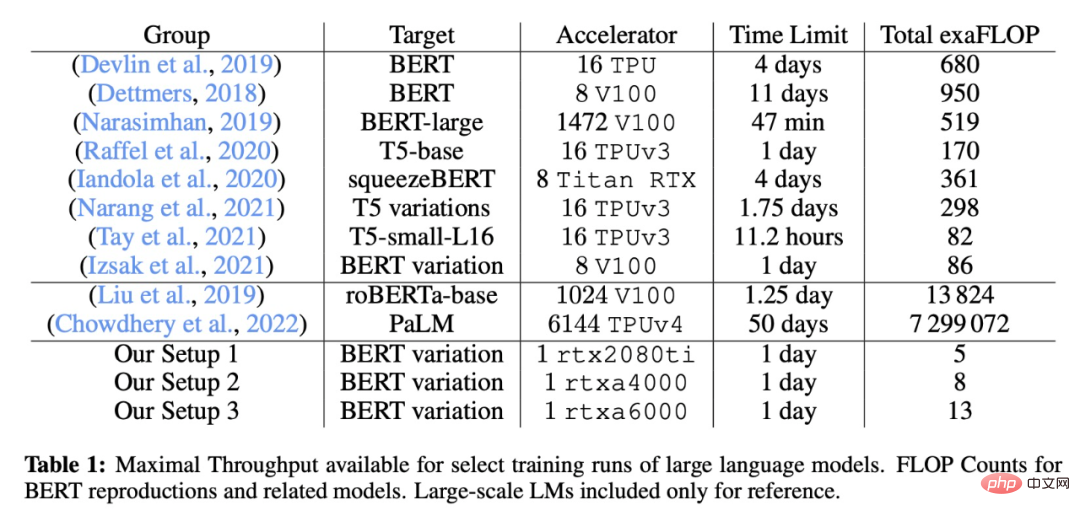
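The core of the challenge is a hard wall-clock limit. As a minimal sketch, assuming a 24-hour budget and a hypothetical `step_fn` that performs one gradient step, a budget-limited training loop could look like this. It is an illustration of the constraint, not the authors' code; `FakeClock` is a hypothetical helper that simulates time so the loop can be tried instantly.

```python
import time
from typing import Callable

# Assumption: a 24-hour budget, matching the "one day" framing of the challenge.
BUDGET_SECONDS = 24 * 60 * 60


def train_with_budget(
    step_fn: Callable[[], float],
    budget_seconds: float = BUDGET_SECONDS,
    clock: Callable[[], float] = time.monotonic,
) -> int:
    """Run gradient steps until the wall-clock budget is exhausted.

    `step_fn` is a hypothetical callable that performs one training step and
    returns its loss. The budget is checked before each step, so the last step
    may finish slightly past the limit. Returns the number of completed steps.
    """
    start = clock()
    steps = 0
    while clock() - start < budget_seconds:
        step_fn()
        steps += 1
    return steps


class FakeClock:
    """Simulated clock for testing: every call to `step` advances time."""

    def __init__(self, seconds_per_step: float) -> None:
        self.now = 0.0
        self.seconds_per_step = seconds_per_step

    def __call__(self) -> float:
        return self.now

    def step(self) -> float:
        self.now += self.seconds_per_step
        return 0.0  # dummy loss value


if __name__ == "__main__":
    clock = FakeClock(seconds_per_step=0.5)
    print(train_with_budget(clock.step, budget_seconds=10, clock=clock))  # 20
```

Injecting the clock keeps the loop testable; in a real run the default `time.monotonic` would be used.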

The comparison of the specific training settings of this study with some classic large models is shown in the following table:

The researchers implemented and tested a number of modifications proposed in existing work, covering the general implementation and the initial data setup, and tried modifying the architecture, the training procedure, and the dataset.

All experiments are conducted in PyTorch. To keep the comparison as fair as possible, no specialized implementations are used and everything stays at the level of the PyTorch framework. The only exception is automatic operator fusion, which can be applied to all components; an efficient attention kernel is re-enabled only after the final architecture variant has been selected.

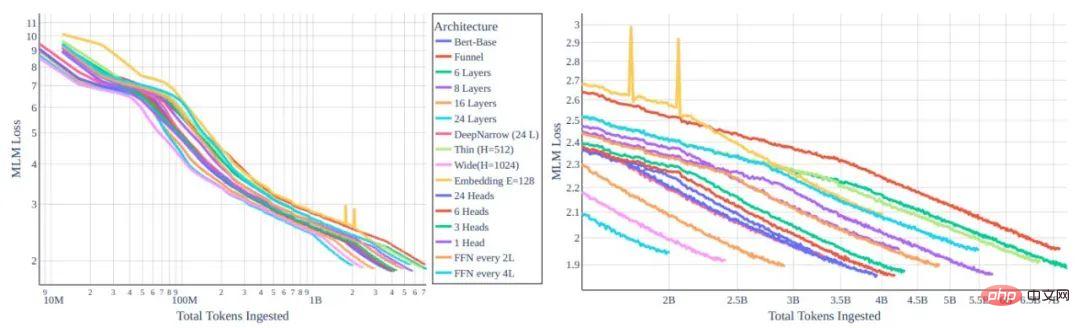

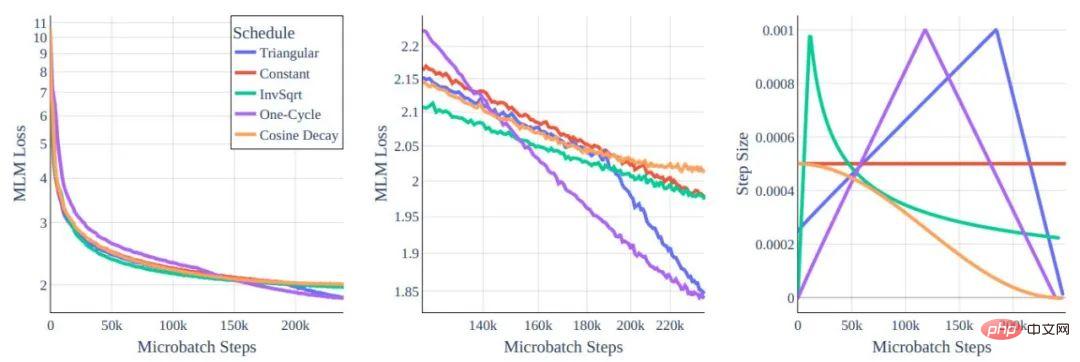

Figure 1: MLM loss versus number of tokens for different transformer architecture variants

Left: Global view. Right: zoomed in to 10e8 and more tokens.

All models are trained with the same compute budget. The improvements achieved by reshaping the architecture are minimal.

When trying to improve performance, the first idea is to modify the model architecture. Intuitively, smaller, lower-capacity models would seem optimal for one-GPU-one-day training. However, after studying the relationship between model type and training efficiency, the researchers found that the scaling laws pose a major obstacle to downsizing: training efficiency per token depends heavily on model size rather than on the type of transformer.

Moreover, smaller models learn less efficiently, which largely cancels out their gains in throughput. Fortunately, because training efficiency is nearly the same for models of the same size, one can search among architectures with a similar parameter count and base design choices mainly on how they affect the computation time of a single gradient step.
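The design rule just described (compare architectures of similar size by how long one gradient step takes) can be sketched as follows. The candidate names, parameter counts, step times, batch size, and sequence length are hypothetical placeholders, not measurements from the paper; in practice the step times would be measured on the target GPU.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    name: str
    params_millions: float
    seconds_per_step: float  # measured time for one gradient step


def tokens_in_budget(c: Candidate, budget_seconds: float,
                     batch_size: int, seq_len: int) -> int:
    """Number of tokens the candidate can process within the budget."""
    steps = int(budget_seconds // c.seconds_per_step)
    return steps * batch_size * seq_len


def pick_fastest(candidates: List[Candidate], target_params_millions: float,
                 tolerance: float = 0.1) -> Candidate:
    """Among models of roughly the target size, pick the fastest per step.

    Per-token learning efficiency is assumed to depend mostly on size, so
    comparing step times is only meaningful within a similar-size group.
    """
    low = target_params_millions * (1 - tolerance)
    high = target_params_millions * (1 + tolerance)
    similar = [c for c in candidates if low <= c.params_millions <= high]
    if not similar:
        raise ValueError("no candidate near the target size")
    return min(similar, key=lambda c: c.seconds_per_step)


# Hypothetical numbers for illustration only.
candidates = [
    Candidate("variant-a", 110.0, 0.5),
    Candidate("variant-b", 112.0, 0.25),
    Candidate("tiny", 40.0, 0.125),  # faster, but learns less per token
]
best = pick_fastest(candidates, target_params_millions=110.0)
print(best.name, tokens_in_budget(best, 3600, batch_size=128, seq_len=128))
```

The small "tiny" model is deliberately excluded by the size filter: it would see more tokens, but, per the scaling-law argument above, it would learn less from each of them.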

Figure 2: Learning Rate Schedule

Although the behavior is similar globally, the zoomed-in view in the middle shows that differences do exist.
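Because the budget is measured in time rather than in steps, one natural option is to express the learning-rate schedule as a function of the fraction of the budget that has elapsed. The sketch below assumes a simple one-cycle (triangular) shape with a hypothetical peak of 1e-3 and linear ramps up and down; it illustrates a budget-aware schedule in general and is not necessarily the exact schedule compared in Figure 2.

```python
def budget_lr(elapsed_seconds: float, budget_seconds: float,
              peak_lr: float = 1e-3, warmup_fraction: float = 0.5) -> float:
    """Triangular (one-cycle) learning rate driven by elapsed wall-clock time.

    The rate rises linearly from 0 to `peak_lr` over the first
    `warmup_fraction` of the budget, then falls linearly back to 0 at the end.
    """
    if budget_seconds <= 0:
        raise ValueError("budget_seconds must be positive")
    if not 0.0 < warmup_fraction < 1.0:
        raise ValueError("warmup_fraction must be in (0, 1)")
    # Clamp progress to [0, 1] so calls past the deadline return 0.
    progress = min(max(elapsed_seconds / budget_seconds, 0.0), 1.0)
    if progress < warmup_fraction:
        return peak_lr * progress / warmup_fraction
    return peak_lr * (1.0 - progress) / (1.0 - warmup_fraction)


if __name__ == "__main__":
    day = 24 * 60 * 60
    for hours in (0, 6, 12, 18, 24):
        print(hours, budget_lr(hours * 3600, day))
```

Tying the schedule to elapsed time means the learning rate reaches zero exactly when the budget runs out, regardless of how many steps the hardware manages.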

In this work, the authors studied how training hyperparameters affect the BERT-base architecture. Unsurprisingly, the original BERT training recipe does not perform well under the Cramming budget, so the researchers revisited a number of standard choices.

The authors also explored optimizing the dataset. The scaling laws rule out significant gains (beyond computational efficiency) from architectural modifications, but they do not prevent us from training on better data. Once we can no longer train on more tokens per second, we should look to train on better tokens.
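What counts as "better tokens" is a design question. One simple heuristic, assumed here for illustration, is to drop documents that the tokenizer compresses poorly, that is, documents that produce many tokens per character, since such text is often noisy. The toy tokenizer (whole words from a small vocabulary, otherwise one token per character) and the 0.3 threshold are hypothetical stand-ins for a real subword tokenizer and a tuned cutoff.

```python
from typing import Callable, Iterable, List, Set


def toy_tokenize(text: str, vocab: Set[str]) -> List[str]:
    """Hypothetical tokenizer: known words are one token, others are split
    into single characters (a crude stand-in for subword fallback)."""
    tokens: List[str] = []
    for word in text.split():
        if word in vocab:
            tokens.append(word)
        else:
            tokens.extend(word)  # one token per character
    return tokens


def filter_by_compression(docs: Iterable[str],
                          tokenize: Callable[[str], List[str]],
                          max_tokens_per_char: float = 0.3) -> List[str]:
    """Keep documents whose tokens-per-character ratio is at most the threshold."""
    kept = []
    for doc in docs:
        if not doc:
            continue  # skip empty documents to avoid division by zero
        ratio = len(tokenize(doc)) / len(doc)
        if ratio <= max_tokens_per_char:
            kept.append(doc)
    return kept


vocab = {"the", "model", "is", "trained", "on", "one", "gpu"}
docs = ["the model is trained on one gpu", "xq zv kk qq"]
print(filter_by_compression(docs, lambda d: toy_tokenize(d, vocab)))
# ['the model is trained on one gpu']
```

The first document yields 7 tokens for 31 characters and is kept; the second yields 8 tokens for 11 characters and is dropped.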

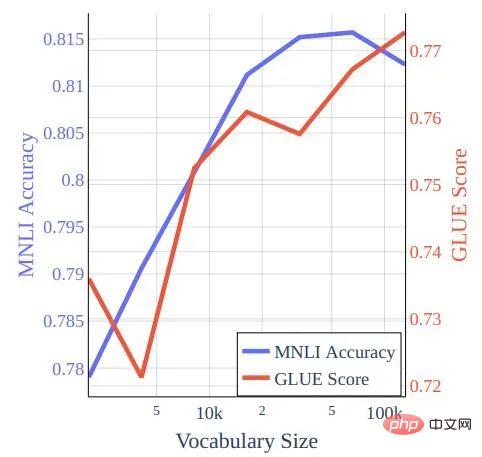

Figure 3: Vocabulary size versus GLUE score and MNLI accuracy for models crammed on the BookCorpus-Wikipedia data.

The researchers systematically evaluated performance on the GLUE benchmark and WNLI, noting that the previous sections used only MNLI (m) and did not tune hyperparameters on the full GLUE score. In the new study, the authors fine-tuned on all datasets for 5 epochs with the BERT-base settings of batch size 32 and learning rate 2 × 10^-5. This is suboptimal for the crammed models, which gain slightly from a batch size of 16 and a learning rate of 4 × 10^-5 with cosine decay (this setting does not improve the pre-trained BERT checkpoint).
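The two fine-tuning settings described above can be written down as configurations. The sketch encodes the reported values (5 epochs, batch sizes 32 and 16, learning rates 2 × 10^-5 and 4 × 10^-5); the cosine decay is the standard half-cosine from the peak rate to zero. Treating the default BERT-base setting as a constant learning rate is an assumption made only for this illustration, since the text does not state its schedule, and the field and function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FinetuneConfig:
    epochs: int
    batch_size: int
    learning_rate: float
    cosine_decay: bool


# Values from the text. cosine_decay=False for BERT-base is an assumption.
BERT_BASE_SETTING = FinetuneConfig(epochs=5, batch_size=32,
                                   learning_rate=2e-5, cosine_decay=False)
CRAMMED_SETTING = FinetuneConfig(epochs=5, batch_size=16,
                                 learning_rate=4e-5, cosine_decay=True)


def total_steps(cfg: FinetuneConfig, num_examples: int) -> int:
    """Optimizer steps for a full run (last partial batch counts as a step)."""
    return cfg.epochs * math.ceil(num_examples / cfg.batch_size)


def lr_at(cfg: FinetuneConfig, step: int, num_steps: int) -> float:
    """Learning rate at `step`; half-cosine decay from the peak to 0 if enabled."""
    if not cfg.cosine_decay:
        return cfg.learning_rate
    progress = min(step / num_steps, 1.0)
    return cfg.learning_rate * 0.5 * (1.0 + math.cos(math.pi * progress))


n = total_steps(CRAMMED_SETTING, num_examples=1000)
print(n, lr_at(CRAMMED_SETTING, 0, n), lr_at(CRAMMED_SETTING, n, n))
```

Halving the batch size doubles the number of optimizer steps per epoch, which is one reason the smaller batch and higher peak rate interact.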

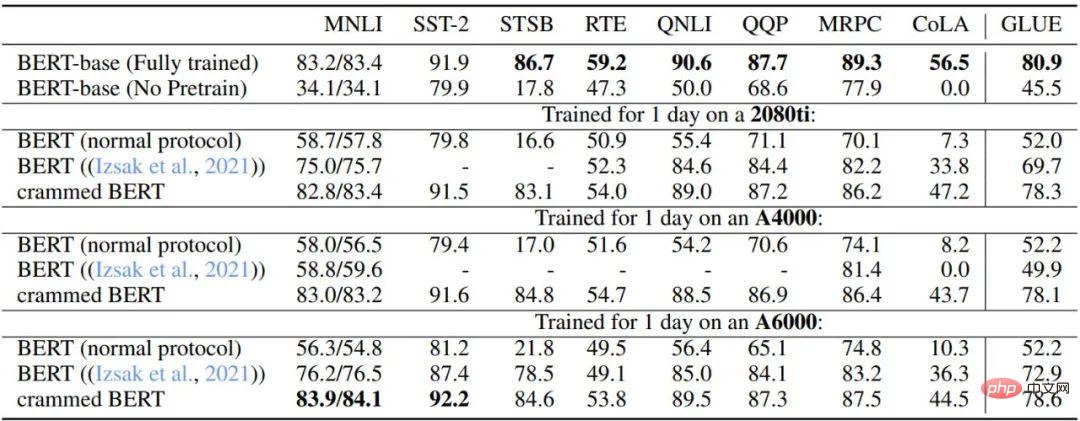

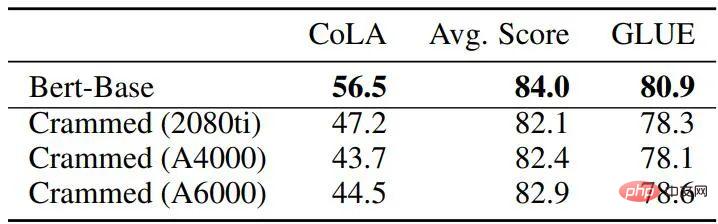

Tables 3 and 4 report the performance of this setup on the GLUE downstream tasks. The authors compared the original BERT-base checkpoint, a BERT pre-training run stopped once it reaches the compute limit, the setting described by Izsak et al. (2021), and the modified Cramming setting trained for one day on a single GPU. Overall, performance is surprisingly good, especially on larger datasets such as MNLI, QQP, QNLI, and SST-2, where downstream fine-tuning can smooth out the remaining differences between the full BERT model and the crammed variant.

In addition, the authors found that the new method is a clear improvement over both ordinary BERT training under the same compute limit and the method of Izsak et al. The Izsak et al. method was originally designed for a full 8-GPU blade server; in the new scenario, cramming its BERT-large model onto a single, smaller GPU accounts for most of its drop in performance.

Table 3: GLUE-dev performance comparison of baseline BERT and Cramming version models

Hyperparameters are fixed across all tasks and fine-tuning is limited to 5 epochs; missing values are reported as NaN. The Izsak et al. setting was designed for an 8-GPU blade server; here, all of its computation is crammed onto a single GPU.

Table 4: GLUE-dev performance comparison between baseline BERT and the crammed model

Overall, with the method in the paper, the training results come very close to the original BERT, but note that the original BERT used 45 to 136 times more total FLOPs than the new method (four days on 16 TPUs). When the training time is extended 16 times (two days of training on 8 GPUs), the new method improves considerably and actually outperforms the original BERT, reaching the level of RoBERTa.
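The "16 times" figure is simple device-time arithmetic, sketched below. Device-days are not comparable across hardware types (the original BERT ran on TPUs, the crammed runs on GPUs), which is why the comparison with BERT is stated in total FLOPs; the 45 to 136 times range cannot be derived from these counts and is not recomputed here. The helper name `device_days` is hypothetical.

```python
def device_days(num_devices: int, days: float) -> float:
    """Total device-time of a training run, in device-days."""
    return num_devices * days


cramming = device_days(1, 1)        # one GPU for one day
extended = device_days(8, 2)        # eight GPUs for two days
original_bert = device_days(16, 4)  # sixteen TPUs for four days (different hardware)

print(extended / cramming)  # 16.0
```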

This work examined how much performance transformer-based language models can achieve in a severely compute-limited environment. Fortunately, several modifications yield good downstream performance on GLUE. The researchers hope this work can serve as a baseline for further improvements and provide further theoretical support for the many improvements and techniques proposed for the transformer architecture in recent years.
