
Paper 1: Language models generalize beyond natural proteins

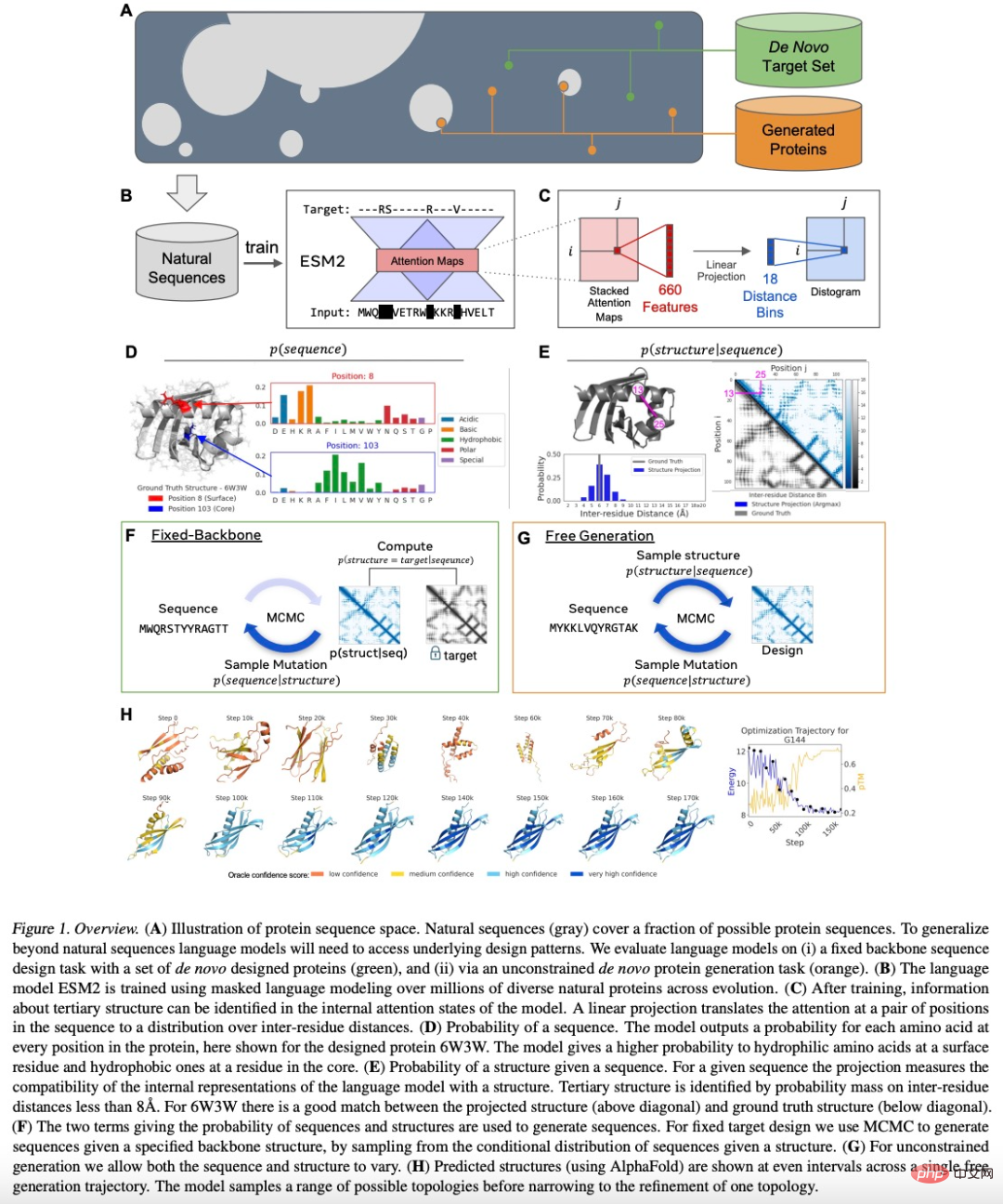

Abstract: The researchers focused on two protein design tasks: fixed-backbone design of specified target structures, and unconstrained generation, in which structures are sampled from the model. Although the language models were trained only on sequences, the study found that they could design structures. In the experiments, a total of 228 proteins were generated, with a design success rate of 152/228 (67%). Of the 152 experimentally successful designs, 35 had no obvious sequence match to any known native protein.

For fixed-backbone design, the language model successfully generated designs for eight experimentally evaluated, human-designed fixed-backbone targets.

For unconstrained generation, the sampled proteins span diverse topologies and secondary-structure compositions, with a high experimental success rate of 71/129 (55%).

Figure 1 illustrates the overall process of protein design using the ESM2 model.

Recommendation: The study finds that the ESM2 language model, by learning the deep grammar of proteins, can generate new proteins beyond those found in nature.

Paper 2: A high-level programming language for generative protein design

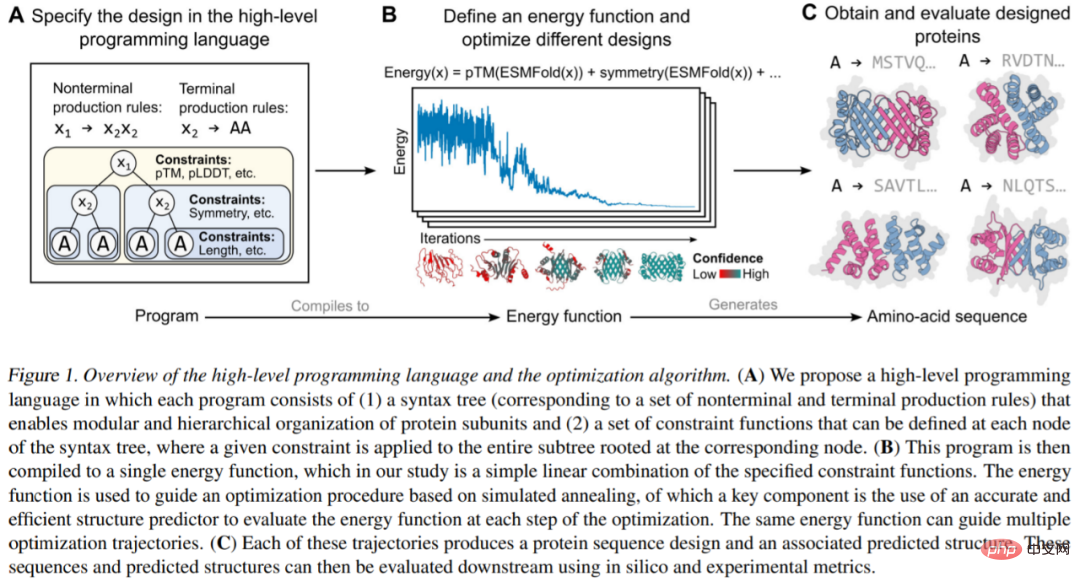

Abstract: Starting from modularity and programmability, FAIR researchers raise protein design to a higher level of abstraction: a protein designer only needs to recombine high-level instructions and then execute them on a generative model. The proposed programming language for generative protein design lets designers specify intuitive, modular, and hierarchical procedures. A program is first expressed as a syntax tree (Figure 1A) made up of terminal symbols (the leaves of the tree) and non-terminal symbols (the internal nodes). Each terminal corresponds to a unique protein sequence segment, which may be repeated within the protein, while non-terminals support hierarchical organization.

An energy-based generative model is also required. First, the protein designer specifies a high-level program consisting of a hierarchically organized set of constraints (Figure 1A). The program is then compiled into an energy function that scores how compatible a design is with those constraints, which can be arbitrary and non-differentiable (Figure 1B). Finally, by incorporating atomic-level structure predictions (enabled by language models) into the energy function, a large number of complex protein designs can be generated (Figure 1C).
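A toy Python sketch of the program-to-energy idea, under heavy assumptions: terminals are designable sequence segments, non-terminals attach constraints, and "compiling" the program simply means summing every node's constraint energies over the sequence that node spans. All names (`Segment`, `Node`, `compile_energy`, `anneal`) are hypothetical. The constraints are made-up sequence-only heuristics standing in for the structure-prediction terms the paper uses, and simulated annealing stands in for whatever sampler the paper actually uses. Because the sampler only ever evaluates the energy, constraints may be non-differentiable.

```python
from __future__ import annotations

import math
import random
from dataclasses import dataclass, field
from typing import Callable

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
HYDROPHOBIC = set("AILMFVW")
Constraint = Callable[[str], float]  # sequence -> energy (lower is better)


@dataclass
class Segment:
    """Terminal symbol: a designable sequence segment; reusing a name repeats it."""
    name: str
    length: int


@dataclass
class Node:
    """Non-terminal symbol: groups children and attaches constraints to the group."""
    children: list[Segment | Node]
    constraints: list[Constraint] = field(default_factory=list)


def render(tree: Segment | Node, seqs: dict[str, str]) -> str:
    """Expand the syntax tree into one full sequence."""
    if isinstance(tree, Segment):
        return seqs[tree.name]
    return "".join(render(child, seqs) for child in tree.children)


def segment_lengths(tree: Segment | Node) -> dict[str, int]:
    """Collect every distinct terminal and its length."""
    if isinstance(tree, Segment):
        return {tree.name: tree.length}
    out: dict[str, int] = {}
    for child in tree.children:
        out.update(segment_lengths(child))
    return out


def compile_energy(tree: Node) -> Callable[[dict[str, str]], float]:
    """'Compile' the program: sum each node's constraints over the sequence it spans."""
    def energy(seqs: dict[str, str]) -> float:
        total, stack = 0.0, [tree]
        while stack:
            node = stack.pop()
            if isinstance(node, Node):
                span = render(node, seqs)
                total += sum(c(span) for c in node.constraints)
                stack.extend(node.children)
        return total
    return energy


def anneal(tree: Node, steps: int = 3000, t0: float = 1.0, seed: int = 0):
    """Simulated annealing over point mutations; only energy values are needed."""
    rng = random.Random(seed)
    energy = compile_energy(tree)
    seqs = {n: "".join(rng.choice(AMINO_ACIDS) for _ in range(k))
            for n, k in segment_lengths(tree).items()}
    e = energy(seqs)
    for step in range(steps):
        t = t0 * (1 - step / steps) + 1e-3  # linear cooling schedule
        name = rng.choice(sorted(seqs))
        s = seqs[name]
        i = rng.randrange(len(s))
        cand = {**seqs, name: s[:i] + rng.choice(AMINO_ACIDS) + s[i + 1:]}
        e_new = energy(cand)
        # Metropolis rule: always accept improvements, sometimes accept worse moves.
        if e_new <= e or rng.random() < math.exp((e - e_new) / t):
            seqs, e = cand, e_new
    return seqs, e


def hydrophobic_fraction(s: str) -> float:
    return sum(a in HYDROPHOBIC for a in s) / len(s)


# Hypothetical program: helix - linker - helix, where both helices are the same terminal.
helix = Node([Segment("helix", 12)], [lambda s: s.count("P") + s.count("G")])
linker = Node([Segment("linker", 4)], [lambda s: sum(a not in "GS" for a in s)])
program = Node([helix, linker, helix],
               [lambda s: 10 * abs(hydrophobic_fraction(s) - 0.4)])

seqs, e = anneal(program)
print(render(program, seqs), round(e, 3))
```

Because the helix terminal is reused, both copies are always identical, mirroring the repeated terminals described in the abstract; the hierarchy lets each constraint see exactly the span it belongs to.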

Recommendation: Programmatically generate complex and modular protein structures.

Paper 3: DOC: Improving Long Story Coherence With Detailed Outline Control

Abstract: Some time ago, the team released Re^3, a language-model-based system that imitates the human writing process. Rather than fine-tuning a large model, Re^3 generates coherent stories through carefully designed prompts.

Now, the research team has proposed a new model for generating stories, DOC. The authors of the paper, Kevin Yang and Tian Yuandong, also posted on Twitter to promote the DOC model, saying that the stories generated by DOC are more coherent and interesting than those generated by Re^3.

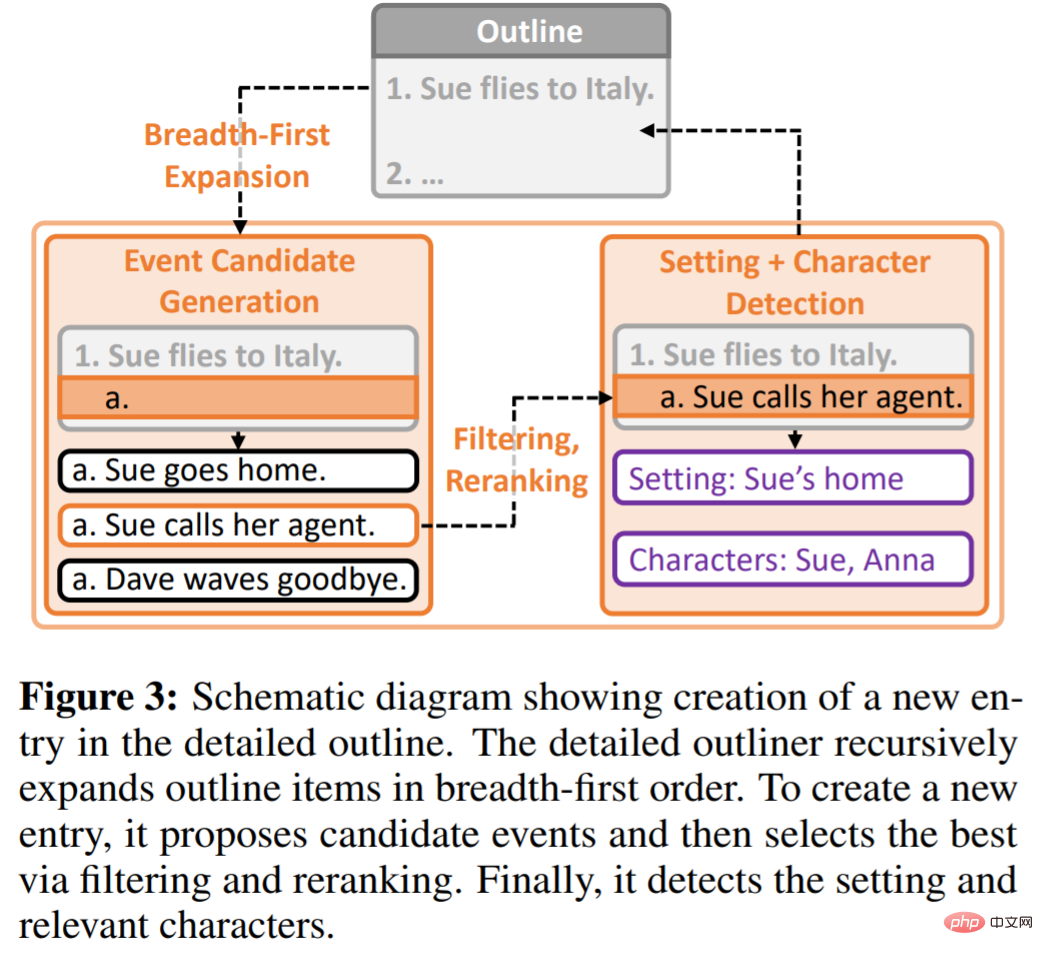

DOC stands for Detailed Outline Control, a framework for improving plot coherence when automatically generating stories that are thousands of words long. It consists of two complementary components: the Detailed Outliner and the Detailed Controller.

The Detailed Outliner creates a detailed, hierarchically structured outline, shifting creative work from the drafting stage to the planning stage. The Detailed Controller ensures that the generated text follows this outline by controlling the alignment between story passages and outline details.
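As a rough illustration of how the two components fit together, here is a Python sketch of a hierarchical outline plus a crude stand-in for the controller that picks, among candidate continuations, the one that best matches the current outline point. Everything here is hypothetical: `OutlineItem`, `alignment`, and `pick_passage` are our own names, the candidates would in practice come from a language model, and the word-overlap score is only a placeholder for whatever learned scoring the actual Detailed Controller uses.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field


@dataclass
class OutlineItem:
    """One node of a (hypothetical) hierarchical story outline."""
    text: str
    children: list[OutlineItem] = field(default_factory=list)

    def leaves(self) -> list[str]:
        """Most detailed outline points, left to right (i.e., in story order)."""
        if not self.children:
            return [self.text]
        return [leaf for child in self.children for leaf in child.leaves()]


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z']+", text.lower()))


def alignment(passage: str, outline_point: str) -> float:
    """Jaccard word overlap: a crude placeholder for a learned relevance score."""
    a, b = words(passage), words(outline_point)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def pick_passage(candidates: list[str], outline_point: str) -> str:
    """Controller step: keep the candidate that best follows the outline point."""
    return max(candidates, key=lambda c: alignment(c, outline_point))


outline = OutlineItem("A lighthouse mystery", [
    OutlineItem("Act 1", [
        OutlineItem("Mara finds a letter in the old lighthouse"),
        OutlineItem("Mara confronts her brother about the letter"),
    ]),
    OutlineItem("Act 2", [OutlineItem("A storm floods the harbor town")]),
])
candidates = [
    "The storm rolled in over the harbor town.",
    "Mara climbed the old lighthouse and found a letter.",
]
point = outline.leaves()[0]
print(point, "->", pick_passage(candidates, point))
```

The outline's leaves act as a step-by-step plan; a generator would walk them in order and, at each step, let the controller filter its continuations.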

In a human evaluation of automatically generated stories, DOC achieved substantial gains over Re^3 on multiple metrics: plot coherence (22.5%), outline relevance (28.2%), and interestingness (20.7%). In addition, DOC is easier to control in interactive generation settings.

Recommendation: Another new work from Tian Yuandong and the original Re^3 team: AI-generated long stories that remain coherent and interesting even at thousands of words.

Paper 4: Scalable Diffusion Models with Transformers

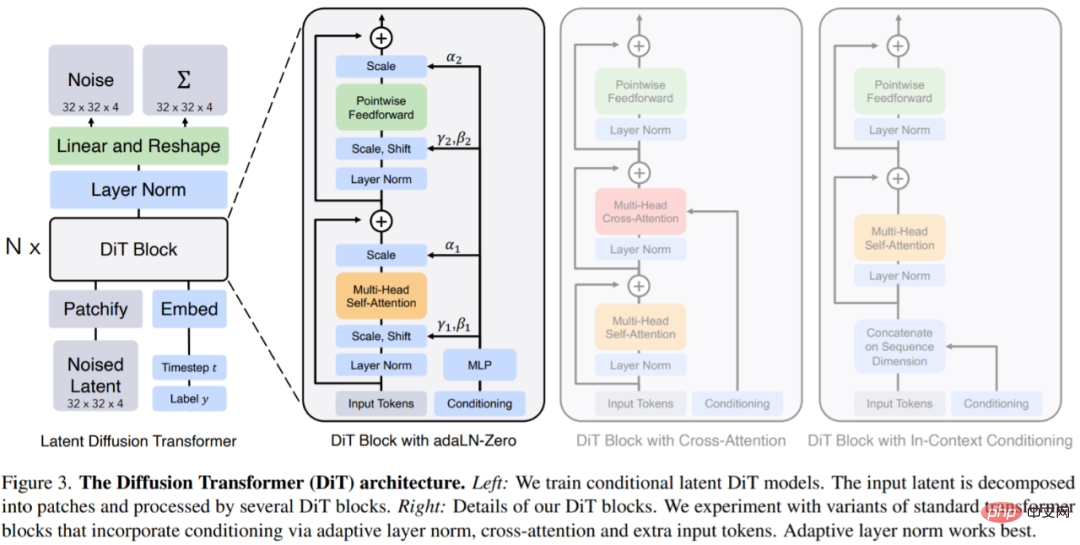

Abstract: In "Scalable Diffusion Models with Transformers", William Peebles of UC Berkeley and Xie Saining of New York University aim to uncover the significance of architectural choices in diffusion models and to provide empirical baselines for future generative-model research. The study shows that the U-Net inductive bias is not critical to diffusion model performance and can easily be replaced with standard designs such as transformers.
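DiT borrows the ViT recipe of turning its input into a sequence of patch tokens. A minimal NumPy sketch of that "patchify" step follows, assuming the setup DiT uses for 256 × 256 images: a VAE latent of shape 4 × 32 × 32 split into p × p patches. The function name `patchify` is ours, and the real model additionally applies a learned linear embedding and positional embeddings, both omitted here.

```python
import numpy as np


def patchify(latent: np.ndarray, p: int) -> np.ndarray:
    """Split a (C, H, W) latent into a (num_patches, p*p*C) token sequence."""
    c, h, w = latent.shape
    if h % p or w % p:
        raise ValueError("spatial size must be divisible by the patch size")
    x = latent.reshape(c, h // p, p, w // p, p)  # (C, H/p, p, W/p, p)
    x = x.transpose(1, 3, 2, 4, 0)               # (H/p, W/p, p, p, C)
    return x.reshape((h // p) * (w // p), p * p * c)


# Assumed latent for a 256 x 256 image: 4 channels at 32 x 32 resolution.
latent = np.random.default_rng(0).standard_normal((4, 32, 32))
for p in (8, 4, 2):
    tokens = patchify(latent, p)
    print(f"p={p}: {tokens.shape[0]} tokens of dimension {tokens.shape[1]}")
```

Halving p quadruples the number of tokens, (32/p)², and with it the transformer's compute, which is one way to raise network complexity (Gflops) without changing the model's width or depth.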

This research focuses on a new class of transformer-based diffusion models: Diffusion Transformers (DiTs for short). DiTs follow the best practices of Vision Transformers (ViTs), which have been shown to scale more effectively than traditional convolutional networks such as ResNet, with a few small but important tweaks. Specifically, the paper studies the scaling behavior of transformers with respect to network complexity and sample quality. By constructing and benchmarking the DiT design space within the latent diffusion model (LDM) framework, where the diffusion model is trained in the latent space of a VAE, the authors show that the U-Net backbone can be successfully replaced with a transformer. The paper further shows that DiT is a scalable architecture for diffusion models: network complexity (measured in Gflops) correlates strongly with sample quality (measured by FID). By simply scaling up DiT and training an LDM with a high-capacity backbone (118.6 Gflops), the authors achieve a state-of-the-art FID of 2.27 on the class-conditional 256 × 256 ImageNet generation benchmark.

Recommendation: The U-Net that dominates diffusion models may be replaced: Xie Saining et al. bring transformers to diffusion with DiT.

Paper 5: Point-E: A System for Generating 3D Point Clouds from Complex Prompts

Abstract: OpenAI's open-source 3D model generator Point-E has set off a new wave of excitement in the AI community. According to the paper released alongside the code, Point-E can generate 3D models in one to two minutes on a single Nvidia V100 GPU. By comparison, existing systems such as Google's DreamFusion typically require hours and multiple GPUs. Point-E does not output a 3D image in the traditional sense; it generates a point cloud, a discrete set of data points in space that represents a 3D shape. The "E" in Point-E stands for "efficiency", reflecting that it is faster than previous 3D object generation methods.
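To make "a discrete set of data points in space" concrete, here is a NumPy sketch of a point cloud as an (N, 3) array of coordinates with an optional (N, 3) array of per-point RGB colors. The sphere sampler, the color, and the point counts are illustrative assumptions, not Point-E's pipeline. The mean nearest-neighbor spacing shows why detail smaller than the gap between points cannot be represented, and the arrays contain no faces at all, so a surface only exists after a separate meshing step.

```python
import numpy as np


def sphere_point_cloud(n: int, radius: float = 1.0) -> np.ndarray:
    """Return an (n, 3) array of points spread evenly over a sphere (Fibonacci lattice)."""
    i = np.arange(n) + 0.5
    z = 1.0 - 2.0 * i / n                     # evenly spaced heights in (-1, 1)
    r = np.sqrt(1.0 - z**2)                   # circle radius at each height
    theta = np.pi * (3.0 - np.sqrt(5.0)) * i  # golden-angle increments
    return radius * np.stack([r * np.cos(theta), r * np.sin(theta), z], axis=1)


def mean_nn_spacing(points: np.ndarray) -> float:
    """Average distance from each point to its nearest neighbor (O(N^2) memory)."""
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)
    return float(d.min(axis=1).mean())


coords = sphere_point_cloud(1024)
colors = np.tile([0.8, 0.2, 0.2], (len(coords), 1))  # one RGB value per point
print(coords.shape, colors.shape)                     # (1024, 3) (1024, 3)
for n in (256, 1024):
    print(n, "points -> mean spacing", round(mean_nn_spacing(sphere_point_cloud(n)), 3))
```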
While point clouds are easier to synthesize computationally, they cannot capture the fine-grained shape or texture of objects, which is currently a key limitation of Point-E. To address this, the OpenAI team trained an additional AI system to convert Point-E's point clouds into meshes.

Recommendation: 3D text-to-image AI is here: OpenAI's model generates a 3D object on a single GPU in under a minute.

Paper 6: Reprogramming to recover youthful epigenetic information and restore vision

Abstract: On December 2, 2020, a striking phrase appeared on the cover of the top scientific journal Nature: "Turning Back Time". The cover study comes from the team of David Sinclair, a tenured professor at Harvard Medical School. Although the paper is only a few pages long, it opens up a new prospect: using gene therapy to reprogram retinal ganglion cells and restore youthful epigenetic information, allowing the optic nerve to regenerate after injury and reversing vision loss caused by glaucoma and aging.

David Sinclair said that his team's research goal has always been to slow and reverse human aging and to treat diseases by addressing their causes rather than their symptoms. Building on the 2020 study, the team is testing an age-reversal technology called "REVIVER" in non-human primates to see whether it is safe and treats blindness as it does in mice.

The latest research comes from David Sinclair and a team of 60 people led by him. As he puts it in his book Lifespan, aging is like scratches on a CD that can be erased, or like corrupted software that can be restored simply by reinstalling it. In the preprint, the authors state that all living things lose epigenetic information over time and gradually lose cellular function.
Using a transgenic mouse system called ICE (for Inducible Changes in the Epigenome), the researchers show that repairing non-mutagenic DNA breaks accelerates age-related physiological, cognitive, and molecular changes, including epigenetic erosion, loss of cellular identity, and cellular senescence. According to the researchers, epigenetic reprogramming through ectopic expression can restore youthful gene expression patterns.

Recommendation: Research on reversing aging.

Paper 7: Training Robots to Evaluate Robots: Example-Based Interactive Reward Functions for Policy Learning

Abstract: Physical interactions often help reveal less obvious information: we might pull on a table leg to assess whether it is stable, or turn a water bottle upside down to check whether it leaks. The study suggests that such interactive behavior can be acquired automatically by training a robot to evaluate the outcomes of a robot's attempts to perform a skill. These evaluations in turn serve as interactive reward functions (IRFs) for training reinforcement learning policies to perform target skills, such as tightening table legs. IRFs can also serve as a verification mechanism that improves online task execution even after training is complete. For any given task, IRFs are convenient to train and require no further specification. Evaluation results show that IRFs achieve significant performance improvements and even outperform baselines that have access to demonstrations or carefully engineered rewards. For example, in the figure below, the robot must first close the door and then rotate the symmetric door handle to fully lock it.
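A toy Python sketch of the two roles an IRF plays in the abstract: a reward signal (the `interactive_reward` function could be handed to any RL trainer) and an execution-time check that lets the robot retry until the evaluator is satisfied. Everything is hypothetical: the "environment" is a random number, and the evaluator is hand-written, whereas in the paper it is learned from examples; only the verification loop is actually exercised here.

```python
import random


def attempt_tighten(rng: random.Random, skill: float) -> float:
    """Hypothetical environment: one tightening attempt; returns leftover looseness (0 = tight)."""
    return max(0.0, rng.random() - skill)


def interactive_reward(looseness: float, push_force: float = 1.0, tol: float = 0.05) -> float:
    """Hypothetical IRF: push on the leg and return 1.0 if it barely wobbles, else 0.0.

    In the paper this evaluator is learned; here it is a hand-written stand-in.
    """
    wobble = push_force * looseness
    return 1.0 if wobble < tol else 0.0


def execute_with_verification(rng: random.Random, skill: float, max_attempts: int):
    """Use the IRF as a check at execution time: retry until it reports success."""
    for attempt in range(1, max_attempts + 1):
        if interactive_reward(attempt_tighten(rng, skill)) == 1.0:
            return attempt
    return None


def success_rate(skill: float, max_attempts: int, trials: int = 1000, seed: int = 0) -> float:
    rng = random.Random(seed)
    wins = sum(execute_with_verification(rng, skill, max_attempts) is not None
               for _ in range(trials))
    return wins / trials


print("single attempt:  ", success_rate(0.5, max_attempts=1))
print("with IRF retries:", success_rate(0.5, max_attempts=5))
```

With one attempt the check can only report failure; allowing retries turns the same evaluator into a way to recover from failures during execution.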

The above is the detailed content of CoRL 2022 Excellent Paper; Language Model Generates Proteins Not Found in Nature.