General-purpose large models are gradually replacing proprietary models customized for specific tasks, and this has significantly reduced the marginal cost of applying AI models. This raises a question: is zero-shot information extraction feasible without any training?

Information extraction is an important part of building a knowledge graph. If it can be done with no training at all, it will greatly lower the barrier to data analysis and help automate knowledge base construction.

We build a general zero-shot IE system, GPT4IE (GPT for Information Extraction), by applying prompt engineering to GPT-3.5, and find that GPT-3.5 can automatically extract structured information from raw sentences. The tool supports both Chinese and English, and its code is open source.

Tool URL: https://cocacola-lab.github.io/GPT4IE/

Code: https://github.com/cocacola-lab/GPT4IE

The goal of information extraction (IE) is to extract structured information from unstructured text. It includes entity-relation triple extraction (RE), named entity recognition (NER), and event extraction (EE) [1][2][3][4][5]. Many studies have begun to rely on zero-shot/few-shot IE techniques to automate work, such as clinical IE [6].

Recently, large pre-trained language models (LLMs) have performed extremely well on many downstream tasks, even when given only a few examples as guidance and without any fine-tuning. This raises a question: can zero-shot IE tasks be carried out through prompts alone? We use prompting to build a general zero-shot IE system on top of GPT-3.5, called GPT4IE (GPT for Information Extraction). By combining GPT-3.5 with prompts, it can automatically extract structured information from raw sentences.

We design a task-specific prompt template and fill its slots with the values the user supplies to form a prompt, which is then sent to GPT-3.5 to perform IE. Three tasks are supported: RE, NER, and EE, each in both Chinese and English. The user enters a sentence and specifies a list of extraction types (i.e., a relation list, head entity list, tail entity list, entity type list, or event list). The details are as follows:

The goal of the RE task is to extract triples from text, such as "(China, capital, Beijing)" and "(Ruyi's Royal Love in the Palace, starring, Zhou Xun)". The required input format is as follows (items marked with "*" are optional fields. We set default values for them, but for flexibility, user-defined lists are also supported; the same applies below):

The NER task is designed to extract entities from text, such as "(LOC, Beijing)" and "(Character, Zhou Enlai)". For the NER task, the input format is as follows:

The EE task aims to extract events from plain text, such as "{Life-Divorce: {Person: Bob, Time: today, Place: America}}" and "{Competition Behavior-Promotion: {Time: None, Promotion Party: Northwest Wolves, Promotion Event: Battle for the top spot in the Chinese Premier League}}". The input format is as follows:

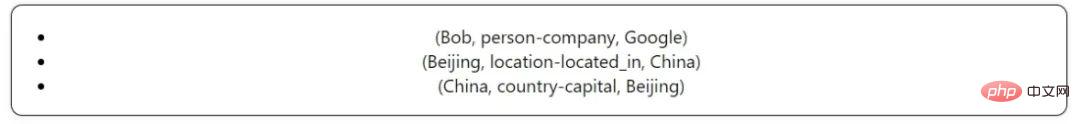
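The template-filling step described above can be sketched roughly as follows. This is a minimal illustration, not GPT4IE's actual prompts: the template wording, the `TEMPLATES` dictionary, and the `build_prompt` helper are all assumptions introduced here. Only the slot names (`rtl`, `stl`, `otl`, `etl`) follow the examples in this article.

```python
from string import Template

# Hypothetical prompt templates, one per task. The wording is an assumption
# made for illustration; it is not taken from the GPT4IE source code.
TEMPLATES = {
    "RE": Template(
        'Sentence: "$sentence"\n'
        "Relation types: $rtl\n"
        "Subject types: $stl\n"
        "Object types: $otl\n"
        "List every (subject, relation, object) triple in the sentence "
        "that uses only the types above."
    ),
    "NER": Template(
        'Sentence: "$sentence"\n'
        "Entity types: $etl\n"
        "List every (type, entity) pair in the sentence."
    ),
    "EE": Template(
        'Sentence: "$sentence"\n'
        "Event types and their argument roles: $etl\n"
        "List every event in the sentence with its arguments."
    ),
}


def build_prompt(task, sentence, **type_lists):
    """Fill the template for `task` with the sentence and the user's type lists."""
    # substitute() raises KeyError if a slot required by the template is missing.
    return TEMPLATES[task].substitute(sentence=sentence, **type_lists)


prompt = build_prompt(
    "NER",
    "Bob worked for Google in Beijing, the capital of China.",
    etl=["LOC", "MISC", "ORG", "PER"],
)
print(prompt)
```

Keeping one template per task means the same helper serves RE, NER, and EE. The resulting string is what would be sent to the model.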

## 3.1 RE Example 1

Input:

Input Sentence: Bob worked for Google in Beijing, the capital of China.

rtl: ['location-located_in', 'administrative_division-country', 'person-place_lived', 'person-company', 'person-nationality', 'company-founders', 'country-administrative_divisions', 'person-children', 'country-capital', 'deceased_person-place_of_death', 'neighborhood-neighborhood_of', 'person-place_of_birth']

stl: ['organization', 'person', 'location', 'country']

otl: ['person', 'location', 'country', 'organization', 'city']

Output:

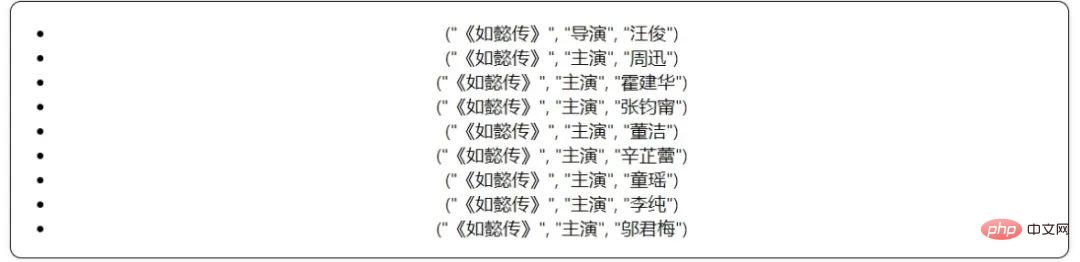
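If the model's reply is plain text containing triples written like "(China, capital, Beijing)", it can be turned into structured data with a small parser. The sketch below is an assumption about post-processing, not part of GPT4IE: the `parse_triples` helper, the regular expression, and the sample `response` string are hypothetical, and the pattern does not handle entities that themselves contain commas or parentheses.

```python
import re

# Matches "(head, relation, tail)" where no field contains a comma or parenthesis.
TRIPLE_PATTERN = re.compile(r"\(([^(),]+),([^(),]+),([^(),]+)\)")


def parse_triples(response, allowed_relations):
    """Extract (head, relation, tail) triples whose relation is in the allowed list."""
    triples = []
    for head, relation, tail in TRIPLE_PATTERN.findall(response):
        head, relation, tail = head.strip(), relation.strip(), tail.strip()
        # Drop anything the model produced outside the user's relation list.
        if relation in allowed_relations:
            triples.append((head, relation, tail))
    return triples


# Hypothetical model reply, written by hand for this example.
response = (
    "(Bob, person-company, Google)\n"
    "(Beijing, location-located_in, China)\n"
    "(Bob, likes, pizza)"
)
rtl = ["location-located_in", "person-company", "country-capital"]
print(parse_triples(response, rtl))
```

Filtering against the relation list is a cheap guard against relation labels that the user never asked for.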

## 3.2 RE Example 2

Input Sentence: "Ruyi's Royal Love in the Palace" is a costume palace romance TV series directed by Wang Jun, starring Zhou Xun, Huo Jianhua, Zhang Junning, Dong Jie, Xin Zhilei, Tong Yao, Li Chun, Wu Junmei and others.

rtl: ['Album', 'Date of establishment', 'Altitude', 'Official language', 'Area', 'Father', 'Singer', 'Producer', 'Director', 'Capital', 'Starring', 'Chairman', 'ancestral home', 'Wife', 'Mother', 'Climate', 'Area', 'Protagonist', 'Postal code', 'Abbreviation', 'Production company', 'Registered capital', 'Screenwriter', 'Founder', 'Graduation school', 'Nationality', 'Professional code', 'Dynasty', 'Author', 'lyrics', 'city', 'guest', 'headquarter location', 'population', 'spokesperson', 'adapted from', 'principal', 'husband', 'host', 'theme song', 'years of study', 'composition', 'number', 'release time', 'box office', 'acting', 'dubbing', 'award-winning']

stl: ['Country', 'Administrative Region', 'Literary Works', 'Characters', 'Film and Television Works', 'School', 'Book Works', 'Place', 'Historical Figures', 'Attractions', 'Song', 'Subject Major', 'Enterprise', 'TV Variety Show', 'Institution', 'Enterprise/Brand', 'Entertainment Figure']

otl: ['Country', 'Person', 'Text', 'Date', 'Place', 'Climate', 'City', 'Song', 'Enterprise', 'Number', 'Music Album', 'School', 'Work', 'Language']

Output:

## 3.3 NER Example 1

Input:

Input Sentence: Bob worked for Google in Beijing, the capital of China.

etl: ['LOC', 'MISC', 'ORG', 'PER']

Output:

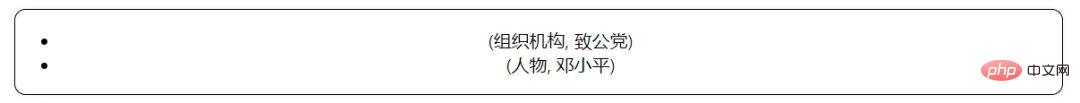

## 3.4 NER Example 2

Input Sentence: In the past five years, under the guidance of Deng Xiaoping Theory, the Zhigong Party has followed the basic line of the primary stage of socialism and worked hard to carry out the basic tasks, proposed at the 10th National Congress of the Zhigong Party, of fulfilling its functions as a participating party and strengthening self-construction.

etl: ['Organization', 'Location', 'People']

Output:

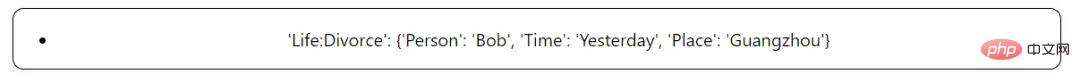

## 3.5 EE Example 1

Input:

Input Sentence: Yesterday Bob and his wife got divorced in Guangzhou.

etl: {'Personnel:Elect': ['Person', 'Entity', 'Position', 'Time', 'Place'], 'Business:Declare-Bankruptcy': ['Org', 'Time', 'Place'], 'Justice:Arrest-Jail': ['Person', 'Agent', 'Crime', 'Time', 'Place'], 'Life:Divorce': ['Person', 'Time', 'Place'], 'Life:Injure': ['Agent', 'Victim', 'Instrument', 'Time', 'Place']}

Output:

## 3.6 EE Example 2

Input:

Input Sentence: In the 2022 Qatar World Cup final, Argentina narrowly defeated France in a penalty shootout.

etl: {'Organizational Behavior-Strike': ['Time', 'Affiliation', 'Number of Strikers', 'Strike Personnel'], 'Competition Behavior-Promotion': ['Time', 'Promotion Party', 'Promotion Event'], 'Finance/Trading-Limited Stock': ['Time', 'Limited Stock'], 'Organizational Relations-Dismissal': ['Time', 'Firing Party', 'Fired Person']}

Output:

## 3.7 EE Example 3 (an interesting error case)

Input:

Input Sentence: I divorced him today

etl: {'Organizational Behavior-Strike': ['Time', 'Organization', 'Number of strikes', 'Strike personnel'], 'Competition Behavior-Promotion': ['Time', 'Promotion Party', 'Promotion Event'], 'Finance/Trading-Limit': ['Time', 'Limit Stock'], 'Organizational Relations-Dismissal': ['Time', 'Dismissal Party', 'Dismissed Personnel']}

Output:
