"General Artificial Intelligence" has now almost become the "water to oil" technology of the 2020s. Almost every half month someone will announce that they have discovered/believed that the performance of a large model has awakened human nature and AI. "Come alive". The one that has caused the most turmoil in this kind of news recently is Google. It is widely known that former researcher Blake Lemoine said that the large language model LaMDA is "alive". As expected, this old man entered the resignation process.

In fact, at almost the same time, The Atlantic ran what read like an advertorial for Google, saying that another new large language model, PaLM, had also become "a true artificial general intelligence".

However, almost no one read that article, so it triggered only a little discussion and criticism.

Yet even when nobody reads it, a piece in a world-class publication praising a world-class company is still, as the old movie line goes, "like a firefly in the dark night, so bright, so outstanding..." On June 19, 2022, The Atlantic published an article titled "Artificial Consciousness Is Boring."

The title the article carries on The Atlantic's website is more direct than its headline: "Google's PaLM AI Is Far Stranger Than Conscious".

The content is as expected: the author's praise after interviewing members of Google Brain's PaLM project team. The model has 540 billion parameters and can complete hundreds of different tasks without task-specific training. It can tell jokes and summarize text. If the user enters a question in Bengali, PaLM can reply in both Bengali and English.

If the user asks it to translate a piece of code from C to Python, PaLM can do that quickly too. But the article gradually shifts from a boastful, advertorial-style interview write-up into outright flattery, announcing that PaLM is "a true artificial general intelligence".

According to the article, PaLM has a function that "startled its own developers, and which requires a certain distance and intellectual coolness not to freak out over. PaLM can reason."

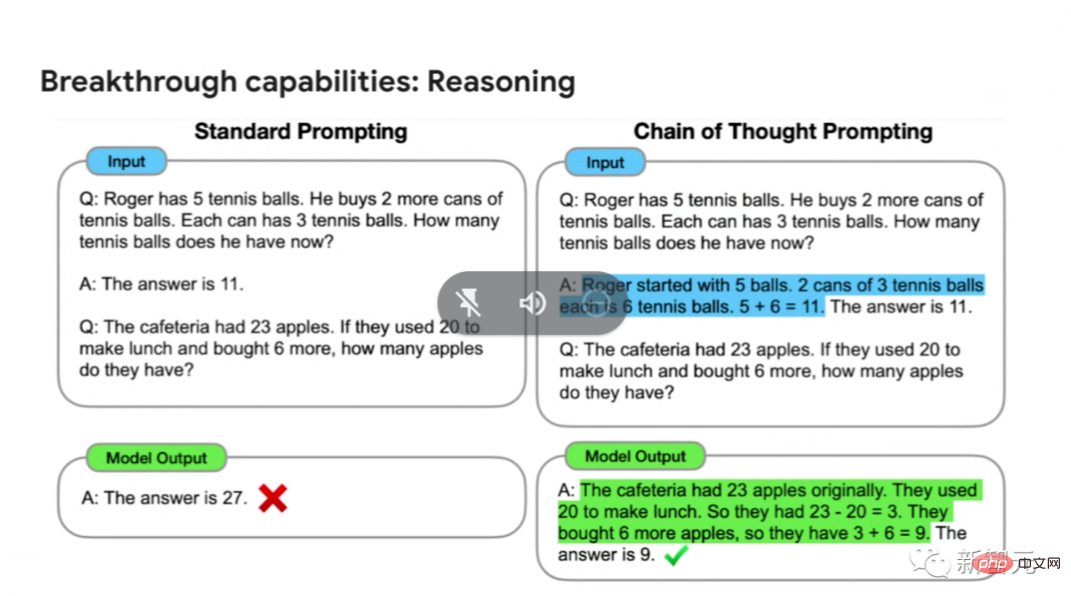

This is the second time this month that a Google large model has been forcibly declared AGI. What is the basis for the claim? According to the article's author, it is that PaLM can "break out of the box" and solve different intelligent tasks on its own, without specific training in advance. In addition, PaLM supports "chain-of-thought prompting". In plain language: once the problem-solving process has been broken down, explained, and demonstrated to the model, PaLM can arrive at the correct answer by itself.
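To make the idea of chain-of-thought prompting concrete, here is a minimal sketch of how such a prompt might be assembled. It only builds the prompt text and does not call PaLM or any other model. The `WorkedExample` class, the `build_chain_of_thought_prompt` function, and the sample questions are all hypothetical names chosen for illustration, not anything from Google's code.

```python
from dataclasses import dataclass


@dataclass
class WorkedExample:
    """One demonstration shown to the model (hypothetical helper type)."""
    question: str
    reasoning: str  # the step-by-step explanation spelled out for the model
    answer: str


def build_chain_of_thought_prompt(examples, new_question):
    """Join worked examples and a new question into one prompt string.

    Each example shows its reasoning before its answer, so the model is
    nudged to write out its own reasoning for the final, unanswered question.
    """
    blocks = [
        f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}."
        for ex in examples
    ]
    blocks.append(f"Q: {new_question}\nA:")
    return "\n\n".join(blocks)


# Illustrative data only; any arithmetic word problem would do.
demo = [
    WorkedExample(
        question="Roger has 5 tennis balls. He buys 2 cans of 3 balls each. "
                 "How many balls does he have now?",
        reasoning="Roger starts with 5 balls. 2 cans of 3 balls is 6 balls. "
                  "5 + 6 = 11.",
        answer="11",
    )
]
prompt = build_chain_of_thought_prompt(
    demo, "A shop had 23 apples, used 20, and bought 6 more. How many are left?"
)
print(prompt)
```

The prompt ends with an open `A:` so that whatever model receives it continues from there. Whether the continuation is correct is exactly what has to be tested, not assumed.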

## The gap between gimmick and evidence is enormous

There is a distinct "I took my pants off for this?" feeling here: it turns out that writers at The Atlantic also have the habit of writing up interviews without checking the facts.

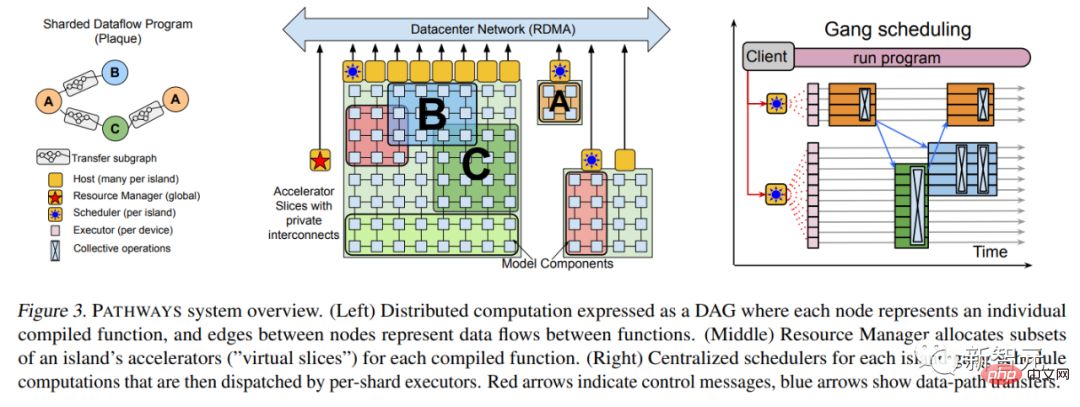

The reason for saying this is that when Jeff Dean led the team that launched PaLM, he did introduce the chain-of-thought prompting capability, but Google Brain would never dare to boast that the product is a "Terminator" that has come to life. In October 2021, Jeff Dean personally wrote an article introducing a new machine learning architecture, Pathways. Its purpose is simple: to enable a single AI to span tens of thousands of tasks, understand different types of data, and do so with extremely high efficiency.

In March 2022, several months later, Jeff Dean finally released the Pathways paper, which adds many technical details, such as the basic system architecture.

Paper address: https://arxiv.org/abs/2203.12533


In April 2022, Google unveiled PaLM, a language model built with the Pathways system. This Transformer language model with 540 billion parameters set new state-of-the-art (SOTA) results on a series of natural language processing tasks. Besides relying on the powerful Pathways system, the paper says, PaLM was trained on 6144 TPU v4 chips using a high-quality dataset of 780 billion tokens, 22% of which is non-English, multilingual text.

Paper address: https://arxiv.org/abs/2204.02311

"Self-supervised learning" and "chain-of-thought prompting" were concepts already familiar to the AI industry; PaLM only takes them a step further in practice. To make matters worse, the article in The Atlantic flatly states that "Google researchers don't know why the PaLM model can achieve this function"... This looks like making extra work for Jeff Dean, as if he had too little to do.
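For a sense of scale, the training figures quoted above can be turned into two simple ratios. This is only back-of-the-envelope arithmetic on the numbers as the article reports them; the variable names are illustrative and nothing here is taken from the paper's own analysis.

```python
# Figures as quoted in the article (not re-derived from the paper).
PARAMETERS = 540_000_000_000       # 540 billion parameters
TRAINING_TOKENS = 780_000_000_000  # 780 billion training tokens
NON_ENGLISH_PERCENT = 22           # share of non-English text, in percent

# How many training tokens the model saw per parameter.
tokens_per_parameter = TRAINING_TOKENS / PARAMETERS

# Integer arithmetic avoids floating-point rounding in the token count.
non_english_tokens = TRAINING_TOKENS * NON_ENGLISH_PERCENT // 100

print(f"tokens per parameter: {tokens_per_parameter:.2f}")
print(f"non-English tokens: {non_english_tokens / 1e9:.1f} billion")
```

On these figures the model saw roughly 1.44 training tokens per parameter, and about 171.6 billion of the tokens were non-English.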

## Critics: The Atlantic's claims are unreliable

Sure enough, Professor Melanie Mitchell of the Santa Fe Institute in New Mexico, USA, posted a thread on her social media account that implicitly but firmly questioned the article in The Atlantic.

Melanie Mitchell said: "This article is very interesting, but I think the author may not have interviewed any AI professionals other than Google researchers.

Take, for example, the evidence offered for the claim that PaLM is 'real AGI'. I personally do not have access to PaLM, but in the paper Google released in April, it shows significant few-shot learning results on only several benchmarks, and not all of PaLM's few-shot results are equally robust.

The paper also does not say how many of the benchmarks used in those tests allow 'shortcut learning', which makes a task easier than it appears. According to the article's wording, PaLM can at the very least complete a wide range of tasks with high reliability, generality, and overall accuracy.

However, neither the article nor Google's April paper describes in detail PaLM's capabilities and limitations with respect to general intelligence, and neither mentions a benchmark for testing that ability.

The claim that 'PaLM can reason' especially needs to be verified. Similar claims about the GPT series were falsified by industry insiders who, thanks to its open access, ran all kinds of experiments on it. If PaLM is to receive such an honor, it should undergo the same degree of adversarial verification.

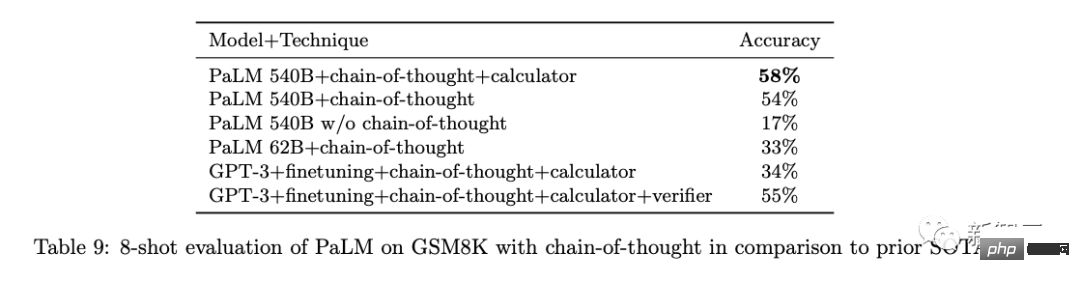

Also, by the admission of Google's own April paper, PaLM's results on reasoning benchmarks are only slightly better than those of several comparable SOTA models; it does not win by much.

Most importantly, the PaLM paper has not been peer reviewed, and the model is not open to any outside access. All of the claims are just talk and cannot be verified, reproduced, or evaluated."
