Abracadabra!

When it comes to generating 3D models from 2D images, NVIDIA has unveiled what it calls "world-class" research: GET3D.

After training on 2D images, the model generates 3D shapes with high-fidelity textures and complex geometric details.

How powerful is it?

GET3D gets its name from its ability to Generate Explicit Textured 3D meshes.

Paper address: https://arxiv.org/pdf/2209.11163.pdf

That is, the shape it creates is in the form of a triangle mesh, like a paper model, covered with a textured material.

The key is that this model can generate a wide variety of high-quality models.

For example: wheels on chair legs; car wheels, lights, and windows; animal ears and horns; motorcycle rearview mirrors and textures on car tires; high heels; human clothes...

Picture a street with unique buildings on both sides, different vehicles whizzing by, and different groups of people passing through. Creating such a 3D virtual world through manual modeling is very time-consuming.

Although previous 3D generative AI models are faster than manual modeling, they still fall short when it comes to producing richly detailed models.

Even the latest inverse rendering methods can only generate 3D objects from 2D images taken from various angles, so developers can only build one 3D object at a time.

GET3D is different.

Developers can easily import generated models into game engines, 3D modelers, and movie renderers to edit them.

When creators export GET3D-generated models to graphics applications, they can apply realistic lighting effects as the model moves or rotates within the scene.

In addition, GET3D can also achieve text-guided shape generation.

By using StyleGAN-NADA, another AI tool from NVIDIA, developers can use text prompts to add a specific style to an image.

For example, you can turn a rendered car into a burned-out car or a taxi.

You can transform an ordinary house into a brick house, a burning house, or even a haunted house.

Or you can apply tiger or panda markings to any animal...

It’s like the Simpsons’ “Animal Crossing”...

According to NVIDIA, GET3D can generate approximately 20 objects per second when running inference on a single NVIDIA GPU.

The larger and more diverse the training dataset it learns from, the more varied and detailed the output will be.

NVIDIA said that the research team trained the model on approximately 1 million images in just 2 days using A100 GPUs.

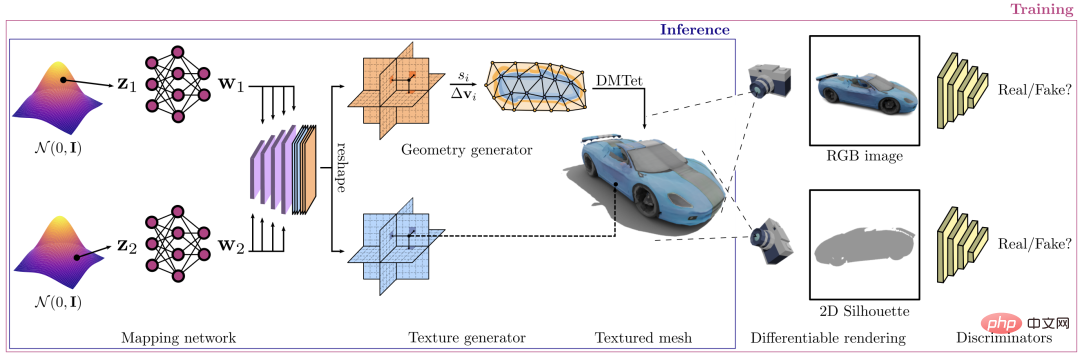

Research methods and processes

The main function of the GET3D framework is to synthesize textured 3D shapes.

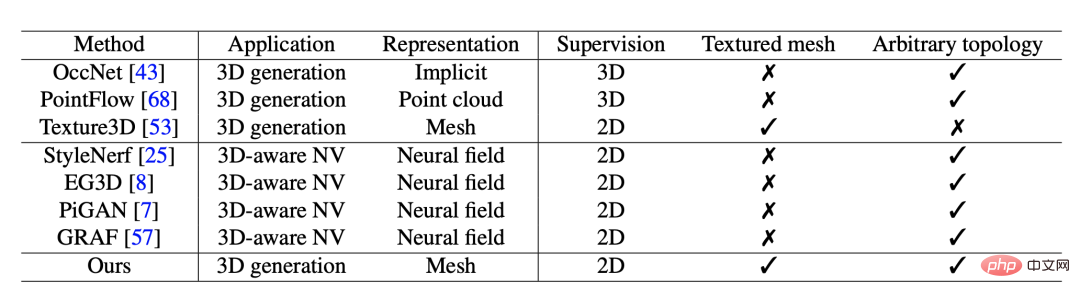

The generation process is divided into two parts. The first is the geometry branch, which can output surface meshes of arbitrary topology. The second is the texture branch, which produces a texture field that can be queried at surface points.
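
The two-branch data flow described above can be sketched in a few lines of Python. This is a toy illustration only, not NVIDIA's implementation: the names `geometry_branch`, `texture_field`, and `generate` are hypothetical, the "mesh" is a fixed tetrahedron that is merely scaled by the geometry code, and the texture field is an arbitrary closed-form function standing in for a learned network.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Face = Tuple[int, int, int]

def geometry_branch(z_geo: List[float]) -> Tuple[List[Vec3], List[Face]]:
    """Hypothetical stand-in: map a geometry code to a triangle mesh.

    A real geometry branch would predict a surface of arbitrary topology;
    here a fixed tetrahedron is only scaled so the example stays runnable.
    """
    scale = 1.0 + 0.1 * sum(z_geo) / max(len(z_geo), 1)
    base = [(1.0, 1.0, 1.0), (1.0, -1.0, -1.0), (-1.0, 1.0, -1.0), (-1.0, -1.0, 1.0)]
    vertices = [(x * scale, y * scale, z * scale) for x, y, z in base]
    faces = [(0, 1, 2), (0, 3, 1), (0, 2, 3), (1, 3, 2)]
    return vertices, faces

def texture_field(z_tex: List[float], point: Vec3) -> Vec3:
    """Hypothetical stand-in: return an RGB colour in [0, 1] for a 3D point."""
    phase = sum(z_tex)
    r, g, b = (0.5 + 0.5 * math.sin(c + phase + i) for i, c in enumerate(point))
    return (r, g, b)

def generate(z_geo: List[float], z_tex: List[float]):
    """Run both branches, then query the texture field at the surface points."""
    vertices, faces = geometry_branch(z_geo)
    colours = [texture_field(z_tex, v) for v in vertices]
    return vertices, faces, colours

vertices, faces, colours = generate([0.3, -0.1], [1.2, 0.4])
print(len(vertices), len(faces), len(colours))
```

In this sketch the texture code never touches the vertex positions, which mirrors the separation between the two branches; only the colours sampled on the surface change when `z_tex` changes.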

During training, a differentiable rasterizer efficiently renders the resulting textured mesh into high-resolution 2D images. The entire process is differentiable, which allows adversarial training from images: the gradients of the 2D discriminator are propagated back to the two generator branches.
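
To make the idea of propagating discriminator gradients through a rendering step concrete, here is a minimal sketch with a single generator parameter. Everything in it is an assumption made for illustration: `render` is a trivial differentiable function standing in for a rasterizer, `discriminator` is a frozen logistic model, and the loss is the common non-saturating GAN generator loss, which the article does not specify. The analytic gradient from the chain rule is checked against a finite-difference estimate.

```python
import math

PATTERN = [0.2, 0.5, 0.9]    # hypothetical fixed "scene" the toy renderer shades
WEIGHTS = [0.7, -0.3, 0.4]   # hypothetical frozen discriminator weights
BIAS = -0.1

def render(theta):
    """Toy differentiable 'renderer': pixel_i = theta * PATTERN[i]."""
    return [theta * p for p in PATTERN]

def discriminator(image):
    """Toy 2D discriminator: probability that the image is real."""
    logit = sum(w * x for w, x in zip(WEIGHTS, image)) + BIAS
    return 1.0 / (1.0 + math.exp(-logit))

def generator_loss(theta):
    """Non-saturating generator loss: -log D(render(theta))."""
    return -math.log(discriminator(render(theta)))

def generator_grad(theta):
    """Chain rule: dL/dtheta = dL/dlogit * sum_i dlogit/dpixel_i * dpixel_i/dtheta."""
    d = discriminator(render(theta))
    dloss_dlogit = -(1.0 - d)  # derivative of -log(sigmoid(logit))
    dlogit_dtheta = sum(w * p for w, p in zip(WEIGHTS, PATTERN))
    return dloss_dlogit * dlogit_dtheta

theta = 0.5
analytic = generator_grad(theta)

# Central finite difference as an independent check of the analytic gradient.
eps = 1e-6
numeric = (generator_loss(theta + eps) - generator_loss(theta - eps)) / (2 * eps)
print(abs(analytic - numeric) < 1e-6)

# One gradient-descent step on the generator parameter lowers its loss.
theta_new = theta - 0.1 * analytic
print(generator_loss(theta_new) < generator_loss(theta))
```

Because `render` is differentiable, the discriminator's feedback reaches `theta` directly. With a non-differentiable renderer the `dpixel_i/dtheta` factor would be unavailable and this update could not be computed.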

The researchers conducted extensive experiments to evaluate the model. They first compared the quality of the 3D textured meshes generated by GET3D against those of existing methods, using the ShapeNet and TurboSquid datasets.

Next, based on the comparison results, the researchers refined the model and conducted further experiments.

GET3D can disentangle geometry from texture.

In the figure, each row shows shapes generated from the same geometry latent code while the texture latent code changes.

Each column shows shapes generated from the same texture latent code while the geometry latent code changes.
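
The row and column layout of such a figure can be sketched as a simple grid over two sets of codes. This is an illustrative assumption, not the paper's code: `toy_generate` is a hypothetical generator whose "shape" depends only on the geometry code and whose "texture" depends only on the texture code, and the grid size and code dimension are arbitrary.

```python
import random

def toy_generate(z_geo, z_tex):
    """Hypothetical stand-in for a generator with disentangled inputs."""
    return {
        "shape": round(sum(z_geo), 6),    # depends only on the geometry code
        "texture": round(sum(z_tex), 6),  # depends only on the texture code
    }

rng = random.Random(0)
geo_codes = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(3)]  # one per row
tex_codes = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(5)]  # one per column

# Rows fix the geometry code; columns fix the texture code.
grid = [[toy_generate(g, t) for t in tex_codes] for g in geo_codes]

rows_share_shape = all(len({cell["shape"] for cell in row}) == 1 for row in grid)
cols_share_texture = all(len({cell["texture"] for cell in col}) == 1 for col in zip(*grid))
print(len(grid), len(grid[0]), rows_share_shape, cols_share_texture)
```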

In addition, the researchers interpolated the geometry latent code from left to right across each row, where every shape is generated from the same texture latent code.
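
Moving between two latent (hidden) codes in even steps, as in one row of such a figure, can be sketched as plain linear interpolation. The article does not say which interpolation scheme the researchers used, so linear interpolation is an assumption here, and the helper names `lerp` and `interpolation_path` as well as the example codes are hypothetical.

```python
def lerp(z_a, z_b, t):
    """Linear interpolation between two latent codes for t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]

def interpolation_path(z_start, z_end, steps):
    """Return `steps` codes evenly spaced from z_start to z_end, inclusive."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(z_start, z_end, i / (steps - 1)) for i in range(steps)]

z_geo_left = [0.0, 1.0, -2.0]   # geometry code at the left end of the row
z_geo_right = [4.0, -1.0, 2.0]  # geometry code at the right end of the row
z_tex_fixed = [0.5, 0.5]        # texture code shared by the whole row

path = interpolation_path(z_geo_left, z_geo_right, 5)

# Each entry pairs an interpolated geometry code with the fixed texture code,
# ready to be passed to a generator.
row = [(z_geo, z_tex_fixed) for z_geo in path]
for z_geo, z_tex in row:
    print(z_geo, z_tex)
```

Swapping the roles of the two codes (fixing the geometry code and interpolating the texture code) gives the corresponding path in the other direction of the grid.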

Likewise, they interpolated the texture latent code from top to bottom across shapes generated from the same geometry latent code. The results show that every interpolation is meaningful to the generated model.

Within each subfigure, GET3D is able to generate smooth transitions between different shapes in all categories.

In each row, the latent code is locally perturbed by adding a small amount of noise. In this way, GET3D can generate shapes that look similar but differ slightly in local details.

The researchers note that future versions of GET3D could use camera pose estimation techniques, letting developers train models on real-world data rather than synthetic datasets.

With further improvements, developers could also train GET3D on a wide variety of 3D shapes in one go, rather than on one object category at a time.

Sanja Fidler, vice president of artificial intelligence research at NVIDIA, said, "GET3D brings us one step closer to the popularization of AI-driven 3D content creation. Its ability to generate textured 3D shapes on the fly could be a game-changer for developers, helping them quickly populate virtual worlds with a variety of interesting objects."

Introduction to the author

The first author of the paper, Jun Gao, is a doctoral student in the machine learning group at the University of Toronto, where his supervisor is Sanja Fidler.

In addition to his academic work, he is a research scientist at the NVIDIA Toronto Artificial Intelligence Laboratory.

His research mainly focuses on deep learning (DL), with the goal of learning structured geometric representations. His work also draws insights from human perception of 2D and 3D images and videos.

This top student comes from Peking University, where he graduated with a bachelor's degree in 2018. While there, he worked with Professor Wang Liwei.

After graduation, he also interned at Stanford University, MSRA, and NVIDIA.

Jun Gao’s supervisor is also a leader in the field.

Fidler is an associate professor at the University of Toronto and a faculty member at the Vector Institute, of which she is a co-founding member.

In addition to teaching, she is the vice president of artificial intelligence research at NVIDIA, where she leads a research laboratory in Toronto.

Before coming to Toronto, she was a research assistant professor at the Toyota Technological Institute at Chicago, an academic institution located on the campus of the University of Chicago.

Fidler’s research focuses on computer vision (CV) and machine learning (ML), particularly the intersection of CV and graphics, 3D vision, 3D reconstruction and synthesis, and interactive methods for image annotation.
