Since Alan Turing first raised the question "Can machines think?" in his seminal 1950 paper "Computing Machinery and Intelligence," the development of artificial intelligence has not been smooth sailing, and the field has yet to achieve its goal of "artificial general intelligence."

However, the field has still made incredible progress: IBM's Deep Blue computer defeated the world's best chess player, self-driving cars were born, and Google DeepMind's AlphaGo defeated the world's best Go player. These achievements represent the best research and development results of the past 65 years.

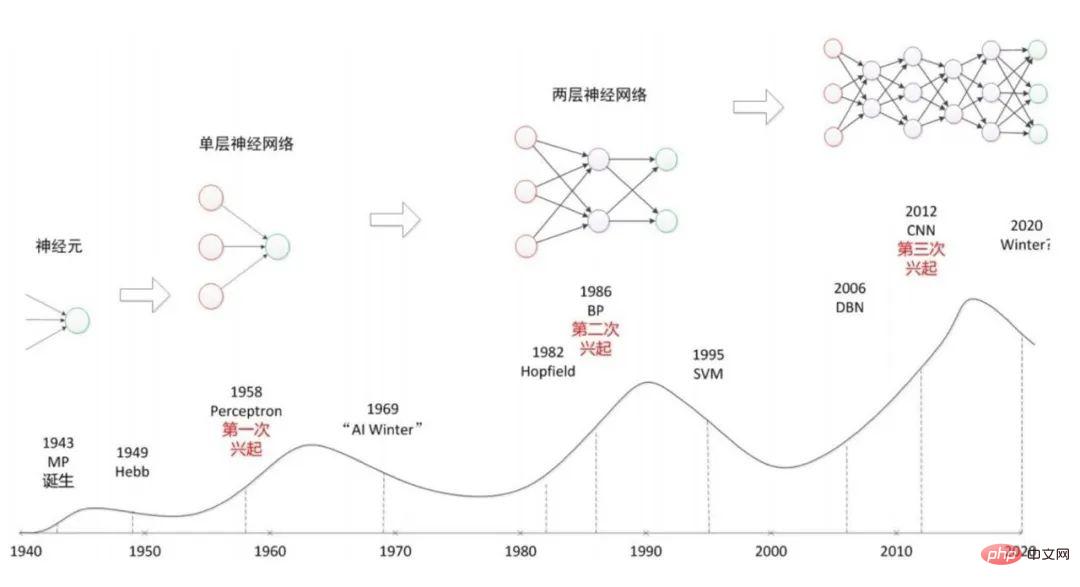

It is worth noting that there were well-documented "AI winters" during this period, which almost completely overturned people's early expectations for artificial intelligence.

One of the factors leading to the AI winter is the gap between hype and actual fundamental progress.

In the past few years, there has been speculation that another AI winter may be coming. So what factors might trigger it?

Cyclic fluctuations in artificial intelligence

"AI winter" refers to a period in which public interest in artificial intelligence fades and commercial and academic investment in developing these technologies tapers off. Artificial intelligence developed rapidly in the 1950s and 1960s, but although there were many advances, they mostly remained academic.

In the early 1970s, people’s enthusiasm for artificial intelligence began to fade, and this gloomy period lasted until around 1980.

During this AI winter, efforts to develop human-like intelligence in machines began to run short of funding.

As a young professor at Dartmouth College, John McCarthy coined the term "artificial intelligence" while drafting a proposal for a workshop.

In the summer of 1956, the researchers who gathered at Dartmouth spent eight weeks imagining a new field of research together.

He proposed that the workshop explore the conjecture that "every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it."

At that meeting, researchers roughly sketched out what we know as artificial intelligence today.

The meeting gave birth to the first camp of AI scientists: "symbolism," an approach that simulates intelligence through logical reasoning, also known as logicism, the psychological school, or the computer school. Its core principles are the physical symbol system hypothesis and the principle of bounded rationality, and it long dominated artificial intelligence research.

The symbolists' expert systems reached their peak in the 1980s.

In the years after the conference, a second camp emerged. "Connectionism" attributes human intelligence to high-level activity in the human brain, emphasizing that intelligence is the result of a large number of simple units running in parallel through complex interconnections.

It starts from neurons and goes on to study neural network models and brain models, opening up another development path for artificial intelligence.

The two approaches have long been considered mutually exclusive, with both sides believing they are on the road to general artificial intelligence.

Looking back over the decades since that meeting, we can see that AI researchers' hopes were often dashed, but these setbacks did not stop them from developing AI.

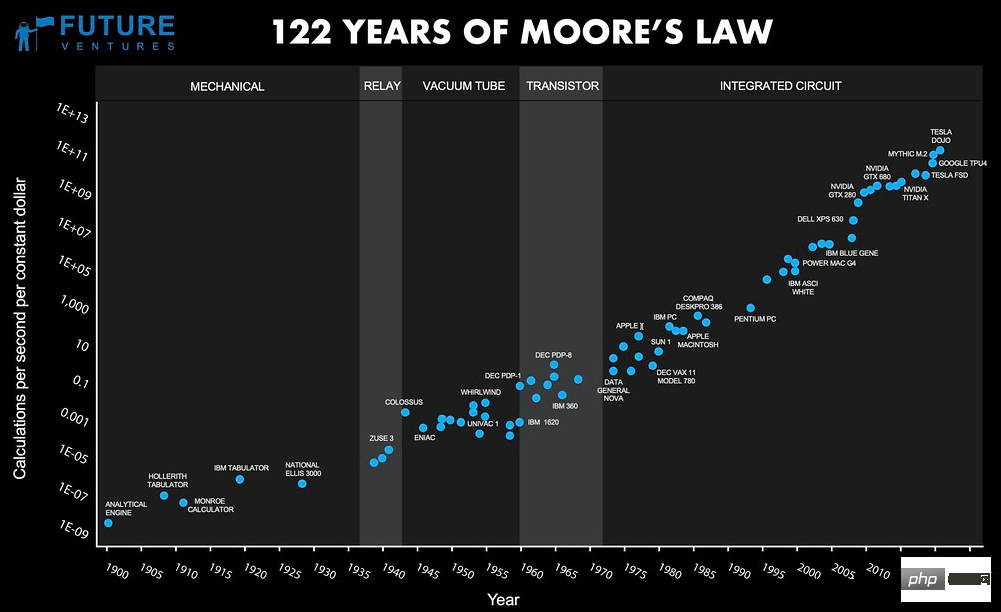

Today, even though artificial intelligence is bringing revolutionary changes to industries and has the potential to disrupt the global labor market, many experts still wonder whether today's AI applications have reached their limits. As Charles Choi describes in "7 Revealing Ways AIs Fail," the weaknesses of today's deep learning systems are becoming increasingly apparent.

However, researchers are not pessimistic about the future of artificial intelligence. We may face another AI winter in the near future, but it may also be the moment when inspired AI engineers finally lead us into an endless summer of machine thinking.

An article by Filip Piekniewski, an expert in computer vision and artificial intelligence, titled "AI Winter Is Well On Its Way," sparked heated discussion online. The article mainly criticizes the hype around deep learning, arguing that the technology is far from revolutionary and is running into development bottlenecks, that major companies' interest in AI is actually narrowing, and that another AI winter may be coming.

Since 1993, the field of artificial intelligence has made increasingly impressive progress. In 1997, IBM's Deep Blue became the first computer chess player to defeat reigning world chess champion Garry Kasparov. In 2005, a Stanford robot won the DARPA Grand Challenge by driving autonomously for 131 miles along an unrehearsed desert trail. In early 2016, Google DeepMind's AlphaGo defeated the world's best Go player. Over the past twenty years, everything has changed. With the booming growth of the Internet in particular, the AI industry now has enough images, audio, video, and other data to train neural networks and deploy them widely.

But the continued success of deep learning has relied on adding more layers to neural networks and spending more GPU time to train them. According to an analysis by the AI research company OpenAI, the computing power used to train the largest AI systems once doubled every two years, but then began doubling every 3 to 4 months. As Neil C. Thompson and colleagues write in "Deep Learning's Diminishing Returns," many researchers worry that AI's demand for computing power is on an unsustainable trajectory.

A common problem in early AI research was a severe lack of computing power: researchers were limited by hardware rather than by human intelligence or ability. Over the past 25 years, as computing power has increased dramatically, so has our progress in artificial intelligence. Now, however, data volumes are surging and algorithms are growing ever more complex. Around 20 ZB of new data is generated worldwide every year, and demand for AI computing power grows tenfold annually, a rate far beyond the performance-doubling cycle described by Moore's Law.

We are approaching the theoretical physical limit on the number of transistors that can fit on a chip. Intel, for example, has slowed the pace at which it launches new chip manufacturing processes, because it is difficult to keep shrinking transistors while also saving costs. In short, the end of Moore's Law is approaching.

[Image source: Ray Kurzweil, DFJ]

There are some short-term solutions that can keep computing power growing and thus keep AI advancing. For example, in mid-2017 Google announced a specialized AI chip called "Cloud TPU," optimized for training and running deep neural networks. Amazon is developing its own chips for Alexa, its AI personal assistant.

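The growth rates quoted above (doubling every two years, doubling every 3 to 4 months, tenfold per year) are easier to compare on a common scale. The short Python sketch below converts a doubling time into a growth multiple per year, and a yearly multiple back into a doubling time. The helper names `annual_growth_factor` and `doubling_time_months` are hypothetical, and the two-year figure used for Moore's Law is the commonly cited approximation, an assumption rather than a precise law.

```python
import math


def annual_growth_factor(doubling_months: float) -> float:
    """Growth multiple over 12 months for a quantity that doubles every `doubling_months` months."""
    return 2 ** (12 / doubling_months)


def doubling_time_months(annual_factor: float) -> float:
    """Doubling time, in months, implied by a given growth multiple per year."""
    return 12 * math.log(2) / math.log(annual_factor)


if __name__ == "__main__":
    # Illustrative scenarios; the 24-month figure is an assumed approximation of Moore's Law.
    scenarios = {
        "Moore's Law pace (doubling every 24 months)": 24,
        "Doubling every 4 months": 4,
        "Doubling every 3 months": 3,
    }
    for label, months in scenarios.items():
        print(f"{label}: x{annual_growth_factor(months):.2f} per year")

    # Convert the "tenfold per year" demand figure into an equivalent doubling time.
    print(f"10x per year -> doubling every {doubling_time_months(10):.1f} months")
```

Under these assumptions, a two-year doubling yields only about 1.41x per year, while doubling every 4 months yields 8x and every 3 months yields 16x. A tenfold yearly increase corresponds to doubling roughly every 3.6 months, which is why demand at that pace quickly outstrips hardware improving at a Moore's Law rate.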
At the same time, many startups are currently trying to adapt chip designs to specialized AI applications. However, these are only short-term solutions. What happens when we run out of ways to optimize traditional chip design? Will we see another AI winter? The answer is yes, unless quantum computing can surpass classical computing and offer a more solid answer. But so far, a quantum computer that achieves "quantum supremacy," meaning it is more efficient than a traditional computer, does not yet exist. If we reach the limit of traditional computing power before true quantum supremacy arrives, I am afraid there will be another AI winter.

The problems AI researchers are grappling with are increasingly complex and are pushing us toward Alan Turing's vision of artificial general intelligence. However, there is still a lot of work to be done, and we are very unlikely to realize the full potential of artificial intelligence without the help of quantum computing.

No one can say for sure whether an AI winter is coming. However, it is important to be aware of the potential risks and watch closely for the signs, so that we are prepared if it does happen. Will the AI winter come?
