With the development of AI technology, the AI technologies used across different businesses are becoming increasingly diverse, while the number of parameters in AI models grows explosively year by year. High development costs, heavy reliance on manual labor, unstable algorithms, and long delivery cycles have become problems that plague AI practitioners when putting algorithms into production. An automatic machine learning platform is the key to relieving this pressure. Today I will share Du Xiaoman's practical experience in building the automatic machine learning platform ATLAS.

First, I will introduce the background, development process, and current state of Du Xiaoman's machine learning platform.

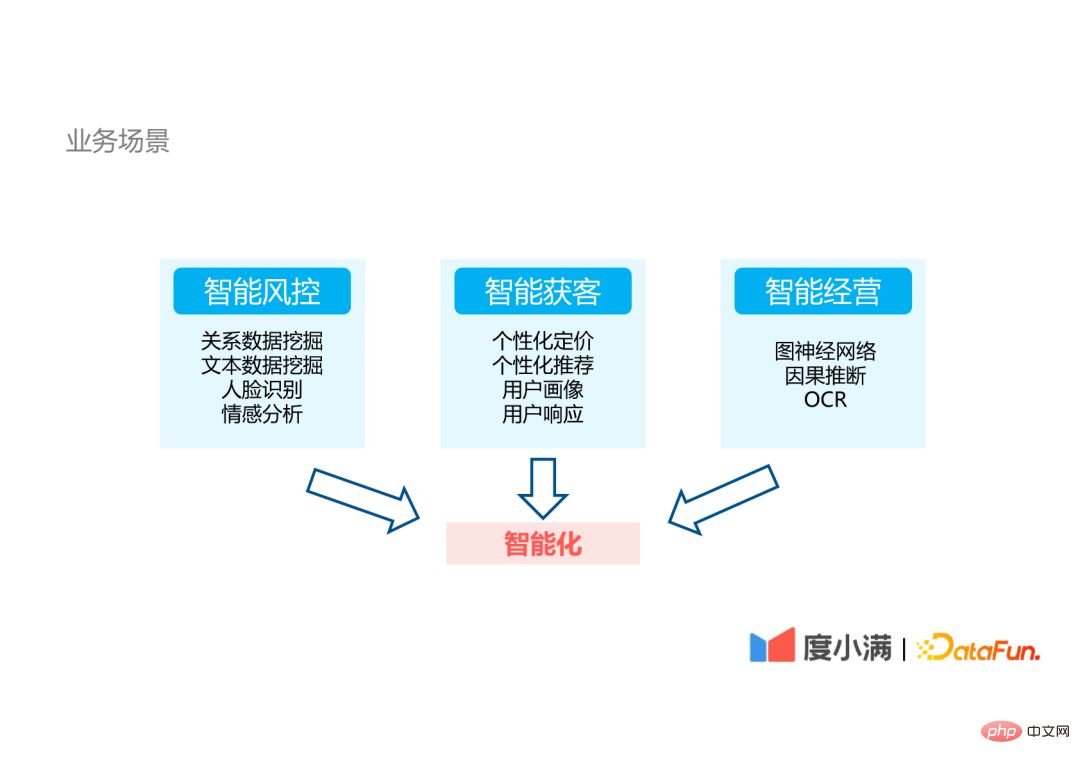

Du Xiaoman is a financial technology company. The company's internal business scenarios fall into three main areas:

Because the AI technologies involved in these businesses are so diverse, implementing AI algorithms is very challenging.

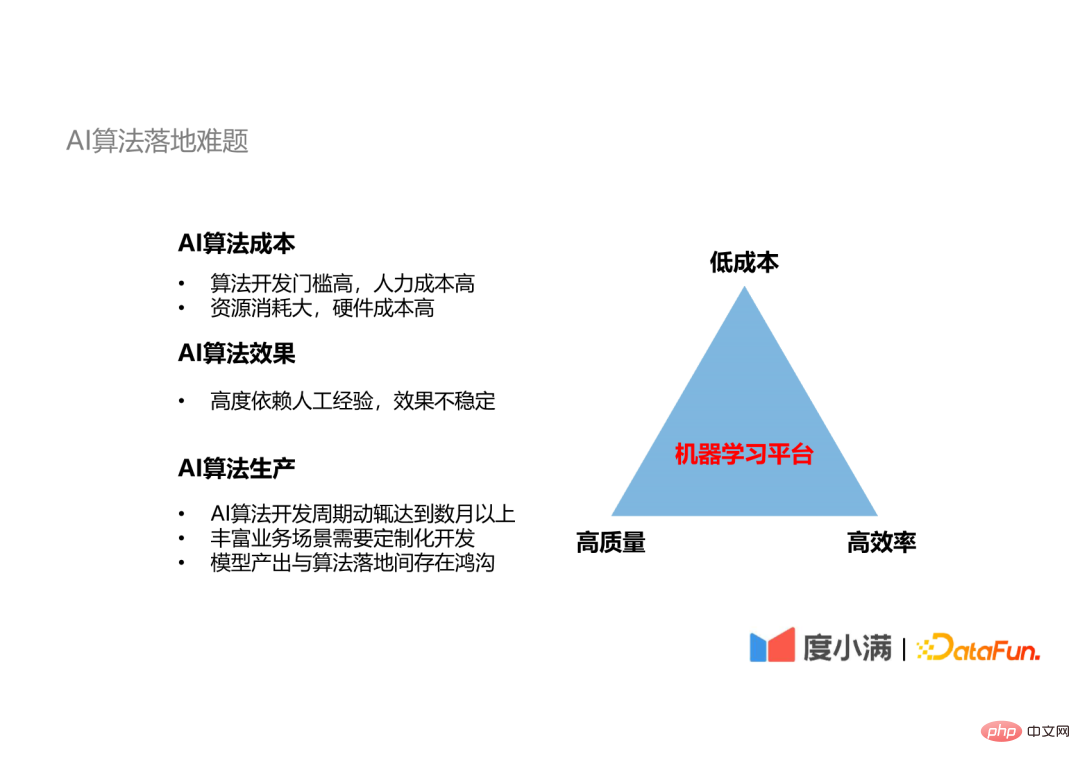

AI algorithm implementation faces an "impossible triangle": it is difficult to achieve high efficiency, low cost, and high quality in algorithm development at the same time.

Faced with these problems of AI implementation, I think the only solution is to use a machine learning platform.

3. AI algorithm production process

Let's look at the specific difficulties encountered in implementing AI algorithms from the perspective of the AI algorithm production process.

AI algorithm implementation is mainly divided into four parts: data management, model training, algorithm optimization, and deployment and release. Model training and algorithm optimization are an iterative process.

In each step of algorithm development, the technical requirements for those involved in that step vary greatly:

The technology stack required for each step shows that it is difficult for one, two, or even three engineers to master all of these technologies, and every step that involves manual work is a production bottleneck that makes output unstable. A machine learning platform can solve both problems.

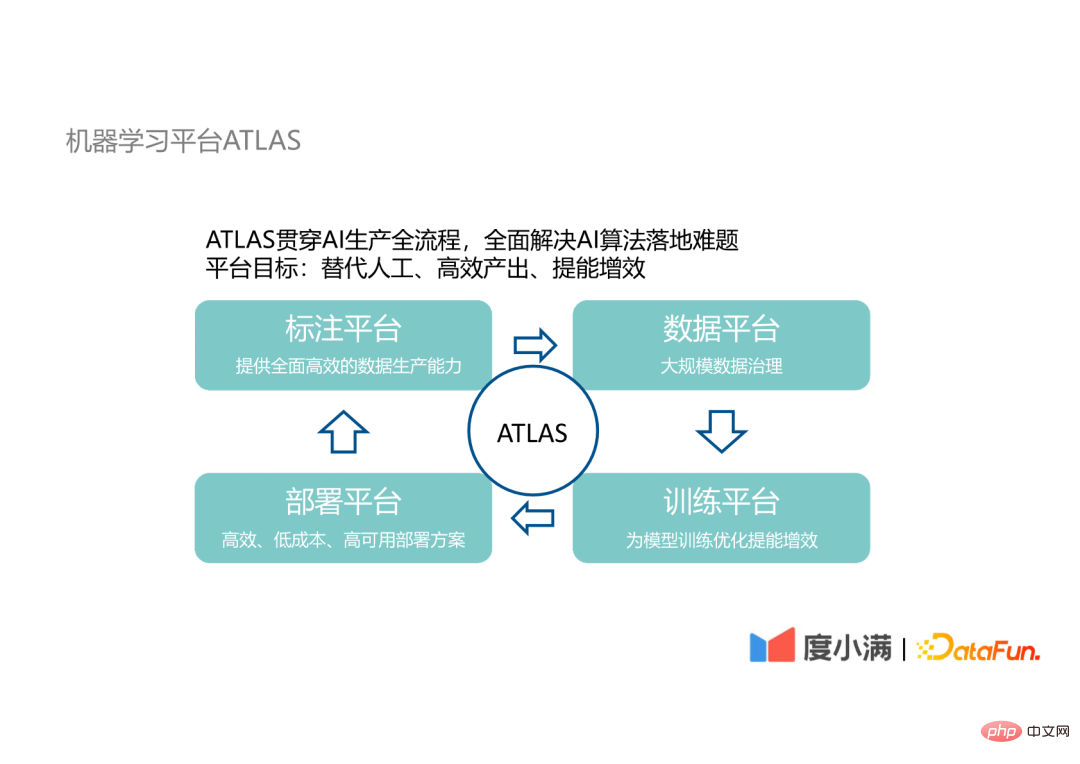

Our machine learning platform, ATLAS, runs through the entire AI production process. It aims to replace human involvement in AI algorithm implementation, so as to achieve efficient output and speed up AI algorithm research and development.

ATLAS involves the following four platforms:

There is also an iterative relationship among these four platforms. The design details and operating processes of these platforms are introduced below.

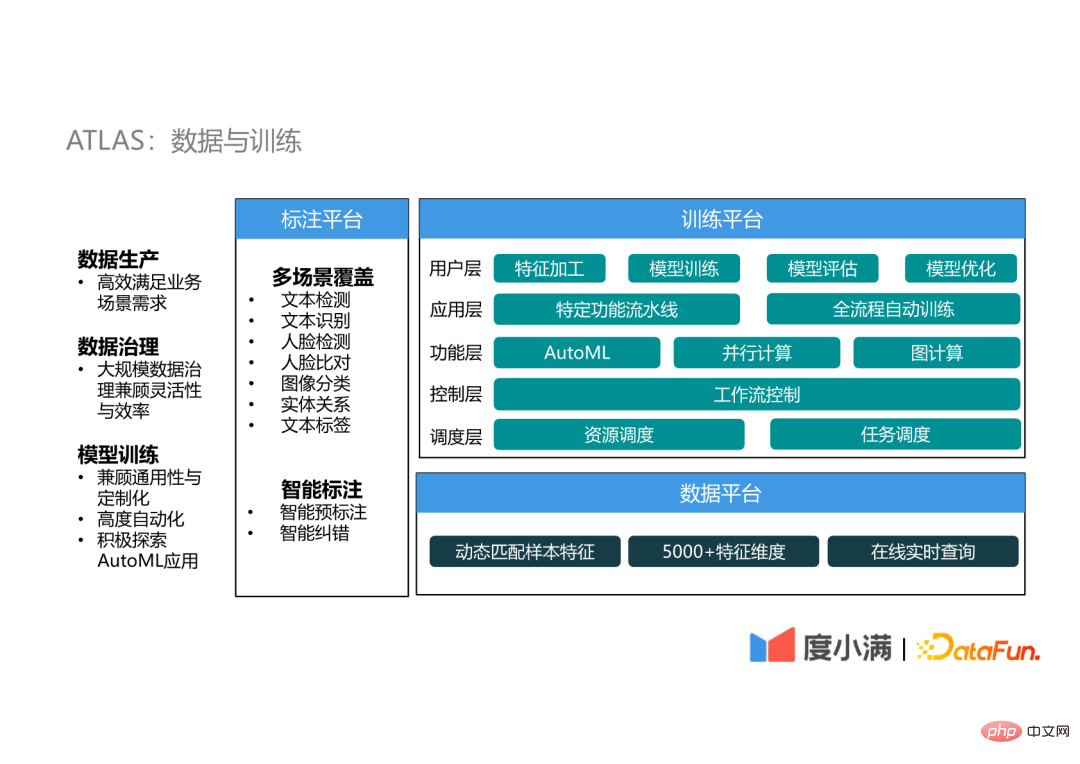

The data and training part covers the annotation platform, the data platform, and the training platform.

(1) Annotation platform

The annotation platform mainly provides labeled data for training AI algorithms. Since the advent of deep learning, models have become highly complex, and the bottleneck of AI algorithm performance has shifted from model design to data quality and quantity. Efficient data production is therefore a crucial link in AI algorithm implementation.

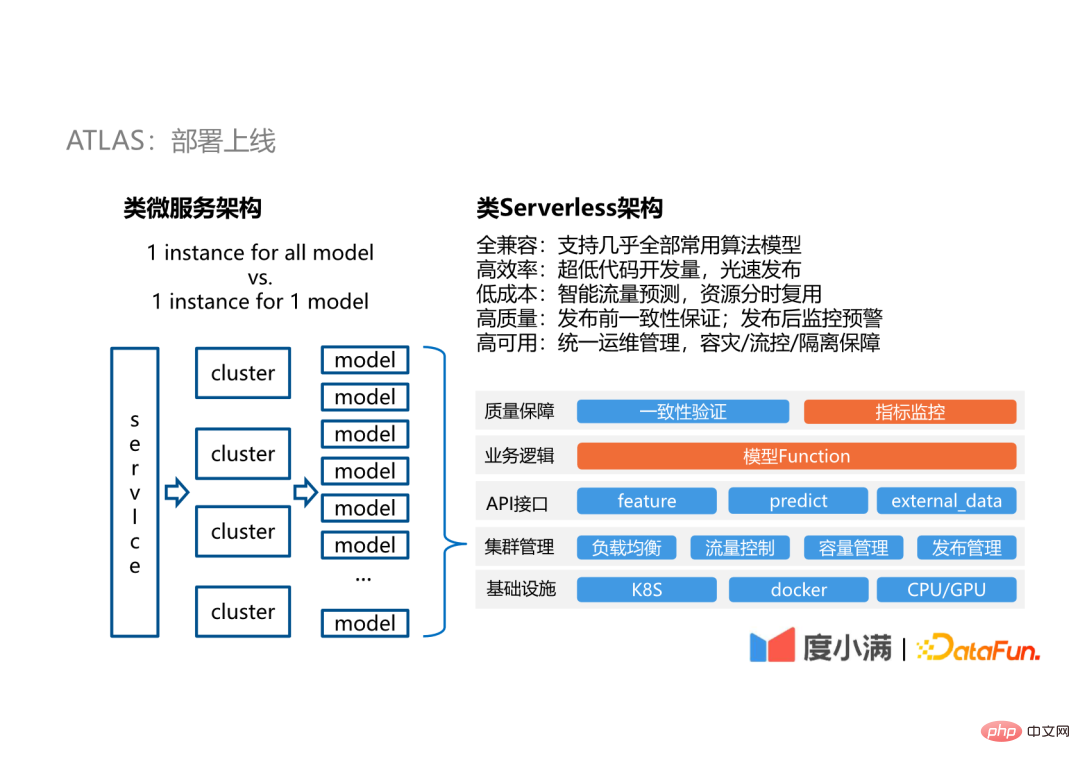

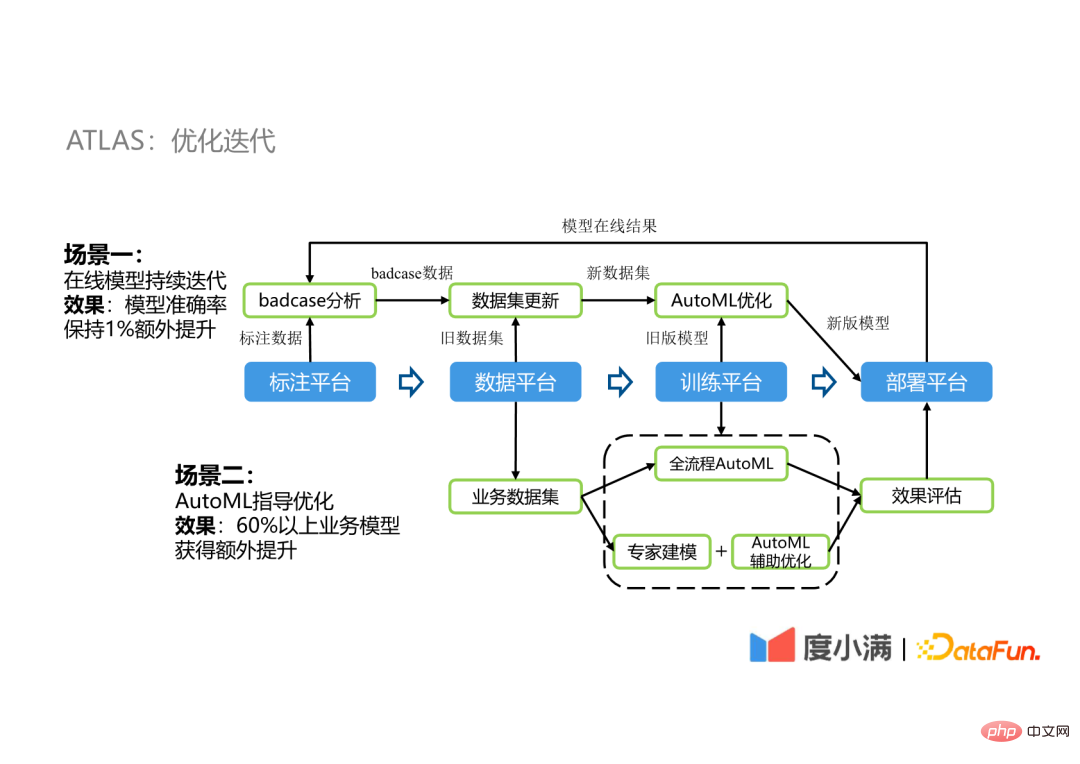

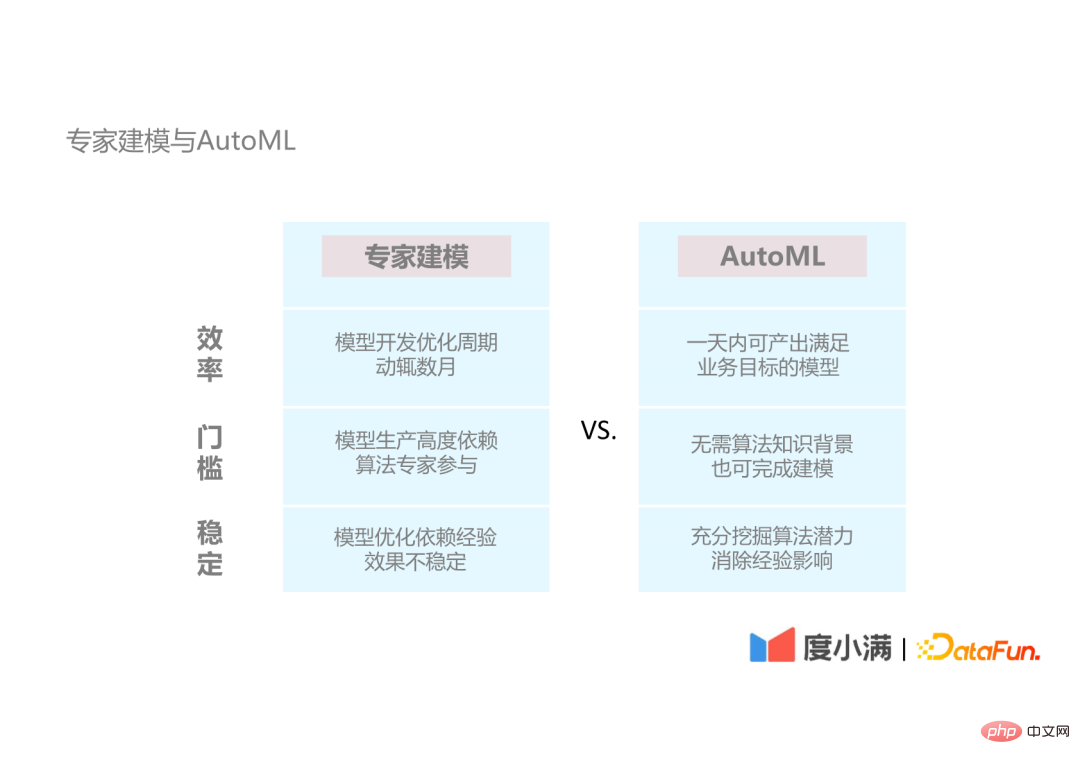

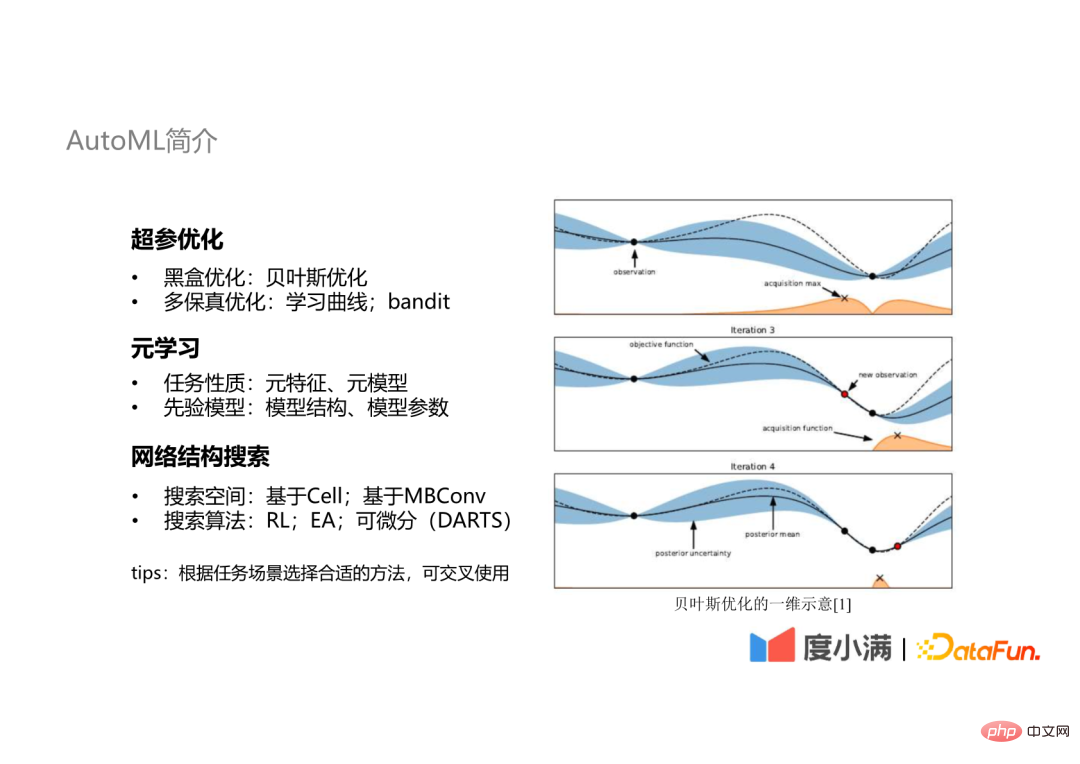

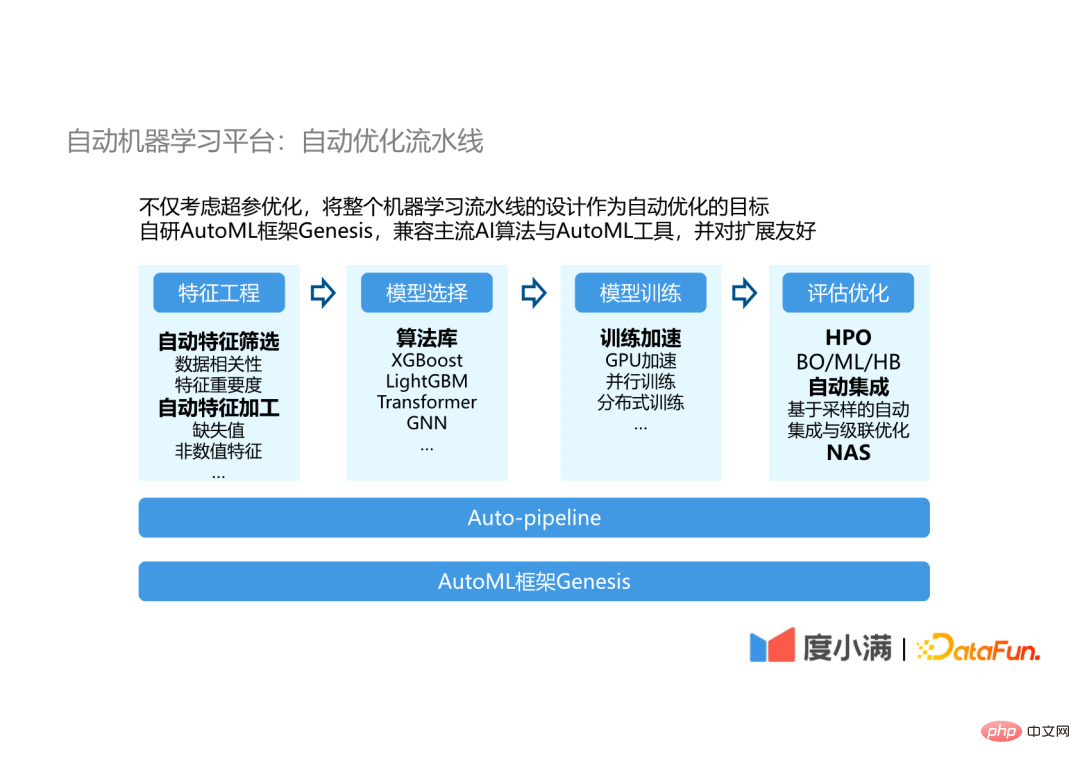

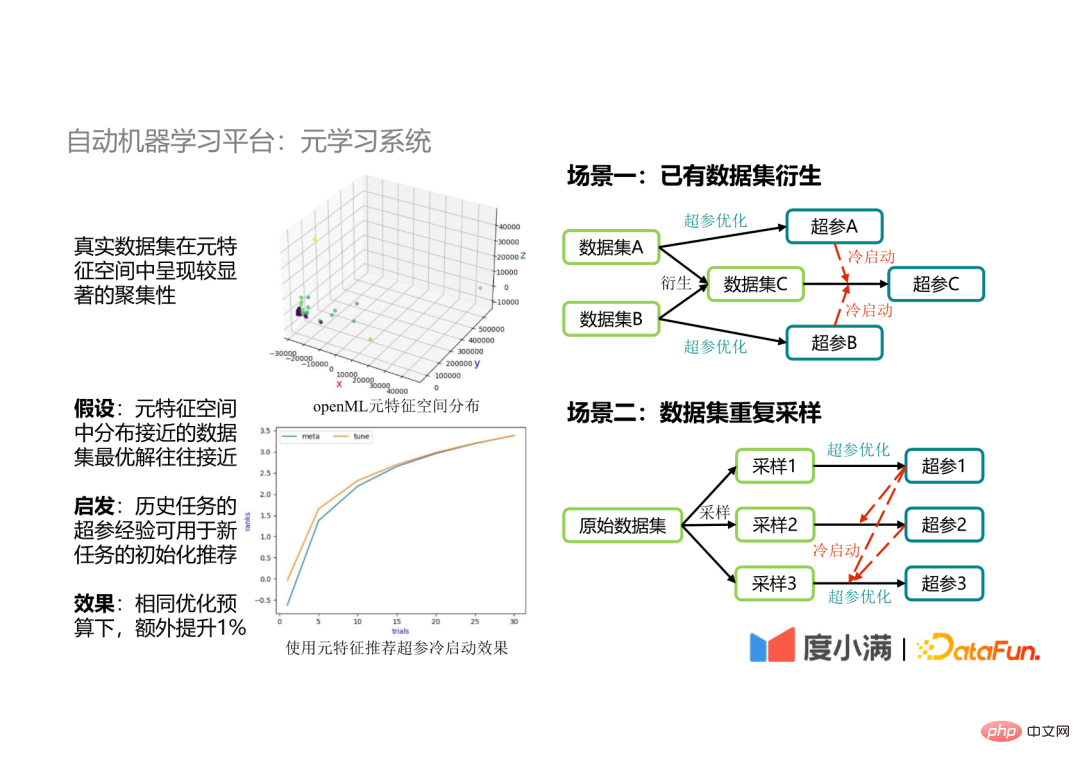

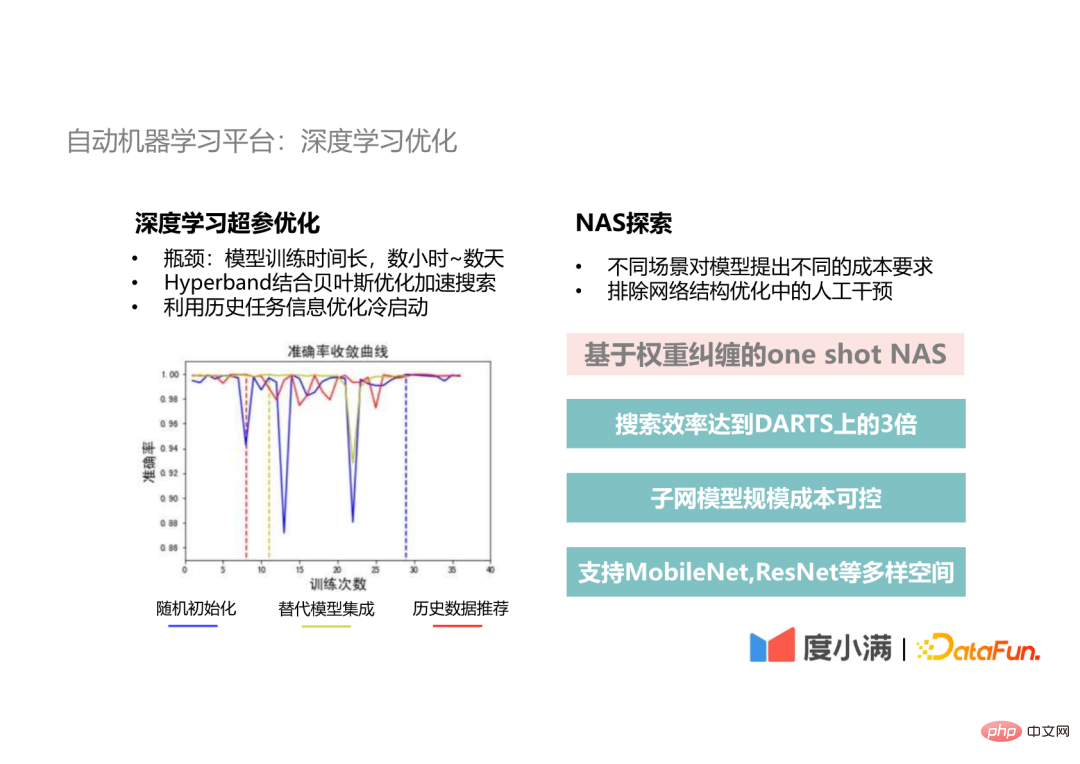

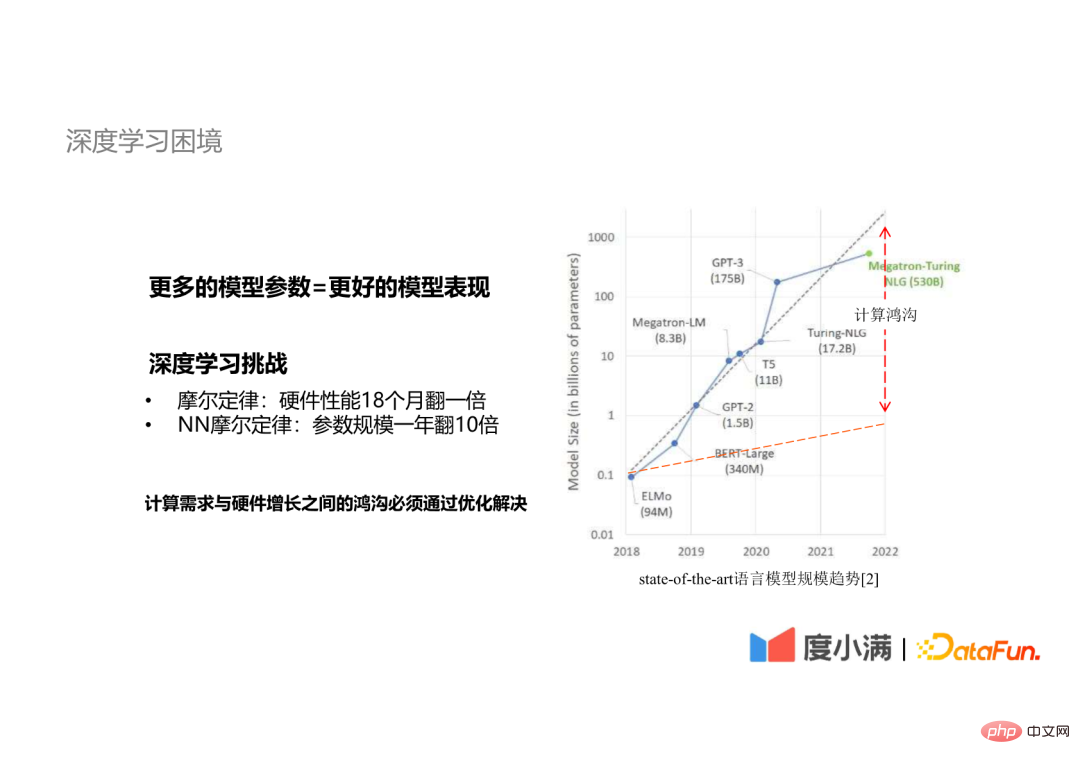

ATLAS's data annotation platform has two main capabilities: multi-scenario coverage and intelligent annotation.

(2) Data platform

The data platform mainly implements large-scale data governance, while keeping the governance process flexible and matching samples dynamically. It stores features of more than 5,000 dimensions for hundreds of millions of users and still supports online real-time queries. Dynamic sample matching meets the sample selection and data selection requirements of different scenarios.

(3) Training platform

The training platform is a very important facility. It is divided into five layers:

6. ATLAS: Deployment and online

Our deployment adopts a serverless-like architecture. We call it serverless-like because it is not a fully serverless service: our services do not target a wide range of general application scenarios, only the online serving of models, so there is no need to consider compatibility with more scenarios.

The API interface layer provides the three parts that a model comes into contact with:

Users only need to care about the orange part in the picture. The API provided by the platform reduces development cost and is compatible with almost all algorithms on the market. With the API, the process from developing a model to putting it into production can be completed within a day, or even half a day. On top of this, cluster management gives the platform good stability guarantees, traffic management, and capacity management.

The following demonstrates two optimization iteration scenarios on ATLAS.

For example, when an OCR model is put into production, the old model produces some bad cases after deployment. These bad cases are merged with the existing annotated data to form a new data set, and the old model is then optimized through the AutoML optimization pipeline to produce a new model. Once the new model is deployed, the cycle repeats. Through this cycle, the model can maintain an additional 1% improvement in accuracy. Because the accuracy of the OCR model is already very high, generally above 95%, 1% is a big improvement.

For simple, repetitive optimization processes, full-process AutoML replaces the manual work. For scenarios that require expert experience, AutoML serves as auxiliary optimization: the results of full-process AutoML are used as a baseline, and the best model is selected for deployment. In our company, more than 60% of scenarios have achieved performance improvements through this approach, with gains ranging from 1% to 5%.

2. Automatic machine learning

The following introduces which AutoML technologies we use and what improvements we have made.

1. Expert modeling and AutoML

First, let's look at the advantages AutoML has over traditional expert modeling. The advantages of AutoML fall into three aspects:

2. Introduction to AutoML

Next, let's introduce the technologies commonly used in AutoML. Commonly used AutoML technologies cover three aspects:

In practice, different technologies are selected according to the task scenario, and they can be used together.

The following sections introduce these three technologies in turn.

3. Automatic machine learning platform: automatic optimization pipeline

The first is hyperparameter optimization. In fact, our automatic optimization pipeline takes the entire machine learning pipeline as the target of automatic optimization, not just the hyperparameters. It includes automated feature engineering, model selection, model training, and automatic ensembling, which reduces the risk of overfitting compared with optimizing hyperparameters alone.

In addition, we have implemented our own AutoML framework, Genesis, which is compatible with mainstream AI algorithms and AutoML tools and is easy to extend. It makes the different capability modules in the platform orthogonal to each other, so that they can be freely combined into a more flexible automatic optimization pipeline.

4. Automatic machine learning platform: meta-learning system

Our system also uses meta-learning. Below we explain why meta-learning is necessary and the key scenarios where it is applied.

(1) The necessity of meta-learning

After accumulating a large amount of experimental data, we found that data sets cluster noticeably in the meta-feature space, so we assumed that data sets that are close in the meta-feature space also have optimal solutions that are close. Based on this assumption, we used the hyperparameters of historical tasks to guide parameter optimization for new tasks and found that the hyperparameter search converges faster; under a limited budget, algorithm performance can be improved by an additional 1%.

(2) Application scenarios

In big data scenarios, it is sometimes necessary to merge existing data sets, for example merging data set A and data set B into a new data set C. If the hyperparameters of data sets A and B are used as the cold start for data set C to guide its hyperparameter optimization, the search space can be narrowed on the one hand, and better optimization results can be reached on the other.

In actual development, it is sometimes necessary to sample a data set and then run hyperparameter optimization on the sample. Because the sampled data is close to the original data in the meta-feature space, using the hyperparameters of the original data set to guide hyperparameter optimization on the sampled data improves optimization efficiency.

5. Automatic machine learning platform: deep learning optimization

Finally, there is our automatic optimization for deep learning scenarios. It is divided into two aspects: hyperparameter optimization and exploration of NAS.

The development bottleneck of deep learning is training time. One iteration takes hours to days, so traditional Bayesian optimization, which needs twenty or thirty iterations, stretches training to between one and several months. We therefore use the Hyperband method to provide seeds for Bayesian optimization in deep learning hyperparameter optimization, which accelerates the hyperparameter search. On this basis, we also use historical data to optimize the cold start and ensemble historical candidate models, which reaches a global optimum with faster convergence than random initialization.

In actual development, different deployment scenarios place different requirements on model size and latency. In addition, optimizing the neural network structure is an important part of model optimization, and we need to eliminate manual interference in this step. We therefore proposed a one-shot NAS method based on weight entanglement. Its search efficiency can reach more than 3 times that of the classic DARTS method, and the parameter count and computational cost of the searched sub-network are controllable, so we can select an appropriate model within the target. We also support various search spaces such as MobileNet and ResNet to meet the requirements of different CV tasks.

3. Scale and efficiency

Finally, let's discuss the issues of scale and efficiency that we encountered while building the machine learning platform.

1. Dilemma of Deep Learning

The reason we pay attention to scale and efficiency is that deep learning faces a conflict between model size and computational requirements.

It is an industry consensus that more model parameters mean better model performance. Deep learning has its own version of Moore's Law:

So the gap between rapidly growing computing needs and hardware performance must be closed through optimization.

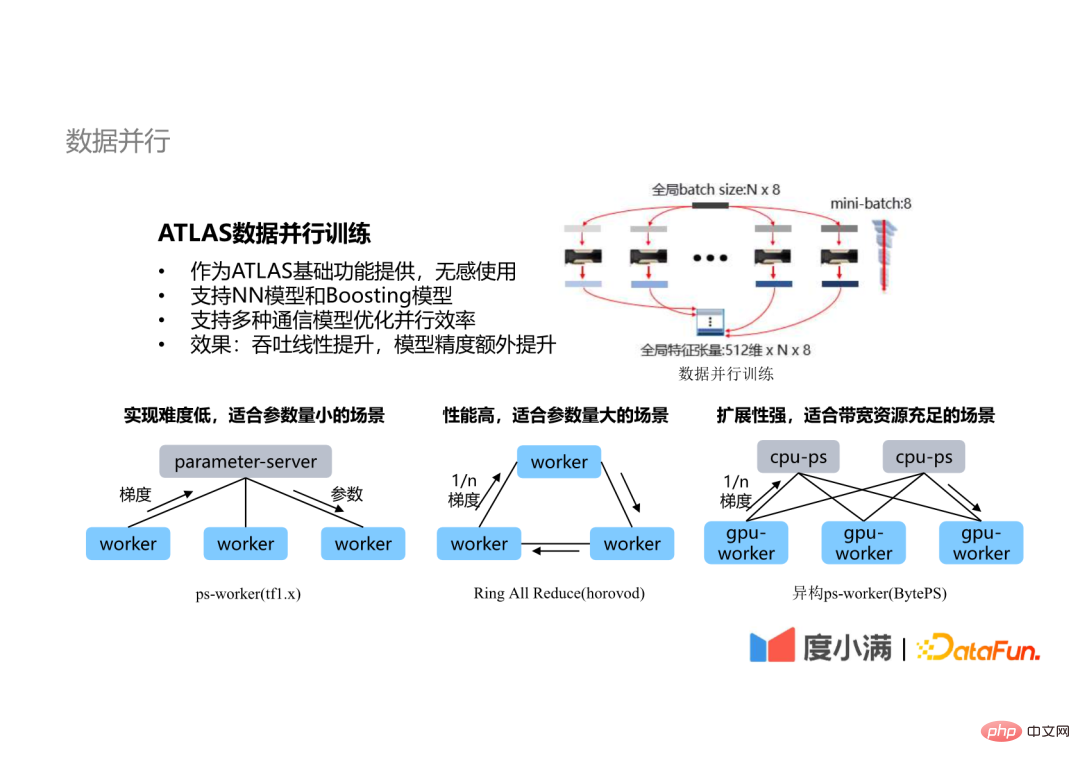

The most commonly used optimization method is parallelism, including data parallelism, model parallelism, and so on. Of these, data parallelism is used most often.
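To make the idea of data parallelism concrete, here is a minimal single-process sketch. It is not ATLAS code: the one-parameter linear model, the function names, and the toy data are assumptions for illustration. Each simulated worker computes a gradient on its own shard, and an averaging step stands in for the all-reduce used in real distributed training.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for the toy model y ≈ w * x


def local_gradient(w: float, shard: List[Sample]) -> float:
    """Gradient of the mean squared error on one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values: List[float]) -> float:
    """Stand-in for an all-reduce: every worker would receive this average."""
    return sum(values) / len(values)


def data_parallel_step(w: float, shards: List[List[Sample]], lr: float) -> float:
    """One SGD step where every shard is processed by a different worker."""
    grads = [local_gradient(w, shard) for shard in shards]
    return w - lr * all_reduce_mean(grads)


data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
shards = [data[:2], data[2:]]  # two equally sized shards, one per worker

w_parallel = data_parallel_step(0.0, shards, lr=0.01)
w_single = data_parallel_step(0.0, [data], lr=0.01)  # same batch on one worker
```

With equally sized shards, the averaged gradient equals the full-batch gradient, so the parallel step and the single-worker step give the same update up to floating-point rounding.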

The data parallel technology of the ATLAS platform has the following characteristics:

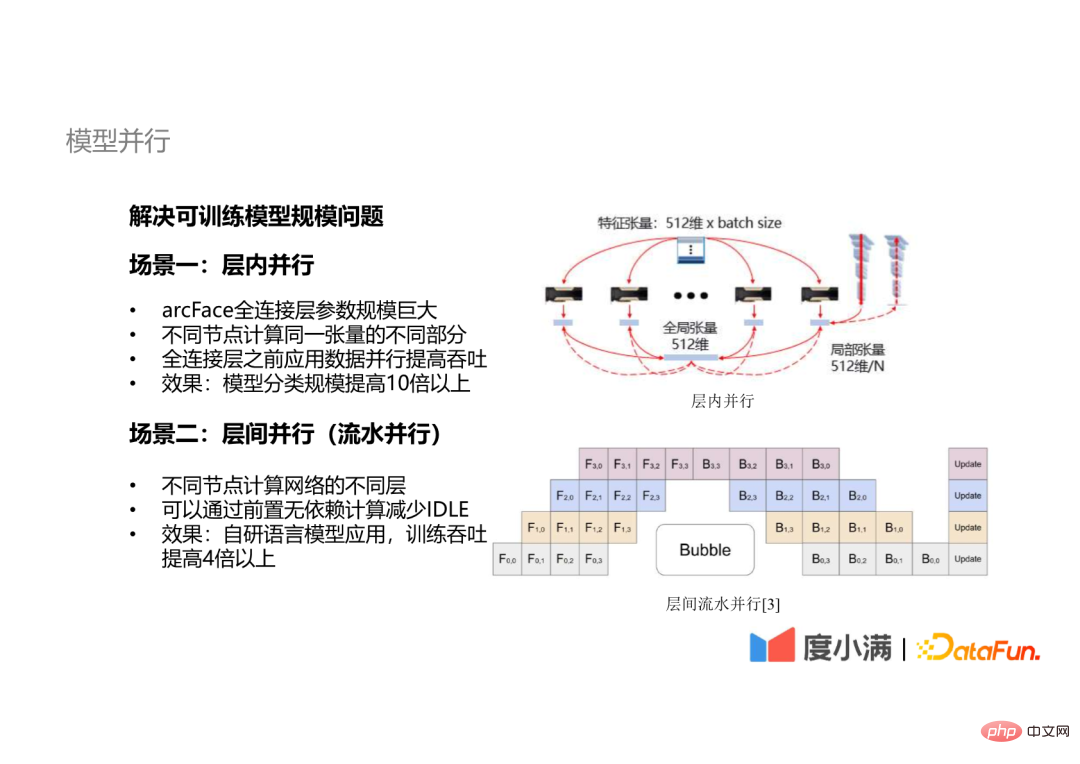

Some models cannot solve the training efficiency problem with data parallelism alone; model parallelism must also be introduced.

ATLAS model parallelism is mainly divided into two aspects:

The fully connected layer of some network models has a very large number of parameters. For example, the number of classes in ArcFace can reach millions or even tens of millions. A fully connected layer of this size does not fit on a single GPU card. In this case, intra-layer parallelism is needed, with different nodes computing different parts of the same tensor.

Inter-layer parallelism is also used: the data of different layers of the network is computed on different nodes, and computations that have no dependencies are brought forward to reduce idle time (GPU waiting time) during computation.
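The intra-layer parallelism described above for a very large classification layer can be sketched as follows. This is a single-process illustration under assumptions, not the ATLAS implementation: the class dimension of the weight matrix is split into shards, each shard plays the role of one GPU, and the two global reductions (maximum and sum) stand in for collective communication between devices. All names and the toy weights are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]  # one weight row per class


def partial_logits(features: Vector, weight_shard: Matrix) -> Vector:
    """Logits for the classes owned by one device."""
    return [sum(f * w for f, w in zip(features, row)) for row in weight_shard]


def sharded_softmax(features: Vector, shards: List[Matrix]) -> List[Vector]:
    """Softmax over all classes without ever gathering the full weight matrix."""
    local_logits = [partial_logits(features, shard) for shard in shards]
    # Reduction 1: global maximum, used only for numerical stability.
    global_max = max(max(logits) for logits in local_logits)
    local_exp = [[math.exp(z - global_max) for z in logits] for logits in local_logits]
    # Reduction 2: global sum of exponentials, the softmax denominator.
    global_sum = sum(sum(exps) for exps in local_exp)
    return [[e / global_sum for e in exps] for exps in local_exp]


def full_softmax(features: Vector, weights: Matrix) -> Vector:
    """Reference result computed on a single device."""
    logits = partial_logits(features, weights)
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


weights = [[0.2, -0.1], [0.5, 0.3], [-0.4, 0.8], [0.1, 0.1], [0.9, -0.7], [0.0, 0.6]]
features = [1.5, -2.0]
shards = [weights[:3], weights[3:]]  # classes 0-2 on device 0, classes 3-5 on device 1

sharded = [p for part in sharded_softmax(features, shards) for p in part]
reference = full_softmax(features, weights)
```

Only two scalars per sample cross device boundaries in this sketch, which is why splitting along the class dimension suits layers whose weight matrix is too large for one card.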

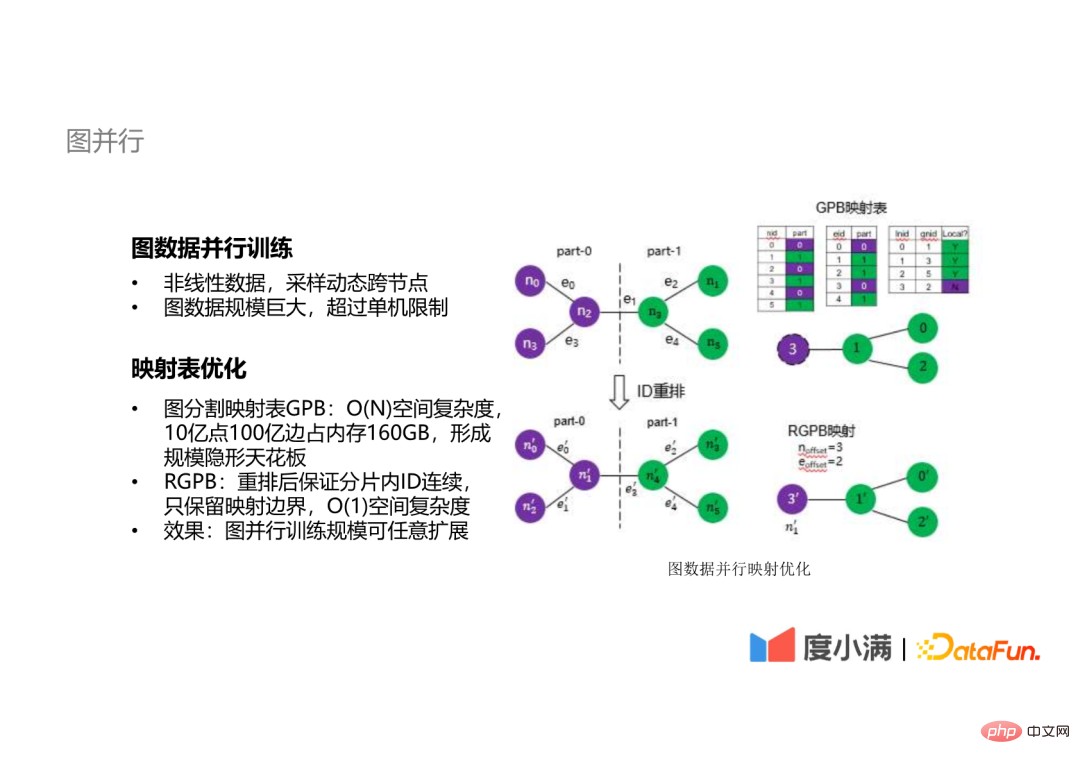

In addition to linear data that can be described by tensors, we have also explored parallel training on graph data.

For graph data, both sampling and other operations need to cross nodes dynamically, and graph data is generally very large. Our internal graph data has reached the scale of tens of billions. It is difficult to complete computation on graph data of this size on a single machine.

The bottleneck of distributed computing on graph data lies in the mapping table. The space complexity of a traditional mapping table is O(n); for example, graph data with 1 billion nodes and 1 billion edges occupies 160 GB of memory, which puts a ceiling on the scale of distributed training. We propose a method with a space complexity of O(1): by rearranging the IDs of nodes and edges, only the mapping boundaries are retained, so the scale of graph parallel training can be expanded arbitrarily.
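One way to read the ID rearrangement idea is sketched below. This is an interpretation under assumptions, not the authors' implementation: nodes are renumbered once, offline, so that each partition owns one contiguous ID range, and at training time only the range boundaries are kept. The boundary list grows with the number of partitions rather than with the number of nodes. The function names and the toy assignment are hypothetical.

```python
from bisect import bisect_right
from typing import Dict, List, Tuple


def renumber_by_partition(
    assignment: Dict[str, int], num_parts: int
) -> Tuple[Dict[str, int], List[int]]:
    """Offline step: give each partition a contiguous block of new IDs.

    Returns the relabeling and a boundary list where boundaries[p] is the
    first ID owned by partition p and boundaries[-1] is the node count.
    """
    new_id: Dict[str, int] = {}
    boundaries = [0]
    next_id = 0
    for part in range(num_parts):
        for node in sorted(n for n, p in assignment.items() if p == part):
            new_id[node] = next_id
            next_id += 1
        boundaries.append(next_id)
    return new_id, boundaries


def owner(node_id: int, boundaries: List[int]) -> int:
    """Training-time lookup: find the partition from the boundaries alone."""
    return bisect_right(boundaries, node_id) - 1


# Toy graph: five nodes assigned to two partitions by some partitioner.
assignment = {"a": 1, "b": 0, "c": 1, "d": 0, "e": 1}
new_id, boundaries = renumber_by_partition(assignment, num_parts=2)
```

After relabeling, the per-node table can be discarded: a worker that sees a rewritten ID in an edge list resolves its owner with `owner(node_id, boundaries)`.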

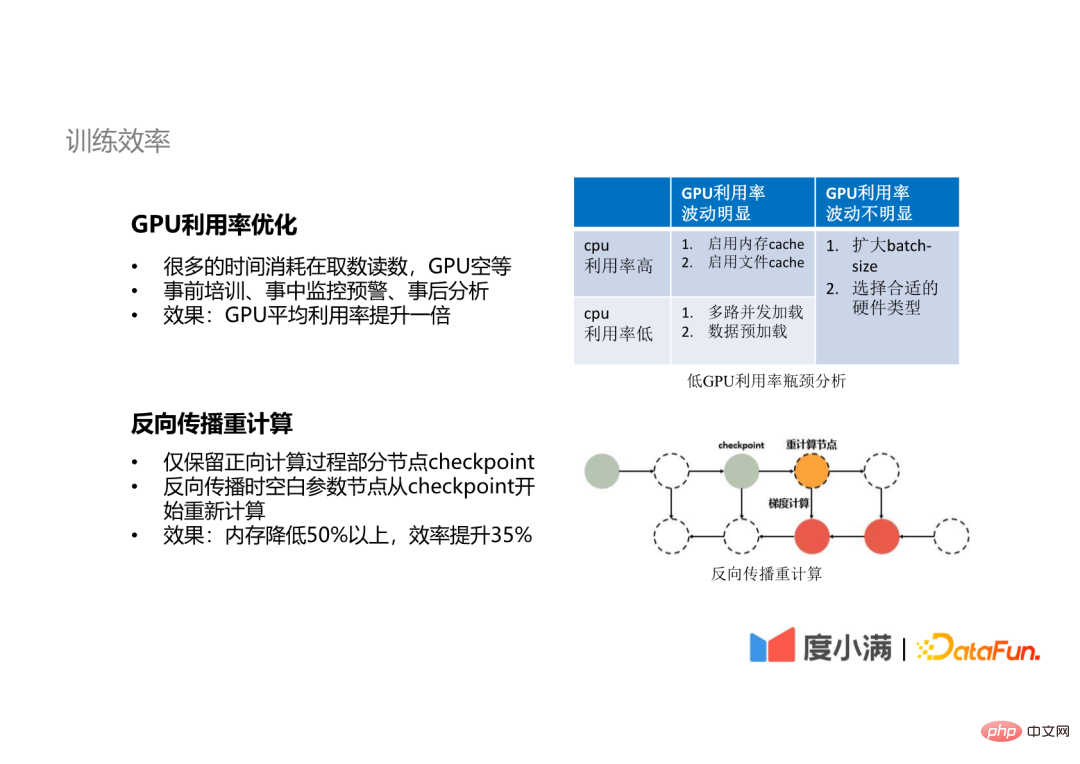

At the same time, we have also made some optimizations in terms of training efficiency.

A lot of GPU time is spent waiting for data to be read, during which the GPU sits idle. Through pre-training, in-process monitoring and early warning, and post-event analysis, average GPU utilization can be doubled.

We also use recomputation during backpropagation. For models with very many parameters, we do not save the results of all layers during forward propagation; we keep checkpoints at only some nodes. During backpropagation, the values that were not saved are recomputed from the checkpoints. In this way, memory usage can be reduced by more than 50%, and training efficiency can be improved by more than 35%.
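The recomputation technique can be illustrated with a small, framework-free sketch. It is a simplified model under assumptions rather than the platform's code: the "network" is a chain of scalar `tanh` layers, only the gradient with respect to the input is computed, and the checkpoint interval `every` is a hypothetical parameter.

```python
import math
from typing import Dict, List, Tuple


def layer(w: float, x: float) -> float:
    return math.tanh(w * x)


def layer_grad_input(w: float, x: float) -> float:
    """Derivative of tanh(w * x) with respect to x."""
    return w * (1.0 - math.tanh(w * x) ** 2)


def forward_with_checkpoints(
    weights: List[float], x: float, every: int
) -> Tuple[float, Dict[int, float]]:
    """Forward pass that stores the input of every `every`-th layer only."""
    checkpoints: Dict[int, float] = {}
    for i, w in enumerate(weights):
        if i % every == 0:
            checkpoints[i] = x
        x = layer(w, x)
    return x, checkpoints


def backward_with_recompute(
    weights: List[float], checkpoints: Dict[int, float], every: int
) -> float:
    """d(output)/d(input), rebuilding missing layer inputs segment by segment."""
    grad = 1.0
    for start in sorted(checkpoints, reverse=True):
        end = min(start + every, len(weights))
        # Recompute the inputs of the layers in this segment from its checkpoint.
        inputs = [checkpoints[start]]
        for i in range(start, end - 1):
            inputs.append(layer(weights[i], inputs[-1]))
        # Backpropagate through the segment, last layer first.
        for i in range(end - 1, start - 1, -1):
            grad *= layer_grad_input(weights[i], inputs[i - start])
    return grad


def backward_full_storage(weights: List[float], x: float) -> float:
    """Reference: keep every layer input, as ordinary backpropagation does."""
    inputs = []
    for w in weights:
        inputs.append(x)
        x = layer(w, x)
    grad = 1.0
    for w, inp in zip(reversed(weights), reversed(inputs)):
        grad *= layer_grad_input(w, inp)
    return grad


weights = [0.5, -1.2, 0.8, 1.5, -0.3, 0.9, 1.1]
output, checkpoints = forward_with_checkpoints(weights, 0.7, every=3)
grad_recompute = backward_with_recompute(weights, checkpoints, every=3)
grad_reference = backward_full_storage(weights, 0.7)
```

In this toy run, three values are stored instead of seven, at the price of repeating part of the forward pass inside the backward pass. That is the trade-off the technique makes: less memory in exchange for extra computation.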

Finally, let's talk about our experience and thoughts from building the machine learning platform.

We have summarized some experiences as follows:

Because putting our AI algorithms into production involves every aspect of technology and content, it is impossible for me to require the colleagues responsible for any one link to understand the whole picture, so we must have a platform that provides these basic capabilities and helps everyone solve these problems.

Only if automation, or AutoML, is applied well can the productivity of algorithm experts be liberated more effectively, allowing them to work on more in-depth algorithms or on capability building that raises the upper limit of machine learning.

Looking to the future:

5. Q&A session

Q1: Which open source AutoML frameworks have we tried and which ones do we recommend?

A1: The open source AutoML framework we use most is Optuna. I have also tried Auto-Sklearn and AutoWeka. I also recommend a website, automl.org. Relatively few people work in this field at the moment, and this website was built by several experts and professors in the AutoML field. It has a lot of open learning materials on AutoML that everyone can refer to. The open source framework we recommend is Optuna, which we use for parameter tuning, because its algorithm is not just the most basic Bayesian optimization. It is a TPE algorithm, which is better suited to scenarios with a very large number of parameters, while Bayesian optimization is still better suited to scenarios with fewer parameters. My suggestion, however, is to try different methods for different scenarios, because the more you try, the more experience you gain about which method suits which scenario.

Q2: How long is the development cycle of the machine learning platform?

A2: We have been building our machine learning platform for 3 or 4 years. At the beginning, we first solved the problem of deploying applications, and then started to build our production capabilities, such as computing and training. If you are building a platform from scratch, I suggest you start from some open source frameworks, and then see what problems you encounter in your own business scenarios during use, so as to clarify the future direction of development.

Q3: How to eliminate overfitting during cross-validation?

A3: This may be a more specific algorithm optimization question, but in our optimization pipeline we train through sampling. This lets the task see more angles, or aspects, of the data set. We then ensemble the top models trained on these samples, which gives the model stronger generalization ability. This is also a very important method in our scenarios.

Q4: How much is the development cycle and personnel investment when we build the entire machine learning platform?

A4: The development cycle was just mentioned: about three to four years. In terms of personnel, there are currently six or seven people. In the early days, there were even fewer.

Q5: Will virtualizing GPU improve the machine learning platform?

A5: First of all, the virtualized GPU mentioned in this question should refer to the partitioning and isolation of resources. If we are building a machine learning platform, GPU virtualization should be a necessary capability: we must virtualize resources in order to schedule and allocate them better. On this basis, we may also partition a GPU's video memory and computing resources and allocate resource blocks of different sizes to different tasks. We do not actually use this in training, however, because training tasks usually have higher computing requirements and are not small-resource workloads. We use it in inference scenarios. In practice, we found that there is no good free open source solution for this virtualization technology. Some cloud service vendors provide paid solutions, so for deployment we use a time-sharing multiplexing scheme: tasks with high computing requirements are mixed with tasks with low computing requirements, which increases capacity to a certain extent.

Q6: What is the acceleration ratio of multi-node distributed parallel training? Can it be close to linear?

A6: We can get close to a linear acceleration ratio. In our own measurements, it can reach around 80% to 90% in the better cases. Of course, if the number of nodes is very large, further optimization may be required. You may see papers mentioning that 32 or 64 nodes can reach 80% or 90%; that may require some more specialized optimizations. A machine learning platform, however, has to target a wider range of scenarios. In practice, most training jobs need 4 or 8 GPU cards, and at most 16 GPU cards are enough to meet the requirements.

Q7: What parameters do users need to configure when using AutoML? How much computing power and time does the entire calculation require?

A7: In the ideal case, users of our AutoML do not need to configure any parameters. Of course, we allow users to adjust or fix some parameters according to their needs. In terms of time, our goal for all AutoML scenarios is to complete the optimization within one day. In terms of computing power, general big data modeling, such as the tree models XGB and LGBM, can be handled even on a single machine. For a GPU task, it depends on the scale of the task itself. Basically, AutoML training can be completed with 2 to 3 times the computing power of the original training scale.

Q8: What open source machine learning frameworks can you refer to?

A8: This question was answered just now. You can refer to Optuna, Auto-Sklearn, and AutoWeka. I also mentioned the website automl.org, which has a lot of material that you can go and study.

Q9: What is the relationship with EasyDL?

A9: EasyDL belongs to Baidu, and our framework is completely self-developed.

That's it for today's sharing, thank you all.
