Who is publishing the most influential AI research? In an era when a hundred flowers are blooming, the question is well worth exploring.

You may have already guessed part of the answer: top institutions such as Google, Microsoft, OpenAI, and DeepMind. That guess is only half right. The data also reveal some conclusions that were not previously known.

With AI innovation moving this fast, it is crucial to gather "intelligence" early. Few people have time to read everything, but the papers compiled in this article certainly have the potential to change the direction of AI technology.

The real test of an R&D team's influence is, of course, how its technology makes it into products. OpenAI released ChatGPT at the end of November 2022, shocking the entire field. It was another breakthrough following their March 2022 paper "Training language models to follow instructions with human feedback".

It is rare for research to reach a product so quickly. To gain further insight, Zeta Alpha recently turned to a classic academic metric: citation counts.

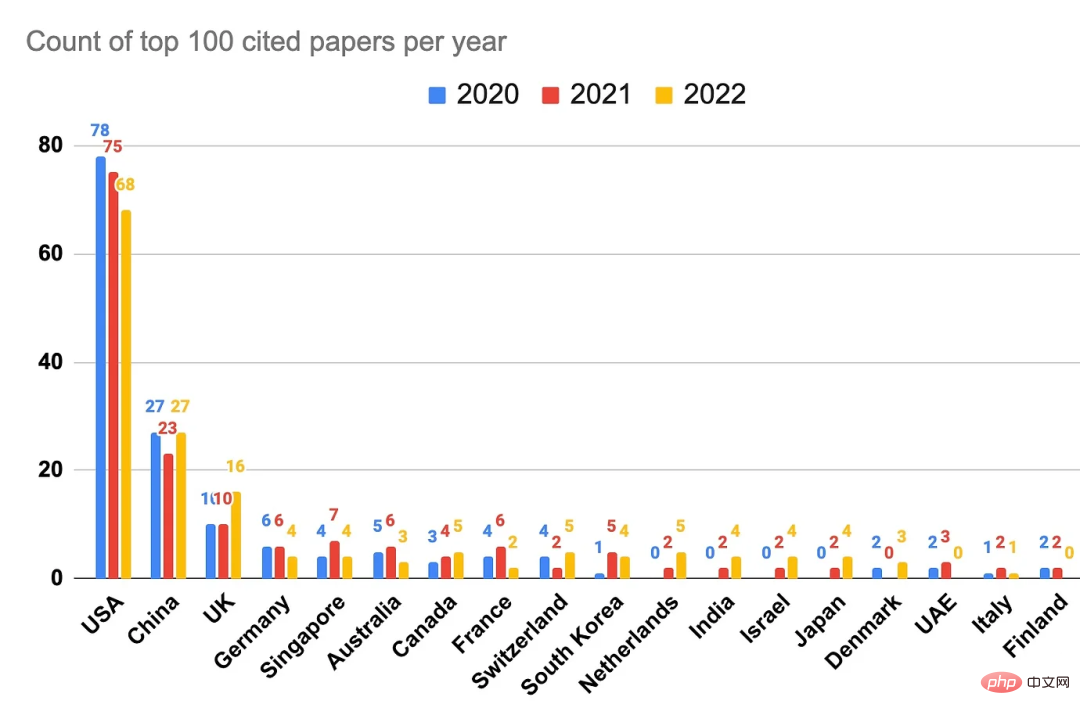

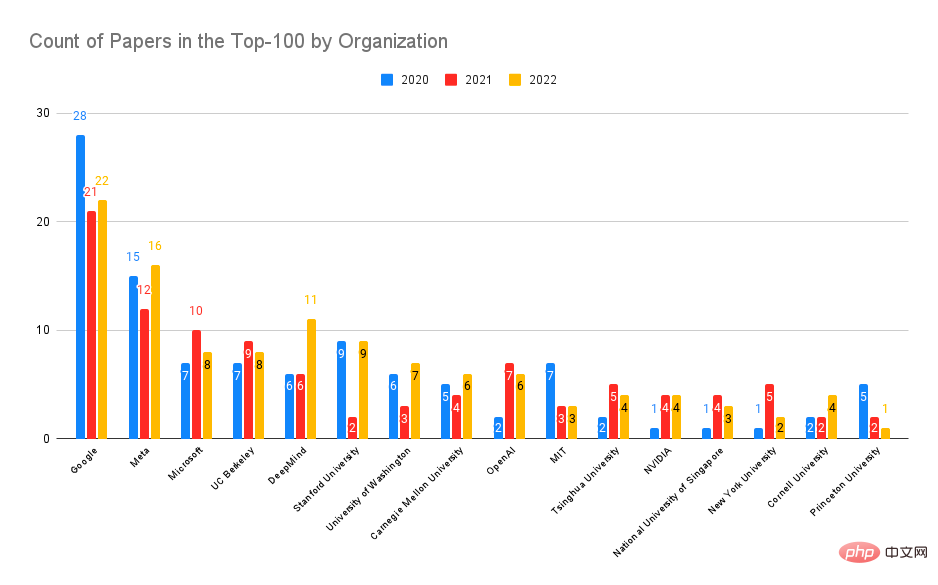

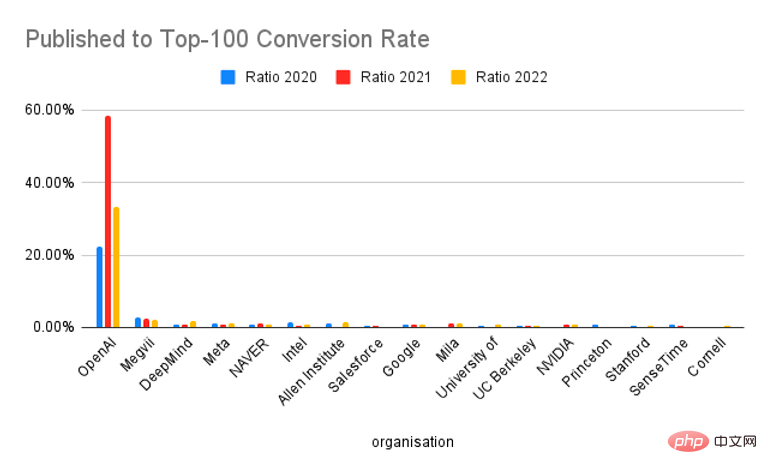

A detailed analysis of the 100 most cited papers in each of 2022, 2021, and 2020 shows which institutions and countries are currently publishing the most impactful AI research. Some preliminary conclusions: the United States and Google still dominate, and DeepMind also had a great year, but relative to its output, OpenAI is truly at the forefront, both in product impact and in research that is quickly and widely cited.

Source: Zeta Alpha.

As shown in the figure above, another important conclusion is that China ranks second in research citation impact, but a clear gap with the United States remains. It has not, as many reports claim, "caught up with or even surpassed" the United States.

Using data from the Zeta Alpha platform combined with manual curation, this article collects the most cited AI papers of 2022, 2021, and 2020 and analyzes the authors' institutions and countries/regions. This makes it possible to rank institutions and countries by R&D impact rather than by raw publication counts.

To build the analysis, we first collected the most cited papers for each year on the Zeta Alpha platform, then manually checked each paper's first publication date (usually an arXiv preprint) so that it was assigned to the correct year. We then supplemented this list by mining highly cited AI papers on Semantic Scholar, which has broader coverage and can sort by citation count. This mainly surfaced additional papers from high-impact publishers such as Nature, Elsevier, and Springer. Next, we used each paper's Google Scholar citation count as the representative metric and sorted the papers by it to obtain the top 100 for each year. For these papers, we used GPT-3 to extract authors, affiliations, and countries, and checked the results manually (if the country was not obvious from the publication, we used the country where the organization is headquartered). If a paper has authors from multiple institutions, each institution is counted once.
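The ranking and counting steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Zeta Alpha's actual pipeline: the `Paper` record, its field names, and the toy data are all hypothetical. It shows the two rules that matter: papers are bucketed by first publication year before sorting by citations, and an institution is counted at most once per paper.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Paper:
    """Hypothetical record for one paper; the field names are illustrative."""
    title: str
    first_pub_year: int      # year of first publication, e.g. the arXiv preprint
    scholar_citations: int   # Google Scholar citation count used for ranking
    institutions: list[str] = field(default_factory=list)


def top_papers(papers: list[Paper], year: int, n: int = 100) -> list[Paper]:
    """Keep papers first published in `year` and return the n most cited."""
    in_year = [p for p in papers if p.first_pub_year == year]
    return sorted(in_year, key=lambda p: p.scholar_citations, reverse=True)[:n]


def institution_counts(papers: list[Paper]) -> Counter:
    """Count papers per institution, counting each institution once per paper."""
    counts: Counter = Counter()
    for p in papers:
        # set() removes duplicate affiliations, e.g. several Google co-authors
        counts.update(set(p.institutions))
    return counts


# Toy, made-up records (not real papers or citation counts).
papers = [
    Paper("A", 2022, 500, ["Google", "Google", "NYU"]),
    Paper("B", 2022, 300, ["Meta AI"]),
    Paper("C", 2021, 900, ["Google"]),
    Paper("D", 2022, 100, ["Google", "Meta AI"]),
]

top2 = top_papers(papers, 2022, n=2)
print([p.title for p in top2])  # ['A', 'B']
print(institution_counts(top_papers(papers, 2022))["Google"])  # 2
```

Paper C is the most cited overall but is excluded from the 2022 list because it was first published in 2021, which is exactly why checking first publication dates matters.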

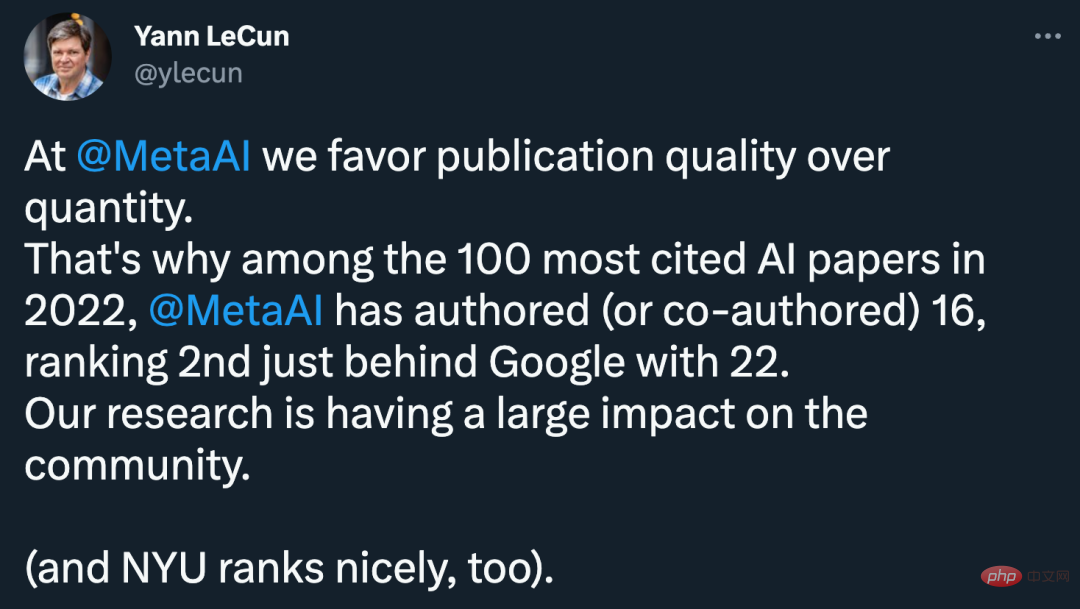

After seeing this ranking, Meta's chief AI scientist Yann LeCun said he was pleased: "At Meta AI, we tend to favor quality over quantity. That is why, among the 100 most cited AI papers of 2022, Meta AI authored (or co-authored) 16, second only to Google's 22. Our research is having a huge impact on society. (NYU also ranks very well.)"

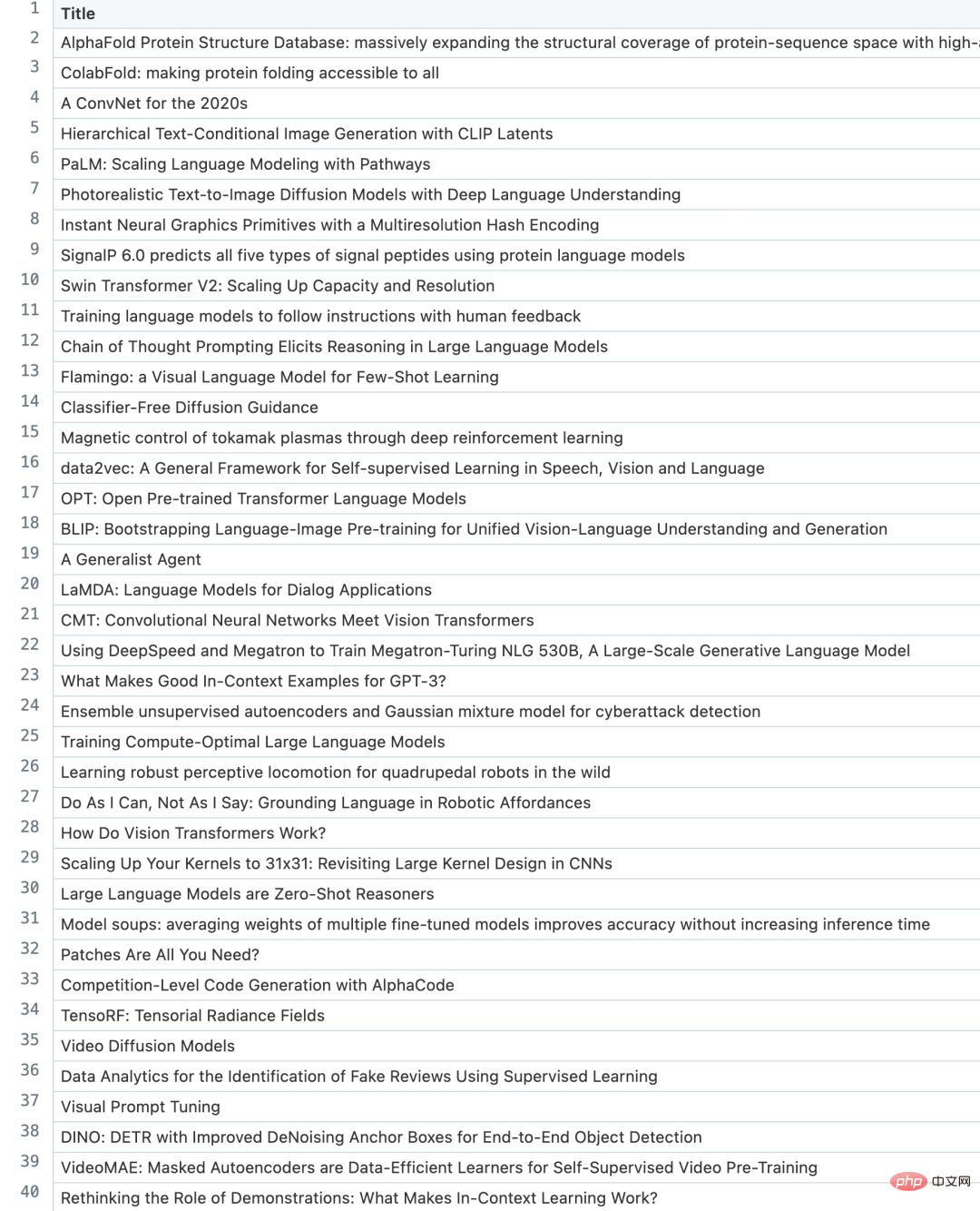

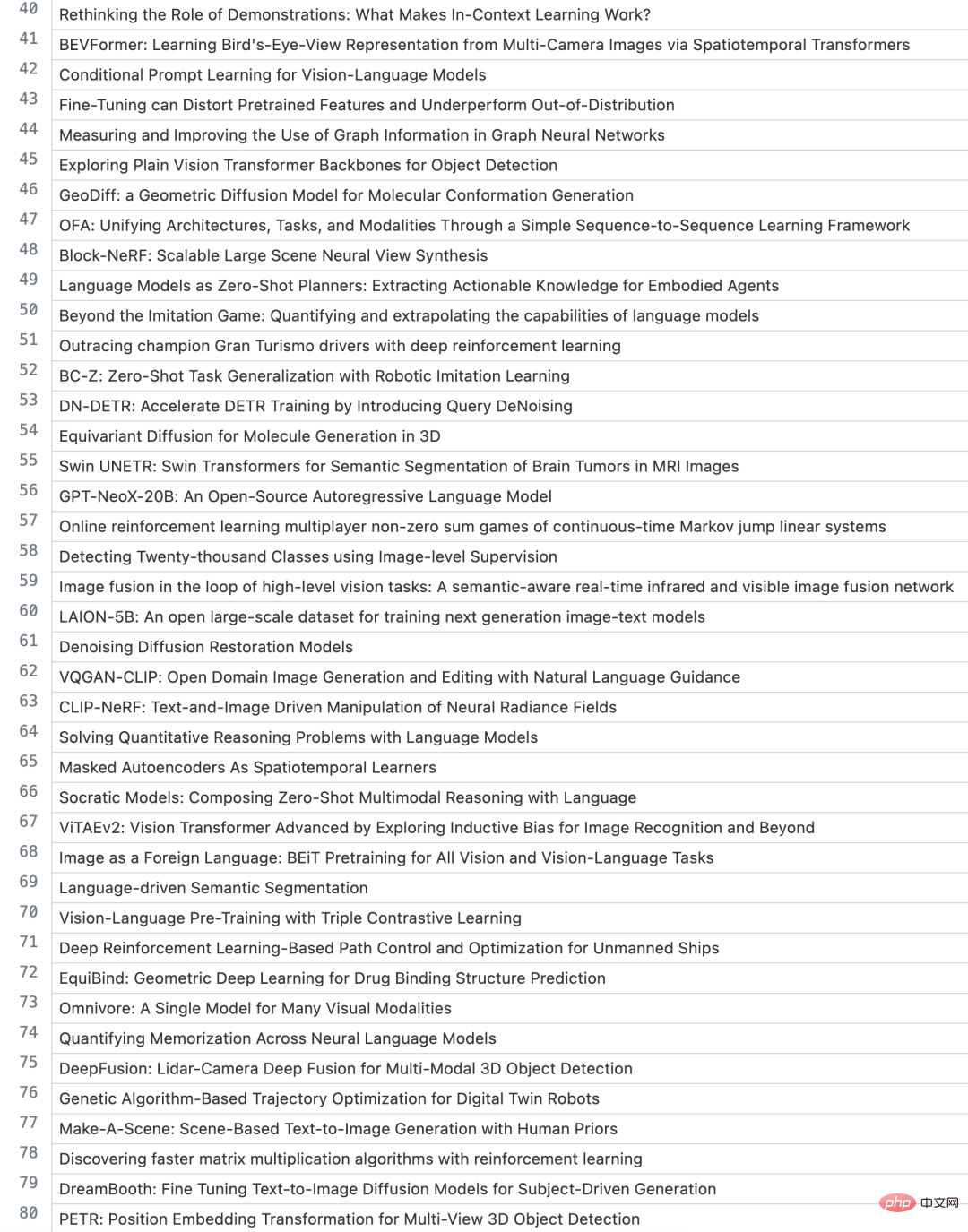

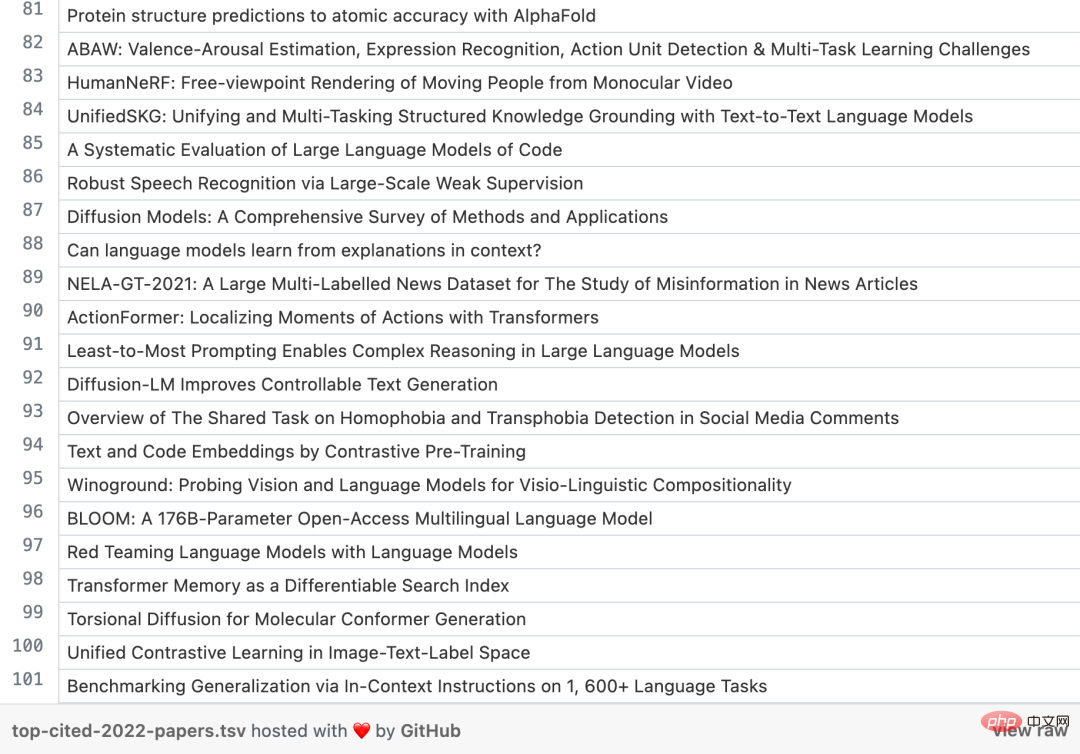

So, what are the top papers we just talked about?

Before we dive into the numbers, let’s take a look at the top papers from the past three years. I’m sure you’ll recognize a few of them.

1. AlphaFold Protein Structure Database: massively expanding the structural coverage of protein-sequence space with high-accuracy models

2. ColabFold: making protein folding accessible to all

3、Hierarchical Text-Conditional Image Generation with CLIP Latents

4、A ConvNet for the 2020s

5. PaLM: Scaling Language Modeling with Pathways

1、"Highly accurate protein structure prediction with AlphaFold》

2、《Swin Transformer: Hierarchical Vision Transformer using Shifted Windows》

3、《Learning Transferable Visual Models From Natural Language Supervision》

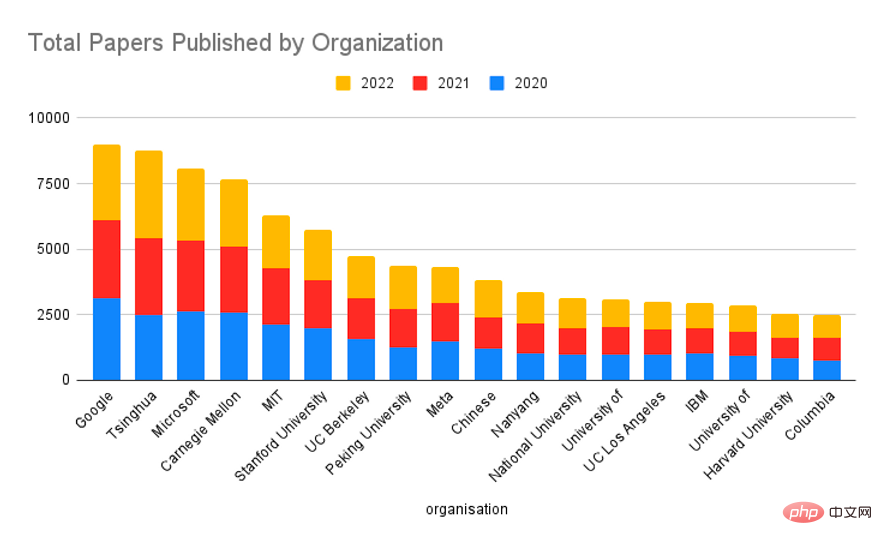

4、《On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?》 5、《Emerging Properties in Self-Supervised Vision Transformers》 1. "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale" ##2、《Language Models are Few-Shot Learners》 3. "YOLOv4: Optimal Speed and Accuracy of Object Detection" 4、《Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer》 5. "Bootstrap your own latent: A new approach to self-supervised Learning" Google has always been the strongest player, followed by Meta , Microsoft, UC Berkeley, DeepMind and Stanford. While industry is now the dominant force in AI research, a single academic institution won't have that big of an impact, but the tail of these institutions is much longer, so when we aggregate by organization type, a balance is achieved . In terms of total research volume, Google has ranked first in the past three years, Tsinghua University, Carnegie Mellon University, MIT, Stanford University and other universities ranked high, while Microsoft ranked third Bit. Overall, academic institutions produce more research than technology companies in the industry, and the two technology giants Google and Microsoft have also published a high number of studies in the past three years.

##Hot papers in 2020

Paper link: https://arxiv.org/abs/2005.14165

Paper link: https://arxiv.org/abs /2004.10934

Paper link: https://arxiv.org/abs/1910.10683

Paper link: https://arxiv. org/abs/2006.07733

Let’s take a look at some of the leading institutions in the top 100 How the number of famous papers ranks:
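The "long tail" effect described above, where industry leads institution by institution yet academia catches up once counts are summed by organization type, can be shown with a toy calculation. The institution names and numbers below are invented for illustration and are not Zeta Alpha's data; the `org_type` mapping is likewise a hypothetical stand-in for however institutions were classified.

```python
from collections import defaultdict

# Toy, hypothetical counts of top-100 papers per institution (NOT Zeta Alpha data):
# two industry labs with many papers each, plus a long tail of universities.
paper_counts = {"Lab A": 20, "Lab B": 12}
org_type = {"Lab A": "industry", "Lab B": "industry"}
for name, n in zip("ABCDEFGH", [6, 5, 5, 4, 4, 3, 3, 2]):
    paper_counts[f"Univ {name}"] = n
    org_type[f"Univ {name}"] = "academia"


def aggregate_by_type(counts: dict[str, int], types: dict[str, str]) -> dict[str, int]:
    """Sum per-institution paper counts by organization type.

    Because each institution on a paper is counted once, a paper co-authored
    by industry and academia contributes to both totals.
    """
    totals: dict[str, int] = defaultdict(int)
    for inst, n in counts.items():
        totals[types.get(inst, "unknown")] += n
    return dict(totals)


totals = aggregate_by_type(paper_counts, org_type)
# Largest single-institution count within each organization type.
best_single = {
    t: max(n for inst, n in paper_counts.items() if org_type[inst] == t)
    for t in totals
}
print(totals)       # {'industry': 32, 'academia': 32}
print(best_single)  # {'industry': 20, 'academia': 6}
```

No university comes close to the top lab individually, yet the two types tie in aggregate. Note that these totals count institution-paper pairs, so they can exceed 100 when papers have co-authors from several organizations.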

In fact, Google's research has long been very strong. In 2017, Google published the paper "Attention Is All You Need", which introduced the Transformer. To this day, Transformers remain the architectural foundation of most NLP and CV models, including ChatGPT.

Last month, on the occasion of Bard's release, Google CEO Sundar Pichai also wrote in an open letter: "Google AI and DeepMind push the state of the art forward. Our Transformer research project and our field-defining 2017 paper, as well as our important advances in diffusion models, are the basis of many of the generative AI applications we are seeing today."

Meanwhile OpenAI, the rising company behind the hugely popular ChatGPT, has had by far the highest conversion rate of research into highly cited results over the past three years. Most of OpenAI's recent research has attracted great attention, especially its work on large language models.
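To make "conversion rate" concrete, here is a small sketch that assumes it means the share of an institution's published papers that land in the top-100 list. That definition is our reading for illustration; the article does not spell out the exact formula, and the two labs and their numbers are hypothetical.

```python
def conversion_rate(top100_papers: int, total_papers: int) -> float:
    """Fraction of an institution's papers that reach the top-100 list.

    Assumed definition for illustration; the article does not spell out
    exactly how the conversion rate is computed.
    """
    if total_papers <= 0:
        raise ValueError("total_papers must be positive")
    if not 0 <= top100_papers <= total_papers:
        raise ValueError("top100_papers must be between 0 and total_papers")
    return top100_papers / total_papers


# Hypothetical labs: a small, focused one and a large, prolific one.
focused_lab = conversion_rate(5, 50)       # 5 top-100 papers out of 50
prolific_lab = conversion_rate(20, 1000)   # 20 top-100 papers out of 1000
print(f"{focused_lab:.0%} vs {prolific_lab:.0%}")  # 10% vs 2%
```

Under this reading, a lab with fewer top-100 papers overall can still lead on conversion rate if it publishes far less, which is how a smaller organization can "beat" larger ones on this metric.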

In 2020, OpenAI released GPT-3, a large language model with 175 billion parameters. It was a game changer for language modeling because it addressed many hard problems in large-scale language modeling. GPT-3 set off a wave of enthusiasm for large language models: in the years since, records for model size have been broken again and again, and researchers keep exploring what large language models can do.

ChatGPT arrived at the end of 2022 and drew enormous attention to text generation and conversational AI. In particular, it showed strong abilities in generating knowledge-based content and code. After Google and Microsoft each announced plans to integrate ChatGPT-like features into their next-generation search engines, many came to believe that ChatGPT will lead a new revolution in AI-generated content (AIGC) and intelligent tools.

Finally, let’s take a look at the 100 most cited papers in 2022:

The number of Twitter mentions has also been included here, since it is sometimes seen as an early indicator of impact. So far, however, its correlation with citations appears weak, and further work is needed.
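One way to quantify how weakly Twitter mentions track citations is a rank correlation. The sketch below computes Spearman's rho (Pearson correlation of average ranks) in plain Python. The choice of Spearman is our assumption, since the article does not say which measure was used; it suits heavy-tailed counts like these because it depends only on ordering. The `citations` and `tweets` values are made up.

```python
def average_ranks(values: list[float]) -> list[float]:
    """Rank values (1 = smallest), giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j share ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical per-paper counts, for illustration only.
citations = [1200, 900, 650, 400, 300, 150]
tweets = [50, 400, 30, 220, 10, 90]
print(f"{spearman(citations, tweets):.2f}")  # 0.20
```

A value near 0 means the mention ranking says little about the citation ranking, while a value near 1 would mean the two orderings largely agree.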
