| Symbol | Meaning |
|---|---|
| $L$ | The number of unique labels. |
| $(\mathbf{x}^l, y) \sim \mathcal{X}, y \in \{0, 1\}^L$ | Labeled dataset, where $y$ is the one-hot representation of the true label. |
| $\mathbf{u} \sim \mathcal{U}$ | Unlabeled dataset. |
| $\mathcal{D} = \mathcal{X} \cup \mathcal{U}$ | The entire dataset, including both labeled and unlabeled samples. |
| $\mathbf{x}$ | A sample, which can be either labeled or unlabeled. |
| $\bar{\mathbf{x}}$ | $\mathbf{x}$ after augmentation is applied. |
| $\mathbf{x}_i$ | The $i$-th sample. |
| $\mathcal{L}$, $\mathcal{L}_s$, $\mathcal{L}_u$ | Loss, supervised loss, and unsupervised loss, respectively. |
| $\mu(t)$ | The unsupervised loss weight, which increases with the number of training steps. |
| $p(y \mid \mathbf{x}), p_\theta(y \mid \mathbf{x})$ | The conditional probability over the label set given the input. |
| $f_\theta(.)$ | The neural network with weights $\theta$; the model we want to train. |
| $\mathbf{z} = f_\theta(\mathbf{x})$ | A vector of logits output by $f$. |
| $\hat{y} = \text{softmax}(\mathbf{z})$ | The predicted label distribution. |
| $D[.,.]$ | A distance function between two distributions, such as mean squared error, cross entropy, or KL divergence. |
| $\beta$ | EMA weighting hyperparameter for the Teacher model's weights. |
| $\alpha, \lambda$ | Parameters for MixUp, $\lambda \sim \text{Beta}(\alpha, \alpha)$. |
| $T$ | Temperature for sharpening predicted distributions. |
| $\tau$ | Confidence threshold for selecting qualified predictions. |

3 Assumptions

The research literature has discussed several assumptions that support certain design decisions in semi-supervised learning methods.

Assumption 1: Smoothness Assumption

If two data samples are close in a high-density region of the feature space, their labels should be the same or very similar.

Assumption 2: Cluster Assumption

The feature space has both dense and sparse regions. Densely grouped data points naturally form clusters, and samples in the same cluster should have the same label. This is a small extension of Assumption 1.

Assumption 3: Low-density Separation Assumption

The decision boundary between classes tends to lie in sparse, low-density regions. Otherwise, the boundary would cut a high-density cluster into two classes, one for each resulting sub-cluster, which would invalidate both Assumption 1 and Assumption 2.

Assumption 4: Manifold Assumption

High-dimensional data tend to lie on low-dimensional manifolds. Although real-world data may be observed in very high dimensions (e.g., images of real-world objects or scenes), they can actually be captured by a lower-dimensional manifold on which certain attributes are captured and similar data points are grouped closely (for example, images of real-world objects or scenes are not drawn from a uniform distribution over all pixel combinations). This lets the model learn a more efficient representation with which to discover and measure similarity between unlabeled data points. This is also the foundation of representation learning. For a more detailed explanation of this assumption, see the Cross Validated discussion "What is the manifold assumption in semi-supervised learning?".

Link: https://stats.stackexchange.com/questions/66939/what-is-the-manifold-assumption-in-semi-supervised-learning

4 Consistency Regularization

Consistency regularization, also called consistency training, assumes that randomness within the neural network (e.g., from dropout) or data augmentation transformations should not change the model's prediction for the same input. Every method in this section has a consistency regularization loss, denoted $\mathcal{L}_u$. Several self-supervised learning methods, such as SimCLR, BYOL, and SimCSE, have adopted this idea: different augmented versions of the same sample should produce the same representation. Cross-view training in language modeling and multi-view learning in self-supervised learning share the same motivation.

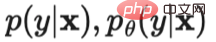

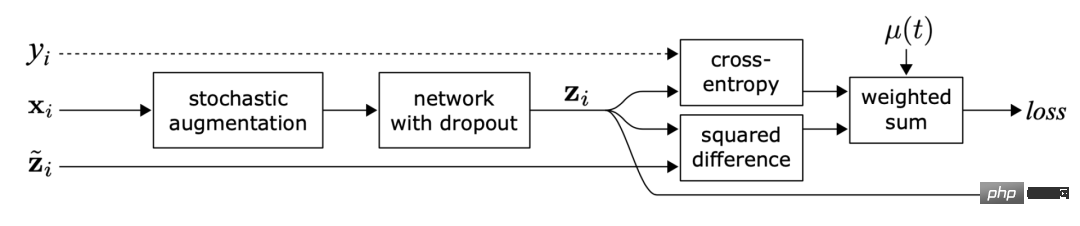

(1) Π-model

Figure 1: Overview of the Π-model. The same input is perturbed with different random augmentations and dropout masks to produce two versions, which are passed through the network to obtain two outputs. The Π-model encourages the two outputs to be consistent. (Image source: Laine and Aila's 2017 paper "Temporal Ensembling for Semi-Supervised Learning")

In the 2016 paper "Regularization With Stochastic Transformations and Perturbations for Deep Semi-Supervised Learning", Sajjadi et al. proposed an unsupervised loss that generates two versions of the same data point through stochastic transformations (such as dropout and random max-pooling) and minimizes the difference between the two outputs after passing them through the network. Because the loss does not use labels explicitly, it can be applied to unlabeled data. Laine and Aila later named this approach the Π-model in the 2017 paper "Temporal Ensembling for Semi-Supervised Learning":

$$\mathcal{L}_u^\Pi = \sum_{\mathbf{x} \in \mathcal{D}} \text{MSE}(f_\theta(\mathbf{x}), f'_\theta(\mathbf{x}))$$

where $f'$ denotes the same neural network with a different stochastic augmentation or dropout mask applied. This loss uses the entire dataset.
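The Π-model loss can be sketched as follows. This is a minimal illustration, assuming a hypothetical toy network (inverted dropout on the input followed by a linear softmax layer) as the only source of randomness; real implementations use a deep network plus random augmentations. Each sample is passed through the model twice with independent dropout masks, and the MSE between the two predicted distributions is summed over the whole dataset without touching any label.

```python
import math
import random


def softmax(logits):
    # Subtract the max logit for numerical stability
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def stochastic_forward(weights, x, dropout_rate, rng):
    """Toy stand-in for f_theta: inverted dropout on the input, then linear + softmax."""
    keep = 1.0 - dropout_rate
    x_dropped = [xi / keep if rng.random() < keep else 0.0 for xi in x]
    logits = [sum(w * xi for w, xi in zip(row, x_dropped)) for row in weights]
    return softmax(logits)


def mse(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q)) / len(p)


def pi_model_loss(weights, dataset, dropout_rate=0.5, seed=0):
    """Sum over every sample in D (labels are never used) of MSE between two stochastic passes."""
    rng = random.Random(seed)
    total = 0.0
    for x in dataset:
        p1 = stochastic_forward(weights, x, dropout_rate, rng)  # f_theta(x)
        p2 = stochastic_forward(weights, x, dropout_rate, rng)  # f'_theta(x): fresh dropout mask
        total += mse(p1, p2)
    return total


weights = [[0.5, -1.0, 0.3], [-0.2, 0.8, 1.1]]  # hypothetical 2-class, 3-feature model
data = [[1.0, 2.0, 0.5], [0.3, -0.7, 1.2]]
print(pi_model_loss(weights, data))
```

With `dropout_rate=0.0` the two passes are identical and the loss is exactly zero, which is a quick sanity check that all of the signal comes from the stochastic perturbation.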

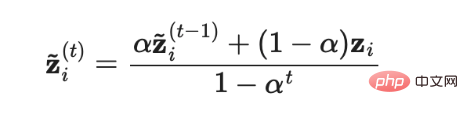

(2) Temporal ensembling

Figure 2: Overview of temporal ensembling. The learning target for each sample is the exponential moving average (EMA) of its label predictions. (Image source: Laine and Aila's 2017 paper "Temporal Ensembling for Semi-Supervised Learning")

The Π-model requires each sample to pass through the network twice, which doubles the computation cost. To reduce the cost, temporal ensembling maintains an exponential moving average (EMA) of the model's predictions over time for each training sample, $\tilde{\mathbf{y}}_i$, as the learning target; this EMA only needs to be evaluated and updated once per epoch. Because the ensemble output $\tilde{\mathbf{y}}_i$ is initialized to $\mathbf{0}$, it is normalized by $(1-\beta^t)$ to correct this startup bias. The Adam optimizer has a similar bias-correction term for the same reason.

$$\tilde{\mathbf{y}}^{(t)} = \frac{\beta \tilde{\mathbf{y}}^{(t-1)} + (1-\beta)\mathbf{z}^{(t)}}{1-\beta^t}$$

where $\tilde{\mathbf{y}}^{(t)}$ is the ensemble prediction at epoch $t$ and $\mathbf{z}^{(t)}$ is the model prediction in the current round. Note that since $\tilde{\mathbf{y}}^{(0)} = \mathbf{0}$, after bias correction $\tilde{\mathbf{y}}^{(1)}$ is exactly equal to the model prediction $\mathbf{z}^{(1)}$ at epoch 1.
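A minimal sketch of the per-sample ensemble target follows. It keeps the uncorrected running average in a separate accumulator and divides by $1-\beta^t$ only when the target is read, which is how Adam handles the same bias; at $t=1$ it gives $\tilde{\mathbf{y}}^{(1)} = \mathbf{z}^{(1)}$, matching the note above. The class name and the default `beta=0.6` are illustrative choices, not values taken from this article.

```python
class TemporalEnsemble:
    """Per-sample EMA of predictions with start-up bias correction (illustrative sketch)."""

    def __init__(self, num_samples, num_classes, beta=0.6):
        self.beta = beta
        # Uncorrected accumulator for every training sample, initialized to zero
        self.acc = [[0.0] * num_classes for _ in range(num_samples)]
        self.epoch = 0

    def update(self, predictions):
        """Fold in this epoch's predictions z^(t) (one row per training sample)."""
        self.epoch += 1
        for row, z in zip(self.acc, predictions):
            for k, zk in enumerate(z):
                row[k] = self.beta * row[k] + (1.0 - self.beta) * zk

    def targets(self):
        """Bias-corrected learning targets: accumulator / (1 - beta^t)."""
        if self.epoch == 0:
            raise ValueError("call update() at least once before reading targets")
        correction = 1.0 - self.beta ** self.epoch
        return [[v / correction for v in row] for row in self.acc]


ens = TemporalEnsemble(num_samples=2, num_classes=2)
ens.update([[0.7, 0.3], [0.2, 0.8]])   # epoch 1
print(ens.targets())                   # matches the epoch-1 predictions up to rounding
ens.update([[0.9, 0.1], [0.4, 0.6]])   # epoch 2
print(ens.targets())
```

Only one forward pass per sample per epoch is needed to refresh the targets, which is the cost saving over the Π-model.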

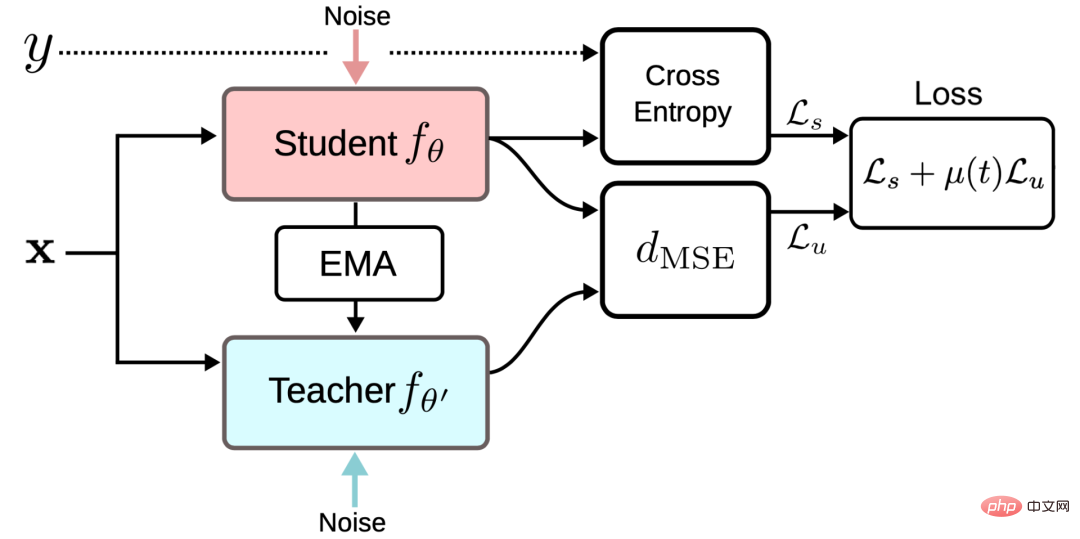

(3) Mean teachers

Figure 3: Overview of the Mean Teacher framework. (Image source: Tarvainen and Valpola's 2017 paper "Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results")

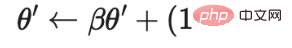

Temporal ensembling tracks the EMA of the label predictions for each training sample as the learning target. However, each sample's target is updated only once per epoch, which makes the approach clumsy when the training dataset is large. To overcome the slow target updates, Tarvainen and Valpola proposed the Mean Teacher algorithm in the 2017 paper "Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results". It updates the target by tracking a moving average of the model weights instead of the model outputs. The original model with weights $\theta$ is called the Student model, and the model whose weights $\theta'$ are the moving average of consecutive Student models is called the Mean Teacher:

$$\theta' \leftarrow \beta \theta' + (1-\beta)\theta$$

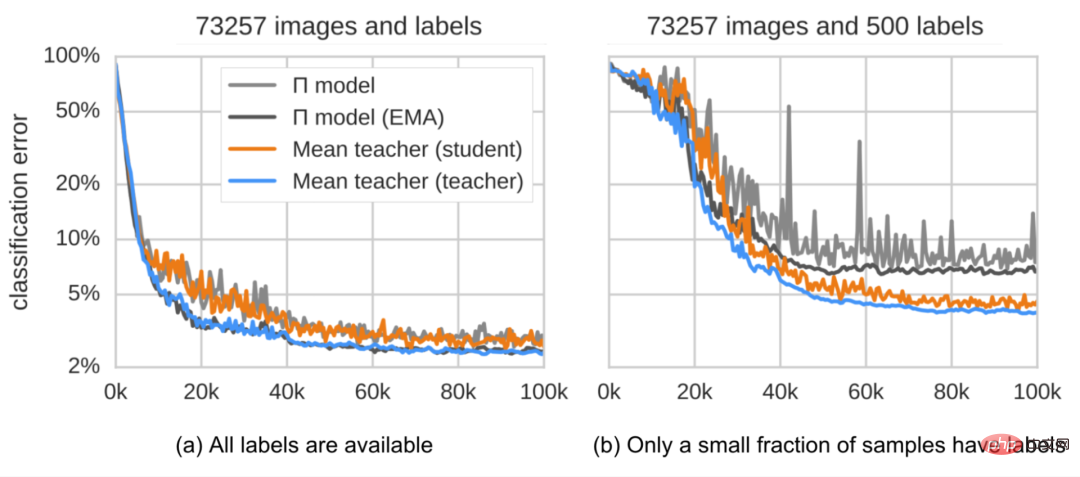

The consistency regularization loss is the distance between the predictions of the Student and the Teacher, and this gap is minimized. The Mean Teacher is expected to provide more accurate predictions than the Student, which was confirmed empirically, as shown in Figure 4.

Figure 4: Classification error of the Π-model and Mean Teacher on the SVHN dataset. The Mean Teacher (orange line) performs better than the Student model (blue line). (Image source: Tarvainen and Valpola's 2017 paper "Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results")

According to the ablation study:

- Input augmentation (e.g., random flips of input images, Gaussian noise) or dropout on the Student model is necessary for good performance. The Teacher model does not need dropout.

- Performance is sensitive to the EMA decay hyperparameter β. A good strategy is to use a smaller β = 0.99 during the ramp-up stage and a larger β = 0.999 in the later stage, when the Student's improvement slows down.

- Using MSE as the consistency cost function was found to perform better than other cost functions such as KL divergence.
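The weight EMA and the β schedule from the ablation can be sketched as below. This is an illustration over a flat list of parameters; `rampup_steps` is a hypothetical switch point, and the "SGD step" in the toy loop is only a stand-in that moves the Student toward 1.0 so the Teacher's lag is visible.

```python
def ema_update(teacher, student, beta):
    """theta' <- beta * theta' + (1 - beta) * theta, applied to each parameter."""
    return [beta * t + (1.0 - beta) * s for t, s in zip(teacher, student)]


def ema_decay(step, rampup_steps=1000, beta_early=0.99, beta_late=0.999):
    """Smaller beta while the Student is still improving quickly, larger beta later.
    rampup_steps is a hypothetical switch point, not a value taken from the paper."""
    return beta_early if step < rampup_steps else beta_late


def consistency_cost(student_probs, teacher_probs):
    """MSE between the Student's and the Teacher's predicted distributions."""
    n = len(student_probs)
    return sum((s - t) ** 2 for s, t in zip(student_probs, teacher_probs)) / n


# Toy run: the "Student" parameters drift toward 1.0; the Teacher lags smoothly behind.
student = [0.0, 0.0]
teacher = list(student)
for step in range(2000):
    student = [s + 0.1 * (1.0 - s) for s in student]   # stand-in for an SGD step
    teacher = ema_update(teacher, student, ema_decay(step))
print(student, teacher)
```

Because the Teacher is updated after every Student step rather than once per epoch, its targets stay fresh even on large datasets.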

(4) Noisy samples as learning targets

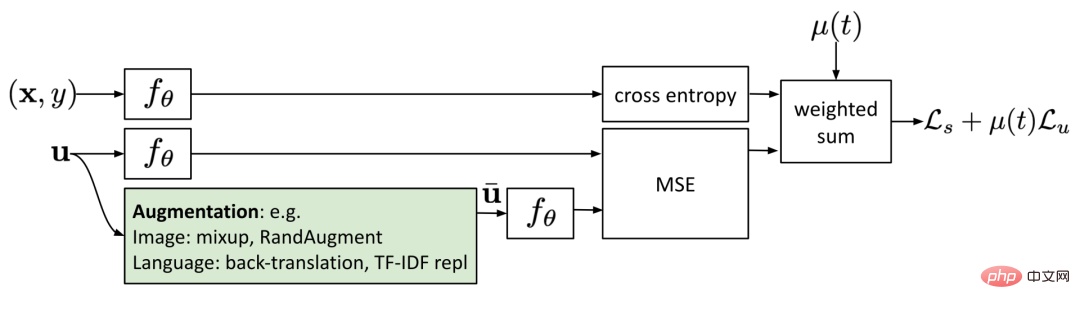

Several recent consistency training methods learn to minimize the prediction difference between an original unlabeled sample and its augmented version. This is very similar to the Π-model, but the consistency regularization loss is applied only to unlabeled data.

Figure 5: Consistency training using noisy samples

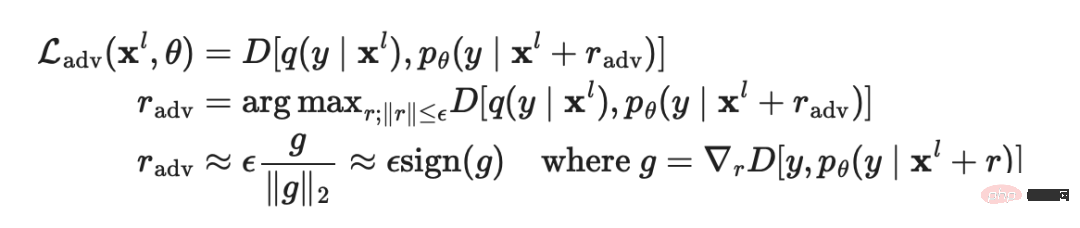

In the paper "Explaining and Harnessing Adversarial Examples" published by Goodfellow et al. in 2014, adversarial training (Adversarial Training) applies adversarial noise to the input and trains The model makes it robust to such adversarial attacks.

The application formula of this method in supervised learning is as follows:

Where  is the true distribution, which approximates the one-hot encoding of the true value label,

is the true distribution, which approximates the one-hot encoding of the true value label,  is the model prediction, and

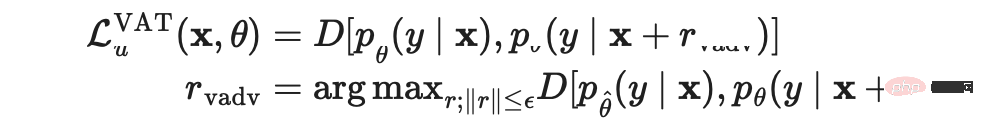

is the model prediction, and  is the distance function that calculates the difference between the two distributions. Miyato et al. proposed virtual adversarial training in the paper "Virtual Adversarial Training: A Regularization Method for Supervised and Semi-Supervised Learning" published in 2018 (Virtual Adversarial Training, VAT), this method is an extension of the adversarial training idea in the field of semi-supervised learning. Since

is the distance function that calculates the difference between the two distributions. Miyato et al. proposed virtual adversarial training in the paper "Virtual Adversarial Training: A Regularization Method for Supervised and Semi-Supervised Learning" published in 2018 (Virtual Adversarial Training, VAT), this method is an extension of the adversarial training idea in the field of semi-supervised learning. Since  is unknown, VAT replaces the unknown term with the current model's prediction of the original input when the current weight is set to

is unknown, VAT replaces the unknown term with the current model's prediction of the original input when the current weight is set to  . It should be noted that

. It should be noted that  is a fixed value of the model weight, so gradient updates will not be performed on

is a fixed value of the model weight, so gradient updates will not be performed on  .

.
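To make the two perturbations concrete, here is a sketch for a hypothetical binary logistic model $p_\theta(y=1 \mid \mathbf{x}) = \sigma(\mathbf{w}^\top \mathbf{x})$, where the gradients are analytic: for cross entropy with label $y$ the input gradient is $(p - y)\mathbf{w}$, and for the Bernoulli KL against a fixed prediction $\hat{p}$ it is $(p - \hat{p})\mathbf{w}$. Because the VAT gradient vanishes at $r = 0$, the sketch takes one power-iteration step from a small random direction `xi * d`, in the spirit of Miyato et al.; a real implementation would obtain gradients by backpropagation through a neural network.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def predict(w, x):
    """Toy binary model p_theta(y=1 | x) = sigmoid(w . x)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)))


def bernoulli_kl(p, q, eps=1e-12):
    """KL(Bern(p) || Bern(q)), used here as the distance D."""
    p = min(max(p, eps), 1.0 - eps)
    q = min(max(q, eps), 1.0 - eps)
    return p * math.log(p / q) + (1.0 - p) * math.log((1.0 - p) / (1.0 - q))


def scale_to_norm(v, norm):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("zero gradient; cannot pick a direction")
    return [norm * c / length for c in v]


def adversarial_perturbation(w, x, y, epsilon):
    """Supervised case: r_adv ~ epsilon * g / ||g||_2 with g = grad_r CE(y, p(x + r)) at r = 0.
    For this model the gradient is analytic: g = (p - y) * w."""
    p = predict(w, x)
    return scale_to_norm([(p - y) * wi for wi in w], epsilon)


def vat_perturbation(w, x, epsilon, xi=1e-3, seed=0):
    """VAT: the target is the fixed prediction p_hat = p(y | x) (treated as a constant).
    The KL gradient is zero at r = 0, so take one power-iteration step from a small
    random direction xi * d and use the gradient there: g = (p(x + xi*d) - p_hat) * w."""
    rng = random.Random(seed)
    d = scale_to_norm([rng.gauss(0.0, 1.0) for _ in x], 1.0)
    p_hat = predict(w, x)
    p_probe = predict(w, [xc + xi * dc for xc, dc in zip(x, d)])
    g = [(p_probe - p_hat) * wi for wi in w]
    return scale_to_norm(g, epsilon)


def vat_loss(w, x, epsilon):
    r = vat_perturbation(w, x, epsilon)
    x_adv = [xc + rc for xc, rc in zip(x, r)]
    return bernoulli_kl(predict(w, x), predict(w, x_adv))


w, x = [1.0, -2.0], [0.5, 0.5]
print(adversarial_perturbation(w, x, y=1, epsilon=0.1))
print(vat_loss(w, x, epsilon=0.1))
```

The two functions differ only in the target: the supervised version needs the label $y$, while VAT uses the model's own fixed prediction, which is why VAT also works on unlabeled samples.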

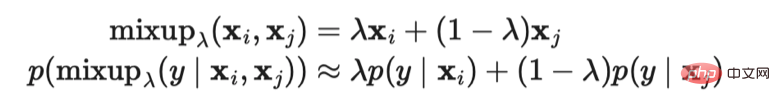

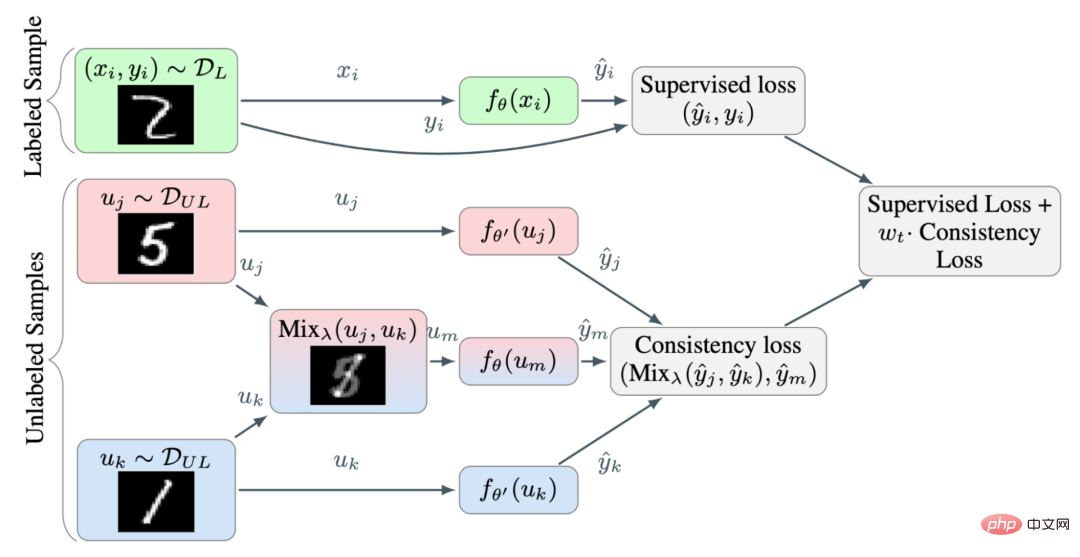

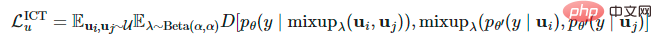

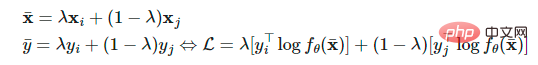

The VAT loss applies to both labeled and unlabeled samples. It is a negative measure of the smoothness of the current model's prediction manifold at each data point, so optimizing it encourages a smoother prediction manifold.

Verma et al. proposed interpolation consistency training (ICT) in the 2019 paper "Interpolation Consistency Training for Semi-Supervised Learning". It augments the dataset with more interpolations of data points and encourages the model's predictions to be consistent with the interpolations of the corresponding labels. Hongyi Zhang et al. proposed MixUp in the 2018 paper "mixup: Beyond Empirical Risk Minimization"; it mixes two images through a simple weighted sum. Building on this idea, ICT expects the prediction model to produce a label for a mixed sample that matches the interpolation of the predictions on the corresponding inputs:

$$
\begin{aligned}
\text{mixup}_\lambda(\mathbf{x}_i, \mathbf{x}_j) &= \lambda \mathbf{x}_i + (1-\lambda)\mathbf{x}_j \\
p(\text{mixup}_\lambda(y \mid \mathbf{x}_i, y \mid \mathbf{x}_j)) &\approx \lambda p(y \mid \mathbf{x}_i) + (1-\lambda) p(y \mid \mathbf{x}_j) \\
\mathcal{L}^\text{ICT}_u &= \mathbb{E}_{\mathbf{u}_i, \mathbf{u}_j \sim \mathcal{U}} \mathbb{E}_{\lambda \sim \text{Beta}(\alpha, \alpha)} D[p_\theta(y \mid \text{mixup}_\lambda(\mathbf{u}_i, \mathbf{u}_j)), \text{mixup}_\lambda(p_{\theta'}(y \mid \mathbf{u}_i), p_{\theta'}(y \mid \mathbf{u}_j))]
\end{aligned}
$$

where $\theta'$ is the moving average of $\theta$, i.e., the Mean Teacher model.

Figure 6: Overview of interpolation consistency training. MixUp is used to generate more interpolated samples, with interpolated labels as learning targets. (Image source: Verma et al.'s 2019 paper "Interpolation Consistency Training for Semi-Supervised Learning")

Because two randomly selected unlabeled samples are very likely to belong to different classes (for example, ImageNet has 1000 classes), applying MixUp between two random unlabeled samples is likely to produce an interpolation near the decision boundary. According to the low-density separation assumption, the decision boundary tends to lie in low-density regions, so enforcing consistent predictions on such interpolations pushes the boundary toward those regions.
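The ICT objective for a single pair of unlabeled samples can be sketched as follows, assuming a hypothetical linear softmax classifier for both the Student ($\theta$) and the Mean Teacher ($\theta'$) and MSE as $D$. The Teacher weights only produce the target; in a real framework no gradient would flow through them.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights, x):
    """Toy linear softmax classifier standing in for p_theta(y | x)."""
    return softmax([sum(w * xi for w, xi in zip(row, x)) for row in weights])


def mixup(a, b, lam):
    """mixup_lambda(a, b) = lam * a + (1 - lam) * b, element-wise."""
    return [lam * ai + (1.0 - lam) * bi for ai, bi in zip(a, b)]


def ict_loss(student_w, teacher_w, u_i, u_j, lam):
    """D[p_theta(y | mixup(u_i, u_j)), mixup(p_theta'(y | u_i), p_theta'(y | u_j))] with D = MSE.
    teacher_w plays the role of the Mean Teacher weights theta' (target only, no gradient)."""
    prediction = predict(student_w, mixup(u_i, u_j, lam))
    target = mixup(predict(teacher_w, u_i), predict(teacher_w, u_j), lam)
    return sum((p - t) ** 2 for p, t in zip(prediction, target)) / len(prediction)


rng = random.Random(0)
alpha = 0.5                          # hypothetical MixUp parameter
lam = rng.betavariate(alpha, alpha)  # lambda ~ Beta(alpha, alpha)
student = [[0.5, -1.0], [0.2, 0.4]]
teacher = [[0.45, -0.9], [0.25, 0.35]]
print(lam, ict_loss(student, teacher, [1.0, 2.0], [-1.0, 0.5], lam))
```

In practice the expectation is estimated by averaging this loss over many random pairs and fresh draws of λ per mini-batch.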

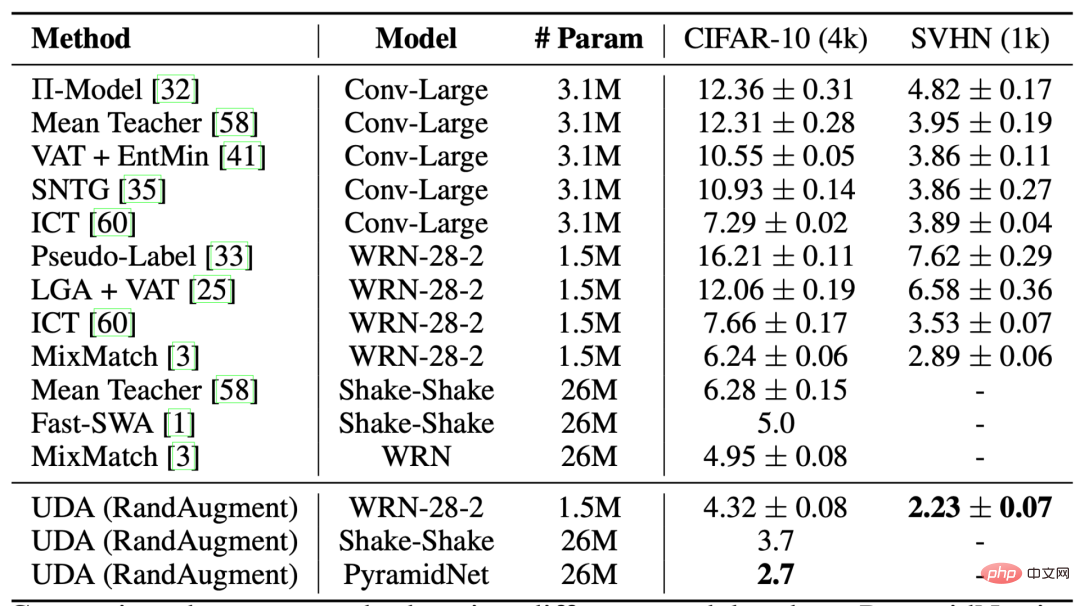

Similar to VAT, Unsupervised Data Augmentation (UDA), proposed by Xie et al. in the 2020 paper "Unsupervised Data Augmentation for Consistency Training", learns to predict the same output for an unlabeled sample and its augmented version. UDA focuses specifically on how the "quality" of the noise affects semi-supervised performance under consistency training. To produce meaningful and effective noisy samples, it is crucial to use advanced data augmentation methods. A good augmentation method should produce valid (i.e., label-preserving) and diverse noise with targeted inductive biases.

For images, UDA uses RandAugment, proposed by Cubuk et al. in the 2019 paper "RandAugment: Practical automated data augmentation with a reduced search space". It uniformly samples from the augmentation operations available in PIL (the Python Imaging Library), requires no learning or optimization, and is therefore much cheaper than AutoAugment.

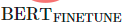

Figure 7: Comparison of various semi-supervised learning methods on CIFAR-10 classification. Fully supervised Wide-ResNet-28-2 and PyramidNet+ShakeDrop reach error rates of **5.4** and **2.7**, respectively, when trained on all 50,000 samples without RandAugment.

For language, UDA combines back-translation with TF-IDF-based word replacement. Back-translation preserves the high-level meaning but may not retain certain words, while TF-IDF-based word replacement drops uninformative words with low TF-IDF scores. In experiments on language tasks, the researchers found UDA to be complementary to transfer learning and representation learning; for example, fine-tuning BERT on in-domain unlabeled data ($\text{BERT}_\text{FINETUNE}$ in Figure 8) further improves performance.

Figure 8: Comparison of UDA with different initialization settings on various text classification tasks. (Image source: Xie et al.'s 2020 paper "Unsupervised Data Augmentation for Consistency Training")

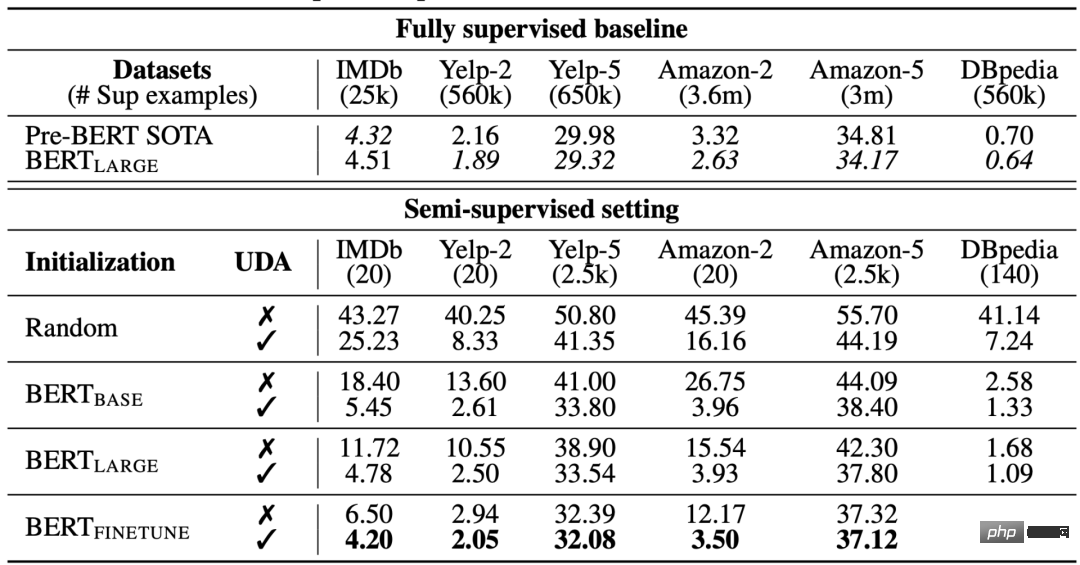

When computing $\mathcal{L}_u$, UDA can use the following three training techniques to improve results:

- Low-confidence masking: mask out samples whose prediction confidence is below a threshold $\tau$.

- Sharpening the prediction distribution: use a low temperature $T$ in the softmax to sharpen the predicted probability distribution.

- In-domain data filtration: to extract more in-domain data from large out-of-domain datasets, the researchers train a classifier to predict in-domain labels and then retain samples with high-confidence predictions as in-domain candidates.

$$
\begin{aligned}
p^\text{sharp}_{\hat{\theta}}(y \mid \mathbf{x}) &= \frac{\exp(z^{(y)} / T)}{\sum_{y'} \exp(z^{(y')} / T)} \\
\mathcal{L}_u^\text{UDA} &= \mathbb{1}\big[\max_{y'} p_{\hat{\theta}}(y' \mid \mathbf{x}) > \tau\big] \cdot D[p^\text{sharp}_{\hat{\theta}}(y \mid \mathbf{x}), p_\theta(y \mid \bar{\mathbf{x}})]
\end{aligned}
$$

where $\hat{\theta}$ is a fixed copy of the model weights, as in VAT, so it receives no gradient updates; $z^{(y)}$ is the logit for class $y$ produced with $\hat{\theta}$; $\bar{\mathbf{x}}$ is the augmented data point; $\tau$ is the prediction confidence threshold; and $T$ is the distribution sharpening temperature.
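The masked, sharpened UDA loss for one sample can be sketched as follows, using KL divergence as $D$. The inputs are raw logit lists from a hypothetical model, and the defaults `tau=0.8` and `temperature=0.4` are illustrative values only.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [v / temperature for v in logits]
    m = max(scaled)
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q), used here as the distance D."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0.0)


def uda_loss(fixed_logits, student_logits_on_augmented, tau=0.8, temperature=0.4):
    """fixed_logits: logits of the fixed copy theta_hat on the original x (a constant target).
    student_logits_on_augmented: logits of theta on the augmented x_bar."""
    confidence = max(softmax(fixed_logits))
    if confidence <= tau:  # low-confidence masking: the indicator is 0
        return 0.0
    target = softmax(fixed_logits, temperature)  # sharpened target with T < 1
    return kl_divergence(target, softmax(student_logits_on_augmented))


print(uda_loss([0.1, 0.0], [3.0, -1.0]))  # 0.0: confidence is below tau, so the sample is masked
print(uda_loss([4.0, 0.0], [1.0, 0.5]))   # confident sample: KL to the sharpened target
```

Masking and sharpening work together: uncertain targets are dropped entirely, and the remaining targets are made more confident before the consistency loss is applied.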

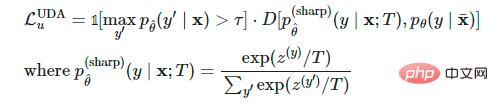

5 Pseudo Labeling

Pseudo labeling was proposed by Lee et al. in the 2013 paper "Pseudo-Label: The Simple and Efficient Semi-Supervised Learning Method for Deep Neural Networks". It assigns pseudo labels to unlabeled samples according to the maximum softmax probability predicted by the current model, and then trains the model on labeled and unlabeled samples simultaneously in a purely supervised setup.

Why do pseudo labels work? Pseudo labeling is in effect equivalent to entropy regularization, which minimizes the conditional entropy of class probabilities on unlabeled data and thereby favors low-density separation between classes. In other words, the predicted class probabilities measure class overlap, so minimizing the entropy reduces class overlap and yields low-density separation.

Figure 9: (a) t-SNE visualization of the outputs on the MNIST test set after training on only 600 labeled samples; (b) t-SNE visualization of the outputs on the MNIST test set after training on 600 labeled samples plus pseudo labels for 60,000 unlabeled samples. Pseudo labeling leads to better separation in the learned embedding space. (Image source: Lee et al.'s 2013 paper "Pseudo-Label: The Simple and Efficient Semi-Supervised Learning Method for Deep Neural Networks")

Training with pseudo labels is naturally an iterative process. Here, the model that produces pseudo labels is called the Teacher model, and the model that learns from them is called the Student model.
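A sketch of the pseudo-label loss follows, operating on predicted probability vectors from a hypothetical model. The unsupervised weight `mu` corresponds to $\mu(t)$ in the notation table; its schedule is not shown. The helper `entropy` illustrates the link to entropy regularization: the cross entropy against the argmax pseudo label equals $-\log \max_k p_k$, which never exceeds the entropy of $p$, and both shrink as predictions become more confident.

```python
import math


def pseudo_label(probs):
    """Hard pseudo label: the class with the maximum predicted probability."""
    return max(range(len(probs)), key=lambda k: probs[k])


def cross_entropy(label, probs, eps=1e-12):
    return -math.log(max(probs[label], eps))


def entropy(probs):
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def pseudo_label_loss(labeled, unlabeled_probs, mu):
    """L = L_s + mu * L_u.
    labeled: list of (true_label, predicted_probs); unlabeled_probs: predicted_probs per sample.
    In a real network the pseudo labels would be recomputed and treated as fixed targets."""
    l_s = sum(cross_entropy(y, p) for y, p in labeled) / len(labeled)
    l_u = sum(cross_entropy(pseudo_label(p), p) for p in unlabeled_probs) / len(unlabeled_probs)
    return l_s + mu * l_u


p = [0.7, 0.2, 0.1]
print(pseudo_label(p), cross_entropy(pseudo_label(p), p), entropy(p))
```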

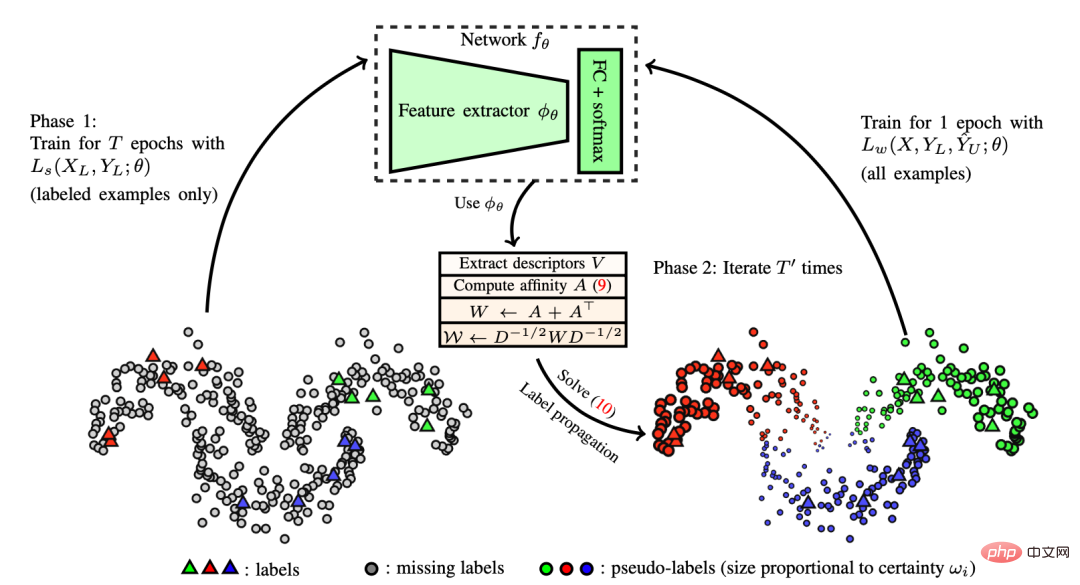

(1) Label propagation

Iscen et al. proposed label propagation in the 2019 paper "Label Propagation for Deep Semi-supervised Learning". It constructs a similarity graph among samples based on their feature embeddings. Pseudo labels are then "diffused" from known samples to unlabeled ones, with propagation weights proportional to the pairwise similarity scores in the graph. Conceptually it resembles a k-NN classifier, and both suffer from not scaling well to large datasets.

Figure 10: Illustration of how label propagation works. (Image source: Iscen et al.'s 2019 paper "Label Propagation for Deep Semi-supervised Learning")
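The diffusion step can be illustrated with a generic label-spreading iteration, $F \leftarrow \alpha S F + (1-\alpha) Y$, over a dense Gaussian-similarity graph. This is a sketch under stated assumptions, not necessarily the exact procedure of Iscen et al. (which operates on network embeddings at scale); `sigma`, `alpha`, and `iters` are hypothetical parameters, and every point is assumed to have at least one non-negligible edge so the degree normalization is well defined.

```python
import math


def label_propagation(points, labels, num_classes, sigma=1.0, alpha=0.9, iters=100):
    """Label spreading over a dense Gaussian-similarity graph.
    points: feature vectors (e.g. embeddings); labels: class id, or None if unlabeled."""
    n = len(points)
    # Affinity W_ij = exp(-||x_i - x_j||^2 / sigma^2), zero on the diagonal
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                d2 = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
                W[i][j] = math.exp(-d2 / sigma ** 2)
    deg = [sum(row) for row in W]
    # Symmetric normalization S = D^{-1/2} W D^{-1/2}
    S = [[W[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # Seed matrix Y: one-hot rows for labeled points, zero rows for unlabeled points
    Y = [[1.0 if labels[i] == k else 0.0 for k in range(num_classes)] for i in range(n)]
    F = [row[:] for row in Y]
    for _ in range(iters):
        # F <- alpha * S F + (1 - alpha) * Y: diffuse scores along weighted edges
        F = [[alpha * sum(S[i][j] * F[j][k] for j in range(n)) + (1.0 - alpha) * Y[i][k]
              for k in range(num_classes)] for i in range(n)]
    return [max(range(num_classes), key=lambda k, row=F[i]: row[k]) for i in range(n)]


points = [[0.0], [1.0], [9.0], [10.0]]
print(label_propagation(points, [0, None, None, 1], num_classes=2))
```

The dense `n x n` affinity matrix is exactly why this family of methods scales poorly, mirroring the k-NN comparison above.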

(2) Self-Training

Self-training is not a new concept; it appears in Scudder's 1965 paper "Probability of error of some adaptive pattern-recognition machines" and in Nigam & Ghani's CIKM 2000 paper "Analyzing the Effectiveness and Applicability of Co-training". It is an iterative algorithm that alternates between the following two steps until every unlabeled sample has been assigned a label:

- First, it builds a classifier on the labeled data.

- Next, it uses this classifier to predict labels for the unlabeled data and converts the most confident ones into labeled samples.
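The two-step loop can be sketched with a deliberately simple classifier. Here a hypothetical nearest-centroid model on 1-D features stands in for any trainable model, confidence is a softmax over negative distances to the class centroids, and `per_round` (how many samples are promoted per iteration) is an assumed parameter.

```python
import math


def fit_centroids(labeled):
    """'Classifier' = per-class mean of 1-D features (a stand-in for any trainable model)."""
    sums, counts = {}, {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict_with_confidence(centroids, x):
    """Softmax over negative distances to each centroid; returns (label, confidence)."""
    classes = sorted(centroids)
    scores = [-abs(x - centroids[c]) for c in classes]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    best = max(range(len(classes)), key=lambda i: exps[i])
    return classes[best], exps[best] / sum(exps)


def self_training(labeled, unlabeled, per_round=2):
    labeled, unlabeled = list(labeled), list(unlabeled)
    while unlabeled:
        centroids = fit_centroids(labeled)  # step 1: build a classifier on labeled data
        ranked = sorted(unlabeled, key=lambda x: predict_with_confidence(centroids, x)[1],
                        reverse=True)       # step 2: rank unlabeled samples by confidence
        for x in ranked[:per_round]:        # promote the most confident predictions
            labeled.append((x, predict_with_confidence(centroids, x)[0]))
            unlabeled.remove(x)
    return labeled


result = dict(self_training([(0.0, 0), (10.0, 1)], [1.0, 2.0, 8.0, 9.0, 4.0]))
print(result)
```

The ambiguous point `4.0` is labeled last, after the centroids have been refined by the easier samples; this ordering by confidence is the core idea of self-training.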

In the 2020 paper "Self-training with Noisy Student improves ImageNet classification", Xie et al. applied self-training to deep learning with strong results. On ImageNet classification, they first trained an EfficientNet model as the Teacher to generate pseudo labels for 300 million unlabeled images, and then trained a larger EfficientNet model as the Student to learn from both the true-labeled images and the pseudo-labeled images. A key element of their setup is adding noise while training the Student, whereas the Teacher is not noised when generating pseudo labels; hence the name "Noisy Student". The Student is noised with stochastic depth, dropout, and RandAugment. The Student outperforms the Teacher largely thanks to this noise, which has a compounding effect of encouraging smooth decision boundaries on both labeled and unlabeled data. Several other important technical settings for Noisy Student self-training include:

- The Student model should be sufficiently large (i.e., larger than the Teacher) to fit more data.

- The noisy Student should be combined with data balancing, which is especially important for balancing the number of pseudo-labeled images per class.

- Soft pseudo labels work better than hard ones.

The noisy Student is also more robust against FGSM (the Fast Gradient Sign Method, an attack that uses the gradient of the loss with respect to the input and adjusts the input to maximize the loss), even though the model is not optimized for adversarial robustness.

Du et al. proposed SentAugment in the 2020 paper "Self-training Improves Pre-training for Natural Language Understanding" to address the shortage of in-domain unlabeled data for self-training in the language domain. It relies on sentence embeddings to retrieve unlabeled in-domain samples from a large corpus and uses the retrieved sentences for self-training.

(3) Reducing confirmation bias

Confirmation bias is the problem of the Teacher model providing incorrect pseudo labels because it is not yet good enough. Overfitting to wrong labels may not yield a better Student model.

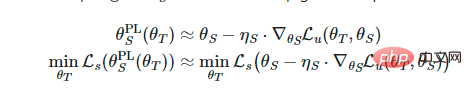

To reduce confirmation bias, Eric Arazo et al. proposed two techniques in the paper "Pseudo-Labeling and Confirmation Bias in Deep Semi-Supervised Learning".

The first is MixUp with soft labels. Given two samples $(\mathbf{x}_i, \mathbf{x}_j)$ and their corresponding true or pseudo labels $(y_i, y_j)$, the interpolated-label equation translates into a cross-entropy loss on the softmax output:

$$
\begin{aligned}
\bar{\mathbf{x}} &= \lambda \mathbf{x}_i + (1-\lambda) \mathbf{x}_j \\
\bar{y} &= \lambda y_i + (1-\lambda) y_j \\
\mathcal{L} &= -\lambda\, y_i^\top \log \text{softmax}(f_\theta(\bar{\mathbf{x}})) - (1-\lambda)\, y_j^\top \log \text{softmax}(f_\theta(\bar{\mathbf{x}}))
\end{aligned}
$$

MixUp alone is not enough when there are too few labeled samples. The second technique therefore oversamples the labeled samples to guarantee a minimum number of them in every mini-batch. This works better than upweighting labeled samples, because it leads to more frequent updates rather than fewer, larger updates, which are less stable.
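Both techniques can be sketched in a few lines. The cross-entropy helper shows that the two-term loss above is the same as cross entropy against the mixed soft label $\bar{y}$, because cross entropy is linear in the target. The batch builder is one hypothetical way to oversample: it reserves `min_labeled` slots filled with replacement from a small labeled pool; the names and ID format are illustrative.

```python
import math
import random


def mixup_pair(x_i, x_j, y_i, y_j, lam):
    """Mix inputs and their (true or soft pseudo) label distributions with the same lambda."""
    x_bar = [lam * a + (1.0 - lam) * b for a, b in zip(x_i, x_j)]
    y_bar = [lam * a + (1.0 - lam) * b for a, b in zip(y_i, y_j)]
    return x_bar, y_bar


def mixup_cross_entropy(y_i, y_j, probs, lam, eps=1e-12):
    """-lam * y_i^T log p - (1 - lam) * y_j^T log p, where p is the softmax output on x_bar."""
    log_p = [math.log(max(p, eps)) for p in probs]
    ce_i = -sum(y * lp for y, lp in zip(y_i, log_p))
    ce_j = -sum(y * lp for y, lp in zip(y_j, log_p))
    return lam * ce_i + (1.0 - lam) * ce_j


def make_batch(labeled_ids, unlabeled_ids, batch_size, min_labeled, rng):
    """Oversampling sketch: reserve min_labeled slots, drawn with replacement from the
    (small) labeled pool, and fill the rest of the mini-batch with unlabeled samples."""
    labeled_part = rng.choices(labeled_ids, k=min_labeled)
    unlabeled_part = rng.sample(unlabeled_ids, batch_size - min_labeled)
    batch = labeled_part + unlabeled_part
    rng.shuffle(batch)
    return batch


y_i, y_j = [1.0, 0.0, 0.0], [0.1, 0.7, 0.2]  # a one-hot true label and a soft pseudo label
probs = [0.5, 0.3, 0.2]                       # hypothetical softmax output on x_bar
print(mixup_cross_entropy(y_i, y_j, probs, lam=0.6))
```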

As with consistency regularization, data augmentation and dropout are also important for pseudo labeling to work well.

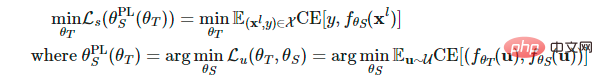

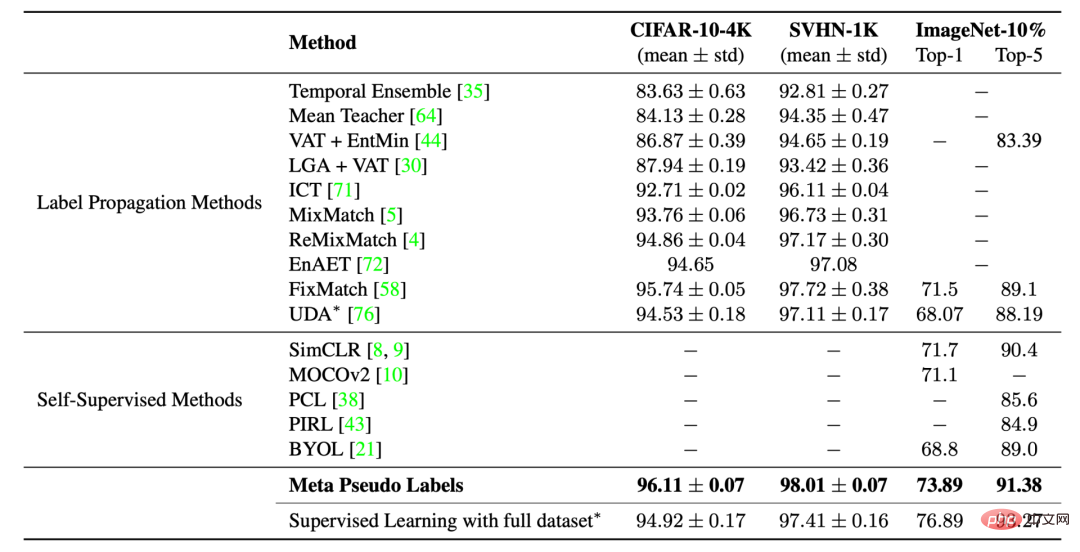

Hieu Pham et al. proposed Meta Pseudo Labels in the 2021 paper "Meta Pseudo Labels". It continually adjusts the Teacher model based on feedback about how well the Student model performs on the labeled dataset. The Teacher and Student are trained simultaneously: the Teacher learns to generate better pseudo labels, and the Student learns from those pseudo labels.

Let the weights of the Teacher and Student models be $\theta_T$ and $\theta_S$, respectively. The Student's loss on labeled samples is defined as a function of $\theta_T$, through $\theta^\text{PL}_S(\theta_T)$, and the Teacher is optimized to minimize this loss accordingly:

$$
\begin{aligned}
\min_{\theta_T} &\mathcal{L}_s(\theta^\text{PL}_S(\theta_T)) = \min_{\theta_T} \mathbb{E}_{(\mathbf{x}^l, y) \in \mathcal{X}} \text{CE}[y, f_{\theta^\text{PL}_S(\theta_T)}(\mathbf{x}^l)] \\
\text{where } &\theta^\text{PL}_S(\theta_T) = \arg\min_{\theta_S} \mathcal{L}_u(\theta_T, \theta_S) = \arg\min_{\theta_S} \mathbb{E}_{\mathbf{u} \sim \mathcal{U}} \text{CE}[f_{\theta_T}(\mathbf{u}), f_{\theta_S}(\mathbf{u})]
\end{aligned}
$$

However, optimizing the above objective is not easy. Borrowing the idea of MAML (Model-Agnostic Meta-Learning), it approximates the multi-step $\arg\min_{\theta_S}$ with a one-step gradient update of $\theta_S$, where $\eta_S$ is the Student's learning rate:

$$
\begin{aligned}
\theta^\text{PL}_S(\theta_T) &\approx \theta_S - \eta_S \cdot \nabla_{\theta_S} \mathcal{L}_u(\theta_T, \theta_S) \\
\min_{\theta_T} \mathcal{L}_s(\theta^\text{PL}_S(\theta_T)) &\approx \min_{\theta_T} \mathcal{L}_s\big(\theta_S - \eta_S \cdot \nabla_{\theta_S} \mathcal{L}_u(\theta_T, \theta_S)\big)
\end{aligned}
$$

With soft pseudo labels, the objective above is differentiable. With hard pseudo labels it is not, so a reinforcement learning method such as REINFORCE is needed.

The optimization alternates between the two models:

- Student update: given a batch of unlabeled samples $\{\mathbf{u}\}$, generate pseudo labels with $f_{\theta_T}(\mathbf{u})$ and optimize $\theta_S$ with one step of SGD: $\theta'_S = \theta_S - \eta_S \cdot \nabla_{\theta_S} \mathcal{L}_u(\theta_T, \theta_S)$.

- Teacher update: given a batch of labeled samples $\{(\mathbf{x}^l, y)\}$, reuse the Student's update to optimize $\theta_T$: $\theta'_T = \theta_T - \eta_T \cdot \nabla_{\theta_T} \mathcal{L}_s\big(\theta_S - \eta_S \cdot \nabla_{\theta_S} \mathcal{L}_u(\theta_T, \theta_S)\big)$. In addition, the UDA objective is applied to the Teacher model to incorporate consistency regularization.
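The alternating update can be illustrated with hypothetical one-parameter logistic models, $f_\theta(x) = \sigma(\theta x)$, for both Teacher and Student, and soft pseudo labels so that the Teacher objective is differentiable. To keep the sketch short, the gradient of the Teacher objective with respect to $\theta_T$ is taken by central finite differences instead of backpropagating through the Student update, and the UDA term on the Teacher is omitted.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def bce(target, p, eps=1e-12):
    p = min(max(p, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def student_step(theta_t, theta_s, unlabeled, lr_s):
    """theta_S' = theta_S - lr_S * grad_{theta_S} L_u(theta_T, theta_S), where L_u is the
    cross entropy against the Teacher's soft pseudo labels q = sigmoid(theta_T * u).
    For these one-parameter models, d BCE / d theta_S = (sigmoid(theta_S * u) - q) * u."""
    grad = sum((sigmoid(theta_s * u) - sigmoid(theta_t * u)) * u for u in unlabeled) / len(unlabeled)
    return theta_s - lr_s * grad


def teacher_objective(theta_t, theta_s, unlabeled, labeled, lr_s):
    """L_s(theta_S - lr_S * grad L_u(theta_T, theta_S)): the updated Student's labeled loss."""
    new_s = student_step(theta_t, theta_s, unlabeled, lr_s)
    return sum(bce(y, sigmoid(new_s * x)) for x, y in labeled) / len(labeled)


def mpl_step(theta_t, theta_s, unlabeled, labeled, lr_s=1.0, lr_t=0.5, h=1e-5):
    # Gradient of the Teacher objective by central finite differences
    # (a real implementation would backpropagate through the Student update instead).
    grad_t = (teacher_objective(theta_t + h, theta_s, unlabeled, labeled, lr_s)
              - teacher_objective(theta_t - h, theta_s, unlabeled, labeled, lr_s)) / (2.0 * h)
    new_s = student_step(theta_t, theta_s, unlabeled, lr_s)   # Student update
    new_t = theta_t - lr_t * grad_t                            # Teacher update
    return new_t, new_s


labeled = [(1.0, 1), (2.0, 1)]      # hypothetical labeled data: positive inputs are class 1
unlabeled = [1.0, -1.0, 2.0]
theta_t, theta_s = -1.0, 0.0        # a poor Teacher that labels positive inputs as class 0
print(mpl_step(theta_t, theta_s, unlabeled, labeled))
```

Starting from a Teacher whose pseudo labels contradict the labeled data, the feedback from the Student's labeled loss moves $\theta_T$ in the direction that makes its pseudo labels more useful.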

Figure 11: Performance comparison of Meta Pseudo Labels with other semi-supervised and self-supervised learning methods on image classification tasks. (Image source: Hieu Pham et al.'s 2021 paper "Meta Pseudo Labels")

6 Consistency Regularization and Pseudo Labeling

Consistency regularization and pseudo labeling can be combined and applied together in semi-supervised learning.

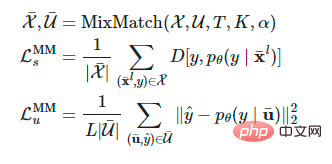

(1) MixMatch

MixMatch, proposed by Berthelot et al. in the 2019 paper "MixMatch: A Holistic Approach to Semi-Supervised Learning", is a holistic approach to semi-supervised learning. It makes use of unlabeled data by integrating the following techniques:

- Consistency regularization: encourage the model to output the same predictions for perturbed unlabeled samples.

- Entropy minimization: encourage the model to output confident predictions for unlabeled data.

- MixUp augmentation: encourage the model to behave linearly between samples.

Given a batch of labeled data $\mathcal{X}$ and unlabeled data $\mathcal{U}$, the augmented versions are obtained through $\text{MixMatch}(.)$, where $\bar{\mathcal{X}}$ and $\bar{\mathcal{U}}$ denote the augmented samples and the guessed labels for the unlabeled samples, respectively:

$$
\begin{aligned}
\bar{\mathcal{X}}, \bar{\mathcal{U}} &= \text{MixMatch}(\mathcal{X}, \mathcal{U}, T, K, \alpha) \\
\mathcal{L}^\text{MM}_s &= \frac{1}{\vert \bar{\mathcal{X}} \vert} \sum_{(\bar{\mathbf{x}}^l, y)\in \bar{\mathcal{X}}} D[y, p_\theta(y \mid \bar{\mathbf{x}}^l)] \\
\mathcal{L}^\text{MM}_u &= \frac{1}{L\vert \bar{\mathcal{U}} \vert} \sum_{(\bar{\mathbf{u}}, \hat{y})\in \bar{\mathcal{U}}} \| \hat{y} - p_\theta(y \mid \bar{\mathbf{u}}) \|^2_2
\end{aligned}
$$

where $T$ is the sharpening temperature, used to reduce the overlap of guessed labels; $K$ is the number of augmented versions generated per unlabeled sample; and $\alpha$ is the MixUp parameter. For each $\mathbf{u}$, MixMatch generates $K$ augmentations, $\bar{\mathbf{u}}^{(k)} = \text{Augment}(\mathbf{u})$ for $k = 1, \dots, K$, and guesses the pseudo label from their average prediction: $\hat{y} = \frac{1}{K} \sum_{k=1}^{K} p_\theta(y \mid \bar{\mathbf{u}}^{(k)})$.
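Label guessing and the unsupervised term can be sketched as follows. The predictions passed in stand for $p_\theta(y \mid \bar{\mathbf{u}}^{(k)})$ from a hypothetical model; sharpening raises each probability to the power $1/T$ and renormalizes, and the default `temperature=0.5` is only illustrative.

```python
def average_predictions(preds):
    """y_hat = (1/K) * sum_k p_theta(y | u_bar^(k)) over the K augmented copies of u."""
    k = len(preds)
    return [sum(p[c] for p in preds) / k for c in range(len(preds[0]))]


def sharpen(p, temperature):
    """Sharpen(p, T)_c = p_c^(1/T) / sum_j p_j^(1/T)."""
    powered = [v ** (1.0 / temperature) for v in p]
    total = sum(powered)
    return [v / total for v in powered]


def guess_label(preds, temperature=0.5):
    return sharpen(average_predictions(preds), temperature)


def mixmatch_unsup_loss(guessed, preds_on_mixed):
    """L_u^MM = 1 / (L * |U_bar|) * sum of squared L2 distances, L = number of classes."""
    num_classes = len(guessed[0])
    total = sum(sum((g - p) ** 2 for g, p in zip(gs, ps))
                for gs, ps in zip(guessed, preds_on_mixed))
    return total / (num_classes * len(guessed))


# K = 2 hypothetical predictions for two augmentations of one unlabeled sample
print(guess_label([[0.6, 0.4], [0.8, 0.2]]))
```

Averaging first and sharpening second means the guessed label reflects all K views but is still pushed toward a confident, low-entropy target.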

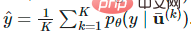

Figure 12: The "label guessing" process in MixMatch: averaging the predictions on K augmentations of an unlabeled sample, correcting the predicted marginal distribution, and finally sharpening the distribution. (Image source: Berthelot et al.'s 2019 paper "MixMatch: A Holistic Approach to Semi-Supervised Learning")

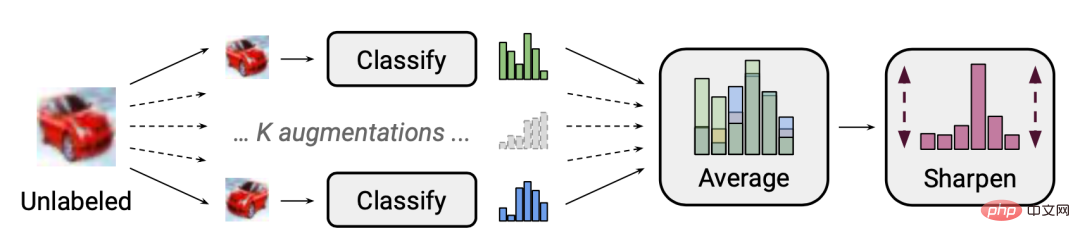

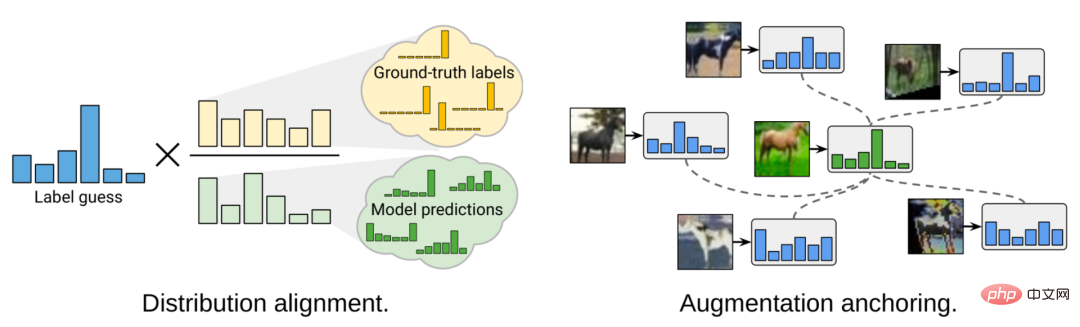

According to the paper's ablation study, MixUp augmentation on unlabeled data is especially important, removing temperature sharpening of the pseudo-label distribution hurts performance considerably, and averaging over multiple augmentations for label guessing is also essential.

In the 2020 paper "ReMixMatch: Semi-Supervised Learning with Distribution Alignment and Augmentation Anchoring", Berthelot et al. further proposed ReMixMatch, which improves MixMatch with the following two new mechanisms:

Figure 13: Illustration of the two improvements ReMixMatch makes over MixMatch. (Image source: Berthelot et al.'s 2020 paper "ReMixMatch: Semi-Supervised Learning with Distribution Alignment and Augmentation Anchoring")

1. Distribution alignment. This encourages the marginal distribution of the model's predictions on unlabeled data to be close to the marginal distribution of the ground-truth labels. Let $p(y)$ be the class distribution of the ground-truth labels and $\tilde{p}(y)$ be the moving average of the predicted class distribution on unlabeled data. The model's predictions on unlabeled samples, $p_\theta(y \mid \mathbf{u})$, are normalized to $\text{Normalize}\big(\frac{p_\theta(y \mid \mathbf{u})\, p(y)}{\tilde{p}(y)}\big)$ to match the true marginal distribution.

Note that entropy minimization is not a useful objective if the marginal distribution is not uniform. Moreover, the assumption that the class distributions on labeled and unlabeled data match is arguably too strong and may not hold in real-world settings.

2. Augmentation anchoring. Given an unlabeled sample, a weakly augmented "anchor" version is generated first, and then CTAugment (Control Theory Augment) is used to average K strongly augmented versions. CTAugment only samples augmentations that keep the model's predictions within the network's tolerance.
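Distribution alignment from item 1 can be sketched as below. The class prior and the running average are hypothetical values chosen so that the model over-predicts class 0 on unlabeled data; `momentum=0.999` for the running average is an illustrative default.

```python
def distribution_alignment(pred, true_prior, running_avg):
    """Normalize(p_theta(y | u) * p(y) / p_tilde(y))."""
    scaled = [p * prior / avg for p, prior, avg in zip(pred, true_prior, running_avg)]
    total = sum(scaled)
    return [v / total for v in scaled]


def update_running_avg(running_avg, batch_preds, momentum=0.999):
    """Moving average p_tilde(y) of the predicted class distribution on unlabeled data."""
    num_classes = len(running_avg)
    batch_mean = [sum(p[c] for p in batch_preds) / len(batch_preds) for c in range(num_classes)]
    return [momentum * r + (1.0 - momentum) * b for r, b in zip(running_avg, batch_mean)]


prior = [0.2, 0.4, 0.4]        # hypothetical class distribution of the labeled data
running = [0.5, 0.3, 0.2]      # the model currently over-predicts class 0 on unlabeled data
print(distribution_alignment([0.6, 0.3, 0.1], prior, running))
```

When the running average already matches the prior, the ratio is 1 for every class and the prediction is left unchanged; otherwise over-predicted classes are scaled down.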

The ReMixMatch loss consists of the following terms:

- A supervised loss with data augmentation and MixUp applied

- An unsupervised loss with data augmentation and MixUp applied, using pseudo-labels as targets

- A cross-entropy loss on a single strongly augmented unlabeled image, without MixUp

- A rotation loss, as used in self-supervised learning

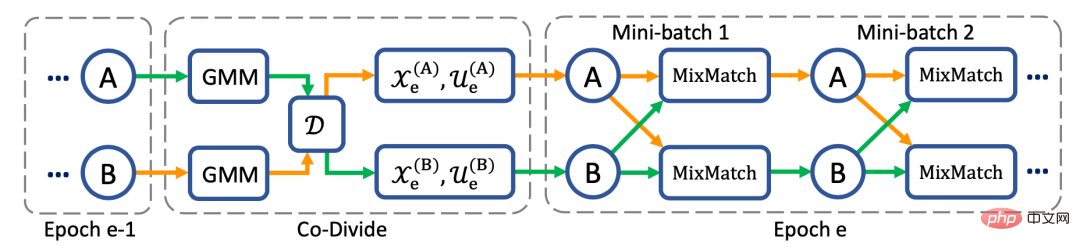

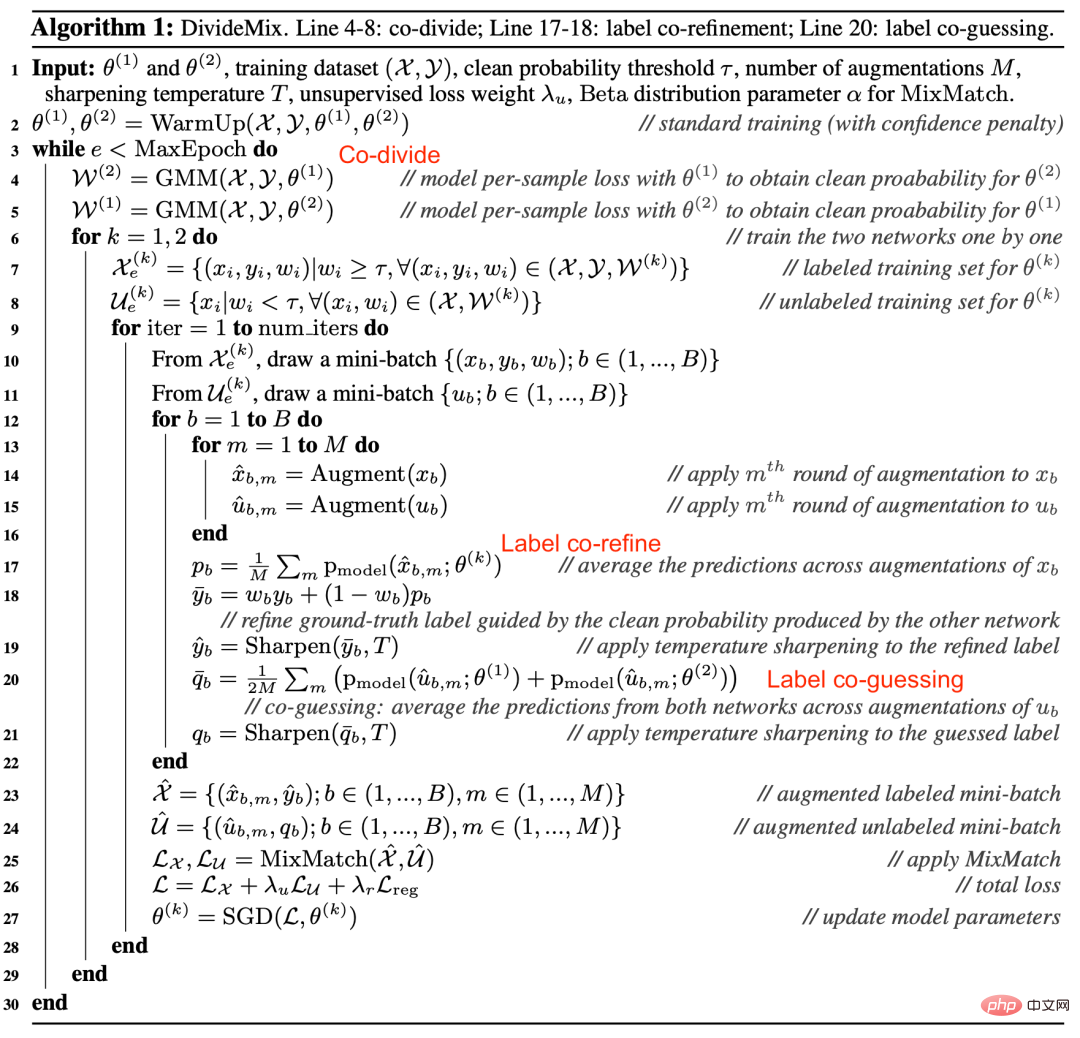

(2) DivideMix

Junnan Li et al. proposed DivideMix in their 2020 paper "DivideMix: Learning with Noisy Labels as Semi-supervised Learning". It combines semi-supervised learning with learning with noisy labels (LNL). DivideMix models the per-sample loss distribution with a Gaussian mixture model (GMM) and uses it to dynamically divide the training data into a labeled set of clean samples and an unlabeled set of noisy samples.

Following the idea of Arazo et al. in their 2019 paper "Unsupervised Label Noise Modeling and Loss Correction", DivideMix fits a two-component GMM on the per-sample cross-entropy loss $\ell_i$. Clean samples are expected to reach low loss sooner than noisy ones, so the component with the smaller mean corresponds to the cluster of clean labels, denoted here as $c$. If the GMM posterior probability $w_i = p_\text{GMM}(c \mid \ell_i)$ (i.e., the probability that the sample belongs to the clean set) is greater than the threshold $\tau$, the sample is treated as clean; otherwise it is treated as noisy.
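A minimal sketch of this division step, assuming per-sample losses have already been computed: a two-component 1-D GMM is fitted with a few EM iterations in plain NumPy (a stand-in for a library GMM), and the clean probability $w_i$ is read off as the posterior of the smaller-mean component. The min-max loss normalization, the iteration count, the variance floor `reg` and the threshold value are assumptions for illustration.

```python
import numpy as np


def _responsibilities(x, pi, mu, var):
    """Posterior probability of each mixture component for each 1-D point."""
    log_dens = (np.log(pi) - 0.5 * np.log(2.0 * np.pi * var)
                - (x[:, None] - mu) ** 2 / (2.0 * var))
    log_dens -= log_dens.max(axis=1, keepdims=True)  # avoid underflow before exp
    dens = np.exp(log_dens)
    return dens / dens.sum(axis=1, keepdims=True)


def clean_probability(losses, n_iter=20, reg=1e-4):
    """Fit a 2-component GMM to per-sample losses; return P(clean | loss) per sample."""
    x = np.asarray(losses, dtype=float)
    x = (x - x.min()) / (x.max() - x.min() + 1e-12)  # min-max normalize (assumption)
    mu = np.array([0.0, 1.0])                        # one component low, one high
    var = np.full(2, x.var() + reg)
    pi = np.array([0.5, 0.5])
    for _ in range(n_iter):
        resp = _responsibilities(x, pi, mu, var)     # E-step
        nk = resp.sum(axis=0) + 1e-12                # M-step: weights, means, variances
        pi = nk / len(x)
        mu = (resp * x[:, None]).sum(axis=0) / nk
        var = (resp * (x[:, None] - mu) ** 2).sum(axis=0) / nk + reg
    resp = _responsibilities(x, pi, mu, var)         # posteriors under final parameters
    clean = np.argmin(mu)                            # smaller-mean component = clean labels
    return resp[:, clean]


# Usage: four low-loss (clean) samples and four high-loss (likely noisy) samples.
losses = np.array([0.10, 0.12, 0.08, 0.11, 2.0, 2.2, 1.9, 2.1])
w = clean_probability(losses)
tau = 0.5                    # hypothetical threshold
labeled_mask = w > tau       # clean -> labeled set, the rest -> unlabeled set
```

Computing the E-step in log space keeps the posteriors well defined even when one component becomes very narrow.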

This data division step is called co-divide. To avoid confirmation bias, DivideMix simultaneously trains two diverged networks, where each network is trained on the dataset division produced by the other network, similar to how Double Q-Learning works.


Figure 14: DivideMix trains two networks independently to reduce confirmation bias. Both networks run co-divide, co-refinement and co-guessing. (Image source: Junnan Li et al.'s 2020 paper "DivideMix: Learning with Noisy Labels as Semi-supervised Learning")

Compared with MixMatch, DivideMix adds a co-divide stage for handling noisy samples and makes the following changes during training:

- Label co-refinement: for a labeled sample, it linearly combines the ground-truth label $y_i$ with the network's prediction $\hat{y}_i$, averaged over multiple augmentations of $x_i$, weighted by the clean probability $w_i$ produced by the other network: $\bar{y}_i = w_i y_i + (1 - w_i)\,\hat{y}_i$.

- Label co-guessing: it averages the predictions of both networks on unlabeled samples.
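Label co-refinement and co-guessing can be sketched as follows. The arrays stand in for softmax outputs over K augmentations, and the function names are hypothetical; the final temperature sharpening with T = 0.5 is the MixMatch-style step that DivideMix applies to its refined and guessed labels (treat the exact value as an assumption).

```python
import numpy as np


def sharpen(p, T=0.5):
    """Temperature sharpening: p^(1/T), renormalized over classes."""
    p = p ** (1.0 / T)
    return p / p.sum(axis=-1, keepdims=True)


def co_refine(y_onehot, preds_own, w, T=0.5):
    """Label co-refinement for samples in the labeled (clean) set.

    y_onehot:  (B, C) given labels y_i.
    preds_own: (K, B, C) this network's predictions on K augmentations of each x_i.
    w:         (B,) clean probabilities w_i from the *other* network's co-divide.
    """
    y_hat = preds_own.mean(axis=0)                          # average over augmentations
    refined = w[:, None] * y_onehot + (1.0 - w[:, None]) * y_hat
    return sharpen(refined, T)


def co_guess(preds_net1, preds_net2, T=0.5):
    """Label co-guessing: average both networks over K augmentations, then sharpen."""
    q = 0.5 * (preds_net1.mean(axis=0) + preds_net2.mean(axis=0))
    return sharpen(q, T)


# Usage with two classes, one sample and K = 2 augmentations.
y = np.array([[1.0, 0.0]])
preds = np.array([[[0.2, 0.8]], [[0.4, 0.6]]])
targets = co_refine(y, preds, w=np.array([0.5]))  # label/prediction mix, then sharpened
```

With $w_i = 1$ the given label is kept unchanged; as $w_i$ falls, the target leans more on the network's own averaged prediction.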

Figure 15: DivideMix algorithm. (Image source: Junnan Li et al.'s 2020 paper "DivideMix: Learning with Noisy Labels as Semi-supervised Learning")

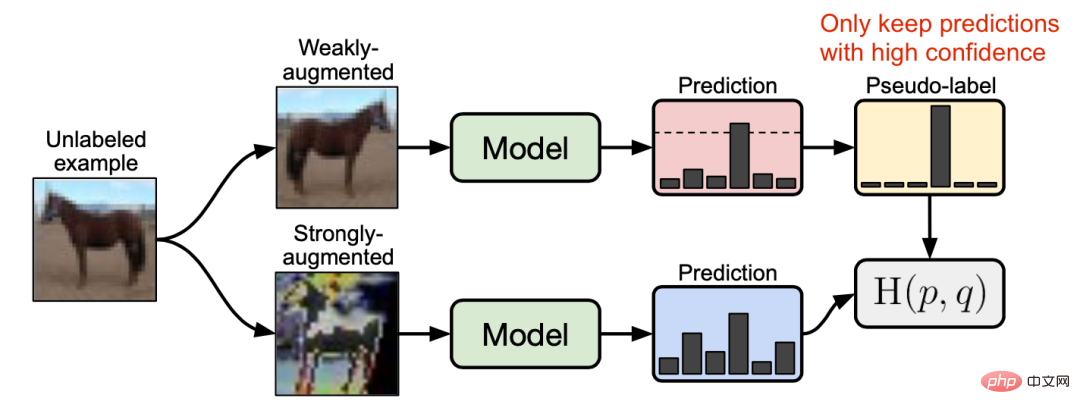

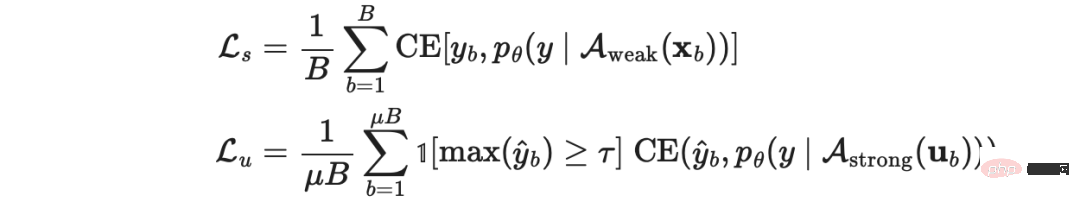

(3) FixMatch

The FixMatch method, proposed by Sohn et al. in the 2020 paper "FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence", applies weak augmentation to unlabeled samples to generate pseudo-labels and keeps only high-confidence predictions. Both weak augmentation and high-confidence filtering help produce high-quality, trustworthy pseudo-label targets. FixMatch then trains the model to predict these pseudo-labels given a strongly augmented version of the same sample.

Figure 16: Illustration of how FixMatch works. (Image source: Sohn et al.'s 2020 paper "FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence")

The supervised and unsupervised losses are

$$\mathcal{L}_s = \frac{1}{B} \sum_{b=1}^{B} \text{CE}\big[y_b,\, p_\theta(y \mid \mathcal{A}_\text{weak}(x_b))\big]$$

$$\mathcal{L}_u = \frac{1}{\mu B} \sum_{b=1}^{\mu B} \mathbb{1}\big[\max(q_b) \geq \tau\big]\, \text{CE}\big[\hat{y}_b,\, p_\theta(y \mid \mathcal{A}_\text{strong}(u_b))\big]$$

where $q_b = p_\theta(y \mid \mathcal{A}_\text{weak}(u_b))$ is the prediction on the weakly augmented unlabeled sample and $\hat{y}_b = \arg\max(q_b)$ is its pseudo-label; $\mu$ is a hyperparameter that determines the relative sizes of $\mathcal{X}$ and $\mathcal{U}$ in each batch. Weak augmentation $\mathcal{A}_\text{weak}$: standard flip-and-shift augmentation. Strong augmentation $\mathcal{A}_\text{strong}$: augmentation methods such as AutoAugment, Cutout, RandAugment and CTAugment.
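A NumPy sketch of FixMatch's unsupervised term: a hard pseudo-label is taken from the weakly augmented view, kept only if its confidence reaches τ, and used as the cross-entropy target for the strongly augmented view. Model outputs are given as raw logits, the function names are hypothetical, τ = 0.95 is assumed as a default, and in a real training loop the weak-view prediction would be treated as a constant (no gradient).

```python
import numpy as np


def log_softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def fixmatch_unsup_loss(logits_weak, logits_strong, tau=0.95):
    """Unsupervised FixMatch term over a batch of unlabeled samples.

    logits_weak:   (N, C) outputs on weakly augmented views (used only to build targets).
    logits_strong: (N, C) outputs on strongly augmented views of the same samples.
    Returns the masked mean cross-entropy and the confidence mask.
    """
    q = np.exp(log_softmax(logits_weak))             # weak-view class probabilities
    pseudo = q.argmax(axis=1)                        # hard pseudo-label
    mask = (q.max(axis=1) >= tau).astype(float)      # keep confident predictions only
    ce = -log_softmax(logits_strong)[np.arange(len(pseudo)), pseudo]
    # Divide by all N samples, not only the confident ones (the 1/(mu*B) factor).
    return (mask * ce).mean(), mask


logits_weak = np.array([[10.0, 0.0], [0.1, 0.0]])   # confident, then unsure
logits_strong = np.array([[0.0, 0.0], [5.0, -5.0]])
loss, mask = fixmatch_unsup_loss(logits_weak, logits_strong)
```

Here only the first sample passes the threshold, so the second sample contributes nothing even though its strong-view prediction disagrees sharply with its weak-view guess.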

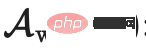

Figure 17: Performance of FixMatch and several other semi-supervised learning methods on image classification tasks. (Image source: Sohn et al.'s 2020 paper "FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence")

According to FixMatch's ablation studies:

- When the confidence threshold τ is used, sharpening the predicted distribution with a temperature parameter T has no significant impact.

- Cutout and CTAugment, as part of the strong augmentations, are necessary for good performance.

- When weak augmentation is replaced with strong augmentation for label guessing, the model diverges early in training. If weak augmentation is discarded entirely, the model overfits the guessed labels.

- Using weak instead of strong augmentation for pseudo-label prediction leads to unstable performance. Strong data augmentation is crucial for stable performance.

7 Combining with powerful pre-training

A common paradigm, especially in language tasks, is to pre-train a task-agnostic model on a large unsupervised data corpus via self-supervised learning and then fine-tune it on downstream tasks with a small labeled dataset. Research shows that combining semi-supervised learning with pre-training can bring additional gains.

Zoph et al.'s 2020 paper "Rethinking Pre-training and Self-training" studied how much better self-training can work than pre-training. Their experimental setup uses ImageNet for either pre-training or self-training to improve performance on COCO. Note that when ImageNet is used for self-training, its labels are discarded and only the ImageNet images are used as unlabeled data points. Kaiming He et al. showed in their 2018 paper "Rethinking ImageNet Pre-training" that ImageNet classification pre-training does not work well when the downstream task is very different, such as object detection.

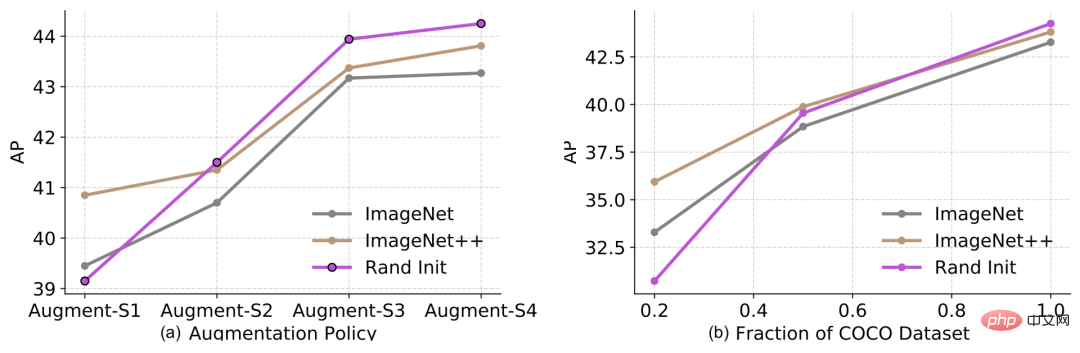

Figure 18: (a) Effect of data augmentation strength (from weak to strong) and (b) effect of labeled dataset size on object detection performance. In the legend, "Rand Init" denotes a model initialized with random weights; `ImageNet` is initialized with a pre-trained checkpoint at 84.5% top-1 accuracy on ImageNet; `ImageNet++` is initialized with a checkpoint at a higher top-1 accuracy of 86.9%. (Image source: Zoph et al.'s 2020 paper "Rethinking Pre-training and Self-training")

This experiment led to a series of interesting findings:

- The effectiveness of pre-training diminishes as more labeled samples become available for the downstream task. Pre-training helps in the low-data regime (20%), but is neutral or harmful in the high-data regime.

- Self-training helps in the high-data/strong-augmentation regime, even when pre-training hurts.

- Self-training can bring additive improvements on top of pre-training, even when using the same data source.

- Self-supervised pre-training (e.g., via SimCLR) hurts performance in the high-data regime, similar to supervised pre-training.

- Jointly training supervised and self-supervised objectives helps resolve the mismatch between pre-training and downstream tasks. Pre-training, joint training and self-training are all additive.

- Noisy labels or untargeted labels (i.e., pre-training labels that are not aligned with downstream task labels) are worse than targeted pseudo-labels.

- Self-training is computationally more expensive than fine-tuning a pre-trained model.

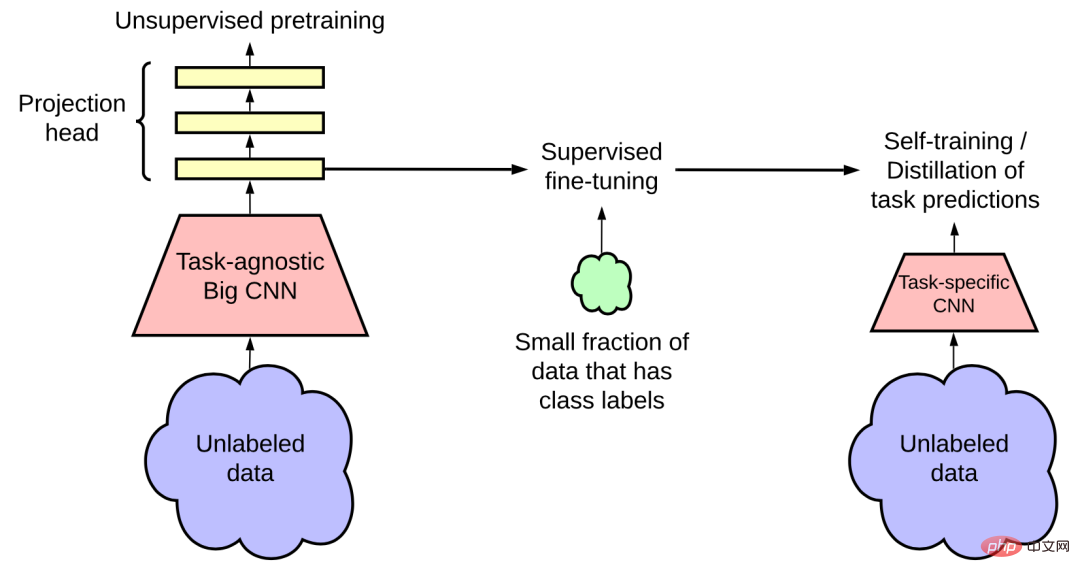

Ting Chen et al.'s 2020 paper "Big Self-Supervised Models are Strong Semi-Supervised Learners" proposed a three-step procedure that combines the benefits of self-supervised pre-training, supervised fine-tuning and self-training:

1. Pre-train a big model with unsupervised or self-supervised learning.

2. Fine-tune the model with supervision on a few labeled examples. It is important to use a big (deep and wide) network, because bigger models achieve better performance with fewer labeled examples.

3. Distill with unlabeled examples by adopting pseudo-labels in self-training.

a. Knowledge from a large model can be distilled into a small model, because the task-specific use does not require the extra capacity of the learned representation.

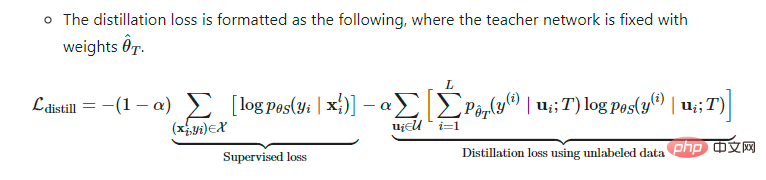

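b. The distillation loss is formulated as follows, where the teacher network is fixed with weights $\hat{\theta}_T$, the student weights $\theta_S$ are trained, and $T$ is the temperature:

$$\mathcal{L}_\text{distill} = -(1-\alpha) \sum_{(x_i^l, y_i) \in \mathcal{X}} \log p_{\theta_S}(y_i \mid x_i^l) \;-\; \alpha \sum_{u_i \in \mathcal{U}} \sum_{y} p_{\hat{\theta}_T}(y \mid u_i; T) \log p_{\theta_S}(y \mid u_i; T)$$

The first term is the supervised loss on labeled data; the second is the distillation loss on unlabeled data.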
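The distillation objective can be sketched in NumPy as a weighted sum of the student's supervised cross-entropy on labeled data and a soft cross-entropy against the fixed teacher's temperature-scaled predictions on unlabeled data. Losses are summed rather than averaged to mirror the sums in the loss; α, T and the function names are assumptions for illustration.

```python
import numpy as np


def log_softmax(logits, T=1.0):
    z = logits / T                          # temperature scaling
    z = z - z.max(axis=1, keepdims=True)    # numerical stability
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def distillation_loss(student_l, labels, student_u, teacher_u, alpha=0.5, T=1.0):
    """Weighted sum of supervised CE and teacher-to-student soft CE.

    student_l: (N_l, C) student logits on labeled samples; labels: (N_l,) int class ids.
    student_u: (N_u, C) student logits on unlabeled samples.
    teacher_u: (N_u, C) logits from the fixed teacher on the same unlabeled samples.
    """
    supervised = -log_softmax(student_l)[np.arange(len(labels)), labels].sum()
    p_teacher = np.exp(log_softmax(teacher_u, T))   # fixed soft targets, no gradient
    distill = -(p_teacher * log_softmax(student_u, T)).sum()
    return (1.0 - alpha) * supervised + alpha * distill


# When student and teacher both output uniform predictions, each term equals log(2).
zeros = np.zeros((1, 2))
loss = distillation_loss(zeros, np.array([0]), zeros, zeros)
```

Setting `alpha=1.0` reduces this to pure distillation on unlabeled data, which is the setting where a smaller student can be trained without any labeled examples in its own loss.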

Figure 19: The semi-supervised learning framework leverages an unlabeled data corpus through task-agnostic unsupervised pre-training (left) and task-specific self-training and distillation (right). (Image source: Ting Chen et al.'s 2020 paper "Big Self-Supervised Models are Strong Semi-Supervised Learners")

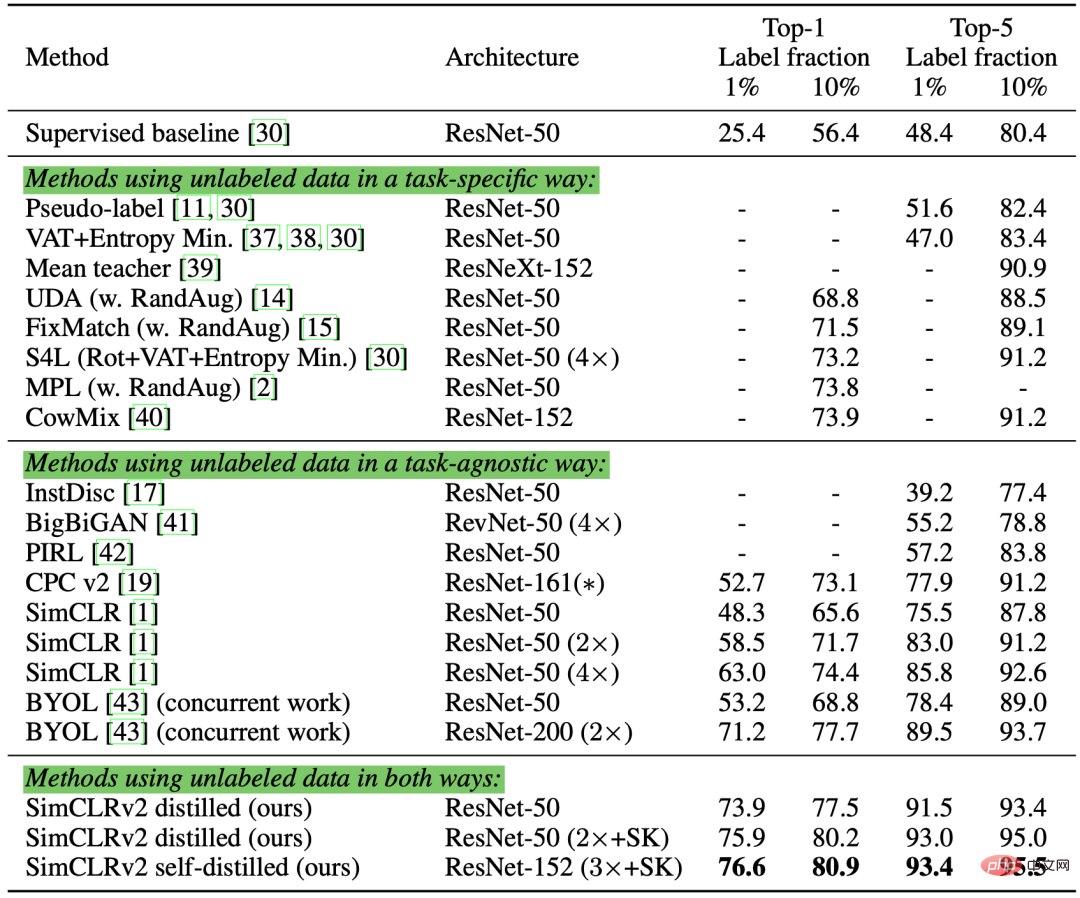

The authors ran experiments on the ImageNet classification task, using SimCLRv2, a directly improved version of SimCLR, for self-supervised pre-training. Their empirical observations confirmed several of the findings reported by Zoph et al. in 2020:

- Bigger models are more label-efficient;

- Bigger/deeper projection heads in SimCLR improve representation learning;

- Distillation using unlabeled data improves semi-supervised learning.

Figure 20: Performance comparison of SimCLRv2 semi-supervised distillation on ImageNet classification. (Image source: Ting Chen et al.'s 2020 paper "Big Self-Supervised Models are Strong Semi-Supervised Learners")

Summarizing recent semi-supervised learning methods, we find that many of them aim to reduce confirmation bias:

- Apply effective and diverse noise to samples through advanced data augmentation methods.

- MixUp is an effective augmentation method for images. It can also be applied to language tasks, yielding a small incremental improvement (Guo et al. 2019).

- Set a threshold and discard low-confidence pseudo-labels.

- Set a minimum number of labeled samples in each mini-batch.

- Sharpen the pseudo-label distribution to reduce class overlap.

To cite this article, please use:

@article{weng2021semi, title = "Learning with not Enough Data Part 1: Semi-Supervised Learning", author = "Weng, Lilian",

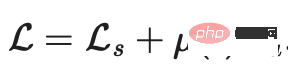

The overall loss is $\mathcal{L} = \mathcal{L}_s + \mu(t)\,\mathcal{L}_u$. The supervised loss $\mathcal{L}_s$ is easy to compute, since all of its samples are labeled; the focus is on how to design the unsupervised loss $\mathcal{L}_u$. The weighting term $\mu(t)$ is usually a ramp function, where $t$ is the training step, so that the weight of $\mathcal{L}_u$ increases as training proceeds.

Disclaimer: This article does not cover semi-supervised methods that focus on modifying the model architecture. For how to use generative models and graph-based methods in semi-supervised learning, refer to the survey "An Overview of Deep Semi-Supervised Learning".
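The ramp-up weight on the unsupervised loss (written $\mu(t)$ here) can be sketched as below. The sigmoid-shaped schedule $\exp(-5(1 - t/t_\text{ramp})^2)$ is one common choice (used, for example, in temporal ensembling by Laine & Aila, 2017) and is an assumption here, as are the function names; a linear ramp is shown for comparison.

```python
import math


def sigmoid_rampup(t, rampup_steps, mu_max=1.0):
    """mu(t) = mu_max * exp(-5 * (1 - t / rampup_steps)^2), held at mu_max afterwards."""
    if rampup_steps <= 0:
        return mu_max
    phase = 1.0 - min(t, rampup_steps) / rampup_steps
    return mu_max * math.exp(-5.0 * phase * phase)


def linear_rampup(t, rampup_steps, mu_max=1.0):
    """mu(t) grows linearly from 0 to mu_max over rampup_steps, then stays there."""
    if rampup_steps <= 0:
        return mu_max
    return mu_max * min(1.0, t / rampup_steps)


def total_loss(loss_s, loss_u, t, rampup_steps):
    """L = L_s + mu(t) * L_u with the sigmoid-shaped ramp-up."""
    return loss_s + sigmoid_rampup(t, rampup_steps) * loss_u


weights = [round(sigmoid_rampup(t, 100), 3) for t in (0, 50, 100, 200)]
# weights == [0.007, 0.287, 1.0, 1.0]
```

Starting with a near-zero weight keeps the unsupervised term from dominating while the model's early predictions, and hence its pseudo-targets, are still unreliable.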