In today's field of artificial intelligence, AI writing tools are emerging one after another, and both the technology and the products are changing rapidly.

If GPT-3, released by OpenAI two years ago, still fell a little short as a writer, the output of the recently released ChatGPT can fairly be described as "elegant prose, a full plot, and coherent logic": in short, a well-balanced whole.

Some say that once AI starts writing, there will be little left for human writers to do.

But for humans and AI alike, the higher the word count requirement, the harder a piece of writing becomes to keep under control.

Recently, Chinese AI research scientist Tian Yuandong and several collaborators released Re^3, a new language-model-based approach to generating long stories. The work was accepted at EMNLP 2022.

Paper link: https://arxiv.org/pdf/2210.06774.pdf

Tian Yuandong introduced the model on Zhihu:

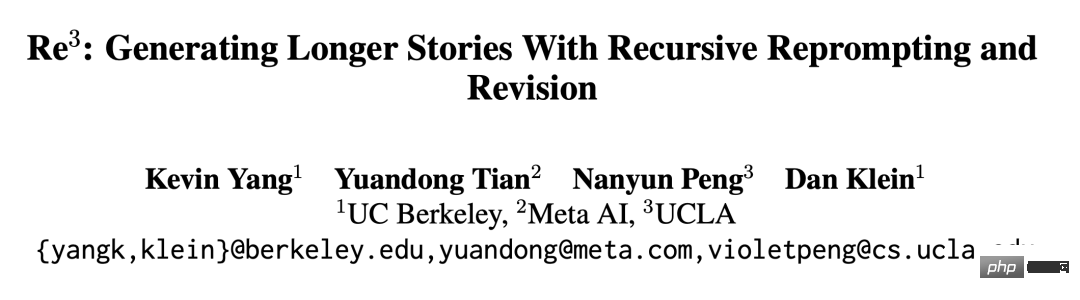

The idea behind Re^3 is extremely simple: by designing prompts, it generates highly consistent stories without any fine-tuning of the large model. We break away from the linear, word-by-word generation of a language model and use a hierarchical generation method instead. In the Plan stage, we first generate the story's characters, their attributes, and an outline. In the Draft stage, given the outline and the characters, we repeatedly generate concrete paragraphs. The Rewrite stage filters these paragraphs, selecting those that are highly related to the previous paragraph and discarding those that are not (this requires training a small model). Finally, the Edit stage corrects some obvious factual errors.

Re^3 generates longer stories through recursive reprompting and revision, an approach that is closer to the creative process of human writers. It breaks the human writing process down into four modules: Plan, Draft, Rewrite, and Edit.

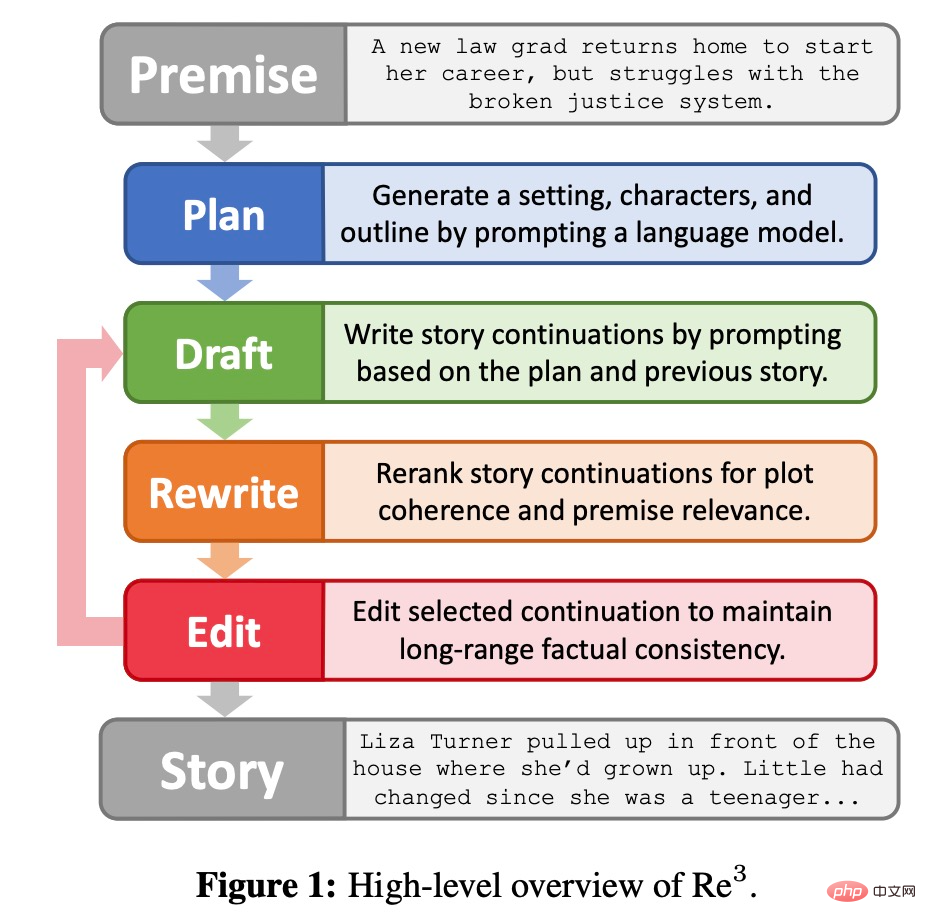
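To make the division of labor between the four modules concrete, here is a minimal Python sketch of the control flow. It is not the authors' code: the `lm` callable stands in for a GPT-3 call, and the function names, prompt strings, number of candidates, and the `score`/`fix` hooks are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical model interface: a prompt goes in, generated text comes out.
LM = Callable[[str], str]


@dataclass
class Plan:
    premise: str
    setting: str
    characters: List[str]
    outline: List[str]


def plan_stage(premise: str, lm: LM, n_chars: int = 2, n_points: int = 3) -> Plan:
    # Every plan component is itself produced by prompting the model.
    setting = lm(f"Premise: {premise}\nSetting:")
    characters = [lm(f"Premise: {premise}\nSetting: {setting}\nCharacter {i + 1}:")
                  for i in range(n_chars)]
    outline = [lm(f"Premise: {premise}\nSetting: {setting}\nOutline point {i + 1}:")
               for i in range(n_points)]
    return Plan(premise, setting, characters, outline)


def draft_stage(plan: Plan, point: str, story: List[str], lm: LM, k: int = 4) -> List[str]:
    # Recursive reprompting: the prompt is rebuilt from the plan and the latest
    # paragraph every time, and k candidate continuations are generated.
    last = story[-1] if story else ""
    prompt = (f"Setting: {plan.setting}\nCharacters: {'; '.join(plan.characters)}\n"
              f"Current outline point: {point}\nPrevious passage: {last}\n")
    return [lm(prompt + f"Continuation {j + 1}:") for j in range(k)]


def rewrite_stage(candidates: List[str], score: Callable[[str], float]) -> str:
    # Rerank the drafts and keep the highest-scoring one.
    return max(candidates, key=score)


def edit_stage(paragraph: str, fix: Callable[[str], str]) -> str:
    # Local, detail-level edit of the chosen paragraph.
    return fix(paragraph)


def re3_sketch(premise: str, lm: LM, score: Callable[[str], float],
               fix: Callable[[str], str] = lambda p: p) -> List[str]:
    plan = plan_stage(premise, lm)
    story: List[str] = []
    for point in plan.outline:
        best = rewrite_stage(draft_stage(plan, point, story, lm), score)
        story.append(edit_stage(best, fix))
    return story


if __name__ == "__main__":
    # Toy run: the "model" echoes the tail of its prompt and the score is length.
    story = re3_sketch("A knight loses her sword.", lm=lambda p: p[-15:], score=len)
    print(len(story))  # one paragraph per outline point: 3
```

The sketch only fixes the order of operations; in Re^3 each stage is far richer (structured prompts, trained rerankers, a detection-and-correction editor).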

Plan module

As shown in Figure 2 below, the Plan module expands the story premise into a setting, characters, and an outline. First, the setting is a simple one-sentence expansion of the premise, obtained with GPT3-Instruct-175B (Ouyang et al., 2022). Next, GPT3-Instruct-175B generates character names and then character descriptions conditioned on the premise and setting. Finally, the method prompts GPT3-Instruct-175B to write the story outline. Each component of the plan is itself generated by prompting, and the plan is reused again and again in later stages.

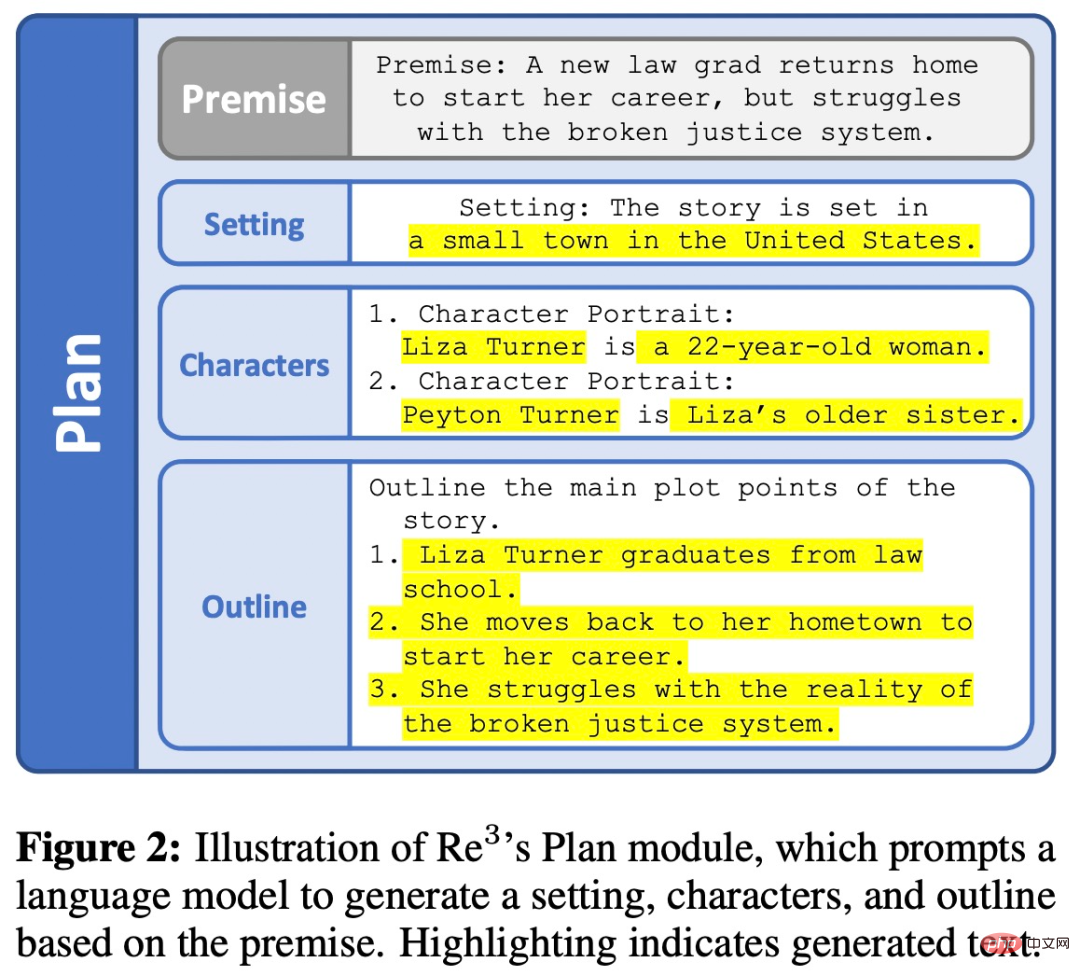
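The chained prompting described above can be sketched as follows. This is an illustration under assumptions, not the paper's prompts: the wording of each prompt, the name-then-description split per character, and the line-per-point outline parsing are all hypothetical. What it does show is that every step is conditioned on everything generated before it.

```python
from typing import Callable, Dict, List


def make_plan(premise: str, lm: Callable[[str], str], n_characters: int = 2) -> Dict[str, object]:
    """Expand a premise into a setting, characters, and an outline by chained prompts.

    Prompt wording is hypothetical; each step sees the output of earlier steps.
    """
    # Step 1: a one-sentence setting expanded from the premise.
    setting = lm(f"Premise: {premise}\n\nDescribe the setting in one sentence.\n\nSetting:").strip()
    context = f"Premise: {premise}\nSetting: {setting}\n"

    # Step 2: a name, then a description conditioned on premise, setting, and name.
    characters: Dict[str, str] = {}
    for i in range(n_characters):
        name = lm(context + f"\nName of character {i + 1}:").strip()
        characters[name] = lm(context + f"\nDescribe {name}.\n\n{name} is").strip()

    # Step 3: an outline conditioned on the premise, setting, and characters.
    cast = "\n".join(f"{name}: {desc}" for name, desc in characters.items())
    raw_outline = lm(context + f"Characters:\n{cast}\n\nOutline the story.\n\nOutline:\n")
    outline: List[str] = [line.strip() for line in raw_outline.splitlines() if line.strip()]

    return {"premise": premise, "setting": setting,
            "characters": characters, "outline": outline}
```

Because the returned plan is plain data, later stages can splice any of its fields back into their own prompts, which is how the plan gets reused throughout generation.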

Draft module

For each point in the outline produced by the Plan module, the Draft module generates several story paragraphs. Each paragraph is a fixed-length continuation generated from a structured prompt assembled by recursive reprompting. The Draft module is shown in Figure 3 below.

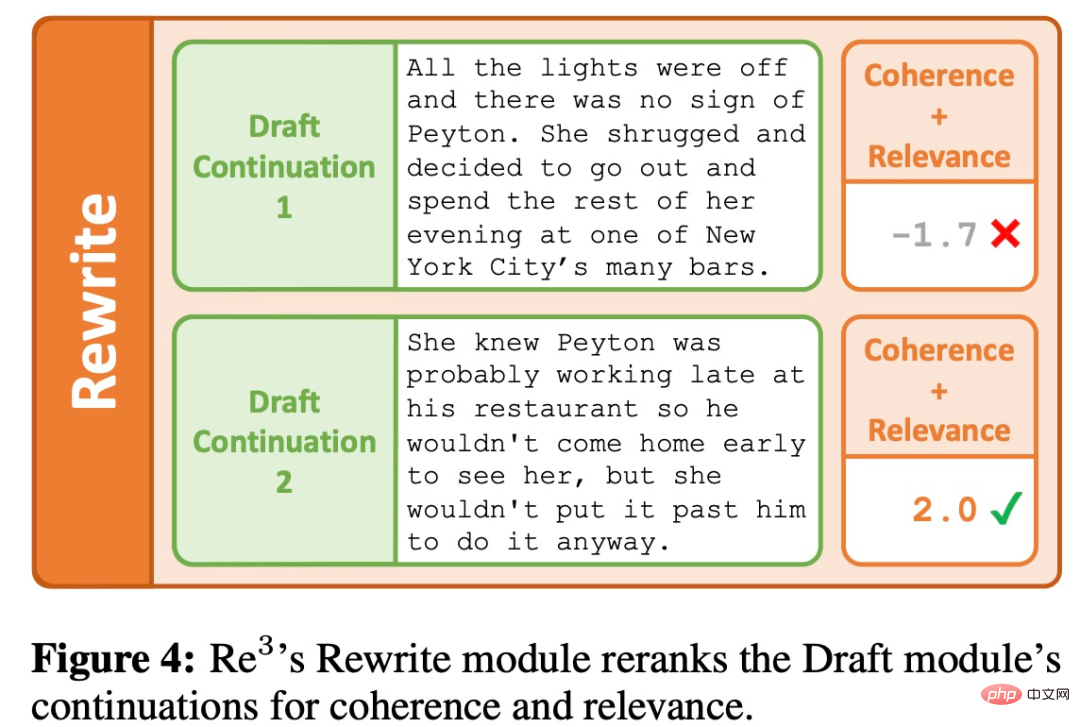
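A structured prompt of this kind might be assembled like the sketch below. The section layout, the 200-token budget, and approximating tokens by whitespace-separated words are all assumptions for illustration; the paper's actual prompt format may differ. Plan information is always kept, while the story text is left-truncated to fit the budget.

```python
from typing import List


def build_draft_prompt(premise: str, setting: str, characters: List[str],
                       outline_point: str, story_so_far: List[str],
                       max_tokens: int = 200) -> str:
    """Assemble a structured prompt for the next fixed-length continuation.

    Tokens are approximated by whitespace-split words (an assumption). The plan
    sections are always kept; the story text is left-truncated to fit the budget.
    """
    header = "\n".join([
        f"Premise: {premise}",
        f"Setting: {setting}",
        "Characters: " + "; ".join(characters),
        f"Current outline point: {outline_point}",
        "Story so far:",
    ])
    budget = max_tokens - len(header.split())
    words = " ".join(story_so_far).split()
    # Keep only the most recent words; a zero or negative budget keeps none.
    recent = words[-budget:] if budget > 0 else []
    return header + "\n" + " ".join(recent)
```

Recursion comes from the calling loop: after each continuation is generated (and selected), it is appended to `story_so_far`, and the prompt is rebuilt from scratch for the next paragraph.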

Rewrite module

A generator's first output is often of low quality, much like a person's first draft; producing a second draft may require rewriting based on feedback.

The Rewrite module simulates this rewriting process by reranking the Draft module's outputs according to their coherence with the previous paragraphs and their relevance to the current outline point, as shown in Figure 4 below.

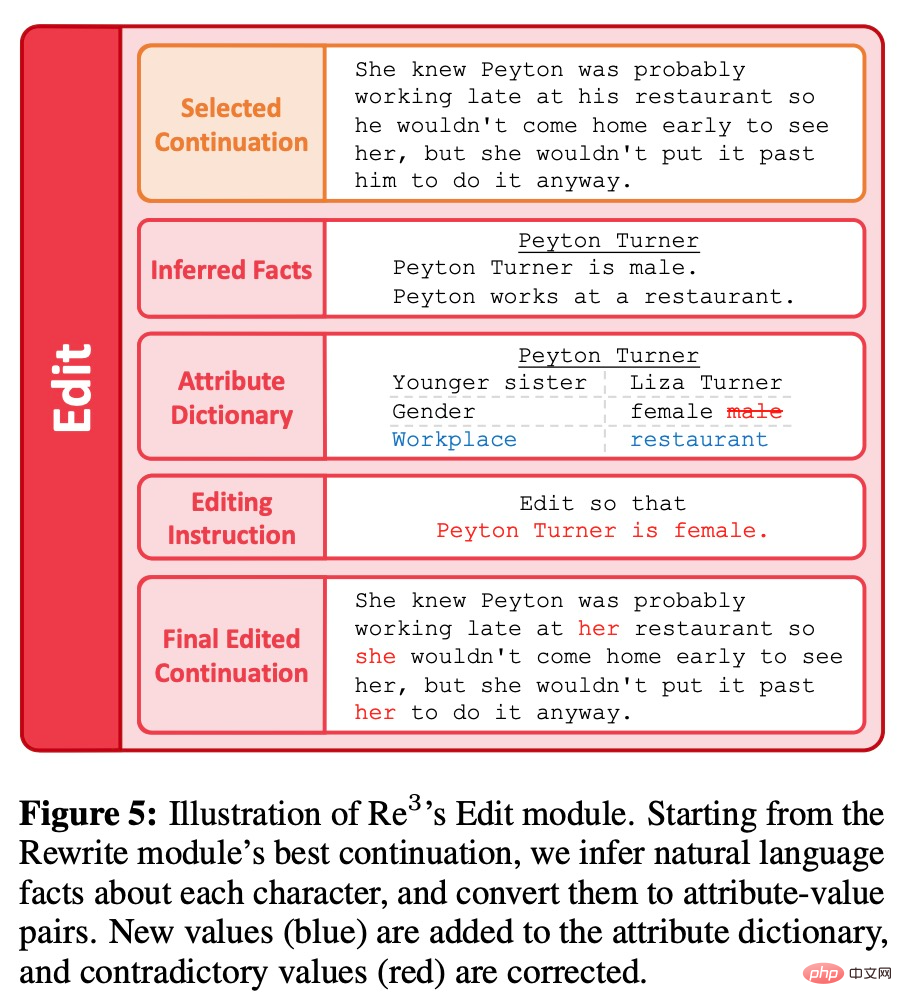
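The reranking step can be illustrated with a deliberately simple scorer. Re^3 trains small models for this; the bag-of-words cosine similarity and the equal 0.5/0.5 weighting below are swapped-in stand-ins, chosen only so the sketch runs without any trained model.

```python
import math
from collections import Counter
from typing import List


def cosine(a: str, b: str) -> float:
    """Bag-of-words cosine similarity; a stand-in for a trained scorer."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[w] * vb[w] for w in va)
    norm = (math.sqrt(sum(c * c for c in va.values()))
            * math.sqrt(sum(c * c for c in vb.values())))
    return dot / norm if norm else 0.0


def rerank(candidates: List[str], previous: str, outline_point: str,
           w_coherence: float = 0.5) -> List[str]:
    """Order draft candidates best-first by coherence plus relevance."""
    def score(candidate: str) -> float:
        coherence = cosine(candidate, previous)       # fits the preceding passage?
        relevance = cosine(candidate, outline_point)  # advances the current point?
        return w_coherence * coherence + (1 - w_coherence) * relevance
    return sorted(candidates, key=score, reverse=True)
```

Only the top-ranked candidate is kept; the rest are discarded, which is how the Rewrite module filters out drafts that drift away from the story.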

Edit module

Unlike substantive rewriting, the Edit module makes local edits to the paragraphs produced by the Plan, Draft, and Rewrite modules to further refine the generated content. Specifically, its goal is to eliminate factual inconsistencies across long sequences. When a person notices a small factual discontinuity while proofreading, they may simply fix the problematic detail rather than make major revisions or substantively rewrite the high-level plan. The Edit module mimics this part of human writing in two steps, detecting factual inconsistencies and correcting them, as shown in Figure 5 below.
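The detect-then-correct idea can be sketched with a per-character attribute store. Everything here is a simplifying assumption: facts arrive already extracted as `(character, attribute, value)` triples (the extraction step is not shown), a contradiction is an exact-string mismatch rather than a model-based judgment, and correction is a plain string substitution.

```python
from typing import Dict, List, Tuple

Fact = Tuple[str, str, str]       # (character, attribute, value)
KB = Dict[str, Dict[str, str]]    # character -> {attribute: value}
Conflict = Tuple[Fact, str]       # (offending fact, previously stored value)


def detect(kb: KB, facts: List[Fact]) -> List[Conflict]:
    """Flag facts whose value differs from what the story already established."""
    conflicts: List[Conflict] = []
    for char, attr, value in facts:
        known = kb.get(char, {}).get(attr)
        if known is not None and known != value:
            conflicts.append(((char, attr, value), known))
    return conflicts


def correct(paragraph: str, conflicts: List[Conflict]) -> str:
    """Naive local edit: replace each contradictory value with the stored one."""
    for (_, _, bad_value), good_value in conflicts:
        paragraph = paragraph.replace(bad_value, good_value)
    return paragraph


def update(kb: KB, facts: List[Fact]) -> None:
    """Record new facts; the first value seen for an attribute is kept."""
    for char, attr, value in facts:
        kb.setdefault(char, {}).setdefault(attr, value)
```

For example, if the store says Jaxon is a pilot and a new paragraph calls him a doctor, `detect` flags the mismatch and `correct` rewrites only that detail, leaving the rest of the paragraph untouched.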

In the evaluation, the researchers define the task as generating a story from a brief initial premise. Since a "story" is hard to define with rules, they impose no rule-based constraints on acceptable outputs and instead evaluate them with several human-annotated metrics. To obtain the initial premises, the researchers prompted GPT3-Instruct-175B to generate 100 different premises.

Baselines

Because prior methods focused on much shorter stories, a direct comparison with Re^3 is difficult. The researchers therefore used the following two baselines based on GPT3-175B:

1. ROLLING: generates 256 tokens at a time with GPT3-175B, using the premise and all previously generated story text as the prompt; if the prompt exceeds 768 tokens, it is left-truncated. The maximum context length of this "rolling window" is therefore 1024 tokens (768 + 256), the same maximum context length used in Re^3. After 3072 tokens have been generated, the researchers apply the same story-ending mechanism as Re^3.

2. ROLLING-FT: identical to ROLLING, except that GPT3-175B is first fine-tuned on several hundred passages from WritingPrompts stories that are at least 3000 tokens long.
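The ROLLING baseline's token arithmetic can be checked with a short sketch. The `generate` callable is a hypothetical model interface (prompt tokens in, new tokens out), and the story-ending mechanism is omitted. With the defaults, every call sees at most 768 prompt tokens plus 256 new ones, and if each call returns exactly 256 tokens, 3072 / 256 = 12 calls are made.

```python
from typing import Callable, List

# Hypothetical model interface: (prompt tokens, number of tokens wanted) -> new tokens.
Generate = Callable[[List[str], int], List[str]]


def rolling_generate(premise: List[str], generate: Generate, step: int = 256,
                     max_prompt: int = 768, total: int = 3072) -> List[str]:
    """ROLLING-style generation: fixed-size chunks with a left-truncated prompt.

    Context per call is at most max_prompt + step (768 + 256 = 1024 tokens).
    The story-ending step used in the evaluation is not modeled here.
    """
    story: List[str] = []
    while len(story) < total:
        # Left-truncate premise + story so far to the most recent max_prompt tokens.
        prompt = (premise + story)[-max_prompt:]
        chunk = generate(prompt, step)
        if not chunk:  # stop if the model returns nothing, to avoid looping forever
            break
        story.extend(chunk)
    return story[:total]
```

Note that once the story grows past 768 tokens, left truncation also drops the premise from the prompt, one reason a rolling window can drift away from the original plot.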

Metrics

The researchers used several evaluation metrics:

1. Interesting: interesting to the reader.

2. Coherent: the plot is coherent.

3. Relevant: faithful to the premise.

4. Humanlike: judged to have been written by a human.

In addition, the researchers tracked how often the generated stories exhibited the following writing problems:

1. Narration: a jarring change in narration or style.

2. Inconsistent: factually inconsistent or containing very odd details.

3. Confusing: confusing or hard to understand.

4. Repetitive: highly repetitive.

5. Disfluent: frequent grammatical errors.

The results

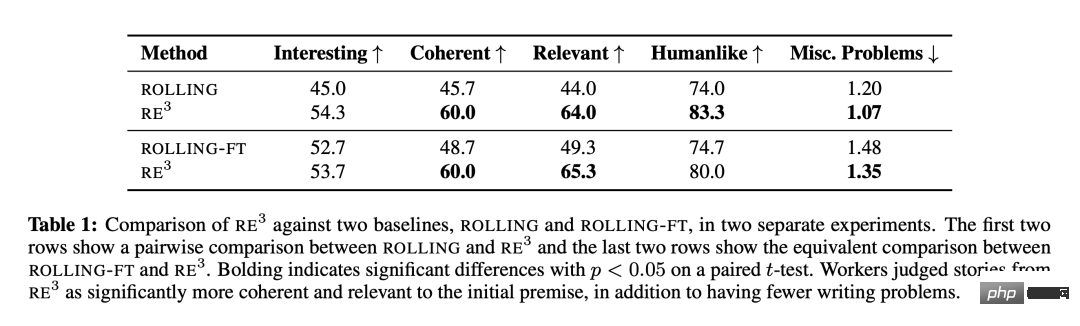

As shown in Table 1, Re^3 is highly effective at writing a longer story that follows the intended premise while maintaining a coherent overall plot, which validates both the researchers' design choices inspired by the human writing process and the recursive reprompting approach to generation. Compared with ROLLING and ROLLING-FT, Re^3 significantly improves both coherence and relevance. Annotators also marked Re^3's stories as having significantly fewer miscellaneous writing problems.

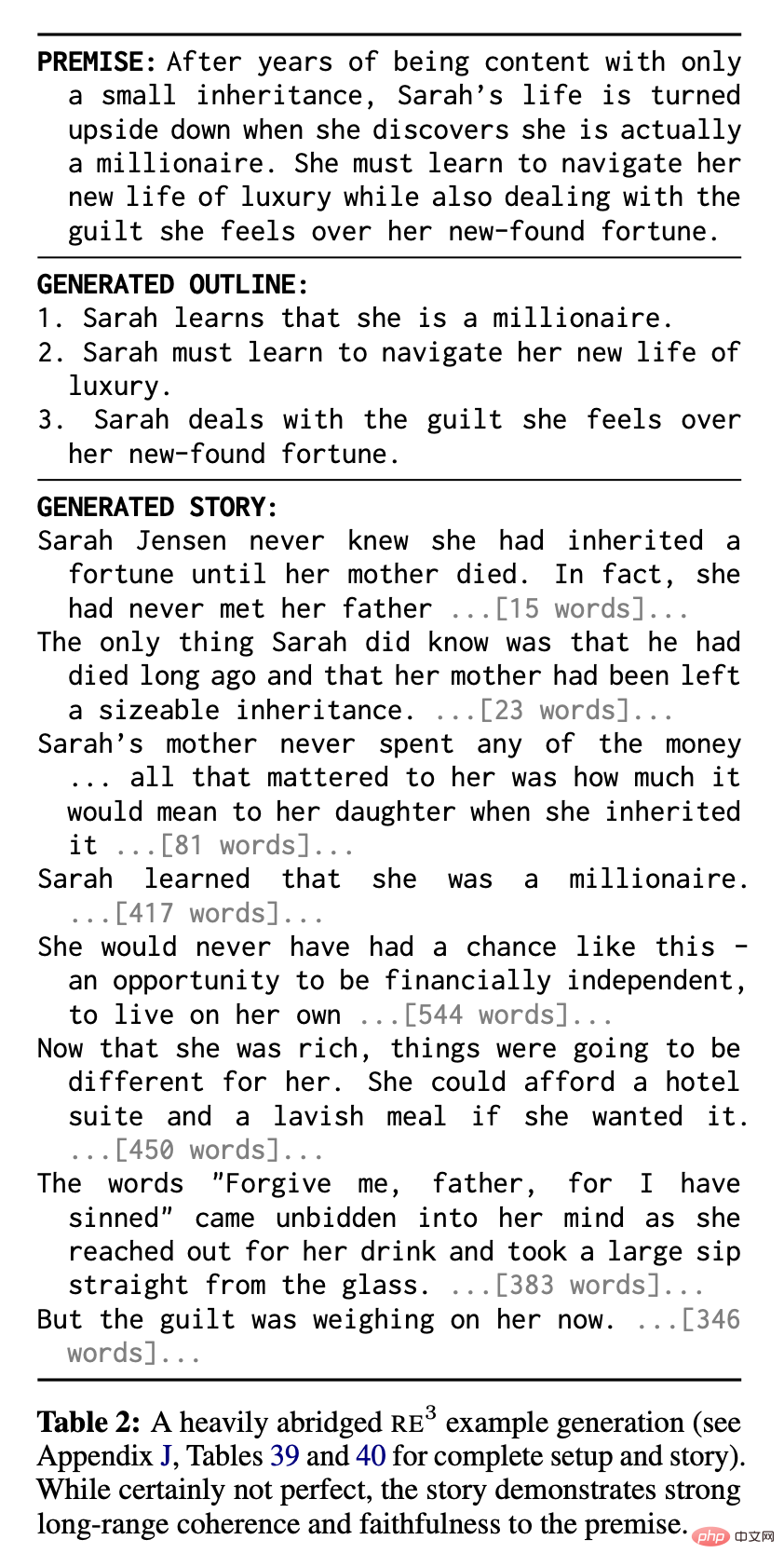

Re^3 also performs strongly in absolute terms: in the two comparisons, annotators judged 83.3% and 80.0% of Re^3's stories, respectively, to have been written by a human. Table 2 shows a heavily abridged example story from Re^3 that demonstrates strong coherence and relevance to the premise.

Even so, the researchers' qualitative observations show that Re^3 still has considerable room for improvement.

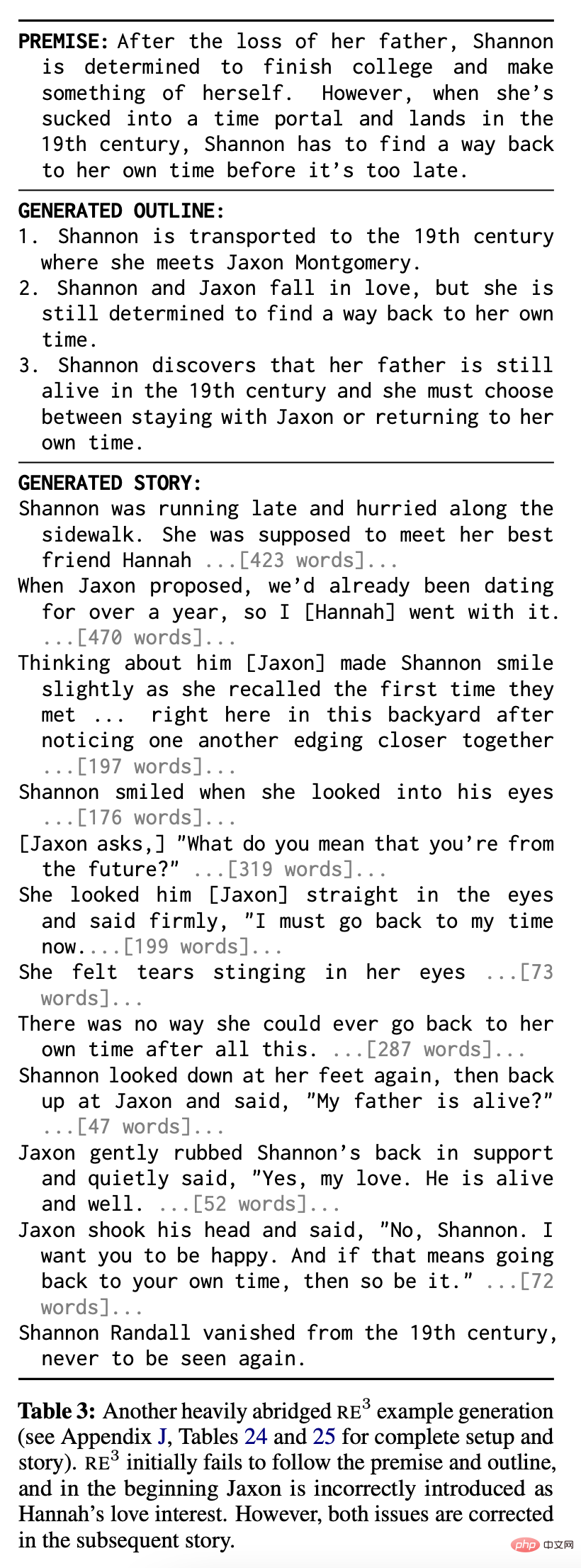

Table 3 illustrates two common problems. First, although Re^3's stories, unlike the baseline stories, almost always follow the premise to some extent, they may not capture every part of the premise and may fail to follow parts of the outline generated by the Plan module (e.g., the first part of the story and outline in Table 3). Second, because the Rewrite module, and especially the Edit module, sometimes fail, some confusing passages or contradictory statements remain: in Table 3, for example, the character Jaxon's identity is contradictory in places.

However, unlike the rolling-window baselines, Re^3's planning approach lets it "self-correct" and return to the original plot. The second half of the story in Table 3 illustrates this ability.

Ablation Experiment

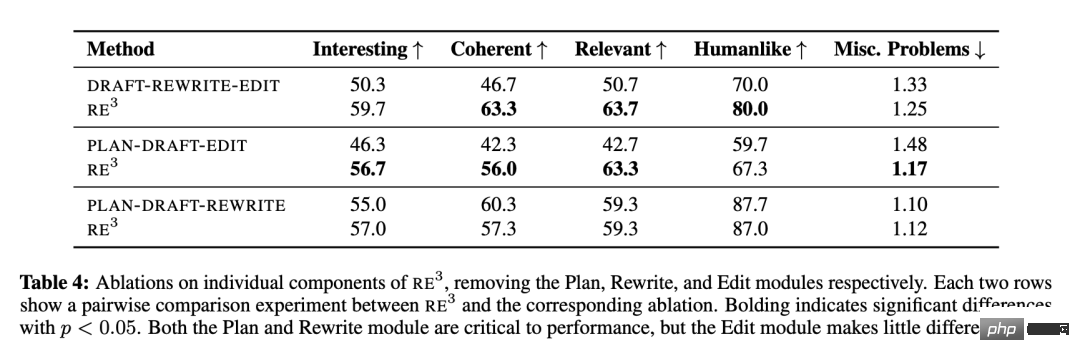

The researchers examined the relative contributions of Re^3's modules (Plan, Draft, Rewrite, and Edit) by ablating each module in turn. The exception is the Draft module, since it is unclear how the system would function without it.

Table 4 shows that the Plan and Rewrite modules, which imitate the human planning and rewriting processes, are crucial to overall plot coherence and premise relevance. However, the Edit module contributes very little to these metrics. The researchers also observed qualitatively that Re^3's final stories still contain many coherence problems that the Edit module does not fix but that a careful human editor could resolve.

Further analysis of the "Edit" module

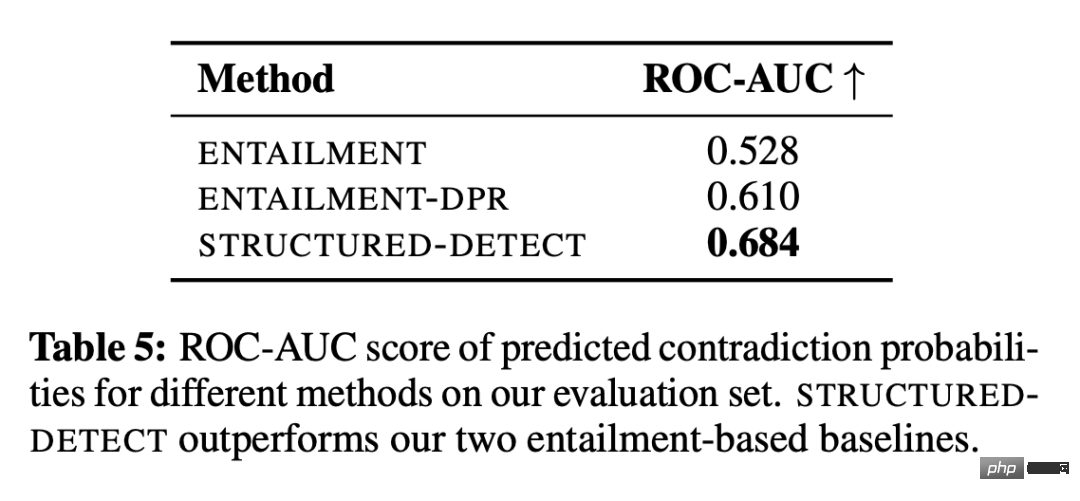

The researchers used a controlled setting to study whether the Edit module can at least detect character-based factual inconsistencies. The detection subsystem is called STRUCTURED-DETECT to avoid confusion with the Edit module as a whole.

As shown in Table 5, STRUCTURED-DETECT outperforms both baselines at detecting character-based inconsistencies, as measured by the standard ROC-AUC classification metric. The ENTAILMENT baseline's ROC-AUC is barely better than chance (0.5), highlighting a core challenge: detection systems must be overwhelmingly precise. In addition, STRUCTURED-DETECT is designed to scale to longer passages, so the researchers hypothesize that its advantage over the baselines would grow in evaluations with longer inputs.
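To see why 0.5 corresponds to chance, it helps to recall that ROC-AUC equals the probability that a randomly chosen positive example (here, a passage with an inconsistency) receives a higher detector score than a randomly chosen negative one, with ties counted as half. The sketch below computes it directly from that definition using an O(n²) pairwise loop for clarity; the example labels and scores are made up, not the paper's data.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC-AUC as P(score of random positive > score of random negative); ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Made-up detector scores: 1 marks a passage that really is inconsistent.
    print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
    print(roc_auc([0, 1, 0, 1], [0.5, 0.5, 0.5, 0.5]))   # 0.5: no better than chance
```

A detector that assigns every passage the same score, or ranks them at random, lands at 0.5, which is why an ENTAILMENT score barely above 0.5 means it barely separates consistent from inconsistent passages.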

Even in this simplified setting, the absolute performance of all systems remains low. Moreover, many of the full generated stories contain inconsistencies not tied to characters, such as a setting that conflicts with the current scene. Although the researchers did not formally analyze how well the GPT-3 Edit API corrects inconsistencies once they are detected, they observed that it can fix isolated details but struggles with larger changes.

Taken together, compounding errors from the detection and correction subsystems make it difficult for the current Edit module to improve factual consistency across thousands of words without also introducing unnecessary changes.
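A back-of-the-envelope model shows how these errors compound. The sketch assumes detection and correction succeed independently, so the end-to-end fix rate is the product of the two, and every false positive on a clean paragraph triggers an unnecessary edit. All rates passed in are illustrative placeholders, not figures reported by the researchers.

```python
from typing import NamedTuple


class EditOutcome(NamedTuple):
    fixed: float     # inconsistencies both detected and successfully corrected
    missed: float    # inconsistencies left in the story
    spurious: float  # unnecessary edits made to consistent paragraphs


def edit_outcomes(n_paragraphs: int, p_error: float, recall: float,
                  false_positive_rate: float, fix_rate: float) -> EditOutcome:
    """Expected outcomes of a detect-then-correct pipeline.

    Assumes detection and correction succeed independently, so the
    end-to-end fix rate is recall * fix_rate.
    """
    errors = n_paragraphs * p_error
    clean = n_paragraphs - errors
    fixed = errors * recall * fix_rate
    spurious = clean * false_positive_rate
    return EditOutcome(fixed, errors - fixed, spurious)
```

With illustrative values of 100 paragraphs, a 20% inconsistency rate, 50% detection recall, a 10% false-positive rate, and a 60% correction success rate, the model predicts about 6 fixed inconsistencies, 14 missed ones, and 8 unnecessary edits: more spurious changes than genuine fixes.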