ChatGPT has attracted wide attention since its release and is considered one of the most powerful language models currently available. Its text generation is regarded as comparable to human writing, and some machine learning experts have even moved to ban researchers from using ChatGPT to write papers.

Recently, however, a paper explicitly listed ChatGPT in its author column. What's going on?

The paper is "Performance of ChatGPT on USMLE: Potential for AI-Assisted Medical Education Using Large Language Models", posted on the medical preprint platform medRxiv. ChatGPT is listed as the third author of the paper.

As the title indicates, the paper studies the performance of ChatGPT on the United States Medical Licensing Examination (USMLE). The experimental results show that, without any special training or reinforcement, ChatGPT's scores on all exams reached or came close to the passing threshold. Moreover, the answers generated by ChatGPT showed a high degree of consistency and insight. The study suggests that large language models may be useful in medical education and may aid clinical decision-making.

Judging from the content of the research, ChatGPT looks more like a research subject than an author. As one Twitter user put it: "If human researchers contribute to the experimental results, then of course they are co-authors of the paper, but there is no such precedent for models and algorithms."

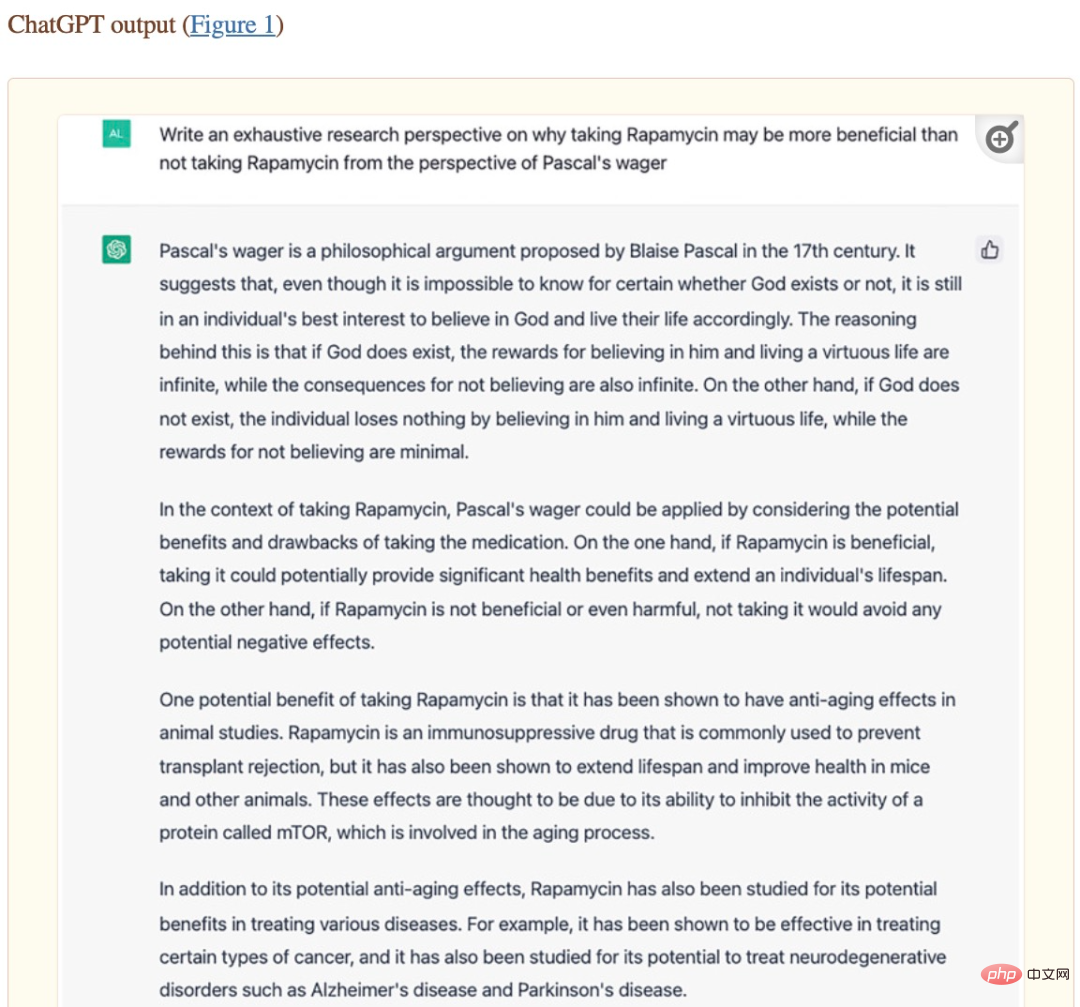

However, another user immediately countered this in the comments: an earlier paper titled "Rapamycin in the context of Pascal's Wager: generative pre-trained transformer perspective" not only credited ChatGPT as an author, but listed it as the first author.

This paper was published in the journal Oncoscience and is indexed by the National Institutes of Health (NIH). From the author contributions, we found that most of the content of this paper was indeed generated by ChatGPT: Alex Zhavoronkov, the second author, asked ChatGPT questions related to the research topic, ChatGPT generated a large number of opinions and explanations, and Alex Zhavoronkov then reviewed the generated content. In addition, ChatGPT assisted in formatting the paper.

Screenshot of the paper "Rapamycin in the context of Pascal's Wager: generative pre-trained transformer perspective".

When deciding on the author list, Alex Zhavoronkov contacted OpenAI co-founder and CEO Sam Altman for confirmation, and ultimately published the paper with ChatGPT as the first author. This suggests that powerful AI systems such as large language models may make meaningful contributions to academic work in the future, and may even be capable of becoming co-authors of papers.

However, allowing large language models to write academic papers also has drawbacks. For example, the top machine learning conference ICML noted that ChatGPT is trained on public data, which is often collected without consent, and that this raises a series of questions about who is responsible for the generated content.

In a recent study by Catherine Gao and colleagues at Northwestern University, the researchers selected research papers published in the Journal of the American Medical Association (JAMA), the New England Journal of Medicine (NEJM), the British Medical Journal (BMJ), The Lancet and Nature Medicine, used ChatGPT to generate abstracts for those papers, and then tested whether human reviewers could detect that the abstracts were generated by AI.

The experimental results show that reviewers correctly identified only 68% of the generated abstracts and 86% of the original abstracts. In other words, they mistook 32% of the generated abstracts for originals and 14% of the original abstracts for AI-generated ones. The reviewers said: "It is surprisingly difficult to distinguish between the two, and the generated abstracts are vague and feel formulaic."
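The two misidentification rates are simply the complements of the two correct-identification rates. A minimal Python sketch of that arithmetic, using only the percentages reported above (the variable names are illustrative and do not come from the study):

```python
# Percentages reported in the article for the human reviewers.
correct_generated_pct = 68  # generated abstracts correctly flagged as AI-written
correct_original_pct = 86   # original abstracts correctly recognized as human-written

# Each misidentification rate is the complement of the matching correct rate.
missed_generated_pct = 100 - correct_generated_pct
false_alarm_original_pct = 100 - correct_original_pct

print(f"Generated abstracts mistaken for originals: {missed_generated_pct}%")
print(f"Original abstracts mistaken for AI-generated: {false_alarm_original_pct}%")
```

Roughly one in three AI-generated abstracts slipped past the reviewers, which is the figure that matters most for the concern raised here.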

This result shows that it is difficult for human researchers to tell whether text was generated by AI or written by humans, which is not a good sign. The AI appears to have "cheated" its way past the reviewers.

So far, however, the correctness of content generated by language models cannot be fully guaranteed, and the error rate in some professional fields is very high. If human-written content cannot be distinguished from AI-generated content, people face a serious risk of being misled by AI.
