Translator|Bugatti

Reviser|Sun Shujuan

This article discusses seven AI-based tools that can help data scientists improve their work efficiency. These tools can automatically handle tasks such as data cleaning, feature selection, and model tuning, directly or indirectly making your work more efficient and more accurate and helping you make better decisions.

Many of these tools have a user-friendly UI and are very simple to use. At the same time, some tools allow data scientists to share and collaborate on projects with other members, which can help increase team productivity.

1. DataRobot

DataRobot is a web-based platform that can help you automatically build, deploy, and maintain machine learning models. It supports many features and techniques, such as deep learning, ensemble learning, and time series analysis. It uses advanced algorithms and techniques to help you build models quickly and accurately, and it also provides functions for maintaining and monitoring deployed models.

It also allows data scientists to share and collaborate on projects with others, making it easier for teams to work together on complex projects.

2. H2O.ai

H2O.ai is an open source platform that provides professional tools for data scientists. Its main feature is automated machine learning (AutoML), which automates the process of building and tuning machine learning models. It also includes algorithms such as gradient boosting and random forest.

Since it is an open source platform, data scientists can customize the source code to their needs so that it can be integrated into existing systems.

It uses a version control system to track all changes and modifications made to the code. H2O.ai also runs on cloud and edge devices, and it is supported by a large and active community of users and developers who contribute code to the platform.

3. BigPanda

BigPanda is used to automate incident management and anomaly detection in IT operations. Simply put, anomaly detection is the identification of patterns, events, or observations in a data set that deviate significantly from expected behavior. It is used to identify data points that may indicate unusual events or problems.

It uses various AI and ML techniques to analyze log data and identify potential issues. It can automatically resolve incidents and reduce the need for manual intervention. This makes problems easier to resolve and helps prevent the issue from happening again.
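To make the idea of anomaly detection concrete, here is a minimal sketch using a z-score rule on a list of numbers. It is not how BigPanda works internally; the function name `find_anomalies`, the threshold of 3.0, and the latency values are assumptions chosen only for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=3.0):
    """Return (index, value) pairs whose z-score exceeds the threshold.

    The z-score measures how many sample standard deviations a value
    lies from the mean of the whole series.
    """
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        # All values are identical, so nothing deviates.
        return []
    return [
        (i, v) for i, v in enumerate(values)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical response times in milliseconds, with one obvious spike.
latencies = [
    102, 98, 101, 97, 103, 99, 100, 101, 98, 102,
    100, 99, 950, 101, 97, 103, 100, 98, 102, 99,
]

anomalies = find_anomalies(latencies, threshold=3.0)
print(anomalies)
```

A simple rule like this is sensitive to the sample size and to the outliers themselves, which is one reason production systems tend to rely on more robust statistical or learned models.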

4. HuggingFace

HuggingFace is used for natural language processing (NLP) and provides pre-trained models, allowing data scientists to quickly implement NLP tasks. It supports many tasks, such as text classification, named entity recognition, question answering, and language translation. It also provides the ability to fine-tune pre-trained models for specific tasks and datasets, which helps improve performance.

Its pre-trained models have reached state-of-the-art performance on multiple benchmarks because they are trained on large amounts of data. This allows data scientists to build models quickly without having to train them from scratch, saving time and resources.

The platform also allows data scientists to fine-tune pre-trained models for specific tasks and datasets, which can improve model performance. This can be done through a simple API, so it is easy to use even for people with limited NLP experience.

5. CatBoost

The CatBoost library is used for gradient boosting tasks and is specifically designed to handle categorical data. It achieves state-of-the-art performance on many datasets, and parallel GPU computing accelerates the model training process.

CatBoost is highly robust to overfitting and to noise in the data, which can improve the generalization ability of the model. It uses a permutation-based algorithm called "Ordered Boosting" to reduce target leakage during training, and it can handle missing values in the input before making a prediction.

CatBoost provides feature importance, which can help data scientists understand the contribution of each feature to model predictions.

6. Optuna

Optuna is also an open source library, mainly used for hyperparameter tuning and optimization. It helps data scientists find the best parameters for their machine learning models. It uses a technique called "Bayesian optimization" that can automatically search for the optimal hyperparameters for a specific model.

Another of its main features is that it is easy to interact with various A variety of machine learning frameworks and library integrations,

is easy to interact with various A variety of machine learning frameworks and library integrations,

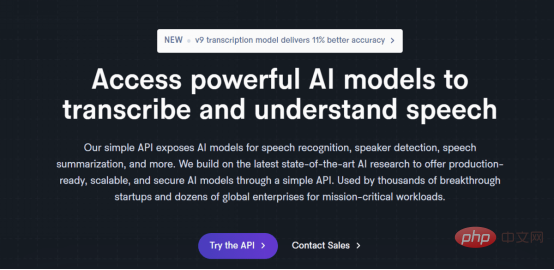

such asTensorFlow, PyTorch and scikit-learn. It can alsooptimizemultiple targets simultaneously,inperformanceandother metrics provides a good trade-off.7. AssemblyAIItis a platform that provides pre-trained models, designed to enable developers to integrate these Model

integrate into existing applications or services.

It also provides various APIs, such as a speech-to-text API and a natural language processing API. The speech-to-text API is used to obtain text from audio or video files with high accuracy. In addition, the natural language API can help with tasks such as sentiment analysis, entity recognition, and text summarization.

## Conclusion

Training a machine learning model includes data collection, model training, model evaluation, and model deployment. To perform all of these tasks, you need to understand the various tools and commands involved. These seven tools can help you train and deploy models with minimal effort.

Original title: Ranking of universities and colleges specializing in data science and big data technology
