Xiaohongshu's "grass planting" mechanism revealed for the first time: how large-scale deep learning system technology is applied


The new generation of information technology led by AI is driving a new wave of science and technology. As one of the fastest-growing mobile Internet platforms in China in recent years, Xiaohongshu has ridden this momentum and built a very large UGC community centered on image-and-text and short-video content. This distinctive, active community generates massive multi-modal data and user behavior feedback every day, giving rise to new problems that are both valuable and challenging.

Many exciting developments are taking place in large-scale deep learning systems. At the "Xiaohongshu REDtech Youth Technology Salon" event on October 15, Cage, Xiaohongshu's Vice President of Technology, gave a talk titled "Large-Scale Deep Learning System Technology and Its Application in Xiaohongshu" and lifted the veil on LarC.

Cage is Vice President of Technology at Xiaohongshu and a graduate of Shanghai Jiao Tong University. He previously served as Vice President of Technology at Huanju Times and as Chief Architect of Baidu Fengchao, where he was responsible for the CTR machine learning algorithms behind Baidu search advertising. He also served as the China technical lead of the IBM Deep Question Answering (DeepQA) project.

The following content is compiled from Cage's on-site talk.

1. Xiaohongshu business overview

Ordinary people sharing real life experiences

Xiaohongshu is a booming content community where a large number of people who understand life and love to share exchange their experiences and attitudes, and it continues to attract more and more users. Xiaohongshu now has 200 million monthly active users, more than 70% of whom were born in the 1990s. Half of the users come from first- and second-tier cities, and the other half from third- and fourth-tier cities. The user base is both diverse and young.

"Ordinary people" sharing their "real" "life experiences" is what most sets Xiaohongshu apart from other content platforms and communities. First, the sharers are "ordinary people". Second, "sincere sharing and friendly interaction" is the Xiaohongshu community convention, and "sincerity" is a key part of it. What people share in the community is closely tied to offline life and consumption, such as hidden-gem bookstores or how to dress, decorate, and cook. These are everyone's daily "life experiences".

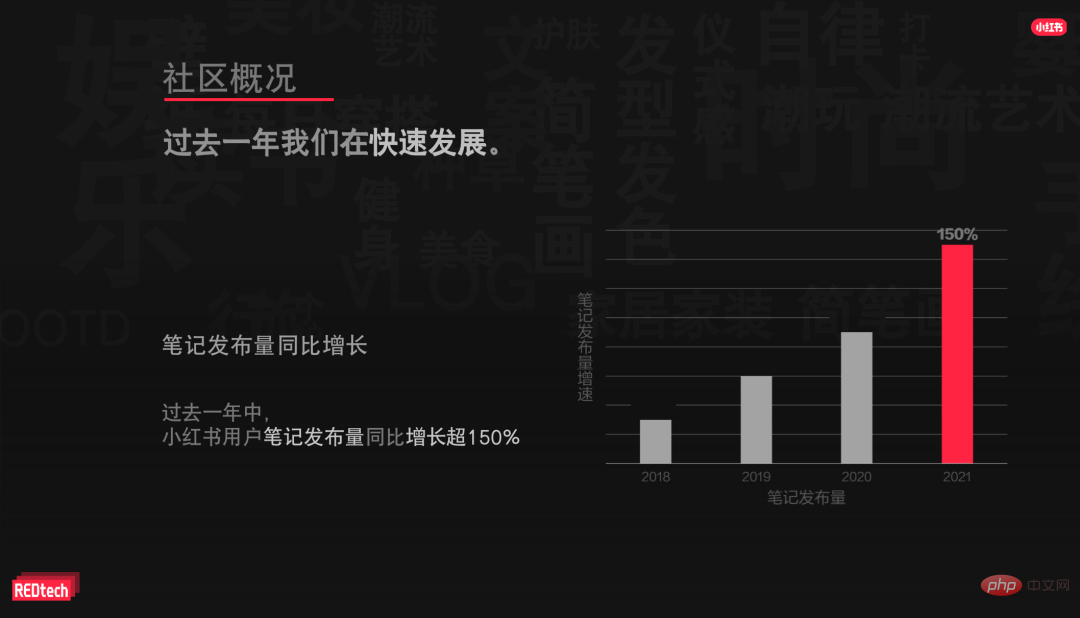

We can also use some numbers to measure how the Xiaohongshu community has developed over the years. The number of notes published grew rapidly every year from 2018 to 2021. From 2020 to 2021, the number of notes published by Xiaohongshu users increased by more than 150% year-on-year.

Three main businesses: community, commercialization, e-commerce

In such a rapidly developing content community, the three most important businesses are community, commercialization, and e-commerce. First, our content community is a lifestyle community covering all categories of life, made up mainly of UGC. Because this kind of "sincere sharing" is close to everyday life and consumption, users place a high degree of trust in community content. When people see a good lifestyle, product, or service, the desire for it is "seeded" in them, which is what "grass planting" means. We use this distinctive "grass planting" business model to drive both brand and performance conversions.

"After the grass is planted, can it be pulled?" While consuming content, people also want to buy the items they like naturally and conveniently. This is our efficient closed-loop consumption scenario, that is, the e-commerce business.

2. Xiaohongshu Technical Challenge

Multimodal technology is one of the most closely watched and fastest-developing directions in the entire AI field. The UGC community and content ecosystem contain a large volume of images, videos, text, and user behavior information, which together form a massive amount of high-quality multi-modal data and make the platform an excellent practical scenario. Users like good content when they see it, run searches, watch videos, and so on, and all of this constitutes a large amount of real user feedback. The number of feedback samples generated by user behavior now reaches tens of billions per day. The goal is to mine content that interests users, as well as good commercial content, from this massive multi-modal data. Many valuable and challenging problems follow from that goal.

How we address these problems

Real-time personalized recommendation system ("a thousand faces for a thousand people")

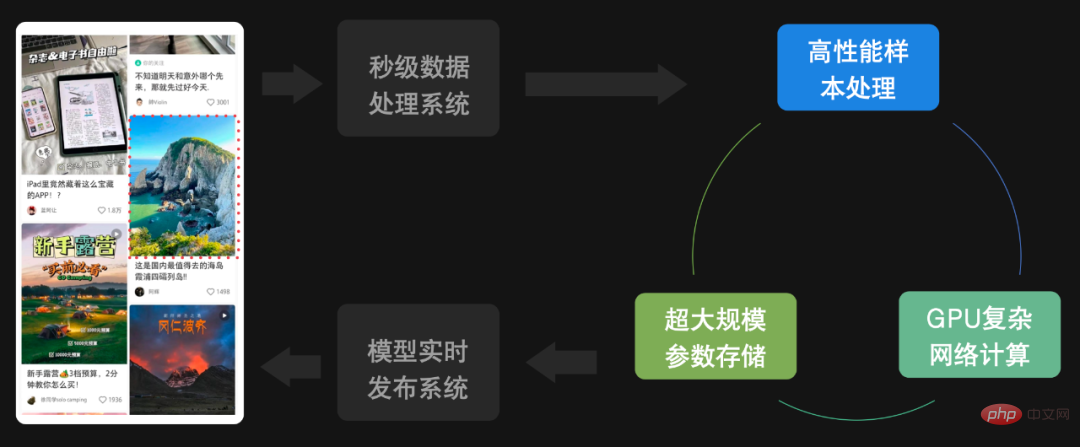

When you open Xiaohongshu, the first thing you see is the waterfall feed, or content feed. All of it is content selected for you by the recommendation system. According to statistics, Xiaohongshu generates tens of billions of user actions every day. Xiaohongshu's technical team uses a machine learning framework based on LarC to train models on this data, finds content that users are interested in based on the patterns in their behavior, and recommends it to them.

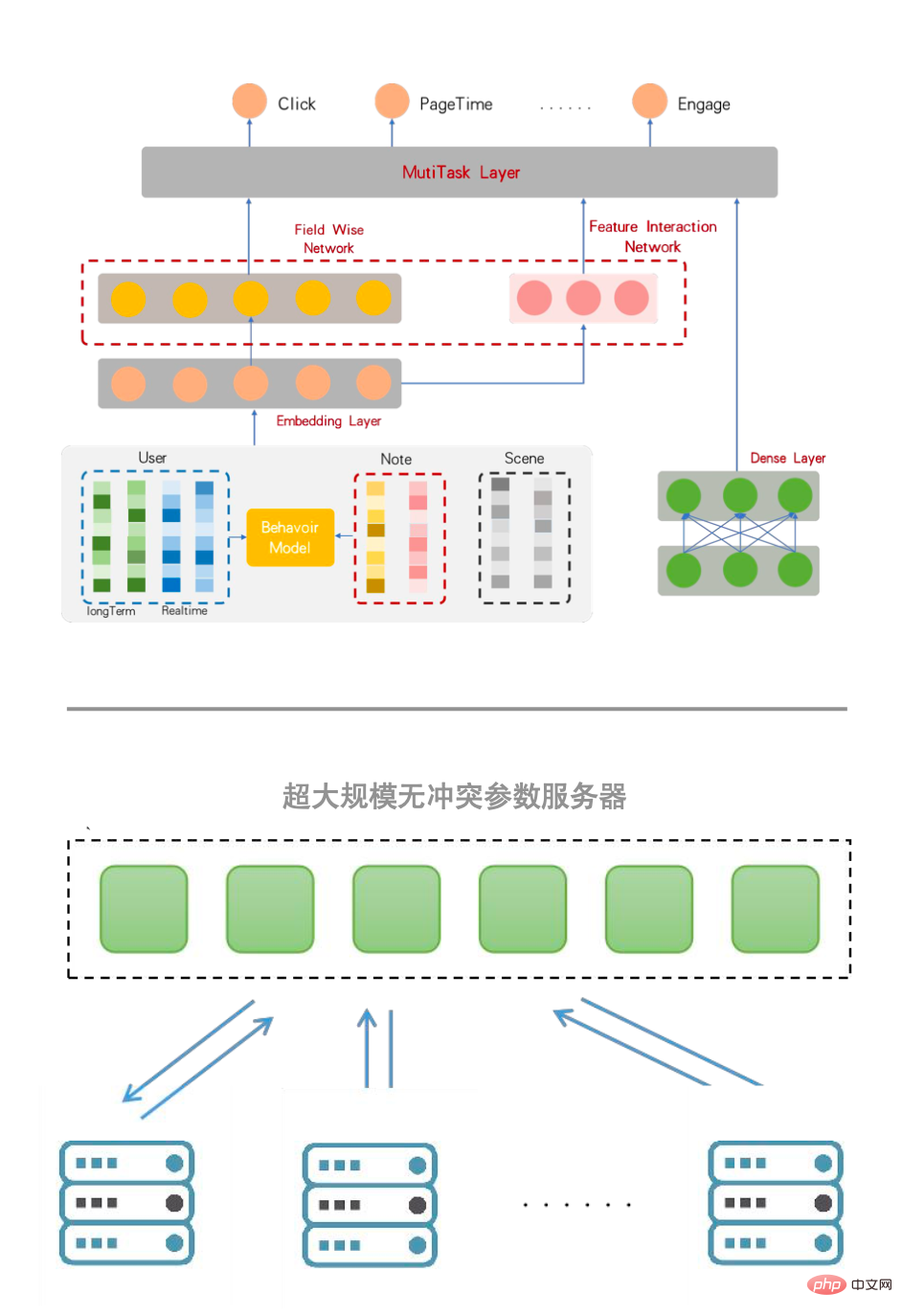

The accompanying figure shows the general structure of the Xiaohongshu recommendation model. It is a multi-task machine learning model that predicts a user's clicks, dwell time, likes, collects, and so on. Given the massive number of parameters that the Xiaohongshu platform produces, the model reads and updates these parameters through a very large-scale conflict-free parameter server.
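
As a rough illustration of the multi-task structure described here, the following is a minimal sketch, not Xiaohongshu's actual model. It assumes a shared hidden layer feeding one small head per task. The class name `MultiTaskModel`, the layer sizes, and the task names are all hypothetical, and dwell time is simplified to a binary "long dwell" target so that every head can output a probability.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class MultiTaskModel:
    """Hypothetical layout: one shared layer, one linear head per task."""

    def __init__(self, n_features, n_hidden, tasks, seed=0):
        rng = random.Random(seed)
        # Shared weights, used by every task
        self.shared = [[rng.uniform(-0.1, 0.1) for _ in range(n_features)]
                       for _ in range(n_hidden)]
        # One weight vector per task head
        self.heads = {task: [rng.uniform(-0.1, 0.1) for _ in range(n_hidden)]
                      for task in tasks}

    def predict(self, features):
        # Shared representation: ReLU(W x)
        hidden = [max(0.0, sum(w * x for w, x in zip(row, features)))
                  for row in self.shared]
        # Each head turns the shared representation into its own probability
        return {task: sigmoid(sum(w * h for w, h in zip(head, hidden)))
                for task, head in self.heads.items()}


model = MultiTaskModel(n_features=4, n_hidden=8,
                       tasks=["click", "like", "collect", "long_dwell"])
scores = model.predict([1.0, 0.0, 0.5, 0.2])
print(scores)
```

The point of sharing the lower layer is that all tasks learn from the same user and content representation, while each head specializes in one kind of feedback.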

Online training in the recommendation system works as follows. As users browse the feed, the recommendation system captures their views, clicks, likes, and other behaviors in real time. These behaviors are joined by a real-time processing engine based on Flink to produce training samples. Samples are sent to the model in real time for prediction. At the same time, the samples accumulated over a short window are used for a very short round of online training that updates the model parameters. The updated parameters are published online immediately to serve the next request. The entire process is kept within minutes.
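
The loop described above (join behaviors into labeled samples, predict, run a short update, serve with the new parameters) can be sketched in a few lines. This is a simplified stand-in under stated assumptions: a plain dictionary plays the role of the parameter server, a logistic regression plays the role of the model, and `join_samples`, `OnlineLogisticModel`, and the feature names are hypothetical. The real pipeline runs on Flink and LarC, which are not modeled here.

```python
import math
from collections import defaultdict


def join_samples(impressions, clicked_ids):
    """Attach a click label to each impression (the sample-joining step)."""
    clicked = set(clicked_ids)
    return [(imp["features"], 1.0 if imp["id"] in clicked else 0.0)
            for imp in impressions]


class OnlineLogisticModel:
    def __init__(self, learning_rate=0.1):
        # Stand-in for a parameter server: feature id -> weight
        self.weights = defaultdict(float)
        self.learning_rate = learning_rate

    def predict(self, feature_ids):
        z = sum(self.weights[f] for f in feature_ids)
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, feature_ids, label):
        # One stochastic gradient step on the log loss
        error = self.predict(feature_ids) - label
        for f in feature_ids:
            self.weights[f] -= self.learning_rate * error


impressions = [
    {"id": 1, "features": ["user:42", "topic:cooking"]},
    {"id": 2, "features": ["user:42", "topic:cars"]},
]
clicked_ids = [1]  # the user clicked only the cooking note

model = OnlineLogisticModel()
for _ in range(50):  # each pass mimics one short online training window
    for features, label in join_samples(impressions, clicked_ids):
        model.update(features, label)

p_cooking = model.predict(["user:42", "topic:cooking"])
p_cars = model.predict(["user:42", "topic:cars"])
print(p_cooking, p_cars)
```

After a few windows the model scores the cooking note above the cars note for this user, which is the effect that minute-level updates are meant to deliver.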

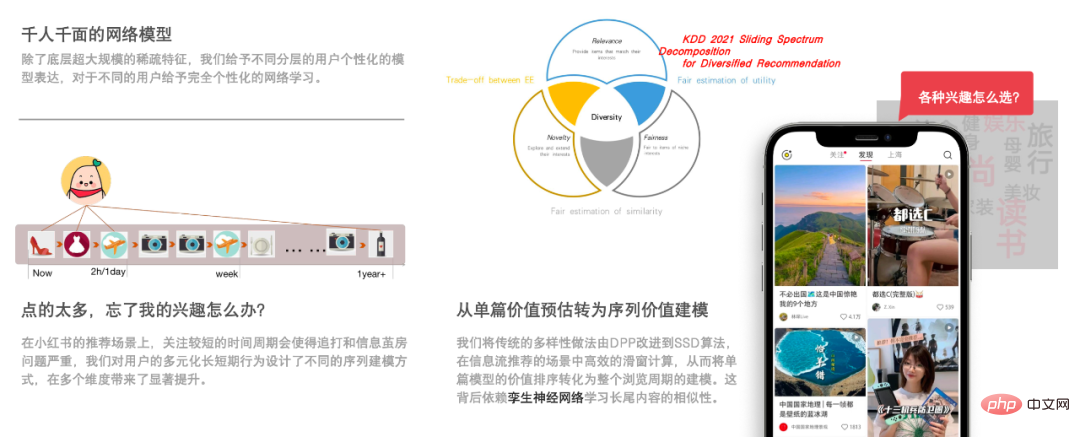

There is also a classic question in the industry. When people browse recommended content, they often wonder: why does the system keep pushing things I have already seen? What can be done when the content I see is not fresh enough?

In the recommendation scenario, focusing only on a short time window causes serious problems: the system chases the user's most recent interests and traps them in an information cocoon. To address this, Xiaohongshu's technical team designed different sequence modeling methods for users' diverse long-term and short-term behaviors, which brought significant improvements along multiple dimensions. For the diversity of recommended content, the team moved from the traditional DPP (determinantal point process) approach to the SSD (sliding spectrum decomposition) algorithm, which computes efficiently over a sliding window in the feed scenario. This shifts the problem from ranking the value of single items to modeling the entire browsing session. The approach relies on a twin (Siamese) neural network that learns the similarity of long-tail content.

We published this work at the KDD 2021 conference. It moves from estimating the value of a single item to estimating the value of a sequence, and from the diversity of single items to the diversity of multiple items. Behind it are the SSD algorithm and the content-similarity estimates produced by the twin neural network.
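
To make the sliding-window idea concrete, here is a deliberately simplified sketch. It is not the SSD algorithm from the KDD 2021 paper. Instead it uses a greedy, MMR-style penalty: each candidate's relevance is discounted by its maximum cosine similarity to the last few items already placed in the feed. The function names, the `window` and `penalty` parameters, and the toy embeddings (which in practice would come from a learned similarity model) are assumptions for illustration.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rerank_with_window(candidates, window=2, penalty=0.7):
    """candidates: list of (item_id, relevance, embedding) tuples."""
    remaining = list(candidates)
    ranked = []
    while remaining:
        recent = ranked[-window:]  # only the last `window` items matter

        def adjusted(candidate):
            max_sim = max((cosine(candidate[2], r[2]) for r in recent),
                          default=0.0)
            # Discount relevance for items similar to what was just shown
            return candidate[1] * (1.0 - penalty * max_sim)

        best = max(remaining, key=adjusted)
        remaining.remove(best)
        ranked.append(best)
    return [item_id for item_id, _, _ in ranked]


candidates = [
    ("cat_video_1", 0.90, [1.0, 0.0]),
    ("cat_video_2", 0.85, [1.0, 0.0]),
    ("recipe", 0.60, [0.0, 1.0]),
]
order = rerank_with_window(candidates)
print(order)  # ['cat_video_1', 'recipe', 'cat_video_2']
```

Ranking by relevance alone would show both cat videos back to back. Scoring the sequence inside a window pushes the second one down, which is the single-item-to-sequence shift the talk describes.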

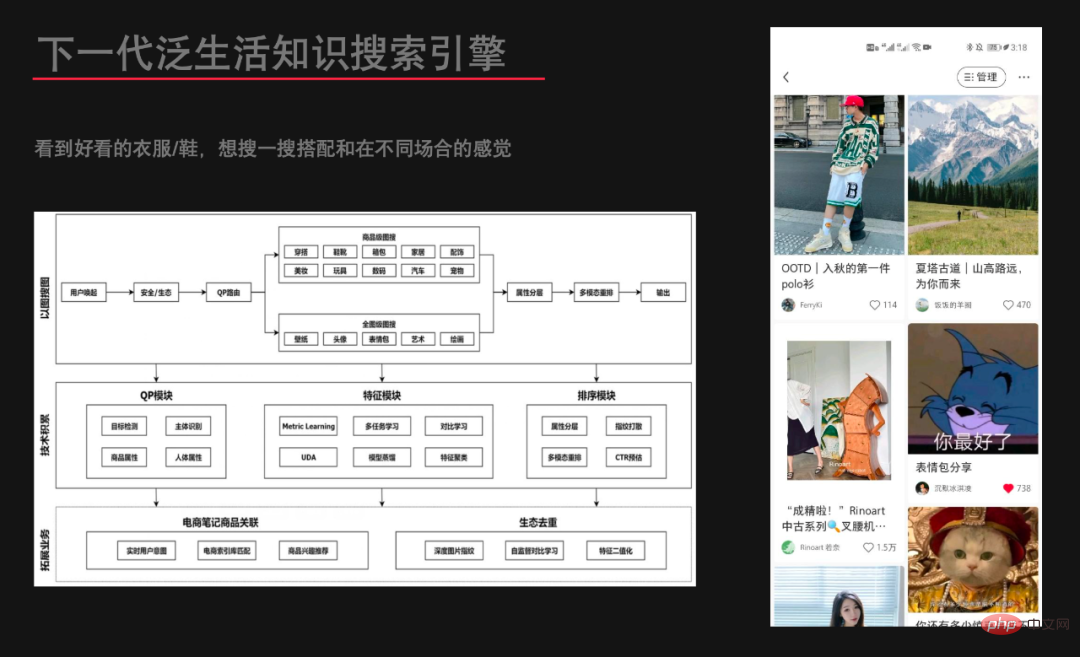

Multi-modal pan-life search engine

Because the Xiaohongshu community contains a large amount of information that is very useful in real life, many users treat Xiaohongshu as a search engine. This brings several challenges, such as searching across multiple data forms, a severe long-tail phenomenon, and intent understanding.

Existing image-and-text search engines can find pictures from text, but the method is relatively simple: pictures are usually tagged with text, and the text is then matched. The next-generation multi-modal pan-life search engine built by the Xiaohongshu team is based on a deep understanding of multi-modal content. It can search visual content using both images and text, and it can also personalize results based on each user's characteristics.

What is a pan-life knowledge search engine? For example, we see a good-looking piece of clothing or pair of shoes on Xiaohongshu and want to search for ways to match it and how it looks in different settings. This is a search for life knowledge, and it is also a multi-modal search.

This shows the multi-modal technical architecture planned by the Xiaohongshu technical team, in particular for image search. One of the most critical dependencies is the feature module, which relies on large-scale neural networks for representation learning. With a good representation of what a picture contains, whether clothes, shoes, or other products, the system can retrieve identical or similar products from a large volume of multi-modal content. This is one application of our large-scale neural networks in search.
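
Retrieval over learned representations can be illustrated with a small sketch. It assumes each note has already been mapped to an embedding vector by some neural network (not shown), and it ranks notes by cosine similarity to the query embedding. The note ids, the three-dimensional vectors, and the `search` function are hypothetical; a production system would use an approximate nearest-neighbor index rather than a full scan.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query_embedding, index, top_k=2):
    """Return the ids of the top_k notes most similar to the query."""
    scored = [(cosine_similarity(query_embedding, embedding), note_id)
              for note_id, embedding in index.items()]
    scored.sort(reverse=True)
    return [note_id for _, note_id in scored[:top_k]]


# Toy index: note id -> embedding (made-up values)
index = {
    "red_sneakers_note": [0.9, 0.1, 0.0],
    "white_sneakers_note": [0.8, 0.3, 0.1],
    "pasta_recipe_note": [0.0, 0.2, 0.9],
}

# Embedding of a query photo of sneakers (made-up values)
results = search([1.0, 0.1, 0.0], index)
print(results)  # ['red_sneakers_note', 'white_sneakers_note']
```

Because images and text can be embedded into the same space, the same lookup works whether the query is a photo or a phrase.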

AI generates more native commercial content

Compared with other platforms, Xiaohongshu's commercial content differs in one important way: it is native. Native here means that, judging by likes, comments, and other behaviors, users genuinely appreciate the content and may not feel that it is commercial at all. For merchants on the platform, however, the bar for producing such content is very high. Striking a good balance between a merchant's business intent and the value the content offers users is a critical problem.

To this end, the Xiaohongshu technical team uses generative technology based on large-scale neural networks to help merchants produce better titles and copy from their existing content. For example, merchants can choose to express multiple selling points, to highlight a target customer group, or to write in their favorite Xiaohongshu style, and the machine will suggest titles automatically. After merchants adopted machine-generated titles, business results, clicks, and dwell time all improved substantially. Users also like this kind of content very much, so it strikes a good balance between business value and user value.

This builds on large-scale pre-trained models, including industry-leading architectures such as T5, BERT, and GPT, all trained at Xiaohongshu on multi-modal data. Some of the pre-trained models are used to understand the content of notes, and others guide the generative model in producing titles. This is how the related technologies are applied on the business side.

Large-scale machine learning platform

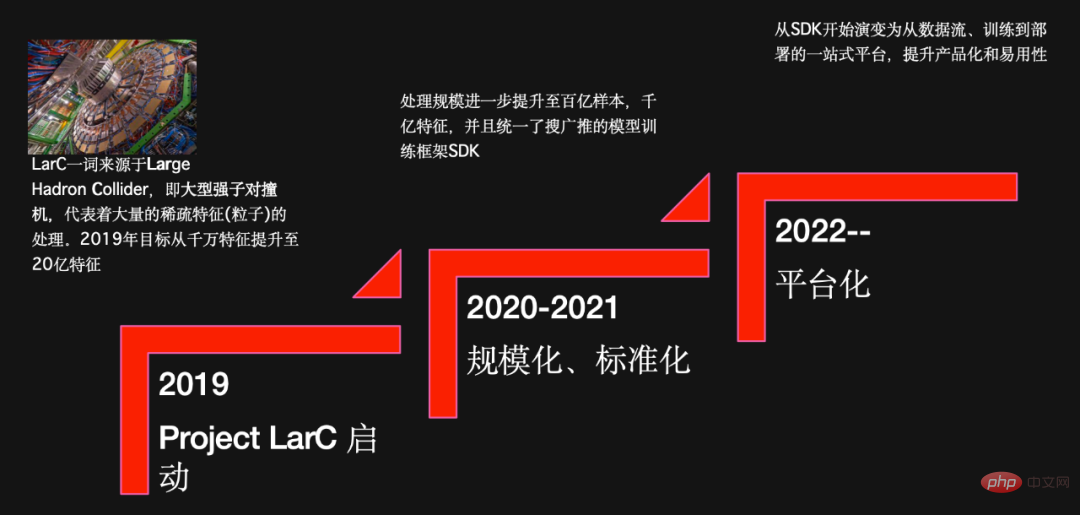

All of the machine learning work described above is built on the LarC machine learning platform, developed in-house by the Xiaohongshu technical team. It was launched in 2019, and during 2020 and 2021 the related machine learning frameworks and platform were rolled out across search, recommendation, advertising, and other areas. In 2022, LarC is being turned into a full platform.

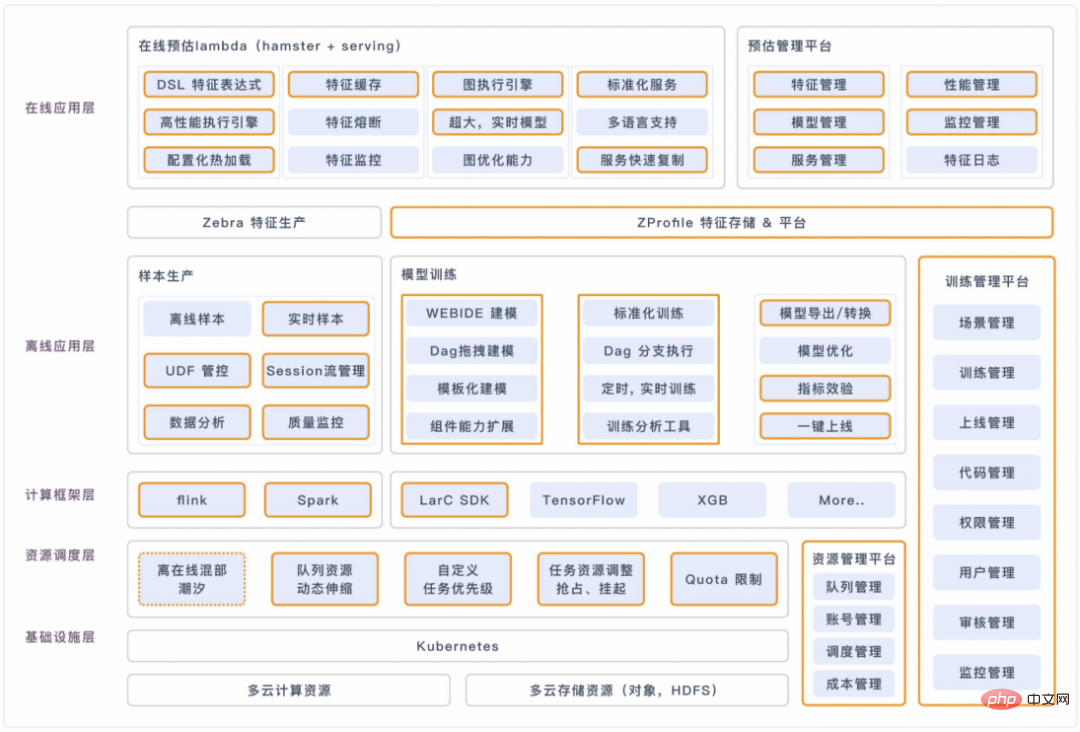

The capabilities of the LarC machine learning platform are now fairly complete, covering multiple levels from the underlying infrastructure to the computing framework, resource scheduling, offline applications, and online deployment (the parts marked in yellow have already been implemented).

With the LarC machine learning platform, the Xiaohongshu technical team hopes to help all algorithm engineers process massive data and train large-scale machine learning and deep learning models quickly and efficiently.

3. Summary

Xiaohongshu is a rapidly developing content community. "Ordinary people", "real sharing" and "life experience" are its keywords.

A scenario with this much multi-modal data and user feedback has prompted a great deal of cutting-edge technical exploration. The points above are a selection from a much larger body of technical work, and there is a lot more that could be shared. I hope they give everyone a better understanding of Xiaohongshu's technology and of large-scale deep learning.

The above is the detailed content of Xiaohongshu's 'grass planting” mechanism is decrypted for the first time: how large-scale deep learning system technology is applied. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

Methods and steps for using BERT for sentiment analysis in Python

Jan 22, 2024 pm 04:24 PM

Methods and steps for using BERT for sentiment analysis in Python

Jan 22, 2024 pm 04:24 PM

BERT is a pre-trained deep learning language model proposed by Google in 2018. The full name is BidirectionalEncoderRepresentationsfromTransformers, which is based on the Transformer architecture and has the characteristics of bidirectional encoding. Compared with traditional one-way coding models, BERT can consider contextual information at the same time when processing text, so it performs well in natural language processing tasks. Its bidirectionality enables BERT to better understand the semantic relationships in sentences, thereby improving the expressive ability of the model. Through pre-training and fine-tuning methods, BERT can be used for various natural language processing tasks, such as sentiment analysis, naming

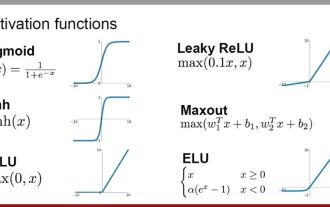

Analysis of commonly used AI activation functions: deep learning practice of Sigmoid, Tanh, ReLU and Softmax

Dec 28, 2023 pm 11:35 PM

Analysis of commonly used AI activation functions: deep learning practice of Sigmoid, Tanh, ReLU and Softmax

Dec 28, 2023 pm 11:35 PM

Activation functions play a crucial role in deep learning. They can introduce nonlinear characteristics into neural networks, allowing the network to better learn and simulate complex input-output relationships. The correct selection and use of activation functions has an important impact on the performance and training results of neural networks. This article will introduce four commonly used activation functions: Sigmoid, Tanh, ReLU and Softmax, starting from the introduction, usage scenarios, advantages, disadvantages and optimization solutions. Dimensions are discussed to provide you with a comprehensive understanding of activation functions. 1. Sigmoid function Introduction to SIgmoid function formula: The Sigmoid function is a commonly used nonlinear function that can map any real number to between 0 and 1. It is usually used to unify the

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

Latent space embedding: explanation and demonstration

Jan 22, 2024 pm 05:30 PM

Latent space embedding: explanation and demonstration

Jan 22, 2024 pm 05:30 PM

Latent Space Embedding (LatentSpaceEmbedding) is the process of mapping high-dimensional data to low-dimensional space. In the field of machine learning and deep learning, latent space embedding is usually a neural network model that maps high-dimensional input data into a set of low-dimensional vector representations. This set of vectors is often called "latent vectors" or "latent encodings". The purpose of latent space embedding is to capture important features in the data and represent them into a more concise and understandable form. Through latent space embedding, we can perform operations such as visualizing, classifying, and clustering data in low-dimensional space to better understand and utilize the data. Latent space embedding has wide applications in many fields, such as image generation, feature extraction, dimensionality reduction, etc. Latent space embedding is the main

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

In today's wave of rapid technological changes, Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL) are like bright stars, leading the new wave of information technology. These three words frequently appear in various cutting-edge discussions and practical applications, but for many explorers who are new to this field, their specific meanings and their internal connections may still be shrouded in mystery. So let's take a look at this picture first. It can be seen that there is a close correlation and progressive relationship between deep learning, machine learning and artificial intelligence. Deep learning is a specific field of machine learning, and machine learning

Super strong! Top 10 deep learning algorithms!

Mar 15, 2024 pm 03:46 PM

Super strong! Top 10 deep learning algorithms!

Mar 15, 2024 pm 03:46 PM

Almost 20 years have passed since the concept of deep learning was proposed in 2006. Deep learning, as a revolution in the field of artificial intelligence, has spawned many influential algorithms. So, what do you think are the top 10 algorithms for deep learning? The following are the top algorithms for deep learning in my opinion. They all occupy an important position in terms of innovation, application value and influence. 1. Deep neural network (DNN) background: Deep neural network (DNN), also called multi-layer perceptron, is the most common deep learning algorithm. When it was first invented, it was questioned due to the computing power bottleneck. Until recent years, computing power, The breakthrough came with the explosion of data. DNN is a neural network model that contains multiple hidden layers. In this model, each layer passes input to the next layer and

From basics to practice, review the development history of Elasticsearch vector retrieval

Oct 23, 2023 pm 05:17 PM

From basics to practice, review the development history of Elasticsearch vector retrieval

Oct 23, 2023 pm 05:17 PM

1. Introduction Vector retrieval has become a core component of modern search and recommendation systems. It enables efficient query matching and recommendations by converting complex objects (such as text, images, or sounds) into numerical vectors and performing similarity searches in multidimensional spaces. From basics to practice, review the development history of Elasticsearch vector retrieval_elasticsearch As a popular open source search engine, Elasticsearch's development in vector retrieval has always attracted much attention. This article will review the development history of Elasticsearch vector retrieval, focusing on the characteristics and progress of each stage. Taking history as a guide, it is convenient for everyone to establish a full range of Elasticsearch vector retrieval.

AlphaFold 3 is launched, comprehensively predicting the interactions and structures of proteins and all living molecules, with far greater accuracy than ever before

Jul 16, 2024 am 12:08 AM

AlphaFold 3 is launched, comprehensively predicting the interactions and structures of proteins and all living molecules, with far greater accuracy than ever before

Jul 16, 2024 am 12:08 AM

Editor | Radish Skin Since the release of the powerful AlphaFold2 in 2021, scientists have been using protein structure prediction models to map various protein structures within cells, discover drugs, and draw a "cosmic map" of every known protein interaction. . Just now, Google DeepMind released the AlphaFold3 model, which can perform joint structure predictions for complexes including proteins, nucleic acids, small molecules, ions and modified residues. The accuracy of AlphaFold3 has been significantly improved compared to many dedicated tools in the past (protein-ligand interaction, protein-nucleic acid interaction, antibody-antigen prediction). This shows that within a single unified deep learning framework, it is possible to achieve