Researchers in Meta's AI research division recently announced key progress in two areas: adaptive skill coordination for robots and replication of the visual cortex. They say these advances allow AI-powered robots to operate in the real world through vision, without needing to acquire any data from the real world.

They claim this is a major advance toward general-purpose "embodied AI" robots that can interact with the real world without human intervention. The researchers also said they created an artificial visual cortex called "VC-1", trained on the Ego4D dataset, which contains video of daily activities recorded by thousands of research participants around the world.

As the researchers explained in a previously published blog post, the visual cortex is the area of the brain that enables organisms to convert vision into movement. Therefore, having an artificial visual cortex is a key requirement for any robot that needs to perform tasks based on the scene in front of it.

Because VC-1 must perform a range of different sensorimotor tasks well in a variety of environments, the Ego4D dataset plays a particularly important role: it contains thousands of hours of video that research participants recorded with wearable cameras during daily activities, including cooking, cleaning, exercising, crafting, and more.

The researchers said: "Biological organisms have one general-purpose visual cortex, and that is what we are looking for in embodied agents. Therefore, we set out to create a dataset that performs well across multiple tasks, with Ego4D as the core dataset, and to improve VC-1 by adding additional datasets. Since Ego4D mainly focuses on daily activities such as cooking, gardening, and crafting, we also adopted a dataset of egocentric videos exploring houses and apartments."

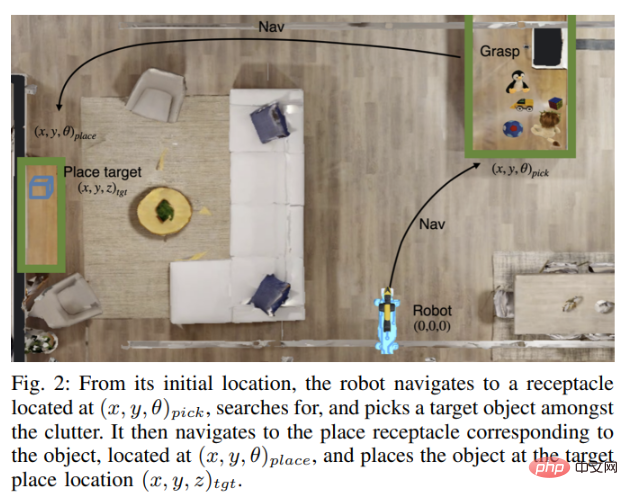

However, the visual cortex is only one element of embodied AI. For a robot to work fully autonomously in the real world, it must also be able to manipulate objects there. The robot needs vision to navigate, find and pick up an object, carry it to another location, and then place it correctly, performing all of these actions autonomously based on what it sees and hears.

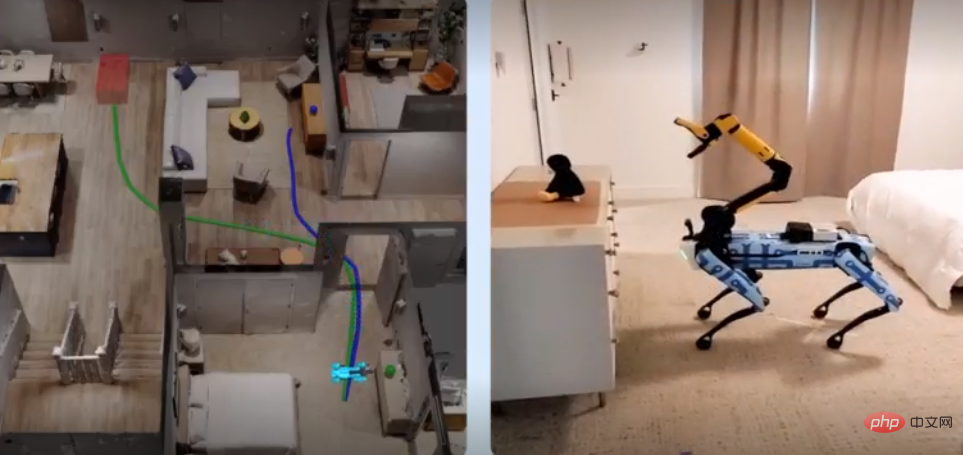

To solve this problem, Meta's AI experts teamed up with researchers at Georgia Tech to develop a new technique called Adaptive Skill Coordination (ASC), in which robots are trained in simulation and the skills they learn are then transferred to real-world robots.

Meta also collaborated with Boston Dynamics to demonstrate the effectiveness of ASC. The two companies combined ASC with Boston Dynamics' Spot robot, which has powerful sensing, navigation and manipulation capabilities but normally requires significant human intervention to use them. For example, picking up an object requires someone to click on the object displayed on the robot's tablet.

The researchers wrote in the article: "Our goal is to build an AI model that can perceive the world from onboard sensing and motor commands through the Boston Dynamics API."

The Spot robot was trained in the Habitat simulator, in environments built from the HM3D and ReplicaCAD datasets, which contain indoor 3D scans of over 1,000 homes. In simulation, Spot learned to move around a house it had not seen before, picking up objects and placing them in appropriate locations. The knowledge gained by the simulated Spot robots was then transferred to Spot robots operating in the real world, which automatically performed the same tasks based on their knowledge of the layout of the house.

The researchers wrote: "We used two very different real-world environments: a 185-square-meter fully furnished apartment and a 65-square-meter university laboratory. The Spot robot was tested by requiring it to relocate various items. Overall, the Spot robot with ASC performed nearly flawlessly, succeeding in 59 out of 60 tests and overcoming hardware instability, picking failures, and adversarial disturbances such as moving obstacles or blocked paths."

Meta researchers said they have also open-sourced the VC-1 model and, in a separate paper, shared details on how to scale model size, dataset size, and more. In the meantime, the team's next focus will be integrating VC-1 with ASC to create a more human-like embodied AI system.
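To put the reported result in perspective, the 59 successes in 60 tests quoted above can be turned into a success rate with a few lines of Python. This is only an illustrative calculation; the function name `success_rate` is made up for this example and does not come from Meta's code.

```python
def success_rate(successes: int, trials: int) -> float:
    """Return the fraction of successful trials (hypothetical helper)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    return successes / trials


# Figures quoted in the article: 59 successes out of 60 tests.
rate = success_rate(59, 60)
print(f"{rate:.1%}")  # prints 98.3%
```

In other words, the robot failed in only one of the 60 real-world tests across the two environments.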
