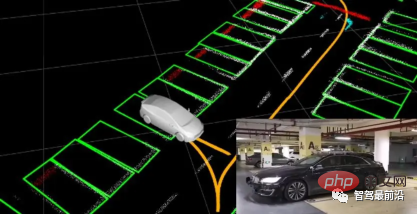

Positioning plays an irreplaceable role in autonomous driving and has promising prospects. Currently, positioning in autonomous driving relies on RTK and high-precision maps, which adds considerable cost and difficulty to deployment. Consider that when humans drive, they do not need to know their precise global position or every detail of the surrounding environment. A global navigation route, and the ability to match the vehicle's position against that route, are enough. This is where the key technologies of the SLAM field come in.

SLAM (Simultaneous Localization and Mapping), also known as CML (Concurrent Mapping and Localization), means building a map and localizing within it at the same time. The problem can be described as follows: place a robot at an unknown location in an unknown environment. Is there a way for the robot to gradually draw a complete map of the environment while deciding which direction it should move in? A robot vacuum cleaner is a very typical example of the SLAM problem. A complete map (a consistent map) here means one that lets the robot reach every accessible corner of the room without being blocked.

SLAM was first proposed by Smith, Self and Cheeseman in 1988. Due to its important theoretical and application value, it is considered by many scholars to be the key to realizing a truly fully autonomous mobile robot.

Consider, by analogy, a person who arrives in an unfamiliar environment. To get familiar with the surroundings quickly and complete a task (such as finding a restaurant or a hotel), the person does the following in order:

a. Use your eyes to observe surrounding landmarks such as buildings, large trees, and flower beds, and remember their features (feature extraction)

b. In your mind, reconstruct the landmarks in a three-dimensional map from the information obtained by both eyes (three-dimensional reconstruction)

c. As you walk, keep acquiring new landmarks and correct the map model in your mind (bundle adjustment or EKF)

d. Determine your position (trajectory) from the landmarks you acquired while walking earlier

e. After you have unintentionally walked a long way, match what you see against the landmarks in your memory to check whether you have returned to a path you took before (loop-closure detection). In practice, this step is optional.

These five steps are performed simultaneously, hence the name Simultaneous Localization and Mapping.

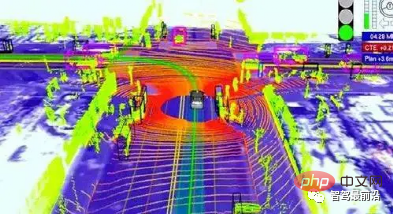

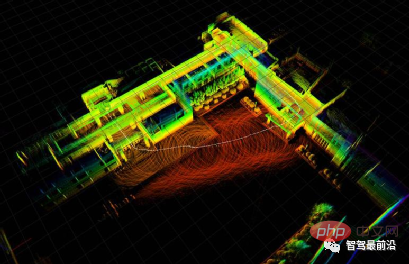

The sensors currently used in SLAM fall into two main categories: lidar and cameras. Lidar can be divided into single-line and multi-line types, which differ in angular resolution and accuracy.

Visual SLAM (VSLAM) is implemented mainly with cameras. There are many types of cameras, broadly divided into monocular, binocular (stereo), monocular structured light, binocular structured light, and ToF. Their common goal is to obtain RGB images and a depth map (depth information). Because cameras are cheap to manufacture, visual SLAM has become increasingly popular in recent years. Real-time mapping and positioning with low-cost cameras is nevertheless technically very difficult. Take ToF (Time of Flight), a promising depth acquisition method, as an example.

The sensor emits modulated near-infrared light, which is reflected when it hits an object. The sensor measures the time difference or phase difference between emission and reception and converts it into the distance to the scene, producing depth information. The principle is similar to radar, or to a bat's echolocation. SoftKinetic's DS325 uses a ToF solution (designed by TI). Its receiver has a rather special microstructure, with two or more shutters, and can measure picosecond-level time differences. However, the pixel size is typically around 100 µm, so resolution is currently limited.
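The time-difference and phase-difference conversions described above can be sketched in a few lines. This is a minimal illustration, not the DS325's actual processing: the function names are hypothetical, and the 30 MHz modulation frequency mentioned in the usage note is an assumed value chosen only for the example.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_distance_from_time(round_trip_seconds: float) -> float:
    """Distance from the round-trip travel time of the light pulse.

    The light travels to the object and back, so the path is halved.
    """
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def tof_distance_from_phase(phase_shift_rad: float, modulation_hz: float) -> float:
    """Distance from the phase shift of continuously modulated light.

    A full 2*pi shift corresponds to one modulation wavelength of
    round-trip travel, which gives d = c * phase / (4 * pi * f).
    """
    return SPEED_OF_LIGHT * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz: float) -> float:
    """Largest distance measurable before the phase wraps around."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)
```

For instance, `tof_distance_from_time(1e-12)` is about 0.15 mm, which shows why picosecond-level timing matters for fine depth resolution. With an assumed 30 MHz modulation, `unambiguous_range(30e6)` is roughly 5 m, and a phase shift of `math.pi` maps to half of that.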

After the depth map is obtained, the SLAM algorithm begins its work. Depending on the sensors and requirements, SLAM takes slightly different forms. It can be roughly divided into laser SLAM (further divided into 2D and 3D) and visual SLAM (further divided into sparse, semi-dense, and dense), but the main ideas are similar.

SLAM technology is very practical, but also quite difficult. In autonomous driving, which requires precise positioning at all times, deploying SLAM is likewise full of difficulties. Generally speaking, a SLAM implementation has to address the following four aspects:

1. Map representation. Dense and sparse maps, for example, are different representations, and the choice depends on the needs of the actual scene;

2. Information perception. You need to consider how to perceive the environment comprehensively. The FOV of an RGB-D camera is usually small, whereas that of a lidar is larger;

3. Data association. Different sensors have different data types, timestamps, and coordinate-system conventions, which must be handled in a unified way;

4. Localization and mapping. This refers to how pose estimation and modeling are achieved, which involves many mathematical problems, physical modeling, state estimation, and optimization. Other issues include loop-closure detection, the exploration problem, and the kidnapping problem.

The currently popular visual SLAM frameworks consist mainly of a front-end and a back-end:

Front-end

The front-end is equivalent to VO (visual odometry), which studies the transformation between frames.

First, feature points are extracted from each frame and matched between adjacent frames. RANSAC is then used to reject outliers, and the remaining matches are registered to obtain a pose (position and attitude). The attitude information provided by the IMU (Inertial Measurement Unit) can be used at the same time for filtering and fusion. The back-end mainly optimizes the front-end results, using either filtering theory (EKF, UKF, PF) or optimization tools such as TORO and g2o, which perform tree or graph optimization. The result is the optimal pose estimate.
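The outlier-rejection step of the front-end can be illustrated with a small RANSAC sketch. It assumes the feature points have already been matched and, to keep the example short, works with 2D points and a rigid transform (rotation plus translation); a real visual front-end would estimate a 3D pose. The function names and the default `iters` and `tol` values are hypothetical.

```python
import numpy as np


def fit_rigid_2d(src, dst):
    """Least-squares rotation R and translation t so that dst ~ src @ R.T + t."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)          # 2x2 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def ransac_rigid_2d(src, dst, iters=200, tol=0.05, seed=0):
    """Estimate a rigid transform from matches that may contain outliers."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        # Two matches are the minimal sample for a 2D rigid transform.
        idx = rng.choice(len(src), size=2, replace=False)
        R, t = fit_rigid_2d(src[idx], dst[idx])
        residuals = np.linalg.norm(src @ R.T + t - dst, axis=1)
        inliers = residuals < tol
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers.sum() < 2:
        raise ValueError("RANSAC found no consistent set of matches")
    # Refit on all inliers of the best hypothesis.
    R, t = fit_rigid_2d(src[best_inliers], dst[best_inliers])
    return R, t, best_inliers
```

Given two `(N, 2)` arrays of matched points, `ransac_rigid_2d(src, dst)` returns the estimated rotation, the translation, and a boolean mask marking which matches were kept as inliers.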

Back-end

The back-end is harder and involves more mathematics. Generally speaking, the field has gradually moved away from traditional filtering theory and toward graph optimization.

The reason is that with filtering-based approaches, the filter state grows too quickly as the map expands. This puts heavy pressure on the EKF (Extended Kalman Filter), which requires frequent matrix inversion, and on the PF.
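To make the inversion cost concrete, here is a minimal sketch of a single EKF measurement update. It assumes the common EKF-SLAM layout in which one state vector holds a 2D robot pose plus every 2D landmark; that layout and the helper `ekf_slam_covariance_entries` are illustrative assumptions, not something the text above specifies.

```python
import numpy as np


def ekf_update(x, P, z, h, H, R):
    """One EKF measurement update.

    x: state mean, P: state covariance, z: measurement,
    h: measurement function, H: Jacobian of h at x, R: measurement noise.
    """
    y = z - h(x)                          # innovation
    S = H @ P @ H.T + R                   # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)        # Kalman gain, needs a matrix inverse
    x_new = x + K @ y
    P_new = (np.eye(len(x)) - K @ H) @ P  # touches the whole covariance
    return x_new, P_new


def ekf_slam_covariance_entries(n_landmarks):
    """Entries in P for an assumed state of (x, y, heading) plus 2D landmarks."""
    dim = 3 + 2 * n_landmarks
    return dim * dim
```

Under this assumed layout, 100 landmarks already give a 203 x 203 covariance, and every update rewrites all of it, so the cost per step grows quadratically with the size of the map.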

Graph-based SLAM usually builds nodes from keyframes and stores the relative transformations between nodes, such as affine transformation matrices. Key nodes are maintained continuously to bound the size of the graph, reducing the amount of computation while preserving accuracy.
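The idea of nodes connected by relative-transformation constraints can be shown with a toy pose graph. The sketch below is deliberately reduced to 1D positions, where the problem becomes linear least squares; real back-ends such as g2o optimize 2D or 3D poses with nonlinear solvers. The function name and the edge format are hypothetical.

```python
import numpy as np


def optimize_pose_graph_1d(n_nodes, edges):
    """Solve a 1D pose graph by weighted linear least squares.

    edges: iterable of (i, j, measured, weight), where `measured` is the
    observed value of x[j] - x[i].
    """
    rows, rhs = [], []
    # Anchor node 0 at the origin; otherwise the whole graph could slide freely.
    anchor = np.zeros(n_nodes)
    anchor[0] = 1.0
    rows.append(anchor)
    rhs.append(0.0)
    for i, j, measured, weight in edges:
        row = np.zeros(n_nodes)
        row[i], row[j] = -1.0, 1.0
        rows.append(np.sqrt(weight) * row)
        rhs.append(np.sqrt(weight) * measured)
    A = np.vstack(rows)
    b = np.array(rhs)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x


# Three odometry edges of 1.0 each, plus a loop closure saying that
# node 0 lies 2.7 behind node 3 (odometry alone would say 3.0).
edges = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, 1.0, 1.0), (3, 0, -2.7, 1.0)]
poses = optimize_pose_graph_1d(4, edges)
```

With equal weights, the 0.3 disagreement between odometry and the loop closure is spread evenly over the four constraints, so `poses` comes out close to `[0, 0.925, 1.85, 2.775]` instead of the raw odometry chain `[0, 1, 2, 3]`.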

SLAM technology has been deployed successfully in many fields, including indoor mobile robots, AR, and drones. In autonomous driving, however, it has not received much attention. On the one hand, most of the industry currently solves positioning with RTK, so few resources are invested in in-depth SLAM research. On the other hand, the technology is not yet mature, and in a safety-critical field such as autonomous driving, any new technology must pass the test of time before it is accepted.

In the future, as sensor accuracy gradually improves, SLAM will also prove its worth in autonomous driving, where its low cost and high robustness will bring revolutionary changes. As SLAM technology becomes more popular, more and more positioning specialists will move into autonomous driving, bringing fresh talent, new technical directions, and new research areas.
