Turing Award winner Yann LeCun is one of the three giants of the AI field, so the papers he publishes are naturally studied as a "Bible".

Recently, however, someone publicly accused LeCun of "rehashing" others' work: "It is nothing more than a rephrasing of my core ideas."

Could it be...

Jürgen Schmidhuber, the "father of LSTM", stated in a long article that he hopes readers will study the original papers and judge the scientific content of his comments for themselves, and that his own work will receive due recognition.

At the beginning of his paper, "A Path Towards Autonomous Machine Intelligence", LeCun states that many of the ideas it describes (almost all of them) have been put forward by many authors in different contexts and in different forms. Schmidhuber counters that, unfortunately, most of the paper's content is "similar" to papers he has published since 1990, yet those papers are not cited.

Let's first look at some of the evidence he presents against LeCun this time.

Evidence 1:

LeCun: Today's artificial intelligence research must solve three main challenges: (1) how machines can learn to represent the world, learn to predict, and learn to act, primarily through observation; (2) how machines can reason and plan in ways compatible with gradient-based learning; (3) how machines can learn representations for perception (3a) and action planning (3b) in a hierarchical manner, at multiple levels of abstraction and multiple time scales.

Schmidhuber: These issues were addressed in detail in a series of papers published in 1990, 1991, 1997 and 2015.

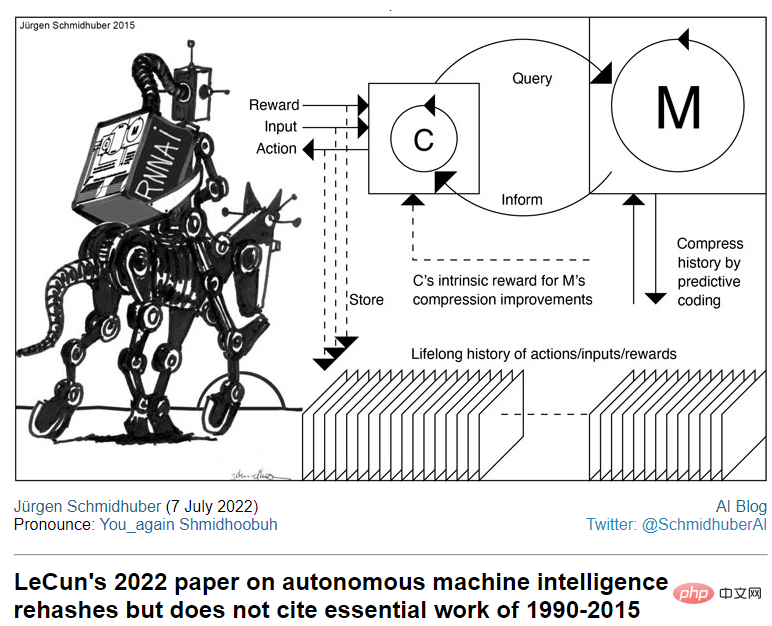

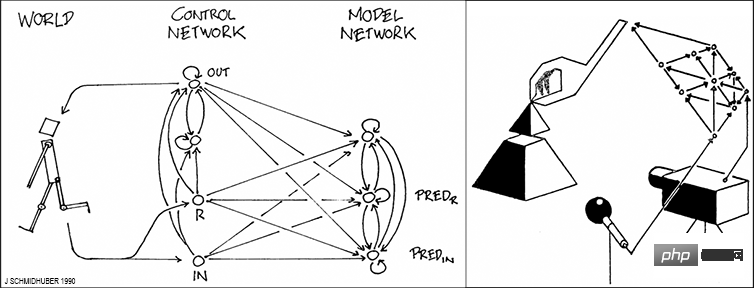

In 1990, the first work on gradient-based artificial neural networks (NNs) for long-term planning, reinforcement learning (RL), and exploration through artificial curiosity was published.

It describes the combination of two recurrent neural networks (RNNs, the most powerful kind of NN), called the controller and the world model.

The world model learns to predict the consequences of the controller's actions. The controller can then use the world model to plan several time steps ahead and select the action sequence that maximizes the predicted reward.
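To make the controller/world-model idea concrete, here is a minimal Python sketch. It assumes a hypothetical, hand-written `toy_world_model` in place of a trained RNN, and it plans by brute force: every short action sequence is rolled out inside the model, and the one with the highest predicted reward is kept. This exhaustive search is a simplification chosen for illustration; the 1990 system described above was gradient-based.

```python
from itertools import product


def toy_world_model(state, action):
    """Hypothetical stand-in for a learned world model.

    Predicts (next_state, reward) for taking `action` in `state`.
    Assumed dynamics: the action shifts the state; reward is higher near state 3.
    """
    next_state = state + action
    reward = -abs(next_state - 3)
    return next_state, reward


def plan(state, actions=(-1, 0, 1), horizon=3, model=toy_world_model):
    """Return the action sequence with the highest total predicted reward.

    Every sequence of length `horizon` is simulated inside the model only;
    no real environment is touched while planning.
    """
    best_seq, best_return = None, float("-inf")
    for seq in product(actions, repeat=horizon):
        s, total = state, 0.0
        for a in seq:
            s, r = model(s, a)
            total += r
        if total > best_return:
            best_seq, best_return = seq, total
    return best_seq, best_return


print(plan(0))  # → ((1, 1, 1), -3.0)
```

Enumeration grows exponentially with the horizon, which is one reason gradient-based planning through a differentiable model is attractive.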

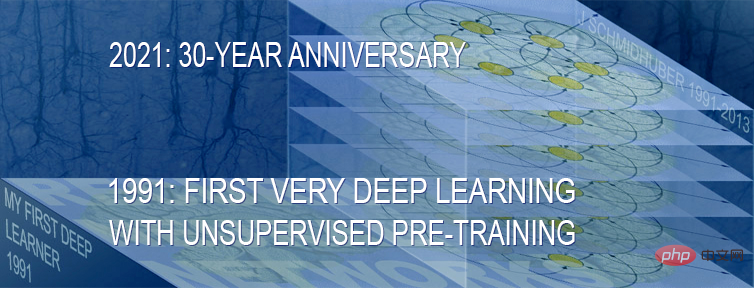

As for neural-network-based hierarchical perception (3a), this question was at least partially answered by my 1991 "first very deep learning machine", the Neural Sequence Chunker.

It uses unsupervised learning and predictive coding in a deep hierarchy of recurrent neural networks (RNNs) to find "internal representations of long data sequences".

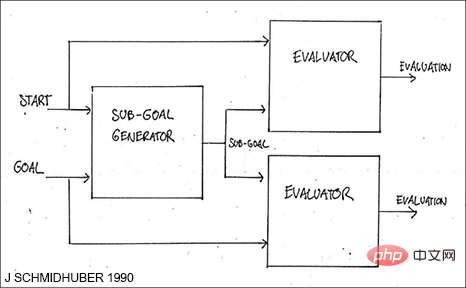

Neural-network-based hierarchical action planning (3b) was at least partially addressed in 1990 by my paper on hierarchical reinforcement learning (HRL).

Evidence 2:

LeCun: Since both sub-modules of the cost module are differentiable, the gradient of the energy can be back-propagated through the other modules, in particular the world model, the actor, and the perception module.

Schmidhuber: This is exactly what I published in 1990, citing the "System Identification with Feedforward Neural Networks" paper published in 1980.
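The shared idea here is that a differentiable cost lets gradients flow back through the world model to whatever produced the actions. Below is a minimal sketch, assuming a hypothetical one-dimensional world model `next_state = state + action` and a squared-distance cost to a goal. The backward pass is written out by hand (reverse-mode accumulation), and for simplicity the gradient updates the action sequence directly rather than the weights of a controller network.

```python
def rollout(s0, actions):
    """Run the hypothetical world model: each action is added to the state."""
    states, s = [], s0
    for a in actions:
        s = s + a
        states.append(s)
    return states


def cost(states, goal):
    """Differentiable cost: squared distance from the goal, summed over time."""
    return sum((s - goal) ** 2 for s in states)


def grad_wrt_actions(s0, actions, goal):
    """Backpropagate dCost/dAction through the world model.

    states[t] depends on actions[0..t] with derivative 1, so
    dC/da_k = sum over t >= k of 2 * (states[t] - goal); accumulate from the end.
    """
    states = rollout(s0, actions)
    grads = [0.0] * len(actions)
    running = 0.0
    for t in reversed(range(len(actions))):
        running += 2 * (states[t] - goal)
        grads[t] = running
    return grads


def plan_by_gradient(s0, goal, horizon=3, lr=0.05, steps=500):
    """Plain gradient descent on the action sequence itself."""
    actions = [0.0] * horizon
    for _ in range(steps):
        g = grad_wrt_actions(s0, actions, goal)
        actions = [a - lr * gi for a, gi in zip(actions, g)]
    return actions


acts = plan_by_gradient(0.0, 3.0)
print(acts)  # the first action approaches 3.0; the others approach 0.0
```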

In 2000, my former postdoc Marcus Hutter even published a theoretically optimal, general, non-differentiable method for learning world models and controllers (see also the Gödel machine, a mathematically optimal, self-referential AGI).

Evidence 3:

LeCun: The architecture of the short-term memory module may be similar to that of a key-value memory network.

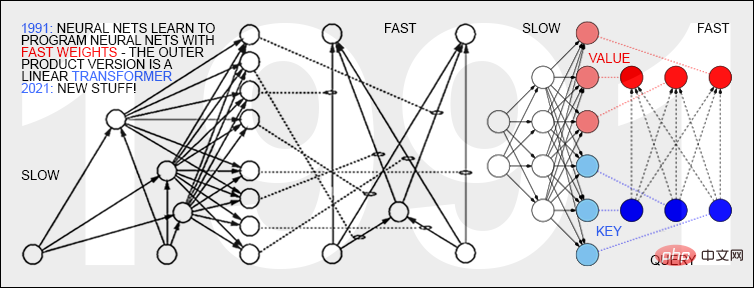

Schmidhuber: However, LeCun fails to mention that I published the first such "key-value memory network" in 1991, when I described sequence-processing "Fast Weight Controllers", or Fast Weight Programmers (FWPs). An FWP has a slow neural network that learns through backpropagation to rapidly modify the fast weights of another neural network.
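The key-value connection can be seen in a small Python sketch of the fast-weight mechanism. It is a simplified, hypothetical illustration: the key and value vectors are supplied by hand, standing in for what a trained slow network would emit, and each write simply adds the outer product of value and key to the fast-weight matrix, with no normalization or learning rate. With orthonormal keys, reading with a stored key returns its value.

```python
def zeros(rows, cols):
    """An all-zero fast-weight matrix (the memory starts empty)."""
    return [[0.0] * cols for _ in range(rows)]


def write(fast_w, key, value):
    """Store a (key, value) pair by adding the outer product value * key^T."""
    return [
        [fast_w[i][j] + value[i] * key[j] for j in range(len(key))]
        for i in range(len(value))
    ]


def read(fast_w, query):
    """Retrieve from memory: multiply the fast-weight matrix by the query."""
    return [sum(w * q for w, q in zip(row, query)) for row in fast_w]


# Hypothetical keys/values, standing in for the slow network's outputs.
k1, v1 = [1.0, 0.0], [5.0, 6.0]
k2, v2 = [0.0, 1.0], [7.0, 8.0]

W = zeros(2, 2)
W = write(W, k1, v1)
W = write(W, k2, v2)
print(read(W, k1))  # → [5.0, 6.0]
print(read(W, k2))  # → [7.0, 8.0]
```

If the keys were not orthogonal, a read would return a blend of stored values; practical variants add normalization or learned update rules for that reason.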

Evidence 4:

LeCun: The main original contributions of this paper are: (I) an overall cognitive architecture in which all modules are differentiable and many of them are trainable; (II) H-JEPA, a non-generative hierarchical architecture for predictive world models that learn representations at multiple levels of abstraction and multiple time scales; (III) a family of non-contrastive self-supervised learning paradigms that produce representations that are simultaneously informative and predictable; (IV) a way to use H-JEPA as the basis of predictive world models for hierarchical planning under uncertainty.

Schmidhuber went through these four contributions one by one and pointed out where each overlaps with his own papers.

At the end of the article, he states that his aim is not to attack the published paper or the ideas of its author. The key point is that these ideas are not as "original" as LeCun's paper claims.

He said: "Many of these ideas were put forward through the efforts of my colleagues and me. The 'main original contributions' that LeCun now proposes are in fact inseparable from my decades of research. I expect readers to judge the validity of my comments for themselves."

From the father of LSTM to...

In fact, this is not the first time Schmidhuber has claimed that others plagiarized his results.

As early as September last year, he wrote on his blog that the most-cited neural network papers build on work done in his laboratory:

"Needless to say LSTM, and other pioneering work that is famous today, such as ResNet, AlexNet, GAN, and the Transformer, is all related to my work. The first versions of some of it were done by me, but these people don't play fair and cite improperly, which has muddled the attribution of these results."

Angry as he is, it must be said that Jürgen Schmidhuber has indeed had a frustrating time over the years. He is a senior figure in AI with many groundbreaking achievements, yet the reputation and recognition he has received always seem to fall far short of expectations.

Especially in 2018, when the three giants of deep learning, Yoshua Bengio, Geoffrey Hinton, and Yann LeCun, won the Turing Award, many netizens asked why the award had not also gone to Jürgen Schmidhuber, the father of LSTM, who is also a master of deep learning.

Back in 2015, Bengio, Hinton, and LeCun jointly published a review in Nature titled simply "Deep Learning".

Starting from conventional machine learning, the article summarizes the main architectures and methods of modern machine learning, describing the backpropagation algorithm for training multilayer networks, the rise of convolutional neural networks, distributed representations and language processing, and recurrent neural networks and their applications, among other topics.

Less than a month later, Schmidhuber posted a criticism on his blog.

Schmidhuber said the article made him very unhappy because it repeatedly cited the three authors' own research while not mentioning at all the earlier contributions of other deep learning pioneers.

He believes that the "three giants of deep learning" who won the Turing Award have become thieves who covet others' credit and serve their own interests, using their standing in the field to praise one another and suppress the researchers who came before them.

In 2016, Jürgen Schmidhuber had a head-to-head confrontation with "the father of GAN", Ian Goodfellow, during a tutorial at the NIPS conference.

While Goodfellow was comparing GANs with other models, Schmidhuber stood up and interrupted with a question.

The question was very long, lasting about two minutes. He emphasized that he had proposed PM (Predictability Minimization) in 1992, explained its principles and implementation at length, and finally came to the point: "Can you tell me whether there are any similarities between your GAN and my PM?"

Goodfellow did not back down: "We have discussed the issue you mention many times by email, and I responded to you publicly a long time ago. I don't want to waste the audience's patience on it here."

Wait, wait...

Perhaps Schmidhuber's baffling behavior can be explained by an email from LeCun:

"Jürgen is too obsessed with recognition and keeps saying that he has not received much of the credit he deserves. Almost habitually, he stands up at the end of other people's talks and says that he deserves credit for the results just presented. Generally speaking, this behavior is unjustified."
