Hello, everyone.

I have previously shared projects on fall recognition and fight recognition. Today we will take fall recognition as an example and see whether the whole job can be handed over to ChatGPT.

To let ChatGPT do this, the most important thing is how you ask it questions. Throwing the whole problem at ChatGPT in one go, for example "write fall detection code in Python", is not advisable. Instead, like squeezing toothpaste, we guide ChatGPT step by step toward accurate answers, so that it truly improves our efficiency in solving problems.

The fall recognition case shared today follows a clear line of dialogue with ChatGPT and yields highly usable code. A fall recognition project can be developed from the results ChatGPT returns, and there are some extended topics as well, such as improvement points for a graduation project (bi she).

The formal sharing begins below.

To ask ChatGPT a question, you must first give it a role. In the algorithm field, this is called a prompt. Only once it has entered the role can it answer the question well. Therefore, the first sentence of my question is:

If you are an AI engineer, and I want to identify whether there is a fall in the video, what are the methods?

There is no need to have it write code right away. First ask what methods or ideas it has.

Let's take a look at ChatGPT's answer.

It gives three solutions, including traditional image-processing methods and deep learning methods.

Of course we are interested in deep learning, so we continue by asking about deep learning methods:

What are the methods of using deep learning

ChatGPT's answer

It covers everything from convolutional neural networks to convolutional recurrent neural networks.

The recurrent neural network (RNN) seems simpler and more reliable, so my next question is:

I need the method of recurrent neural network (RNN)

ChatGPT's answer

It gives very detailed and very practical steps. I will briefly group them into two steps:

The first step is video preprocessing: use a convolutional neural network (such as ResNet) to extract action features from the video (similar to a word embedding). The second step is to feed those action features into an LSTM model, train the LSTM recurrent neural network to classify them, and thereby identify fall behavior.

So far, ChatGPT has given us a very clear approach to the problem, and one that is highly actionable.
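The step-by-step questioning so far can be written down as data, which may help if you want to replay or script the same dialogue. This is only a minimal sketch: the `build_history` helper and the `role`/`content` dictionary layout are assumptions made for illustration, the answers are placeholders, and no ChatGPT API is called.

```python
# The three questions asked so far, in order, from broad to specific.
QUESTIONS = [
    "If you are an AI engineer, and I want to identify whether there is a "
    "fall in the video, what are the methods?",
    "What are the methods of using deep learning",
    "I need the method of recurrent neural network (RNN)",
]


def build_history(questions, answers):
    """Interleave questions with the answers received so far.

    Returns a list of {"role", "content"} dicts (a hypothetical layout)
    that ends with the next unanswered question.
    """
    history = []
    for i, question in enumerate(questions[: len(answers) + 1]):
        history.append({"role": "user", "content": question})
        if i < len(answers):
            history.append({"role": "assistant", "content": answers[i]})
    return history


# Placeholders stand in for ChatGPT's real answers.
history = build_history(QUESTIONS, ["<answer 1>", "<answer 2>"])
for message in history:
    print(message["role"], ":", message["content"][:50])
```

Each new question is asked with the earlier questions and answers still in the conversation, which is what lets a short follow-up such as the RNN question stay on topic.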

So at this point we can let ChatGPT write code.

Following the steps I laid out above, I ask it to write all of the code for the first step in one go, so my question is:

Write the video preprocessing code and use pytorch resnet to extract video features

ChatGPT’s answer

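OK, below is the code for extracting video features with PyTorch's ResNet, along with sample code for video preprocessing:

Video preprocessing:

    import cv2
    import numpy as np

    def preprocess_video(video_path, n_frames=16, resize=(224, 224)):
        # Read the video
        cap = cv2.VideoCapture(video_path)
        frames = []
        while True:
            ret, frame = cap.read()
            if not ret:
                break
            # Convert from OpenCV's BGR order to RGB
            frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
            # Resize the image
            frame = cv2.resize(frame, resize)
            # Image augmentation and other operations
            # ...
            frames.append(frame)
        cap.release()
        # Take a fixed number of frames, n_frames; if there are more, crop from the middle
        n_frames = min(len(frames), n_frames)
        start = (len(frames) - n_frames) // 2
        end = start + n_frames
        # Stack the selected frames into a 4-D array
        frames = np.stack(frames[start:end], axis=0)
        # Convert to the layout PyTorch image models expect: (T, C, H, W)
        frames = np.transpose(frames, (0, 3, 1, 2))
        return frames

Extracting video features:

    import torch
    import torchvision.models as models
    import torchvision.transforms as transforms

    def extract_features(frames):
        # Load the ResNet model
        resnet = models.resnet50(pretrained=True)
        # Remove the final fully connected layer
        resnet = torch.nn.Sequential(*list(resnet.children())[:-1])
        # Put ResNet in evaluation mode
        resnet.eval()
        # Convert the preprocessed frames to a float Tensor scaled to [0, 1]
        frames = torch.from_numpy(frames).float() / 255.0
        # Normalize with the ImageNet mean and standard deviation
        # (Normalize lives in torchvision.transforms; torch.nn has no Normalize)
        normalize = transforms.Normalize(
            mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]
        )
        frames = normalize(frames)
        # Forward pass; outputs 2048-dimensional feature vectors
        with torch.no_grad():
            features = resnet(frames)
        return features.reshape(-1, 2048)

In the two blocks above, preprocess_video() reads the video and converts it into image frames of the specified size, returning the processed data; extract_features() takes that data, converts it to a PyTorch Tensor, uses the pretrained ResNet model to extract features from every frame, and returns a Tensor of shape (B, 2048), where B is the number of frames.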

The screenshot is too long, so to make ChatGPT's answer easier to follow I have pasted the code as text.

It defines two independent functions, one for each of the two sub-steps of the first step.

The code is largely usable as it stands. First call preprocess_video to preprocess the video, then call extract_features, which runs the convolutional network and embeds each frame as a 2048-dimensional vector.
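One detail worth checking before training: preprocess_video takes `min(len(frames), n_frames)` frames, so a video shorter than `n_frames` produces a shorter clip, and clips of different lengths cannot be stacked into one batch by a default DataLoader. The sketch below shows one possible way to always return exactly `n_frames` frames. The helper name `sample_fixed_frames` and the choice to pad by repeating the last frame are assumptions for illustration, not part of ChatGPT's answer.

```python
import numpy as np


def sample_fixed_frames(frames, n_frames=16):
    """Return exactly n_frames frames: center-crop long clips, pad short ones.

    frames: array of shape (T, H, W, C). Short clips are padded by repeating
    the last frame (an assumption; zero-padding is another option).
    """
    frames = np.asarray(frames)
    total = frames.shape[0]
    if total == 0:
        raise ValueError("video contains no frames")
    if total >= n_frames:
        # Same center crop as in preprocess_video
        start = (total - n_frames) // 2
        return frames[start:start + n_frames]
    # Repeat the last frame until the clip reaches n_frames
    pad = np.repeat(frames[-1:], n_frames - total, axis=0)
    return np.concatenate([frames, pad], axis=0)


long_clip = np.zeros((40, 224, 224, 3), dtype=np.uint8)
short_clip = np.zeros((5, 224, 224, 3), dtype=np.uint8)
print(sample_fixed_frames(long_clip).shape)   # (16, 224, 224, 3)
print(sample_fixed_frames(short_clip).shape)  # (16, 224, 224, 3)
```

With every clip at the same length, the per-clip feature tensors all have shape (n_frames, 2048) and can be batched directly.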

I split the second step into two smaller steps: first build the LSTM model, then train it.

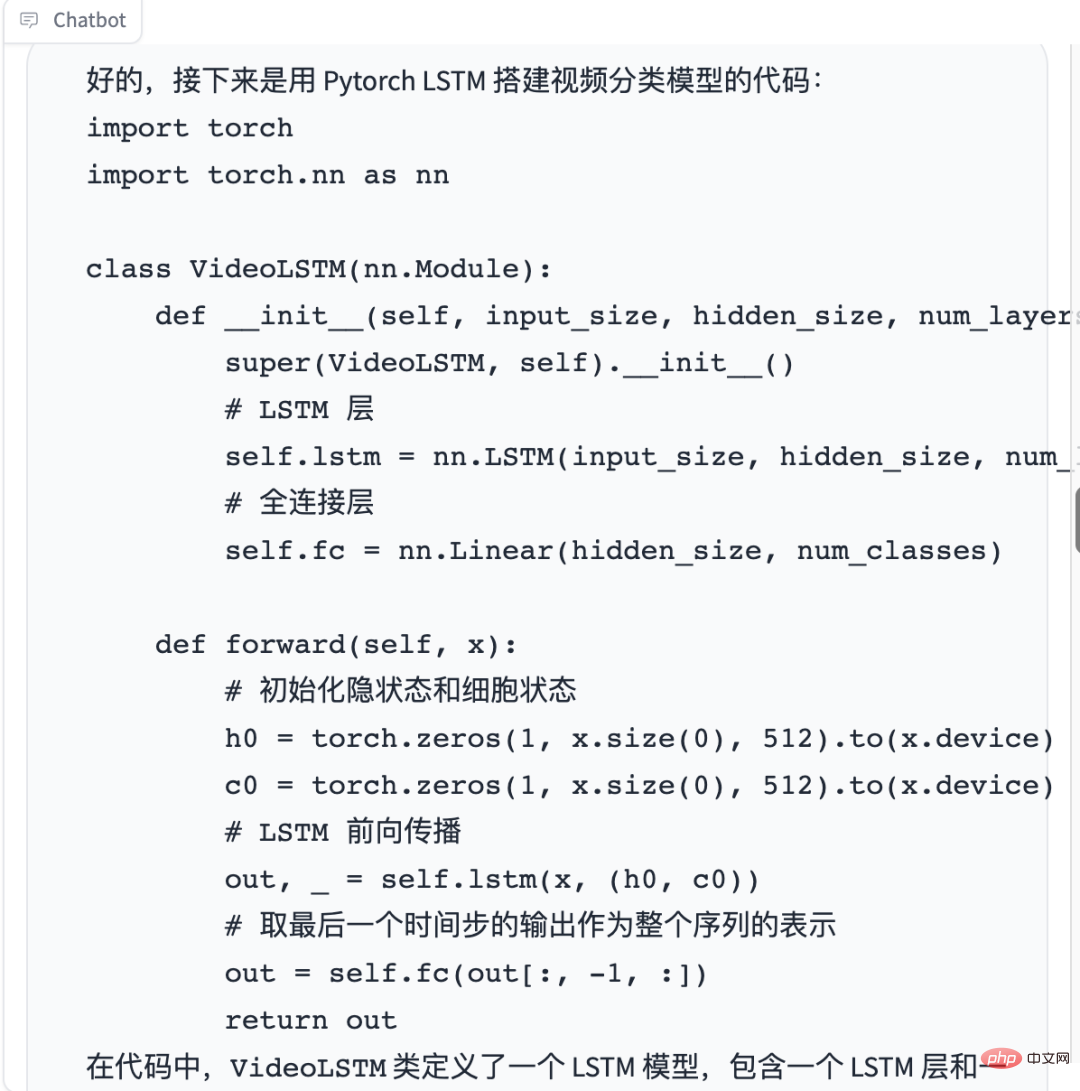

Based on the results produced above, use pytorch lstm to build the video classification model

ChatGPT’s answer
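This answer is not reproduced as text here, so the following is only a minimal sketch of what such a model might look like, not ChatGPT's actual output. The class name `VideoLSTM` and its constructor arguments `(input_size, hidden_size, num_layers, num_classes)` are taken from the training code later in this article; the internal layers and the use of the last time step for classification are assumptions.

```python
import torch
import torch.nn as nn


class VideoLSTM(nn.Module):
    """LSTM over per-frame features, followed by a linear classifier."""

    def __init__(self, input_size, hidden_size, num_layers, num_classes):
        super().__init__()
        # batch_first=True means inputs are (batch, frames, input_size)
        self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)
        self.fc = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        output, _ = self.lstm(x)          # (batch, frames, hidden_size)
        return self.fc(output[:, -1, :])  # logits from the last time step


model = VideoLSTM(input_size=2048, hidden_size=512, num_layers=1, num_classes=2)
clips = torch.randn(4, 16, 2048)  # 4 clips, 16 frames, 2048-dim ResNet features
logits = model(clips)
print(logits.shape)  # torch.Size([4, 2])
```

The input size of 2048 matches the feature vectors produced by extract_features, and the two output classes correspond to fall and no fall.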

Write the model training code

ChatGPT’s answer

OK, below is the code for training the video classification model with PyTorch:

    import torch
    import torch.nn as nn
    import torch.optim as optim

    # Training function
    def train(model, criterion, optimizer, dataloader, num_epochs=10):
        device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        model.to(device)  # Move the model to the GPU or CPU
        for epoch in range(num_epochs):
            running_loss = 0.0
            correct_predictions = 0
            total_predictions = 0
            for inputs, labels in dataloader:
                inputs = inputs.to(device)
                labels = labels.to(device)
                optimizer.zero_grad()
                with torch.set_grad_enabled(True):
                    outputs = model(inputs)
                    _, preds = torch.max(outputs, 1)
                    loss = criterion(outputs, labels)
                    loss.backward()
                    optimizer.step()
                running_loss += loss.item() * inputs.size(0)
                correct_predictions += torch.sum(preds == labels.data)
                total_predictions += labels.size(0)
            epoch_loss = running_loss / len(dataloader.dataset)
            epoch_acc = correct_predictions.double() / total_predictions
            print("Epoch {}: Loss {:.4f} Acc: {:.4f}".format(epoch + 1, epoch_loss, epoch_acc))

    # Define the hyperparameters
    BATCH_SIZE = 4
    NUM_EPOCHS = 20

    # Define the datasets, assuming the training and test sets are already prepared
    train_dataset = ...
    test_dataset = ...

    # Define the data loaders
    train_dataloader = torch.utils.data.DataLoader(
        train_dataset, batch_size=BATCH_SIZE, shuffle=True
    )
    test_dataloader = torch.utils.data.DataLoader(
        test_dataset, batch_size=BATCH_SIZE, shuffle=False
    )

    # Define the model (VideoLSTM is the class from the previous answer)
    input_size = 2048
    hidden_size = 512
    num_layers = 1
    num_classes = 2
    model = VideoLSTM(input_size, hidden_size, num_layers, num_classes)

    # Define the loss function and optimizer
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters())

    # Train the model
    train(model, criterion, optimizer, train_dataloader, num_epochs=NUM_EPOCHS)

The code first defines the hyperparameters, such as BATCH_SIZE and NUM_EPOCHS. It then defines the training and test datasets and uses PyTorch's DataLoader to load the data in batches. Next it defines the model, the loss function and the optimizer. Finally it calls the train() function to train the model.

During training, each epoch loops over all the video frames, computes the loss and the accuracy, and prints the average loss and accuracy for the current epoch. After training, the model's parameters can be saved to disk for later use.

The code is highly usable. Apart from the effort of stitching the pieces together, each independent module needs essentially no changes, so development is very efficient.
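The training answer defines a test data loader and mentions saving the parameters to disk, but shows neither step. A minimal sketch of both follows. The `evaluate` function, the small stand-in model, the random data and the file name `video_lstm.pt` are all assumptions for illustration; in the real project the trained LSTM model and the test data loader would take their place.

```python
import os
import tempfile

import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


@torch.no_grad()
def evaluate(model, dataloader, device="cpu"):
    """Return classification accuracy of model over dataloader."""
    model.to(device).eval()
    correct, total = 0, 0
    for inputs, labels in dataloader:
        inputs, labels = inputs.to(device), labels.to(device)
        preds = model(inputs).argmax(dim=1)
        correct += (preds == labels).sum().item()
        total += labels.size(0)
    return correct / max(total, 1)


# Stand-in model and random data so the sketch runs on its own.
stand_in = nn.Sequential(nn.Flatten(), nn.Linear(16 * 8, 2))
data = TensorDataset(torch.randn(6, 16, 8), torch.randint(0, 2, (6,)))
loader = DataLoader(data, batch_size=4, shuffle=False)
accuracy = evaluate(stand_in, loader)
print("accuracy:", accuracy)

# Save only the parameters (state_dict), then restore them into a new model.
path = os.path.join(tempfile.mkdtemp(), "video_lstm.pt")
torch.save(stand_in.state_dict(), path)
restored = nn.Sequential(nn.Flatten(), nn.Linear(16 * 8, 2))
restored.load_state_dict(torch.load(path))
restored_accuracy = evaluate(restored, loader)
```

Saving the state_dict rather than the whole model object keeps the file independent of how the class is defined, as long as the same architecture is rebuilt before loading.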

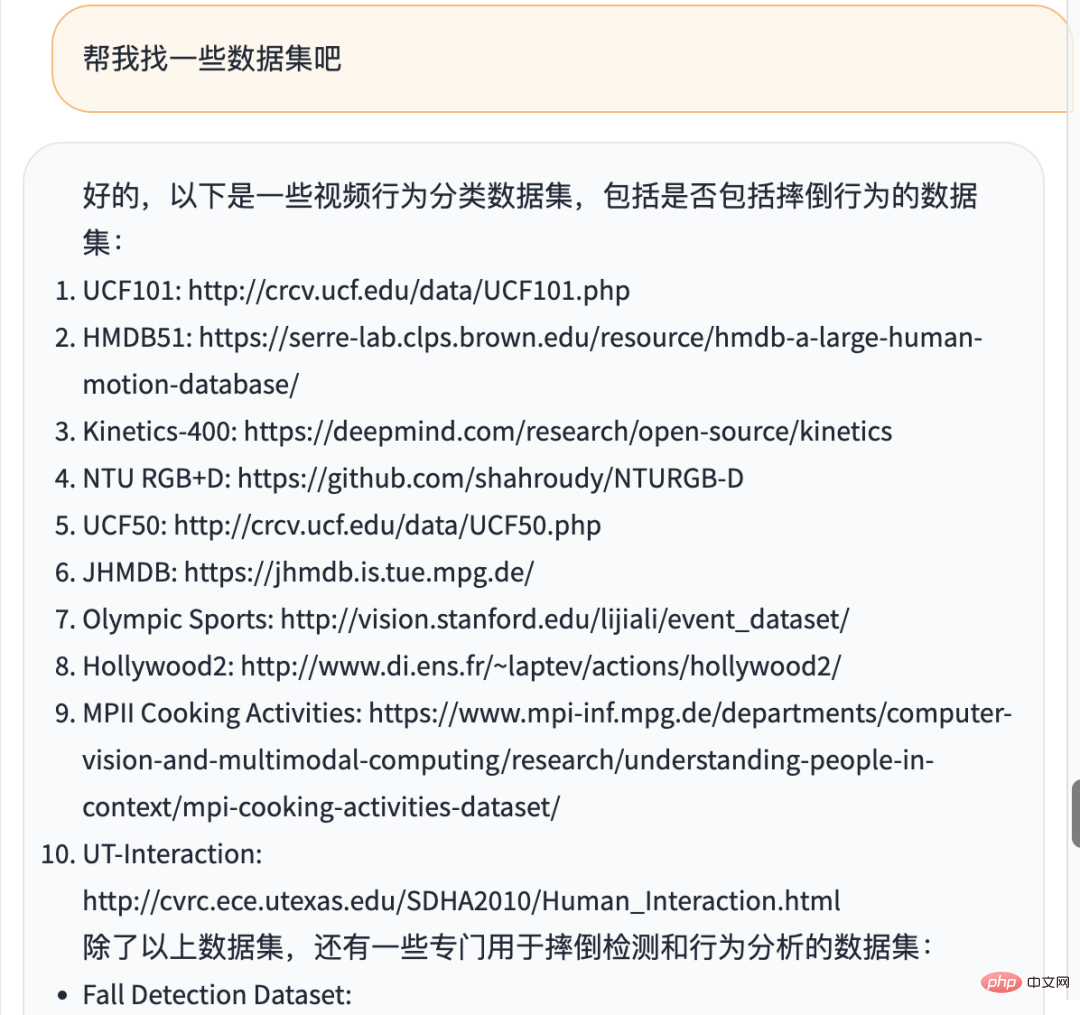

You can also ask ChatGPT to find some data sets

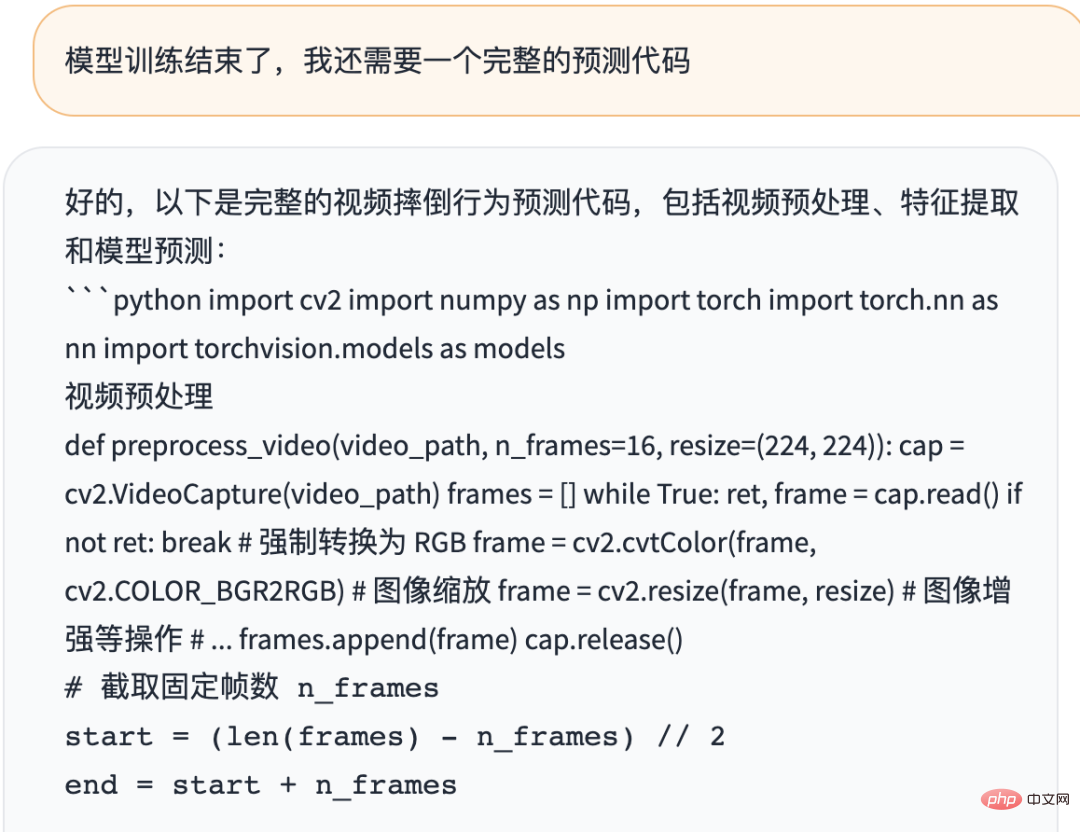

You can also ask it to write the code for model inference

It can automatically help us string together the three parts of video preprocessing, feature extraction and inference to form a complete project.
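The inference answer is shown only as an image, so here is a hedged sketch of the final stage: turning the features of one clip into a label. The function name `predict_clip`, the `LABELS` order and the stand-in classifier are assumptions for illustration; in the real project the features would come from preprocess_video and extract_features, and the model would be the trained LSTM.

```python
import torch
import torch.nn as nn

# Hypothetical class order; it must match the labels used during training.
LABELS = ("no_fall", "fall")


@torch.no_grad()
def predict_clip(model, features):
    """Classify one clip. features: tensor of shape (frames, feature_dim)."""
    model.eval()
    logits = model(features.unsqueeze(0))    # add batch dim -> (1, num_classes)
    probs = torch.softmax(logits, dim=1)[0]  # class probabilities
    index = int(probs.argmax())
    return LABELS[index], float(probs[index])


class StandInClassifier(nn.Module):
    """Placeholder for the trained model: mean-pools frames, then classifies."""

    def __init__(self, feature_dim=2048, num_classes=2):
        super().__init__()
        self.fc = nn.Linear(feature_dim, num_classes)

    def forward(self, x):
        return self.fc(x.mean(dim=1))


# Random features stand in for the output of extract_features on one clip.
label, confidence = predict_clip(StandInClassifier(), torch.randn(16, 2048))
print(label, round(confidence, 3))
```

Returning the probability alongside the label makes it possible to apply a threshold later, for instance to raise an alert only when the model is fairly confident that a fall occurred.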

At this point, we have completed the entire project using ChatGPT.

We can also talk about some extended topics, such as:

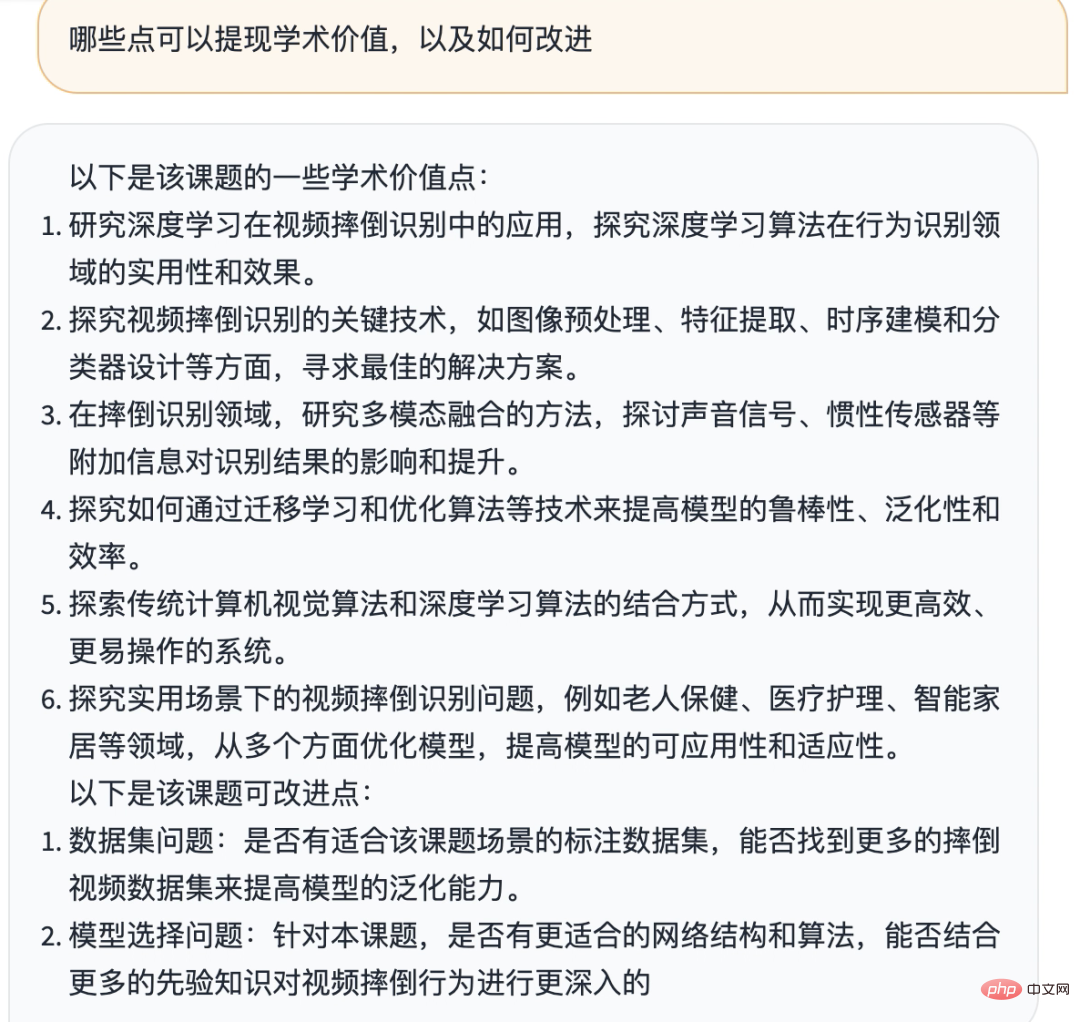

We can also ask ChatGPT to help us think of some points that can highlight academic value

If you have read this far, my article has been of some help to you. Whether or not you join my Planet, I am grateful for everyone's recognition and trust in me.
