Since OpenAI released GPT-4, the debate over "AI replacing human labor" has grown increasingly heated. The model's powerful capabilities and potential social impact have raised concerns for many people. Musk, Bengio, and others even signed an open letter calling on all AI labs to pause the training of AI models more powerful than GPT-4 for at least six months.

On the other hand, doubts about GPT-4's capabilities keep surfacing. A few days ago, Turing Award winner Yann LeCun argued bluntly in a debate that the autoregressive approach used by the GPT family is inherently flawed and that continuing down this path leads nowhere.

At the same time, some researchers and practitioners have argued that GPT-4 may not be as powerful as OpenAI has made it appear, especially at programming: it may simply be remembering problems it has already seen. The questions OpenAI used to test the model's programming ability may already have been in its training set, which violates a basic rule of machine learning. Others have pointed out that it is not rigorous to conclude that AI will replace certain professions just because GPT-4 ranks near the top on various exams; after all, there is still a gap between these exams and actual human work.

A recent blog post elaborates on these points.

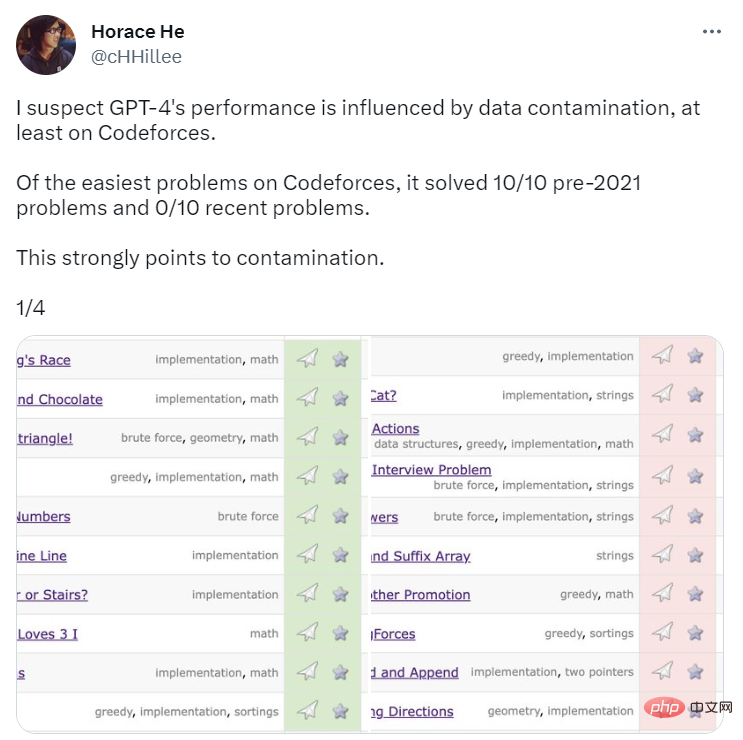

To benchmark GPT-4's programming ability, OpenAI evaluated it on problems from the competitive programming website Codeforces. Strikingly, GPT-4 solved 10 out of 10 pre-2021 problems but 0 out of 10 recent problems in the easy category. Note that GPT-4's training data cutoff is September 2021. This strongly suggests that the model can recall solutions from its training set, or at least recall them partially, which is enough for it to fill in what it doesn't remember.

Source: https://twitter.com/cHHillee/status/1635790330854526981

To test this hypothesis further, bloggers Arvind Narayanan and Sayash Kapoor tested GPT-4 on Codeforces problems from different dates in 2021. They found that it could solve easy-category problems posted before September 5, but none of those posted after September 12.
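The comparison above boils down to splitting problems by publication date around the training cutoff and comparing solve rates on each side. Below is a minimal Python sketch of that bookkeeping, assuming you already have a pass/fail result for each problem. The `Attempt` record, the function names, and the sample records are hypothetical illustrations, not the authors' data or code.

```python
from dataclasses import dataclass
from datetime import date
import math


@dataclass
class Attempt:
    """One model attempt on a benchmark problem (hypothetical record format)."""
    problem_id: str
    published: date  # date the problem was first posted
    solved: bool     # whether the model's solution was accepted


def _rate(outcomes: list[bool]) -> float:
    """Fraction of True values, or NaN when there are no outcomes."""
    return sum(outcomes) / len(outcomes) if outcomes else math.nan


def solve_rate_by_cutoff(attempts: list[Attempt], cutoff: date) -> tuple[float, float]:
    """Return (solve rate before cutoff, solve rate on or after cutoff)."""
    before = [a.solved for a in attempts if a.published < cutoff]
    after = [a.solved for a in attempts if a.published >= cutoff]
    return _rate(before), _rate(after)


# Toy, made-up records purely to illustrate the calculation.
attempts = [
    Attempt("toy-1", date(2021, 8, 10), True),
    Attempt("toy-2", date(2021, 8, 25), True),
    Attempt("toy-3", date(2021, 9, 14), False),
    Attempt("toy-4", date(2021, 9, 20), False),
]
print(solve_rate_by_cutoff(attempts, cutoff=date(2021, 9, 1)))  # (1.0, 0.0)
```

A large gap between the two rates is suggestive rather than conclusive: problems posted after the cutoff could, in principle, also just be harder.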

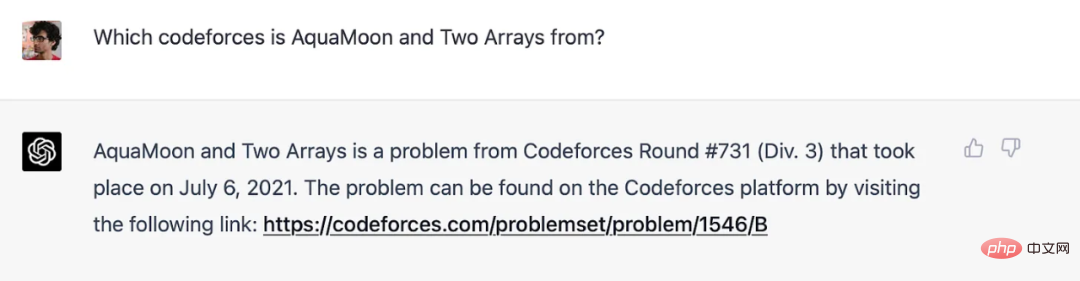

The authors say they can in fact show clearly that GPT-4 has memorized problems from its training set: when the title of a Codeforces problem is included in the prompt, GPT-4's answer contains a link to the exact contest in which the problem appeared (and the round number is nearly correct: it is off by one). Note that GPT-4 was not connected to the Internet at the time, so memorization is the only explanation.
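One way to score this kind of probe automatically is to extract any Codeforces links from the model's reply and check whether they point at the contest the problem actually came from. The Python sketch below assumes the reply is plain text and that links use Codeforces' `/contest/<id>/...` or `/problemset/problem/<id>/...` URL forms; the function names and the contest ID in the example are illustrative, not taken from the authors' experiment.

```python
import re

# Contest IDs appear in Codeforces URLs such as
#   https://codeforces.com/contest/1560/problem/A
#   https://codeforces.com/problemset/problem/1560/A
CF_CONTEST_ID = re.compile(r"codeforces\.com/(?:contest|problemset/problem)/(\d+)")


def cited_contest_ids(model_output: str) -> set[int]:
    """Collect every Codeforces contest ID linked anywhere in the model's reply."""
    return {int(cid) for cid in CF_CONTEST_ID.findall(model_output)}


def links_to_source(model_output: str, true_contest_id: int) -> bool:
    """True if the reply links to the contest the problem actually appeared in."""
    return true_contest_id in cited_contest_ids(model_output)


reply = "This looks like problem A from https://codeforces.com/contest/1560/problem/A"
print(links_to_source(reply, 1560))  # True
```

Codeforces contest IDs and round numbers are separate counters, so a check like this says nothing about whether any round number the model quotes is correct.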

GPT-4 memorized Codeforces problems from before its training cutoff.

The Codeforces results in the paper are not affected by this, because OpenAI used more recent problems (and, sure enough, GPT-4 performed poorly on them). For benchmarks other than programming, the authors know of no clean way to separate problems by time period, so they consider it unlikely that OpenAI managed to avoid contamination. By the same token, however, they cannot run experiments to test how performance varies by date.

Still, they can look for suggestive signs. Another sign of memorization is that GPT is highly sensitive to the wording of questions. Melanie Mitchell gave the example of an MBA exam question: she changed some of its details in a way that would not fool a person, but it did fool ChatGPT (running GPT-3.5). More detailed experiments along these lines would be valuable.

Because of OpenAI's lack of transparency, the authors cannot answer the contamination question with certainty. What is certain is that OpenAI's method for detecting contamination is superficial and sloppy:

> We measure cross-contamination between our evaluation dataset and the pre-training data using substring matching. Both evaluation and training data are processed by removing all spaces and symbols, keeping only characters (including numbers). For each evaluation example, we randomly select three 50-character substrings (or use the entire example if it has fewer than 50 characters). A match is identified if any of the three sampled evaluation substrings is a substring of the processed training example. This yields a list of contaminated examples. We discard these and rerun to obtain uncontaminated scores.
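The procedure quoted above is simple enough to sketch directly. The Python below is a minimal reconstruction from that prose description only, not OpenAI's code. Details the description leaves open are assumptions here: letter case is preserved, `str.isalnum()` decides what counts as a "character", the three start positions are drawn independently, and the `seed` parameter and all function names are hypothetical.

```python
import random


def normalize(text: str) -> str:
    """Remove spaces and symbols, keeping only letters and digits.

    Assumption: case is preserved and str.isalnum() defines "characters".
    """
    return "".join(ch for ch in text if ch.isalnum())


def sample_probes(s: str, rng: random.Random, k: int = 3, length: int = 50) -> list[str]:
    """Pick k random substrings of the given length, or the whole string if it is shorter."""
    if not s:
        return []  # nothing left after normalization; never flag as a match
    if len(s) < length:
        return [s]
    starts = [rng.randrange(len(s) - length + 1) for _ in range(k)]
    return [s[i:i + length] for i in starts]


def split_by_contamination(eval_set, training_docs, seed=0):
    """Partition eval examples into (clean, contaminated) via exact substring matching."""
    rng = random.Random(seed)
    processed_docs = [normalize(doc) for doc in training_docs]
    clean, contaminated = [], []
    for example in eval_set:
        probes = sample_probes(normalize(example), rng)
        hit = any(p in doc for p in probes for doc in processed_docs)
        if hit:
            contaminated.append(example)
        else:
            clean.append(example)
    return clean, contaminated
```

Because each sampled probe must appear verbatim after spaces and symbols are stripped, any edit that changes characters inside every sampled window, such as renamed people or altered numbers, lets a copied example slip through as "clean".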

This is a fragile approach. If a test problem appears in the training set but the name and number are changed, it will go undetected. Less brittle methods are readily available, such as embedding distance.
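To make the fragility concrete, the Python sketch below takes a toy word problem, renames the people and changes the numbers, and checks the copy two ways. The exact check is deliberately stricter than OpenAI's (it tries every 50-character window rather than three random ones), yet it still misses the copy. Real embedding distance requires a trained model, so as a dependency-free stand-in the sketch uses word-set Jaccard similarity; that substitution, the toy texts, and the 0.5 threshold are all assumptions made for illustration.

```python
import re


def normalize(text: str) -> str:
    """Keep only letters and digits, as in the substring-matching description."""
    return "".join(ch for ch in text if ch.isalnum())


def shares_50_char_window(test_item: str, train_doc: str, length: int = 50) -> bool:
    """Exact check: does ANY 50-character window of the test item occur in the training doc?"""
    a, b = normalize(test_item), normalize(train_doc)
    if len(a) < length:
        return bool(a) and a in b
    return any(a[i:i + length] in b for i in range(len(a) - length + 1))


def word_jaccard(x: str, y: str) -> float:
    """Crude stand-in for embedding similarity: overlap of lowercase word sets."""
    wx = set(re.findall(r"[a-z0-9]+", x.lower()))
    wy = set(re.findall(r"[a-z0-9]+", y.lower()))
    return len(wx & wy) / len(wx | wy) if wx | wy else 0.0


train_doc = "Alice has 5 apples and gives Bob 2 apples. How many apples does Alice have left?"
renamed = "Carol has 7 apples and gives Dave 3 apples. How many apples does Carol have left?"

print(shares_50_char_window(renamed, train_doc))  # False: every window contains "Dave"
print(round(word_jaccard(renamed, train_doc), 3))  # 0.529 (9 shared words out of 17)

THRESHOLD = 0.5  # arbitrary cutoff; picking it is a judgment call
print(word_jaccard(renamed, train_doc) >= THRESHOLD)  # True
```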

If OpenAI were to use distance-based methods, how similar is too similar? There is no objective answer to this question. Therefore, even something as seemingly simple as performance on a multiple-choice standardized test is fraught with subjective decisions.

But we can bring some clarity by asking what OpenAI is trying to measure with these exams. If the goal is to predict how a language model will perform on real-world tasks, there is a problem. In a sense, any two bar exam or medical exam questions are more similar to each other than two similar tasks faced by professionals in the real world, because exam questions are drawn from such a restricted space. Therefore, including any exam questions in the training corpus risks inflating estimates of the model's real-world usefulness.

Framing the issue in terms of real-world usefulness highlights another, deeper problem (Question 2).

Question 2: Professional exams are not a valid way to compare human and bot abilities

Memorization is a spectrum. Even if a language model has not seen an exact question in its training set, the sheer size of the training corpus means it will inevitably have seen very similar examples. This means it can get away with much shallower reasoning. Therefore, the benchmark results give us no evidence that language models are acquiring the kind of deep reasoning skills that human test takers need and then apply in the real world.

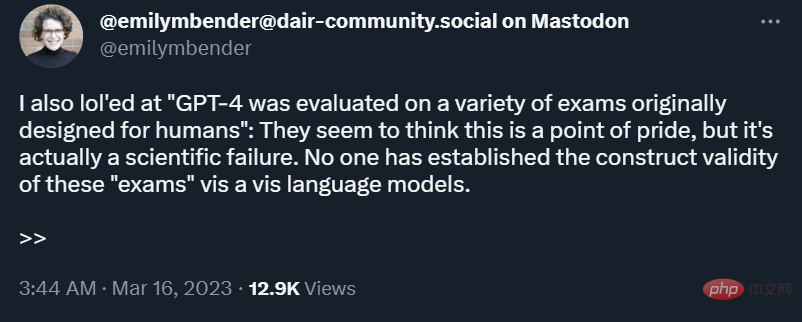

For some real-world tasks shallow reasoning may be sufficient, but not always. The world is constantly changing, so if a bot were asked to analyze the legal ramifications of a new technology or a new court ruling, it would have little to draw on. In short, as Emily Bender points out, tests designed for humans lack construct validity when applied to bots.

Beyond that, professional exams, especially the bar exam, overemphasize subject-matter knowledge and underemphasize real-world skills, which are much harder to measure in a standardized, computer-administered way. In other words, these exams not only emphasize the wrong things, they overemphasize precisely the things language models are good at.

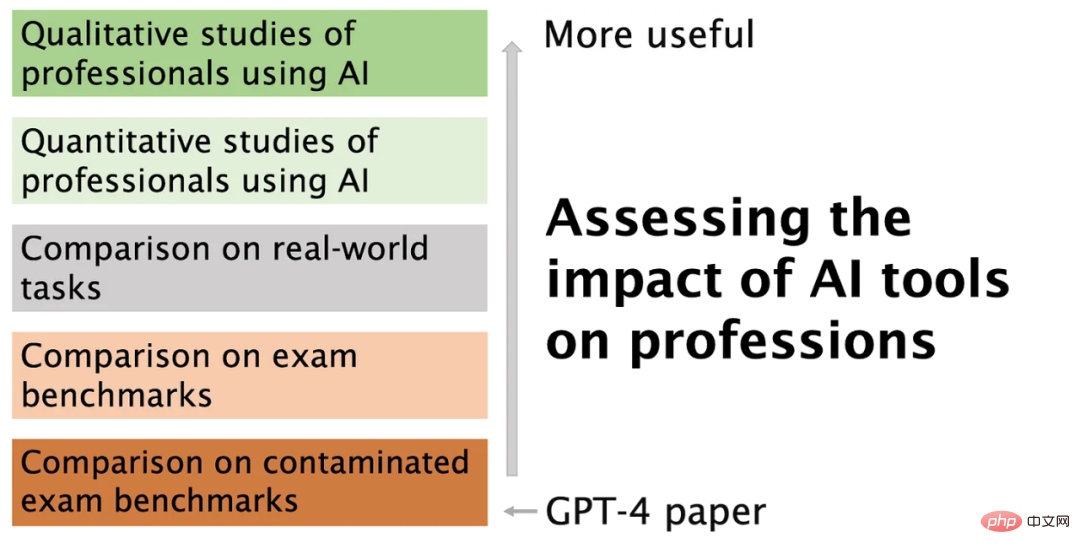

In AI, benchmarks are overused as a way to compare models, and they have been criticized for compressing multidimensional evaluations into a single number. When they are used to compare humans and bots, the result is misinformation. Unfortunately, OpenAI chose to rely heavily on these kinds of tests in its evaluation of GPT-4 and did not adequately attempt to address the contamination problem.

People have access to the internet at work, but not during standardized tests. So if language models could perform as well as professionals who have internet access, that would be a somewhat better test of their real-world performance.

But this is still the wrong question. Rather than relying on stand-alone benchmarks, perhaps we should measure how well language models can perform the full range of real-world tasks that professionals must carry out. For example, in academia we often encounter papers from unfamiliar fields that are full of jargon; it would be useful if ChatGPT could accurately summarize such papers in more accessible language. Some have even tested these tools for peer review. But even in this scenario, it is hard to be sure that the papers used for testing are not in the training set.

The idea that ChatGPT can replace professionals is still far-fetched. Of the 270 occupations listed in the 1950 census, only one has since been eliminated by automation: elevator operator. For now, what we need to evaluate is how professionals use AI tools to help them do their jobs. Two early studies are promising: one on GitHub Copilot for programming, and the other on ChatGPT's writing assistance.

At this stage we need qualitative research more than quantitative research, because the tools are so new that we don't even know the right quantitative questions to ask. For example, Microsoft's Scott Guthrie reports a striking figure: 40% of the code checked in by GitHub Copilot users is AI-generated and unmodified. But any programmer will tell you that a large share of code consists of boilerplate and other mundane logic that can often be copied and pasted, especially in enterprise applications. If this is the part Copilot automates, the productivity gains would be minimal.
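The gap between "share of lines" and "share of effort" can be made concrete with Amdahl's law, which is brought in here purely as an illustration; the article itself does not use it. If a fraction p of a programmer's time goes to the automated work and that work becomes s times faster, the overall speedup is 1 / ((1 - p) + p / s). The fractions below are hypothetical, chosen only to show how 40% of lines could translate into a small gain.

```python
import math


def overall_speedup(p: float, s: float) -> float:
    """Amdahl's law: speedup of the whole job when a fraction p of its time is sped up by s."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a fraction of total time in [0, 1]")
    if s <= 0:
        raise ValueError("s must be positive")
    denominator = (1.0 - p) + p / s
    # Only p == 1 with infinite s leaves no remaining time at all.
    return math.inf if denominator == 0 else 1.0 / denominator


# Hypothetical: boilerplate is 40% of lines but only 5% of working time,
# and the tool makes it instantaneous (s = infinity).
print(round(overall_speedup(0.05, math.inf), 3))  # 1.053, about a 5% gain

# Contrast: if that code really took 40% of the time, the gain would be far larger.
print(round(overall_speedup(0.40, math.inf), 3))  # 1.667
```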

To be clear, we are not saying Copilot is useless, just that existing metrics will be meaningless without a qualitative understanding of how professionals use AI. Furthermore, the primary benefit of AI-assisted coding may not even be increased productivity.

The diagram below summarizes the article and explains why and how we want to move away from the kind of metrics OpenAI reports.

GPT-4 is genuinely exciting, and it could ease professionals' pain points in many ways, for example by automating simple, low-risk but laborious tasks for us. For now, it is probably better to focus on realizing these benefits and on mitigating the many risks of language models.
