A Chinese doctoral student and Google scientists recently proposed Vid2Seq, a pre-trained visual language model that can localize and describe multiple events in a video. The paper has been accepted to CVPR 2023.

Researchers from Google recently proposed Vid2Seq, a pre-trained visual language model for describing multi-event videos, which has been accepted to CVPR 2023.

Understanding video content is challenging because videos often contain multiple events occurring at different time scales.

For example, a video of a musher tying a dog to a sled, after which the dog starts to run, involves a long event (dog sledding) and a short event (the dog being tied to the sled).

One way to advance video understanding research is the dense video annotation task (also known as dense video captioning), which consists of temporally localizing and describing all events in a minutes-long video.

Paper address: https://arxiv.org/abs/2302.14115

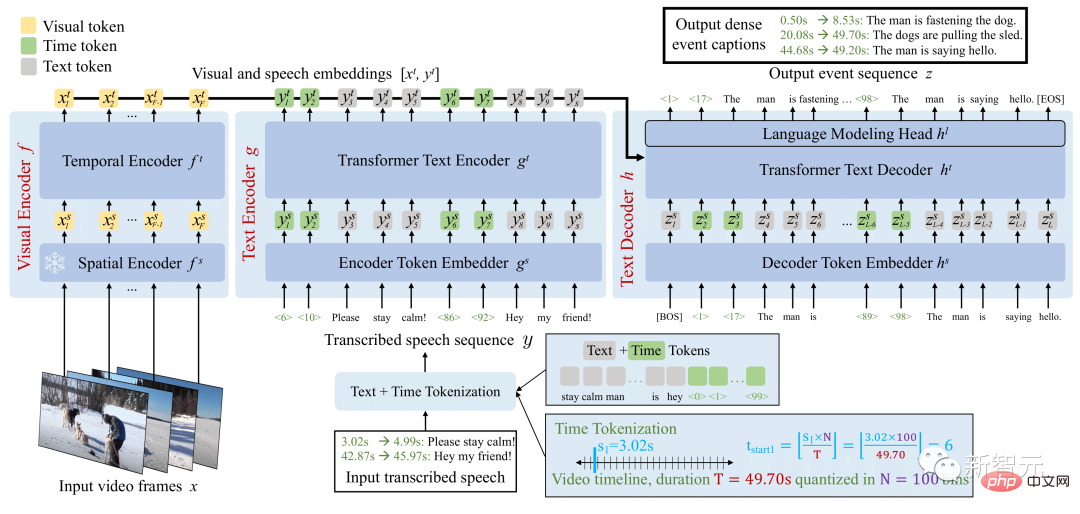

The Vid2Seq architecture augments a language model with special time tokens, allowing it to seamlessly predict event boundaries and textual descriptions in the same output sequence.

To pre-train this unified model, the researchers leveraged unlabeled narrated videos.

Vid2Seq Model Overview

The resulting Vid2Seq model was pre-trained on millions of narrated videos and improves the state of the art on various dense video annotation benchmarks, including YouCook2, ViTT and ActivityNet Captions.

Vid2Seq also generalizes well to the few-shot dense video annotation setting, the video paragraph annotation task, and the standard video annotation task.

Multi-modal Transformer architectures have achieved state-of-the-art results on various video tasks, such as action recognition. However, adapting such an architecture to the complex task of jointly localizing and annotating events in minutes-long videos is not straightforward.

To achieve this goal, the researchers augment the visual language model with special time tokens (analogous to text tokens) that represent discretized timestamps in the video, similar to what Pix2Seq does in the spatial domain.

Given visual input, the resulting Vid2Seq model can both take as input and generate sequences of text and time tokens.

First, this enables the Vid2Seq model to understand the temporal information of the transcribed speech input, which is cast as a single sequence of tokens. Second, it enables Vid2Seq to jointly predict dense event annotations and localize them temporally in the video while generating a single sequence of tokens.
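As a rough illustration of the time-token idea described above, the following Python sketch quantizes timestamps into a fixed number of bins and serializes events into one sequence. The bin count of 100, the `<time_i>` token spelling, and the function names are assumptions made for this example, not details taken from the article.

```python
from typing import List, Tuple

NUM_TIME_BINS = 100  # assumed number of discrete time tokens (hypothetical value)


def time_to_token(t: float, duration: float, num_bins: int = NUM_TIME_BINS) -> str:
    """Map an absolute time in seconds to a relative, discretized time token."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    # Clamp to the video length, then quantize the relative position into bins.
    ratio = min(max(t / duration, 0.0), 1.0)
    index = min(int(ratio * num_bins), num_bins - 1)
    return f"<time_{index}>"


def serialize_events(events: List[Tuple[float, float, str]], duration: float) -> str:
    """Turn (start, end, caption) events into one sequence of time and text tokens."""
    parts = []
    # Order events by start time so the sequence follows the video timeline.
    for start, end, caption in sorted(events, key=lambda e: e[0]):
        parts.append(time_to_token(start, duration))
        parts.append(time_to_token(end, duration))
        parts.append(caption.strip())
    return " ".join(parts)


if __name__ == "__main__":
    events = [
        (15.0, 60.0, "the dog runs"),
        (0.0, 15.0, "a man ties a dog to a sled"),
    ]
    print(serialize_events(events, duration=60.0))
```

Because event boundaries and captions share one vocabulary in this sketch, a single text decoder could in principle emit both, which is the property the article attributes to Vid2Seq.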

The Vid2Seq architecture includes a visual encoder and a text encoder, which encode the video frames and the transcribed speech input respectively. The resulting encodings are then forwarded to a text decoder, which autoregressively predicts the output sequence of dense event annotations together with their temporal localization in the video. The architecture is initialized with a strong visual backbone and a strong language model.

Manually collecting annotations for dense video annotation is particularly costly due to the dense nature of the task.

Therefore, the researchers pre-trained the Vid2Seq model on unlabeled narrated videos, which are easily available at scale. Specifically, they used the YT-Temporal-1B dataset, which includes 18 million narrated videos covering a wide range of domains.

The researchers used transcribed speech sentences and their corresponding timestamps as supervision, with these sentences cast as a single token sequence.

Vid2Seq is then pre-trained with two objectives: a generative objective that teaches the decoder to predict the transcribed speech sequence given only the visual input, and a denoising objective that encourages multimodal learning by requiring the model to predict masked tokens given a noisy transcribed speech sequence and the visual input. In particular, noise is added to the speech sequence by randomly masking spans of tokens.
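The span-masking step can be sketched as follows. This is a minimal T5-style illustration under assumptions: the start probability, the fixed span length, the `<mask_i>` sentinel spelling, and both function names are hypothetical choices for the example, and the article does not specify the exact corruption procedure.

```python
import random
from typing import List, Tuple


def mask_spans(tokens: List[str], start_prob: float = 0.05,
               span_len: int = 5, seed: int = 0) -> Tuple[List[str], List[str]]:
    """Replace random fixed-length spans with sentinels.

    Returns (corrupted_input, target), where the target lists each sentinel
    followed by the tokens it hides.
    """
    rng = random.Random(seed)
    corrupted: List[str] = []
    target: List[str] = []
    i, sentinel_id = 0, 0
    while i < len(tokens):
        # Each unmasked position starts a new span with probability start_prob.
        if rng.random() < start_prob:
            span = tokens[i:i + span_len]
            sentinel = f"<mask_{sentinel_id}>"
            corrupted.append(sentinel)
            target.append(sentinel)
            target.extend(span)
            sentinel_id += 1
            i += len(span)
        else:
            corrupted.append(tokens[i])
            i += 1
    return corrupted, target


def restore(corrupted: List[str], target: List[str]) -> List[str]:
    """Invert mask_spans: substitute each sentinel with the span from the target."""
    spans, current = {}, None
    for tok in target:
        if tok.startswith("<mask_"):
            current = tok
            spans[current] = []
        else:
            spans[current].append(tok)
    restored: List[str] = []
    for tok in corrupted:
        restored.extend(spans[tok] if tok in spans else [tok])
    return restored


if __name__ == "__main__":
    words = "<time_0> <time_25> first you chop the onions into small pieces".split()
    noisy, tgt = mask_spans(words, start_prob=0.3, span_len=2, seed=1)
    print(noisy)
    print(tgt)
```

In the setting the article describes, the model would see the corrupted speech sequence together with the video frames and be trained to produce the target, so the visual input can help fill in the hidden spans.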

The resulting pre-trained Vid2Seq model can be fine-tuned on downstream tasks with a simple maximum likelihood objective that uses teacher forcing (i.e., predicting the next token given the previous ground-truth tokens).
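To make the teacher-forcing objective concrete, here is a small pure-Python sketch of the average negative log-likelihood. The `next_token_probs` callable stands in for a decoder and is a hypothetical interface invented for this example; a real model would also condition on the visual and speech encodings.

```python
import math
from typing import Callable, Dict, List


def teacher_forced_nll(next_token_probs: Callable[[List[str]], Dict[str, float]],
                       target: List[str], bos: str = "<bos>") -> float:
    """Average negative log-likelihood of `target` under teacher forcing."""
    if not target:
        raise ValueError("target must contain at least one token")
    total = 0.0
    prefix = [bos]
    for gold in target:
        probs = next_token_probs(prefix)
        # A small floor avoids log(0) when the model assigns no mass to the gold token.
        total += -math.log(max(probs.get(gold, 0.0), 1e-12))
        # Teacher forcing: extend the prefix with the ground-truth token,
        # not with whatever the model would have predicted.
        prefix.append(gold)
    return total / len(target)


def uniform_model(prefix: List[str]) -> Dict[str, float]:
    """Toy stand-in for a decoder: uniform over a four-token vocabulary."""
    vocab = ["<time_0>", "<time_25>", "dog", "runs"]
    return {tok: 1.0 / len(vocab) for tok in vocab}


if __name__ == "__main__":
    loss = teacher_forced_nll(uniform_model, ["<time_0>", "<time_25>", "dog", "runs"])
    print(loss)
```

Minimizing this quantity over the training set is equivalent to maximizing the likelihood of the ground-truth sequences.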

After fine-tuning, Vid2Seq improves the state of the art on three standard downstream dense video annotation benchmarks (ActivityNet Captions, YouCook2, and ViTT) and two video clip annotation benchmarks (MSR-VTT and MSVD).

The paper also includes additional ablation studies, qualitative results, and results in the few-shot setting and on the video paragraph annotation task.

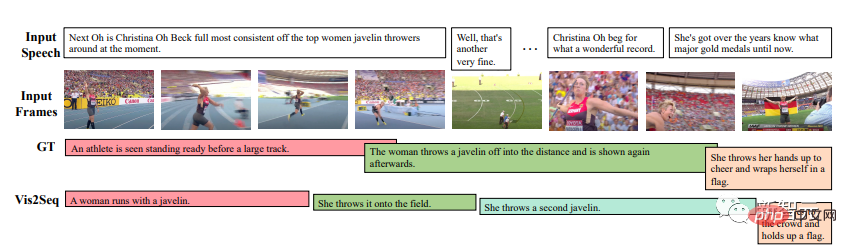

The results show that Vid2Seq can predict meaningful event boundaries and annotations, and that the predicted annotations and boundaries differ significantly from the transcribed speech input (which also shows the importance of the visual tokens in the input).

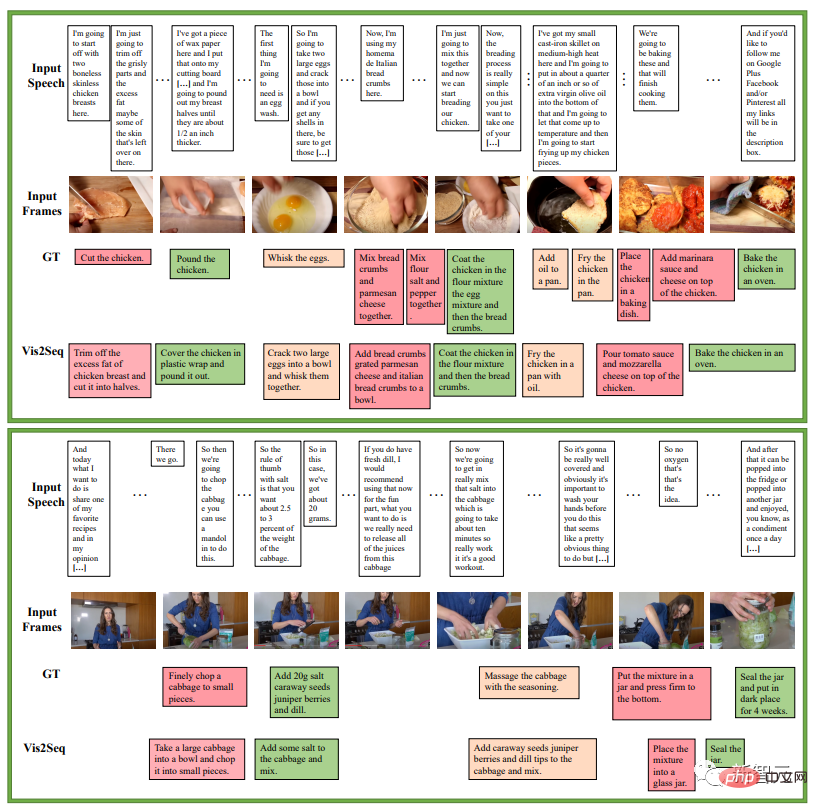

The next example shows a series of instructions from a cooking recipe, illustrating Vid2Seq's dense event annotation predictions on the YouCook2 validation set:

What follows is an example of Vid2Seq’s dense event annotation predictions on the ActivityNet Captions validation set. In all of these videos, there is no transcribed speech.

However, there are still failure cases. For example, for the frame marked in red below, Vid2Seq describes a person taking off his hat in front of the camera.

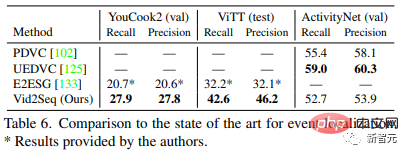

Table 5 compares Vid2Seq with state-of-the-art dense video annotation methods: Vid2Seq sets a new state of the art on all three datasets, YouCook2, ViTT and ActivityNet Captions.

Vid2Seq's SODA scores on YouCook2 and ActivityNet Captions are 3.5 and 0.3 points higher than those of PDVC and UEDVC, respectively. Vid2Seq also outperforms E2ESG, which uses in-domain text-only pre-training on WikiHow. These results show that the pre-trained Vid2Seq model is highly capable of annotating dense events.

Table 6 evaluates the event localization performance of dense video annotation models. On YouCook2 and ViTT, Vid2Seq performs best even though it handles dense video annotation as a single sequence generation task.

However, Vid2Seq underperforms PDVC and UEDVC on ActivityNet Captions. Vid2Seq integrates less prior knowledge about temporal localization than these two methods, which include task-specific components such as event counters or train a separate model for the localization subtask.

The visual temporal transformer encoder, the text encoder and the text decoder each have 12 layers, 12 heads, an embedding dimension of 768, and an MLP hidden dimension of 2048.
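The hyperparameters above can be collected in a small configuration object. This is only an illustrative sketch: the `TransformerConfig` class and its field names are hypothetical, and the per-head dimension is derived by the usual convention of splitting the embedding evenly across heads, which the article does not state explicitly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransformerConfig:
    """Hypothetical container for the sizes quoted in the article."""
    num_layers: int = 12
    num_heads: int = 12
    embed_dim: int = 768
    mlp_dim: int = 2048

    @property
    def head_dim(self) -> int:
        # Assumes the embedding is split evenly across attention heads.
        if self.embed_dim % self.num_heads != 0:
            raise ValueError("embed_dim must be divisible by num_heads")
        return self.embed_dim // self.num_heads


# The article gives the same sizes for all three components.
COMPONENTS = {
    "visual_temporal_encoder": TransformerConfig(),
    "text_encoder": TransformerConfig(),
    "text_decoder": TransformerConfig(),
}

if __name__ == "__main__":
    for name, cfg in COMPONENTS.items():
        print(name, cfg, "head_dim =", cfg.head_dim)
```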

The text encoder and decoder sequences are truncated or padded to L=S=1000 tokens during pre-training, and to S=1000 and L=256 tokens during fine-tuning. During inference, beam search decoding is used, keeping track of the top 4 sequences and applying a length normalization of 0.6.
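The two mechanisms in this paragraph, fixed-length sequences and length-normalized beam scoring, can be sketched as below. The article does not say which normalization formula is used, so dividing the log-probability by the length raised to the power 0.6 is an assumption, as are the function names and the padding id of 0.

```python
from typing import List, Sequence, Tuple


def pad_or_truncate(token_ids: Sequence[int], length: int, pad_id: int = 0) -> List[int]:
    """Cut the sequence to `length` tokens, or right-pad it with pad_id."""
    kept = list(token_ids[:length])
    return kept + [pad_id] * (length - len(kept))


def length_normalized_score(log_prob: float, num_tokens: int, alpha: float = 0.6) -> float:
    """Assumed normalization: divide the cumulative log-probability by length**alpha."""
    if num_tokens <= 0:
        raise ValueError("num_tokens must be positive")
    return log_prob / (num_tokens ** alpha)


def keep_top_beams(candidates: List[Tuple[List[str], float]],
                   beam_size: int = 4, alpha: float = 0.6) -> List[Tuple[List[str], float]]:
    """Keep the beam_size candidates with the best length-normalized score.

    Each candidate is (tokens, cumulative log-probability).
    """
    ranked = sorted(
        candidates,
        key=lambda c: length_normalized_score(c[1], len(c[0]), alpha),
        reverse=True,
    )
    return ranked[:beam_size]


if __name__ == "__main__":
    print(pad_or_truncate([7, 8, 9], length=5))
    beams = [(["a"], -1.0), (["a", "b", "c", "d"], -2.0)]
    print(keep_top_beams(beams, beam_size=1))
```

Without normalization, the shorter candidate in the usage example would win on raw log-probability; with the exponent of 0.6 the longer one ranks first, which is the usual motivation for applying a length penalty in beam search.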

The authors use the Adam optimizer with β = (0.9, 0.999) and no weight decay.

During pre-training, a learning rate of 1e-4 is used; it is linearly warmed up (starting from 0) over the first 1000 iterations and kept constant for the remaining iterations.

During fine-tuning, a learning rate of 3e-4 is used; it is linearly warmed up (starting from 0) over the first 10% of iterations and then follows a cosine decay (down to 0) over the remaining 90%. A batch size of 32 videos is used, split across 16 TPU v4 chips.
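The two learning-rate schedules described above can be written as plain functions of the step index. This is a sketch based only on the numbers quoted in the article; the function names and the half-cosine form of the decay are assumptions.

```python
import math


def pretrain_lr(step: int, base_lr: float = 1e-4, warmup_steps: int = 1000) -> float:
    """Linear warmup from 0 over warmup_steps, then constant."""
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    return base_lr


def finetune_lr(step: int, total_steps: int, base_lr: float = 3e-4,
                warmup_frac: float = 0.1) -> float:
    """Linear warmup over the first warmup_frac of training, then cosine decay to 0."""
    warmup_steps = int(total_steps * warmup_frac)
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    # Fraction of the decay phase completed, clipped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for s in (0, 500, 1000, 50000):
        print("pretrain", s, pretrain_lr(s))
    for s in (0, 50, 100, 550, 1000):
        print("finetune", s, finetune_lr(s, total_steps=1000))
```

In the fine-tuning schedule the rate peaks at 3e-4 exactly when warmup ends and reaches 0 at the final step.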

The authors fine-tune for 40 epochs on YouCook2, 20 epochs on ActivityNet Captions and ViTT, 5 epochs on MSR-VTT, and 10 epochs on MSVD.

Vid2Seq, proposed by Google, is a new visual language model for dense video annotation. It can be pre-trained effectively at scale on unlabeled narrated videos and achieves state-of-the-art results on various downstream dense video annotation benchmarks.

About the author

First author of the paper: Antoine Yang

Antoine Yang is a third-year doctoral student in the WILLOW team of Inria and École Normale Supérieure in Paris. His supervisors are Antoine Miech, Josef Sivic, Ivan Laptev and Cordelia Schmid.

His current research focuses on learning visual language models for video understanding. He interned at Huawei's Noah's Ark Lab in 2019, received an engineering degree from École Polytechnique in Paris and a master's degree in mathematics, vision and learning from Paris-Saclay University in 2020, and interned at Google Research in 2022.
