To learn about the design, constraints, and evolution behind contemporary large-scale language models, you can follow this article's reading list.

Large language models have captured the public's attention, and in just five years, models such as Transformers have almost completely changed the field of natural language processing. They are also starting to revolutionize fields such as computer vision and computational biology.

Given that Transformers have such a big impact on everyone’s research process, this article will introduce you to a short reading list for machine learning researchers and practitioners to get started.

The list below proceeds mostly in chronological order and consists mainly of academic research papers. Of course, there are many other helpful resources.

If you are new to Transformers and large language models, these papers are the best place to start.

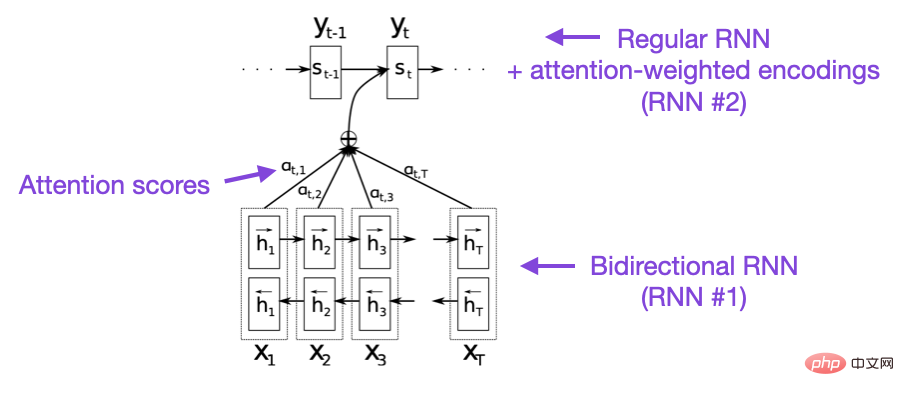

Paper 1: "Neural Machine Translation by Jointly Learning to Align and Translate"

Paper address: https://arxiv.org/pdf/1409.0473.pdf

This paper introduces an attention mechanism for recurrent neural networks (RNNs) to improve their long-range sequence modeling capabilities. This enables RNNs to translate longer sentences more accurately, which was the motivation behind the later development of the original Transformer architecture.
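To make the idea concrete, here is a minimal sketch of additive attention for a single decoder step, written in plain Python. The function names and the toy weight matrices are assumptions chosen for illustration; in the actual model the weights are learned and the states come from an RNN encoder and decoder.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def additive_attention(decoder_state, encoder_states, w_dec, w_enc, v):
    """Return (weights, context) for one decoder step.

    score_j = v . tanh(w_dec @ decoder_state + w_enc @ encoder_states[j])
    """
    projected_dec = matvec(w_dec, decoder_state)
    scores = []
    for h in encoder_states:
        projected_enc = matvec(w_enc, h)
        hidden = [math.tanh(a + b) for a, b in zip(projected_dec, projected_enc)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    weights = softmax(scores)
    dim = len(encoder_states[0])
    # The context vector is the attention-weighted sum of encoder states.
    context = [sum(w * h[i] for w, h in zip(weights, encoder_states))
               for i in range(dim)]
    return weights, context

# Toy, hand-picked values (hypothetical; real weights are learned).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [0.5, -0.5]
w_dec = [[1.0, 0.0], [0.0, 1.0]]
w_enc = [[1.0, 0.0], [0.0, 1.0]]
v = [1.0, 1.0]

weights, context = additive_attention(decoder_state, encoder_states, w_dec, w_enc, v)
print(weights, context)
```

Because the decoder recomputes these weights at every output step, it can look back at any source position instead of relying on a single fixed-length summary of the sentence.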

Image source: https://arxiv.org/abs/1409.0473

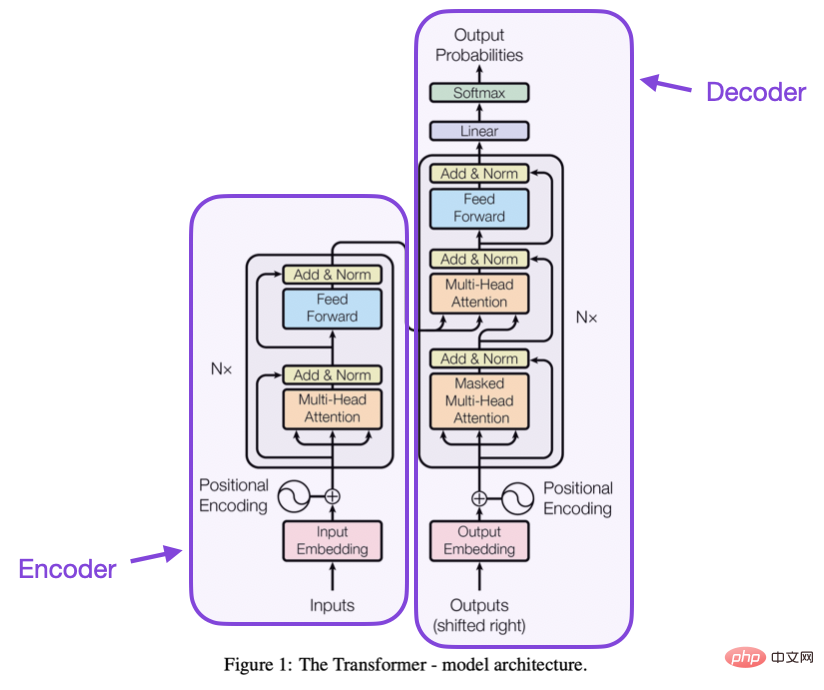

Paper 2: "Attention Is All You Need"

Paper address: https://arxiv.org/abs/1706.03762

This paper introduces the original Transformer architecture, which consists of an encoder and a decoder; these two parts reappear as separate modules later in this list. The paper also introduces concepts such as scaled dot-product attention, multi-head attention blocks, and positional input encoding, which remain the foundation of modern Transformers.
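As a rough illustration, scaled dot-product attention computes softmax(QK^T / sqrt(d_k)) V. The sketch below implements that formula in plain Python for a single head, without masking or learned projections; the function names and the toy inputs are assumptions for illustration only.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of row vectors."""
    d_k = len(keys[0])
    value_dim = len(values[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Each output row is the attention-weighted average of the values.
        outputs.append([sum(w * v[i] for w, v in zip(weights, values))
                        for i in range(value_dim)])
    return outputs

# Toy example: both keys are identical, so each value gets weight 0.5.
queries = [[1.0, 0.0]]
keys = [[1.0, 1.0], [1.0, 1.0]]
values = [[0.0, 2.0], [4.0, 6.0]]
result = scaled_dot_product_attention(queries, keys, values)
print(result)
```

Multi-head attention runs several such computations in parallel on differently projected inputs and concatenates the results.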

Source: https://arxiv.org/abs/1706.03762

Paper 3: "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding"

Paper address: https://arxiv.org/abs/1810.04805

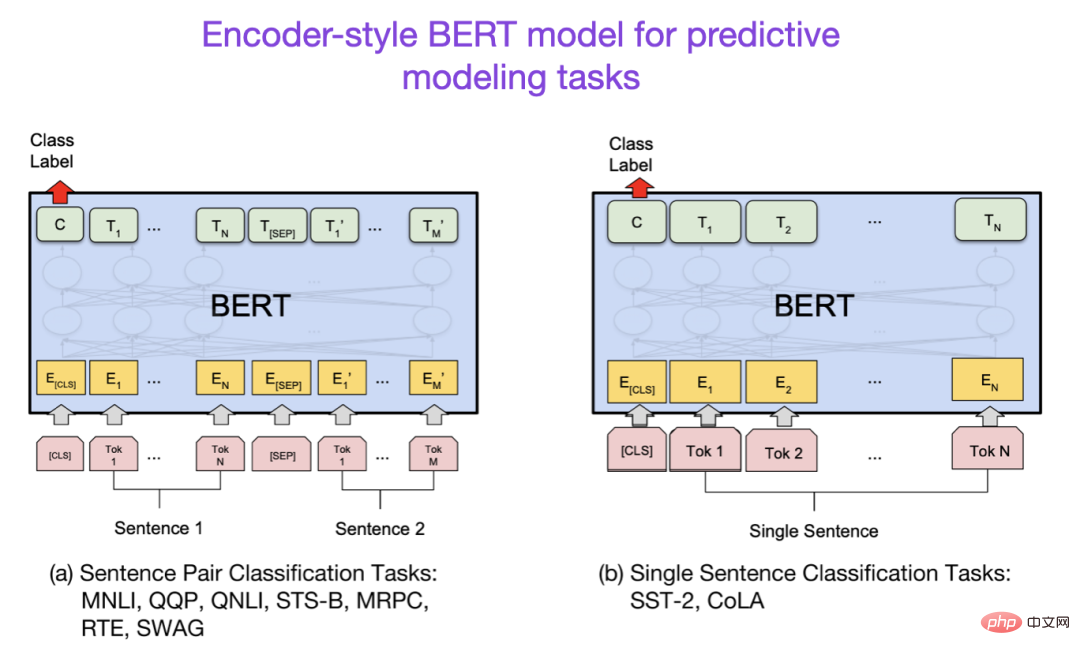

Following the original Transformer architecture, large language model research branched in two directions: encoder-style Transformers for predictive modeling tasks (such as text classification) and decoder-style Transformers for generative modeling tasks (such as translation, summarization, and other forms of text creation).

The BERT paper introduces the original concept of masked language modeling. If you are interested in this research branch, you can follow up with RoBERTa, which simplifies the pre-training objectives by removing the next-sentence prediction task.
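A simplified sketch of how masked language modeling prepares its training data is shown below. It assumes a whitespace-tokenized sentence and always substitutes a `[MASK]` token; the BERT paper selects about 15% of tokens and additionally leaves some selected tokens unchanged or swaps them for random tokens, which this sketch omits. The helper name `mask_tokens` is hypothetical.

```python
import random

MASK_TOKEN = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (inputs, labels) for masked language modeling.

    inputs: the tokens with some positions replaced by MASK_TOKEN.
    labels: the original token at masked positions, None elsewhere
            (only masked positions contribute to the training loss).
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    inputs, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK_TOKEN)
            labels.append(token)
        else:
            inputs.append(token)
            labels.append(None)
    return inputs, labels

sentence = "the model learns to fill in the missing words".split()
inputs, labels = mask_tokens(sentence, mask_prob=0.3, seed=1)
print(inputs)
print(labels)
```

Because the model sees the tokens on both sides of each masked position, it learns bidirectional representations, which is what makes this objective a good fit for classification-style tasks.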

Image source: https://arxiv.org/abs/1810.04805

Paper 4: "Improving Language Understanding by Generative Pre-Training"

Paper address: https://www.semanticscholar.org/paper/Improving-Language-Understanding-by-Generative-Radford-Narasimhan/cd18800a0fe0b668a1cc19f2ec95b5003d0a5035

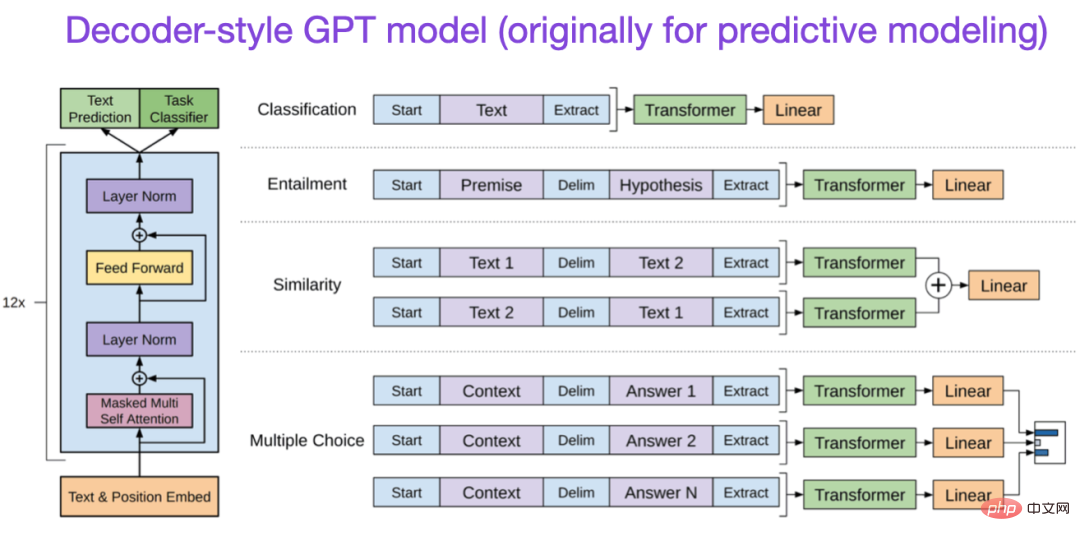

The original GPT paper introduced the popular decoder-style architecture and pre-training via next-word prediction. BERT can be considered a bidirectional Transformer because of its masked language modeling pre-training objective, while GPT is a unidirectional, autoregressive model. Although GPT embeddings can also be used for classification, the GPT approach is at the core of today's most influential LLMs, such as ChatGPT.
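The difference between the two objectives can be sketched in a few lines. The example below shows how next-word prediction turns one token sequence into (context, target) training pairs, and how a causal mask keeps each position from attending to later ones. Both helper names are hypothetical and the tokens are a toy example.

```python
def next_token_pairs(tokens):
    """Turn a token sequence into (context, target) pairs.

    At each position the model sees only the tokens to its left
    and is trained to predict the token that comes next.
    """
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def causal_mask(length):
    """Boolean attention mask: row i may attend to column j only if j <= i."""
    return [[col <= row for col in range(length)] for row in range(length)]

tokens = ["large", "language", "models"]
pairs = next_token_pairs(tokens)
mask = causal_mask(len(tokens))

for context, target in pairs:
    print(context, "->", target)
for row in mask:
    print(row)
```

A bidirectional encoder such as BERT would use a mask that is `True` everywhere, since every position may attend to every other position.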

If you are interested in this research branch, you can follow up on the GPT-2 and GPT-3 papers. In addition, this article will introduce the InstructGPT method separately later.

Paper 5: "BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension"

Paper address: https://arxiv.org/abs/1910.13461

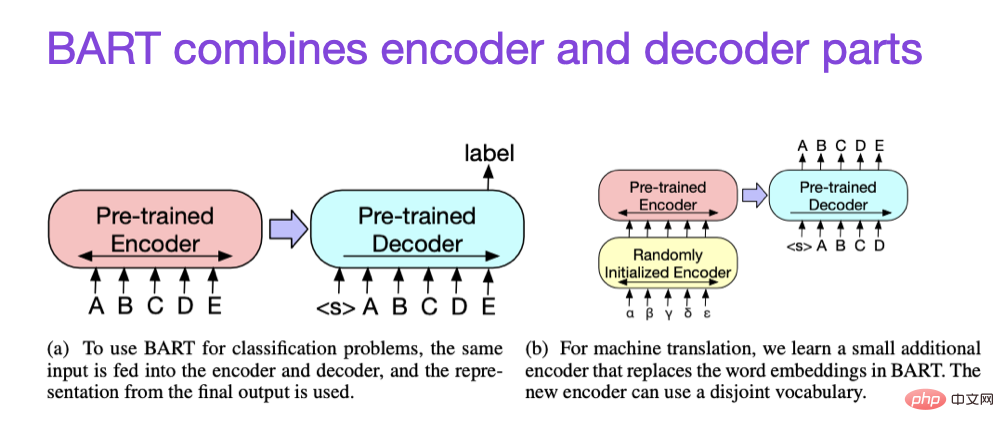

As mentioned above, BERT-type encoder-style LLMs are usually the first choice for predictive modeling tasks, while GPT-type decoder-style LLMs are better at generating text. To get the best of both worlds, the BART paper above combines the encoder and decoder parts.

If you want to know more about techniques to improve Transformer efficiency, you can refer to the following papers

There is also the paper "Training Compute-Optimal Large Language Models"

Paper address: https://arxiv.org/abs/2203.15556

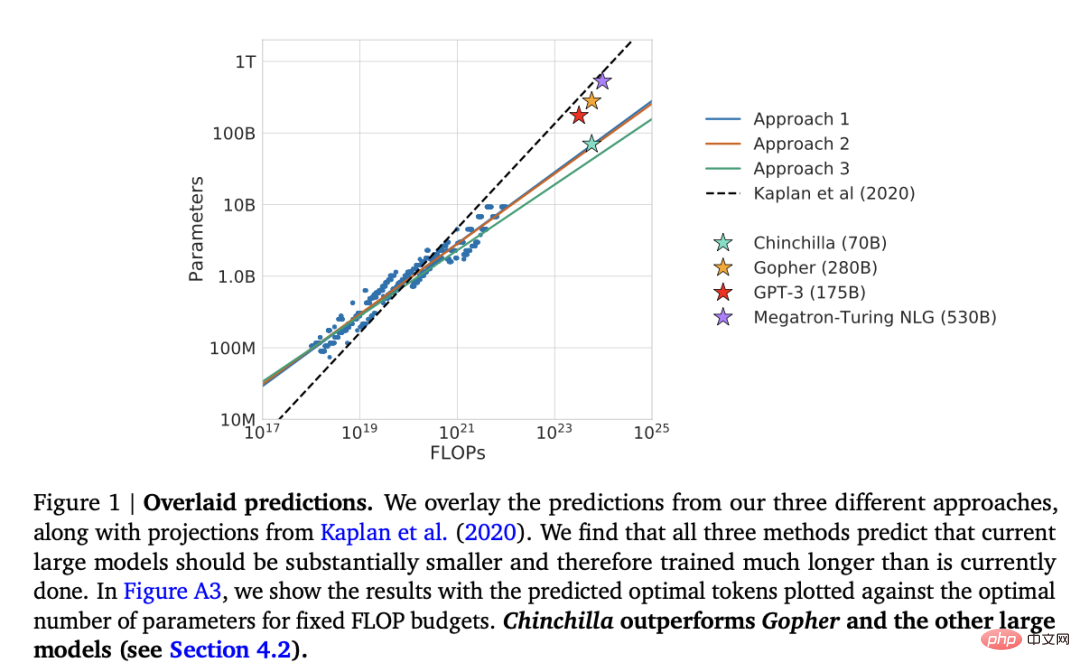

This paper introduces the 70 billion parameter Chinchilla model, which outperforms the popular 175 billion parameter GPT-3 model on generative modeling tasks. However, its main takeaway is that contemporary large language models are significantly undertrained.

This paper defines a linear scaling law for large language model training. For example, although Chinchilla is less than half the size of GPT-3 (70 billion versus 175 billion parameters), it outperforms GPT-3 because it was trained on 1.4 trillion (instead of 300 billion) tokens. In other words, the number of training tokens is as important as the model size.
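The figures quoted above make the undertraining point easy to check with a little arithmetic. The sketch below computes the number of training tokens per parameter for both models; the final extrapolation is a hypothetical illustration that assumes a GPT-3-sized model would be trained at the same ratio as Chinchilla, and it is not a result taken from the paper.

```python
def tokens_per_parameter(num_tokens, num_params):
    """Training tokens seen per model parameter."""
    return num_tokens / num_params

# Figures quoted in the text above.
CHINCHILLA_PARAMS = 70e9
CHINCHILLA_TOKENS = 1.4e12
GPT3_PARAMS = 175e9
GPT3_TOKENS = 300e9

chinchilla_ratio = tokens_per_parameter(CHINCHILLA_TOKENS, CHINCHILLA_PARAMS)
gpt3_ratio = tokens_per_parameter(GPT3_TOKENS, GPT3_PARAMS)

# Hypothetical extrapolation: tokens a GPT-3-sized model would need
# if it were trained at Chinchilla's tokens-per-parameter ratio.
gpt3_tokens_at_chinchilla_ratio = GPT3_PARAMS * chinchilla_ratio

print(f"Chinchilla: {chinchilla_ratio:.1f} tokens per parameter")
print(f"GPT-3: {gpt3_ratio:.1f} tokens per parameter")
print(f"GPT-3 size at Chinchilla's ratio: {gpt3_tokens_at_chinchilla_ratio:.2e} tokens")
```

Chinchilla sees about 20 tokens per parameter, while GPT-3 sees fewer than 2, which is the gap behind the claim that models of that generation were undertrained.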

Alignment - guiding large language models toward desired goals and interests

In recent years, many relatively capable large language models that can generate realistic text have emerged (e.g., GPT-3 and Chinchilla). It seems that a ceiling has been reached on what the commonly used pre-training paradigms can achieve.

To make language models more helpful to humans and to reduce misinformation and harmful language, researchers have designed additional training paradigms for fine-tuning pre-trained base models, including the following papers.

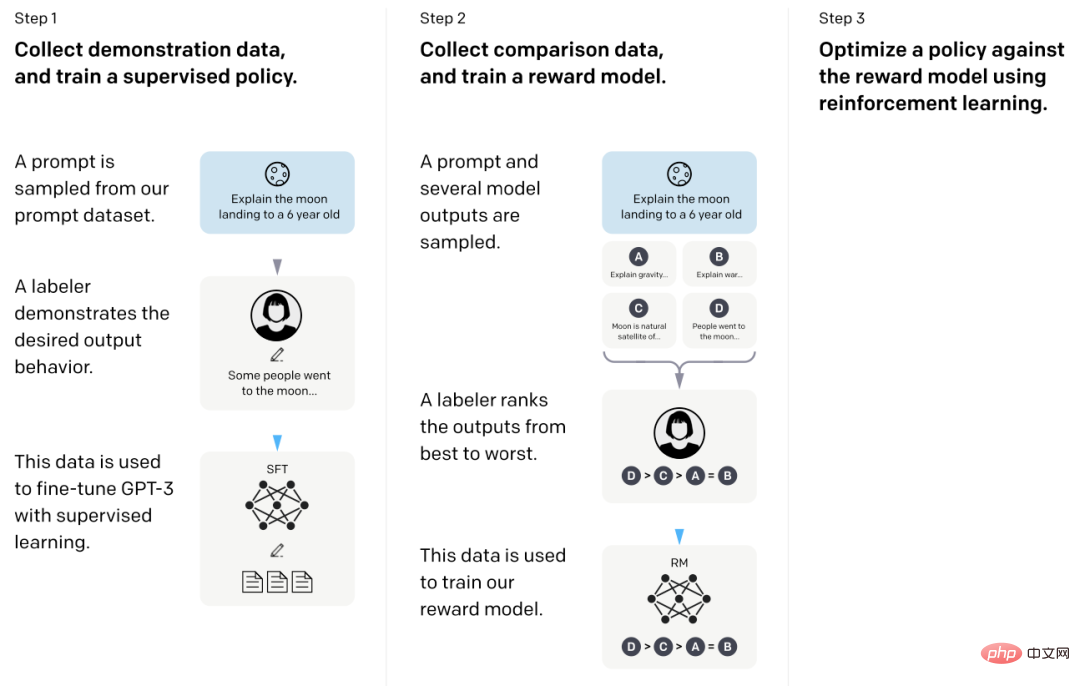

In this so-called InstructGPT paper, the researchers used RLHF (Reinforcement Learning from Human Feedback). They started with a pre-trained GPT-3 base model and fine-tuned it further on human-written prompt-response pairs using supervised learning (step 1). Next, they asked humans to rank the model's outputs in order to train a reward model (step 2). Finally, they used the reward model to update the pre-trained and fine-tuned GPT-3 model with reinforcement learning via proximal policy optimization (step 3).
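Step 2 is the least familiar of the three, so here is a minimal sketch of how a human ranking can be turned into a training signal for the reward model. It uses the pairwise ranking loss -log(sigmoid(r_preferred - r_rejected)) averaged over all pairs in one ranking; the function names and the reward scores are hypothetical, and in practice the rewards would come from a neural network rather than hand-picked numbers.

```python
import math
from itertools import combinations

def pairwise_ranking_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(reward_preferred - reward_rejected)), computed stably."""
    diff = reward_preferred - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

def ranking_loss(rewards_best_first):
    """Average pairwise loss over all pairs from one human ranking.

    rewards_best_first: reward-model scores for the responses to a single
    prompt, ordered from the response humans ranked best to the one ranked
    worst. combinations() keeps that order, so every pair is
    (preferred, rejected).
    """
    pairs = list(combinations(rewards_best_first, 2))
    return sum(pairwise_ranking_loss(p, r) for p, r in pairs) / len(pairs)

# Hypothetical scores: the first reward model agrees with the human ranking,
# the second one has it exactly backwards.
agrees = ranking_loss([3.0, 2.0, 1.0])
disagrees = ranking_loss([1.0, 2.0, 3.0])
print(agrees, disagrees)
```

The loss is small when the reward model scores preferred responses higher, so minimizing it teaches the reward model to reproduce human preferences; that learned reward then drives the reinforcement learning update in step 3.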

By the way, this paper is also known as the paper that describes the ideas behind ChatGPT - according to recent rumors, ChatGPT is an extended version of InstructGPT that is fine-tuned on a larger dataset.

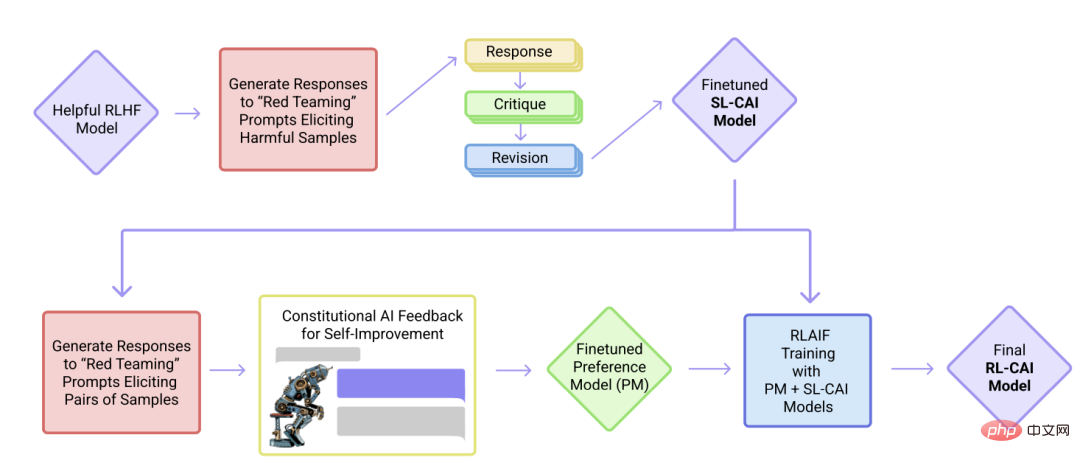

In this paper, the researchers take the idea of alignment a step further and propose a training mechanism for creating a "harmless" AI system. Instead of relying on direct human supervision, they propose a self-training mechanism based on a list of rules provided by humans. Like the InstructGPT paper mentioned above, the proposed method uses reinforcement learning.

This article tries to keep the list above as clean and concise as possible. It is recommended to focus on the first 10 papers to understand the design, limitations, and evolution behind contemporary large language models.

If you want to read in more depth, it is recommended to consult the references in the papers above. Alternatively, here are some additional resources for readers to explore further:

Open Source Alternatives to GPT

ChatGPT alternative

Large-scale language models in computational biology
