Docker storage drivers include: 1. AUFS, a file-level storage driver; 2. Overlay, which is also a union file system; 3. Device mapper, a block-level driver built on a kernel mapping framework; 4. Btrfs, a copy-on-write file system that uses subvolumes and snapshots; 5. ZFS, a file system built on storage pools.

The operating environment of this article: Windows 7 system, Docker version 20.10.11, Dell G3 computer.

What are the Docker storage drivers? The principles of Docker's five storage drivers and their application scenarios

1. Principle description

Docker initially adopted AUFS as its file system. Thanks to AUFS layering, multiple containers can share the same image. However, AUFS was never merged into the mainline Linux kernel and was supported mainly on Ubuntu, so to address compatibility Docker introduced pluggable storage drivers in version 0.7. Docker currently supports five storage drivers: AUFS, Btrfs, Device mapper, OverlayFS, and ZFS. As the official Docker website states, no single driver suits every application scenario. Docker performs well only when the storage driver is chosen to match the workload. How do you choose a suitable storage driver? You first need to understand how each driver works. This article explains the principles of Docker's five storage drivers and compares their application scenarios and I/O performance. Before turning to the principles, let's look at two underlying techniques: copy-on-write and allocate-on-demand.

1. Copy-on-write (CoW)

Every driver relies on one technique: copy-on-write (CoW), meaning data is copied only when it needs to be written. It applies when an existing file is modified. For example, if multiple containers are started from one image and each were given its own full copy of the image's file system, a great deal of disk space would be consumed. With CoW, all containers share the image's file system and read all data from the image. Only when a file is about to be written is it copied from the image into the container's own file system and modified there. Therefore, no matter how many containers share an image, writes always go to the container's own copy, and the source file in the image is never modified. When several containers modify the same file, a copy is created in each container's file system; each container changes its own copy, so they are isolated and do not affect one another. Using CoW can effectively improve disk utilization.
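The copy-on-write behavior described above can be illustrated with a minimal sketch. It is a toy in-memory model, not how any Docker driver is implemented; the `Container` class, its `upper` attribute, and the file path are hypothetical names chosen for illustration.

```python
class Container:
    """Toy container: a shared read-only image plus a private writable layer."""

    def __init__(self, image):
        self.image = image   # shared by all containers, never modified here
        self.upper = {}      # private writable layer, empty at start

    def read(self, path):
        # Prefer the private copy; otherwise fall through to the shared image.
        if path in self.upper:
            return self.upper[path]
        return self.image[path]

    def append(self, path, data):
        # Copy-on-write: an existing image file is copied up on first write only.
        if path not in self.upper:
            self.upper[path] = self.image[path]
        self.upper[path] += data


image = {"/etc/motd": "hello\n"}   # hypothetical image content
a = Container(image)
b = Container(image)
a.append("/etc/motd", "from a\n")

print(repr(a.read("/etc/motd")))   # a sees its own modified copy
print(repr(b.read("/etc/motd")))   # b still reads the shared image file
print(b.upper)                     # b has copied nothing
```

Only container `a` pays for a copy, and only of the one file it changed; the image itself stays untouched.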

2. Allocate-on-demand

Allocate-on-demand applies when a file does not yet exist: space is allocated only when a new file is actually written, which improves the utilization of storage resources. For example, when a container starts, no disk space is pre-allocated for it. Instead, new space is allocated on demand as new files are written.
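As a rough illustration of allocate-on-demand, the sketch below models a volume that advertises a large capacity but consumes space only for blocks that have been written. The `ThinVolume` class and its block numbers are assumptions made for this example and do not correspond to any real driver API.

```python
class ThinVolume:
    """Toy volume that advertises a size but allocates blocks only on write."""

    def __init__(self, virtual_blocks):
        self.virtual_blocks = virtual_blocks  # advertised capacity, in blocks
        self.blocks = {}                      # block number -> data, filled lazily

    def write(self, block_no, data):
        if not 0 <= block_no < self.virtual_blocks:
            raise IndexError("block outside the volume")
        self.blocks[block_no] = data          # space is consumed here, on write

    def allocated(self):
        # Number of blocks that actually hold data.
        return len(self.blocks)


vol = ThinVolume(virtual_blocks=1_000_000)
before = vol.allocated()          # nothing is pre-allocated at creation
vol.write(0, b"new file data")
vol.write(7, b"more data")
after = vol.allocated()

print(before, after)
```

The advertised capacity and the space actually consumed are tracked separately, which is what lets many containers start without reserving disk space up front.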

2. Basic principles of five storage drivers

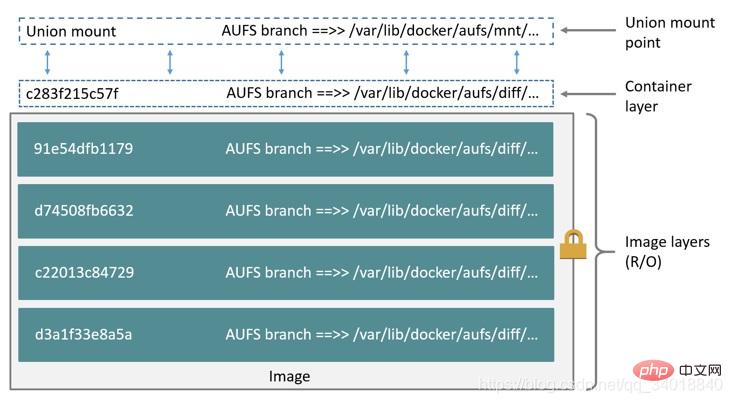

1, AUFS

AUFS (AnotherUnionFS) is a union file system and a file-level storage driver. AUFS transparently overlays a layered file system on one or more existing file systems, merging multiple layers into a single file system view. Put simply, it mounts different directories under one virtual file system. Files in this file system are overlaid layer by layer: however many layers lie below, they are all read-only, and only the topmost layer is writable. When a file needs to be modified, AUFS uses CoW to copy it from the read-only layer to the writable layer, modifies it there, and keeps the result in the writable layer. In Docker, the read-only layers underneath are the image, and the writable layer is the container. The structure is as shown below:

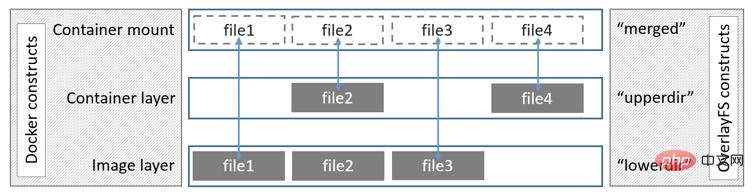
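The layered lookup described for AUFS can be approximated with Python's standard `collections.ChainMap`, which searches its mappings from first to last and sends all writes to the first one. This is only an analogy under simplifying assumptions (files as dictionary entries, hypothetical layer names and contents), not an AUFS implementation.

```python
from collections import ChainMap

# Read-only image layers, listed from top to bottom (hypothetical contents).
layer_app = {"/app/main.py": "print('v1')\n"}
layer_base = {"/etc/os-release": "demo\n", "/app/main.py": "print('v0')\n"}

container_layer = {}  # the only writable layer

# ChainMap searches the mappings left to right and writes only to the first.
merged = ChainMap(container_layer, layer_app, layer_base)

# The upper image layer shadows the same path in the base layer.
print(repr(merged["/app/main.py"]))

# Modifying a file from a read-only layer stores the result in the top layer.
merged["/etc/os-release"] = merged["/etc/os-release"] + "patched\n"

print(sorted(merged))        # one merged view of all layers
print(container_layer)       # only the modified file lives in the container
```

The read-only layers keep their original contents, and the container layer holds just the files that were changed.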

2, Overlay

Overlay, supported by the Linux kernel since version 3.18, is also a union file system. Unlike AUFS with its multiple layers, Overlay has only two: an upper file system and a lower file system, representing Docker's container layer and image layer respectively. When a file needs to be modified, CoW copies it from the read-only lower layer to the writable upper layer, where it is modified and the result is kept. In Docker, the read-only layer underneath is the image, and the writable layer is the container. The structure is as shown below:

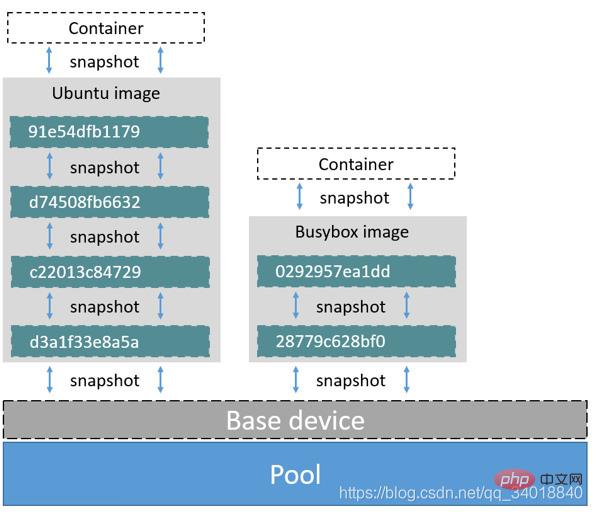

3, Device mapper

Device mapper has been supported by the Linux kernel since version 2.6.9. It provides a framework for mapping logical devices to physical devices, which lets users define and implement storage management strategies to suit their own needs. AUFS and OverlayFS, described earlier, are file-level storage, whereas Device mapper is block-level storage: all operations act directly on blocks, not files. The Device mapper driver first creates a resource pool on a block device and then creates a base device with a file system on that pool. All images are snapshots of this base device, and containers are snapshots of images. The file system seen inside a container is therefore a snapshot of the base device's file system on the resource pool, and no space is allocated for the container up front. When a new file is written, new blocks are allocated for it in the container's snapshot and the data is written there; this is allocate-on-demand. When an existing file is modified, CoW allocates block space for the container snapshot, copies the data to be modified into the new blocks, and then modifies it. By default, the Device mapper driver creates a 100 GB file that holds images and containers. Each container is limited to a 10 GB volume, and both values can be adjusted in the configuration. The structure is as shown below:

4, Btrfs

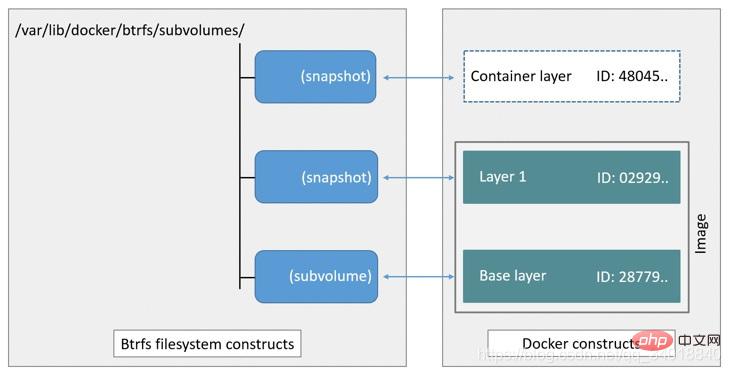

Btrfs is described as a next-generation copy-on-write file system and has been merged into the Linux kernel. It is also a file-level storage driver, but like Device mapper it can operate directly on the underlying device. Btrfs configures part of the file system as a complete sub-file system, called a subvolume. With subvolumes, a large file system can be divided into multiple sub-file systems. These share the underlying device space, and disk space is allocated from the underlying device when it is needed, much as an application calls malloc() to allocate memory. To use device space flexibly, Btrfs divides disk space into multiple chunks, and each chunk can use a different allocation strategy. For example, some chunks store only metadata and others store only data. This model has many advantages, such as support for adding devices dynamically: after adding a new disk to the system, the user can add it to the file system with a Btrfs command. Btrfs treats a large file system as a resource pool configured into multiple complete sub-file systems, and new sub-file systems can be added to the pool. The base image is a snapshot of a sub-file system; each child image and each container has its own snapshot, and all of these are snapshots of subvolumes.

When a new file is written, a new data block is allocated for it in the container's snapshot and the file is written into that space; this is allocate-on-demand. When an existing file is modified, CoW allocates new space for the data and the snapshot, the change is made in the newly allocated space, and the relevant data structures are updated to point to the new sub-file system and snapshot. The original data and snapshot are then no longer referenced, and their space can be overwritten.
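The snapshot mechanism used by the pool-based drivers (Device mapper and Btrfs as described above) can be sketched as pointer tables over a shared pool of blocks. This is a simplified model under stated assumptions: the `Pool` and `Snapshot` classes are hypothetical, writes replace whole blocks, and a real driver would also copy the old block contents when only part of a block changes.

```python
class Pool:
    """Toy storage pool: a flat list of blocks shared by all snapshots."""

    def __init__(self):
        self.blocks = []

    def allocate(self, data):
        self.blocks.append(data)
        return len(self.blocks) - 1   # index of the newly allocated block


class Snapshot:
    """Toy snapshot: a table mapping logical block numbers to pool blocks."""

    def __init__(self, pool, table=None):
        self.pool = pool
        self.table = dict(table or {})

    def snapshot(self):
        # Taking a snapshot copies only the pointer table, never the data.
        return Snapshot(self.pool, self.table)

    def write(self, logical, data):
        # Data goes to a freshly allocated block and the pointer is redirected.
        self.table[logical] = self.pool.allocate(data)

    def read(self, logical):
        return self.pool.blocks[self.table[logical]]


pool = Pool()
base = Snapshot(pool)
base.write(0, b"base block 0")
base.write(1, b"base block 1")

container = base.snapshot()
container.write(1, b"changed in container")   # modify an existing block
container.write(2, b"brand new block")        # allocate-on-demand for new data

print(base.read(1), container.read(1))
print(container.table[0] == base.table[0])    # unmodified block is still shared
```

The base device keeps its original block, the container points at a new one, and unmodified blocks are shared rather than duplicated.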

5, ZFS

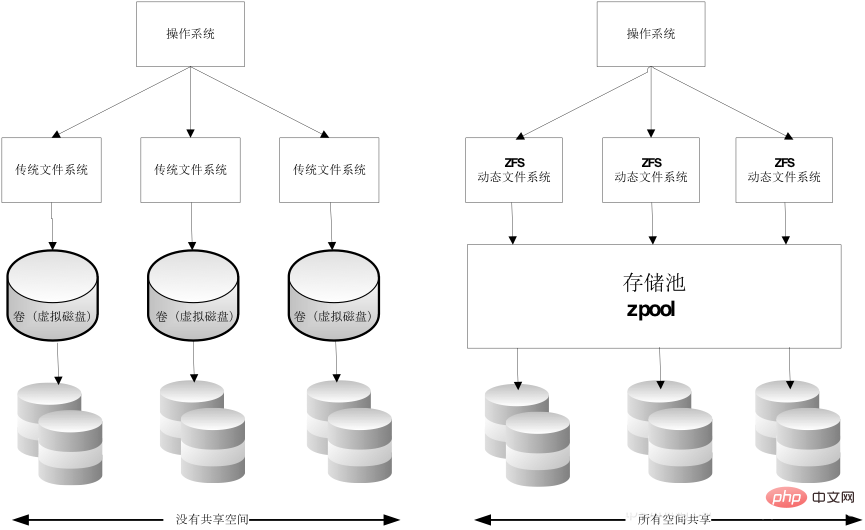

The ZFS file system is a revolutionary new file system that fundamentally changes how file systems are managed. ZFS abandons "volume management" entirely: instead of creating virtual volumes, it gathers all devices into a storage pool and uses the storage pool concept to manage physical storage space. Traditionally, file systems were built on physical devices, and "volume management" was introduced to manage those devices and provide data redundancy by presenting them as a single device image. ZFS is instead built on virtual storage pools called "zpools". Each pool consists of several virtual devices (vdevs), which can be raw disks, a RAID 1 mirror, or a multi-disk group with a non-standard RAID level. File systems on the zpool can then use the combined storage capacity of these virtual devices.

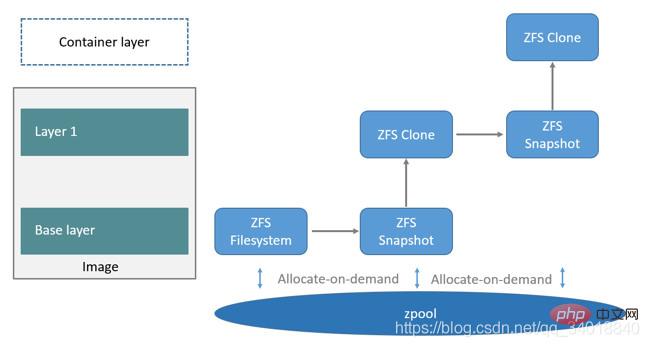

Let's look at how Docker uses ZFS. First, a ZFS file system is allocated from the zpool for the base layer of the image; the other image layers are clones of snapshots of this ZFS file system. A snapshot is read-only, and a clone is writable. When a container starts, a writable layer is created on top of the image. As shown below:

When a new file is written, allocate-on-demand is used: a new data block is allocated from the zpool, the new data is written to that block, and the new space belongs to the container (a ZFS clone). When an existing file is modified, copy-on-write is used: new space is allocated and the original data is copied into it to complete the modification.

3. Storage driver comparison and adaptation scenarios

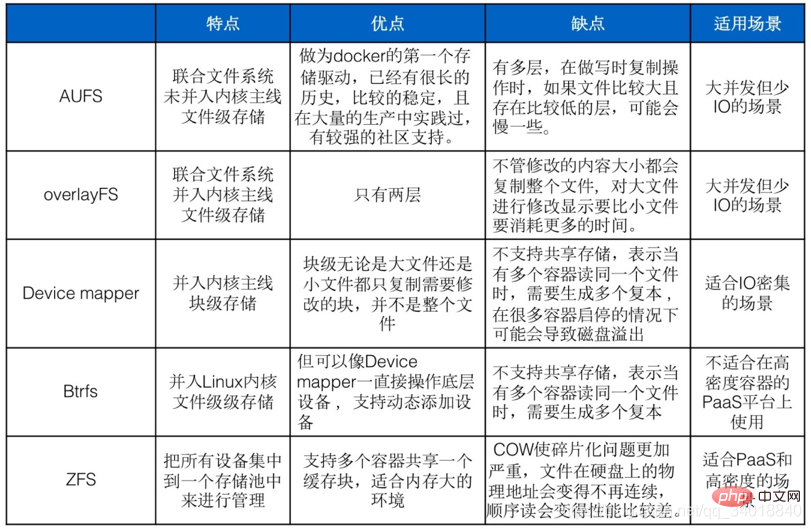

1, AUFS VS Overlay

AUFS and Overlay are both union file systems, but AUFS has multiple layers while Overlay has only two. As a result, during a copy-on-write operation, AUFS may be slower if the file is large and sits in a low layer. In addition, Overlay is merged into the mainline Linux kernel and AUFS is not, so Overlay may be faster than AUFS. However, Overlay is still young and should be used in production with caution. As Docker's first storage driver, AUFS has a long history, is relatively stable, has been proven in many production deployments, and has strong community support. The current open source DC/OS specifies Overlay.

2, Overlay VS Device mapper

Overlay is file-level storage and Device mapper is block-level storage. When a file is very large and the change to it is very small, Overlay still copies the entire file regardless of how little is modified, so modifying a large file takes longer than modifying a small one. A block-level driver, by contrast, copies only the blocks that need to be modified rather than the whole file, whether the file is large or small. In this scenario Device mapper is clearly faster. Because block-level storage accesses the logical disk directly, it suits I/O-intensive scenarios. For workloads with complex program logic and high concurrency but little I/O, Overlay performs relatively better.
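The difference in copy cost can be made concrete with a small calculation. The sketch below is illustrative only: the function names are hypothetical, the 64 KiB block size is an assumed value rather than a statement about any driver's default, and real drivers add metadata and I/O overheads that are ignored here.

```python
def file_level_copy_bytes(file_size, modified_bytes):
    """File-level CoW copies the whole file on first write, however small the change."""
    return file_size if modified_bytes > 0 else 0


def block_level_copy_bytes(offset, modified_bytes, block_size):
    """Block-level CoW copies only the blocks that the modified range touches."""
    if modified_bytes <= 0:
        return 0
    first = offset // block_size
    last = (offset + modified_bytes - 1) // block_size
    return (last - first + 1) * block_size


GiB = 1024 ** 3
KiB = 1024

# Change 100 bytes inside a 1 GiB file (block size is an assumed 64 KiB).
file_cost = file_level_copy_bytes(1 * GiB, 100)
block_cost = block_level_copy_bytes(offset=5000, modified_bytes=100,
                                    block_size=64 * KiB)

print(file_cost)    # bytes copied by a file-level driver
print(block_cost)   # bytes copied by a block-level driver
```

Under these assumptions the file-level driver copies the full 1 GiB, while the block-level driver copies a single 64 KiB block.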

3, Device mapper VS Btrfs VS ZFS

Both Device mapper and Btrfs operate directly on blocks and do not support sharing cached data between containers. This means that when multiple containers read the same file, multiple copies have to be kept. These storage drivers are therefore not suitable for high-density container PaaS platforms. Moreover, when many containers are started and stopped, the disk may fill up and leave the host unable to work. Device mapper is not recommended for production use, while Btrfs can be very efficient for docker build. ZFS was originally designed for Solaris servers with large amounts of memory, so it puts pressure on memory and is best suited to environments with plenty of it. ZFS's CoW also makes fragmentation worse: if a large file created by sequential writes later has parts of it changed at random, its physical addresses on disk are no longer contiguous, and subsequent sequential reads become slower. ZFS lets multiple containers share a cached block, which makes it suitable for PaaS and high-density scenarios.
