GIL stands for Global Interpreter Lock.

'''

@Author: Runsen

@微信公众号: Python之王

@博客: https://blog.csdn.net/weixin_44510615

@Date: 2020/6/4

'''import threading, timedef my_counter():

i = 0

for _ in range(100000000):

i = i+1

return Truedef main1():

start_time = time.time() for tid in range(2):

t = threading.Thread(target=my_counter)

t.start()

t.join() # 第一次循环的时候join方法引起主线程阻塞,但第二个线程并没有启动,所以两个线程是顺序执行的

print("单线程顺序执行total_time: {}".format(time.time() - start_time))def main2():

thread_ary = {}

start_time = time.time() for tid in range(2):

t = threading.Thread(target=my_counter)

t.start()

thread_ary[tid] = t for i in range(2):

thread_ary[i].join() # 两个线程均已启动,所以两个线程是并发的

print("多线程执行total_time: {}".format(time.time() - start_time))if __name__ == "__main__":

main1()

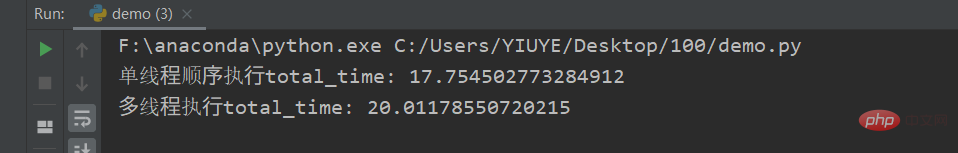

main2()复制代码单线程顺序执行total_time: 17.754502773284912多线程执行total_time: 20.01178550720215复制代码

Python threads wrap the threads of the underlying operating system: on Linux these are Pthreads (POSIX Threads), while on Windows they are Windows threads.
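To make the link between Python threads and operating-system threads visible, here is a minimal sketch. It assumes Python 3.8 or later, where `threading.get_native_id()` is available; the helper name `report` and the list `worker_ids` are made up for illustration.

```python
import threading


def report(ids):
    # get_native_id() returns the thread ID assigned by the operating system
    ids.append(threading.get_native_id())


worker_ids = []
t = threading.Thread(target=report, args=(worker_ids,))
t.start()
t.join()

main_id = threading.get_native_id()
print("main thread OS id:  ", main_id)
print("worker thread OS id:", worker_ids[0])
```

The two IDs differ because the worker is a separate OS-level thread, not something simulated inside the interpreter.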

The GIL can be summed up in one sentence: at any moment, no matter how many threads exist, a single CPython interpreter executes the bytecode of only one thread. Points to note about this definition:

First, the GIL is not a feature of the Python language. It is a concept introduced by one implementation of the Python interpreter, CPython. C is a language (grammar) standard, yet it can be compiled into executable code by different compilers, such as GCC, Intel C, and Visual C. The same is true of Python: the same code can run on different Python implementations, such as CPython, PyPy, and Jython.

Other Python interpreters may not have a GIL. For example, Jython (JVM) and IronPython (CLR) have no GIL, while CPython and PyPy do. Because CPython is the default Python implementation in most environments, many people equate CPython with Python and assume that the GIL is a defect of the Python language. So let's make it clear: the GIL is not a feature of Python, and the Python language itself does not depend on the GIL at all.
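Since the GIL belongs to the implementation rather than the language, it can help to check which implementation is actually running a script. A small sketch using only the standard library (the variable name `implementation` is just for illustration):

```python
import platform
import sys

# Name of the running implementation, e.g. "CPython", "PyPy", "Jython", "IronPython"
implementation = platform.python_implementation()
print("Running on:", implementation)

# sys.implementation.name carries the same information in lowercase form
print("sys.implementation.name:", sys.implementation.name)
```

The output depends on the interpreter used to run it, so no fixed result is shown here.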

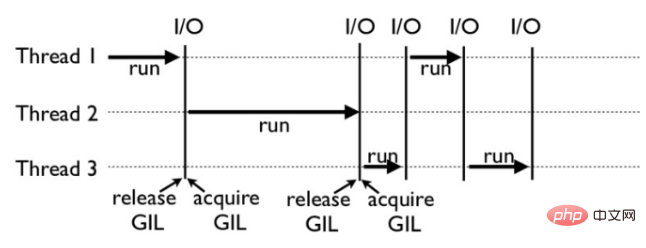

The GIL is essentially a mutex. All mutexes work the same way: they turn concurrent execution into serial execution, so that shared data can be modified by only one task at a time, which keeps the data safe. One thing is certain: protecting different data calls for different locks.

How the GIL works: the accompanying figure shows an example of the GIL at work in a Python program. Threads 1, 2, and 3 run in turn. When a thread starts executing, it acquires the GIL, which prevents the other threads from running; after it has run for a while, it releases the GIL so that another thread can run and start using the resources.
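The point that different data needs its own lock can be sketched with `threading.Lock`. The GIL guards the interpreter, not application data, so a read-modify-write such as `counter += 1` still needs a lock of its own. The names `counter`, `counter_lock`, and `add_many` below are illustrative, not taken from the original article.

```python
import threading

counter = 0
counter_lock = threading.Lock()  # a dedicated lock for this one piece of shared data


def add_many(n):
    global counter
    for _ in range(n):
        # Only one thread at a time may run the read-modify-write below
        with counter_lock:
            counter += 1


threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 40000: the lock serializes every increment
```

The lock turns the four concurrent increment loops into a serial sequence of updates, which is the same idea the GIL applies to bytecode execution as a whole.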

```python
import time

COUNT = 50_000_000


def count_down():
    global COUNT
    while COUNT > 0:
        COUNT -= 1


s = time.perf_counter()
count_down()
c = time.perf_counter() - s
print('time taken in seconds - >:', c)
```

```
time taken in seconds - >: 9.2957003
```

```python
import time
from threading import Thread

COUNT = 50_000_000


def count_down():
    global COUNT
    while COUNT > 0:
        COUNT -= 1


s = time.perf_counter()
t1 = Thread(target=count_down)
t2 = Thread(target=count_down)
t1.start()
t2.start()
t1.join()
t2.join()
c = time.perf_counter() - s
print('time taken in seconds - >:', c)
```

```
time taken in seconds - >: 17.110625
```

Summary: For I/O-bound work (such as a Python crawler), multi-threading can greatly improve efficiency. For CPU-bound computing (Python data analysis, machine learning, deep learning), multi-threading in CPython may be somewhat slower than single-threading, as the timings above show. Therefore, pure-Python multi-threading does not speed up CPU-bound work in the data field. To gain computing power, use multiple processes or libraries that release the GIL in native code, or move the computation from the CPU to a GPU or TPU.
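The I/O-bound half of the summary can be illustrated with a small sketch. Here `time.sleep` stands in for a network or disk wait (an assumption made for illustration; a real crawler would issue HTTP requests), and the names `fake_io` and `DELAYS` are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io(delay):
    # Stand-in for waiting on a socket or disk; a sleeping thread releases the GIL
    time.sleep(delay)
    return delay


DELAYS = [0.1] * 5

# Run the five waits one after another
start = time.perf_counter()
for d in DELAYS:
    fake_io(d)
sequential = time.perf_counter() - start

# Run the same five waits on five threads
start = time.perf_counter()
with ThreadPoolExecutor(max_workers=len(DELAYS)) as pool:
    results = list(pool.map(fake_io, DELAYS))
threaded = time.perf_counter() - start

print(f"sequential: {sequential:.2f}s, threaded: {threaded:.2f}s")
```

Because each thread spends its time waiting rather than executing bytecode, the waits overlap and the threaded run should finish in roughly the time of a single wait. Exact timings vary by machine.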

