1. HDFS distributed file system operation and maintenance

1. Recursively create the directory "/1daoyun/file" under the root of the HDFS file system, upload the attached BigDataSkills.txt file to /1daoyun/file, and use the relevant command to list the files in that directory.

hadoop fs -mkdir -p /1daoyun/file

hadoop fs -put BigDataSkills.txt /1daoyun/file

hadoop fs -ls /1daoyun/file

2. Recursively create the directory "/1daoyun/file" under the root of the HDFS file system, upload the attached BigDataSkills.txt file to /1daoyun/file, and use the HDFS file system check tool to verify whether the file is damaged.

hadoop fs -mkdir -p /1daoyun/file

hadoop fs -put BigDataSkills.txt /1daoyun/file

hadoop fsck /1daoyun/file/BigDataSkills.txt

3. Recursively create the directory "/1daoyun/file" under the root of the HDFS file system and upload the attached BigDataSkills.txt file to /1daoyun/file, specifying a replication factor of 2 for BigDataSkills.txt during the upload. Then use the fsck tool to check the number of replicas of its storage blocks.

hadoop fs -mkdir -p /1daoyun/file

hadoop fs -D dfs.replication=2 -put BigDataSkills.txt /1daoyun/file

hadoop fsck /1daoyun/file/BigDataSkills.txt

4. The root directory of the HDFS file system contains a directory /apps. Enable snapshot creation for that directory, create a snapshot of it named apps_1daoyun, and use the relevant command to list the snapshot files.

hdfs dfsadmin -allowSnapshot /apps

hadoop fs -createSnapshot /apps apps_1daoyun

hadoop fs -ls /apps/.snapshot

5. When a Hadoop cluster starts, it first enters safe mode, which it leaves after 30 seconds by default. While the system is in safe mode, the HDFS file system can only be read; it cannot be written to, modified, or deleted from. Assume the Hadoop cluster needs maintenance: put the cluster into safe mode and check its status.

hdfs dfsadmin -safemode enter

##hdfs dfsadmin -safemode get6.

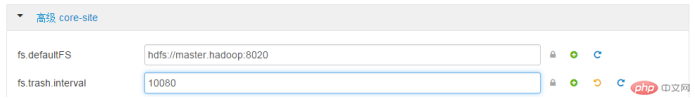

In order to prevent operators from accidentally deleting files,HDFSThe file system provides the recycle bin function, butMany junk files will take up a lot of storage space. It is required that theWEBinterfaceof the Xiandian big data platform completely delete the files in theHDFSfile system recycle bin The time interval is7days.Advancedcore-sitefs.trash.interval: 10080
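The value 10080 follows from fs.trash.interval being expressed in minutes. A minimal shell sketch of the conversion (the variable names are illustrative only):

```shell
# fs.trash.interval is given in minutes, so a retention period in days
# has to be converted before it is entered under core-site.
days=7
trash_interval_minutes=$((days * 24 * 60))
echo "fs.trash.interval: ${trash_interval_minutes}"
```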


7. To prevent operators from accidentally deleting files, the HDFS file system provides a recycle bin (trash) function, but too many junk files take up a lot of storage space. Use the "vi" command in the Linux shell to modify the corresponding configuration file and parameter so that the recycle bin function is turned off, then restart the corresponding service.

Advanced core-site

fs.trash.interval: 0

vi /etc/hadoop/2.4.3.0-227/0/core-site.xml

sbin/stop-dfs.sh

sbin/start-dfs.sh
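After saving the file, it can be worth confirming that the value on disk is the one intended. The sketch below is one way to do that in plain shell. Note that get_site_property is a hypothetical helper, not a Hadoop tool, and it assumes each name and value tag sits on its own line. It is run here against a scratch copy rather than the live core-site.xml.

```shell
# Hypothetical helper: print the <value> that follows a given <name> in a
# Hadoop-style *-site.xml file. Assumes one tag per line.
get_site_property() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1; next }
    found && /<value>/ {
      gsub(/.*<value>|<\/value>.*/, "")
      print
      exit
    }
  ' "$1"
}

# Scratch file standing in for core-site.xml (sample content, not a real config).
sample="$(mktemp)"
cat > "$sample" <<'EOF'
<configuration>
  <property>
    <name>fs.trash.interval</name>
    <value>0</value>
  </property>
</configuration>
EOF

trash_interval="$(get_site_property "$sample" fs.trash.interval)"
echo "fs.trash.interval=${trash_interval}"
rm -f "$sample"
```

Pointing the helper at the real configuration file instead of the scratch copy should print 0 once the edit is saved. The same approach could be used to check dfs.replication in hdfs-site.xml.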

8. Hosts in a Hadoop cluster may go down or suffer system damage under certain circumstances. Once that happens, data files in the HDFS file system will inevitably be damaged or lost. To ensure the reliability of the HDFS file system, change the cluster's redundancy replication factor to 5 in the web interface of the Xiandian big data platform.

General

Block replication: 5

9. Hosts in a Hadoop cluster may go down or suffer system damage under certain circumstances. Once that happens, data files in the HDFS file system will inevitably be damaged or lost. To ensure the reliability of the HDFS file system, the cluster's redundancy replication factor needs to be changed to 5. Use the "vi" command in the Linux shell to modify the corresponding configuration file and parameter, then restart the corresponding service.

vi /etc/hadoop/2.4.3.0-227/0/hdfs-site.xml

/usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf stop {namenode/datanode}

/usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start {namenode/datanode}

10. Use a command to view the number of directories, the number of files, and the total size of the files under the /tmp directory of the HDFS file system.

hadoop fs -count /tmp

2. MapReduce case questions

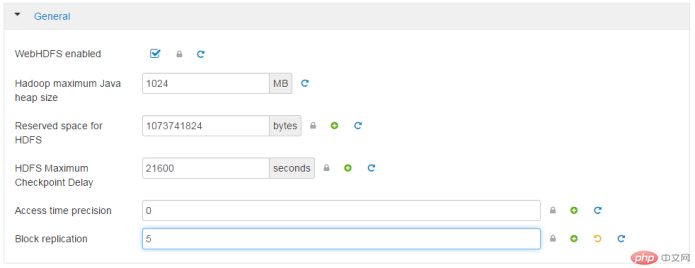

1. On the cluster node, the /usr/hdp/2.4.3.0-227/hadoop-mapreduce/ directory contains an example JAR package, hadoop-mapreduce-examples.jar. Run the pi program in the JAR package to calculate an approximate value of π, using 5 map tasks with 5 throws per map task.

cd /usr/hdp/2.4.3.0-227/hadoop-mapreduce/

hadoop jar hadoop-mapreduce-examples-2.7.1.2.4.3.0-227.jar pi 5 5
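The two arguments are the number of map tasks and the number of sample points per map, so "pi 5 5" uses only 25 points in total and the estimate is coarse. To my understanding, the bundled example places its points with a Halton sequence and counts how many fall inside a circle inscribed in the unit square. The awk sketch below imitates that idea locally under that assumption; it is not the Hadoop implementation, and the variable names are illustrative.

```shell
maps=5
samples_per_map=5

pi_estimate="$(awk -v n="$((maps * samples_per_map))" '
  # Radical-inverse (Halton) value of index i in the given base.
  function halton(i, base,    f, r) {
    f = 1; r = 0
    while (i > 0) {
      f = f / base
      r = r + f * (i % base)
      i = int(i / base)
    }
    return r
  }
  BEGIN {
    inside = 0
    for (i = 1; i <= n; i++) {
      # Shift the point so the circle of radius 0.5 is centred on the origin.
      x = halton(i, 2) - 0.5
      y = halton(i, 3) - 0.5
      if (x * x + y * y <= 0.25) inside++
    }
    # Area ratio circle/square is pi/4, so scale the hit ratio by 4.
    printf "%.4f\n", 4 * inside / n
  }
')"

echo "estimate from ${maps} x ${samples_per_map} points: ${pi_estimate}"
```

Raising either number in the sketch (or in the real job) brings the estimate closer to π, at the cost of a longer run.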

2. On the cluster node, the /usr/hdp/2.4.3.0-227/hadoop-mapreduce/ directory contains an example JAR package, hadoop-mapreduce-examples.jar. Run the wordcount program in the JAR package to count the words in the /1daoyun/file/BigDataSkills.txt file, write the results to the /1daoyun/output directory, and use the relevant command to query the word count results.

hadoop jar /usr/hdp/2.4.3.0-227/hadoop-mapreduce/hadoop-mapreduce-examples-2.7.1.2.4.3.0-227.jar wordcount /1daoyun/file/BigDataSkills.txt /1daoyun/output

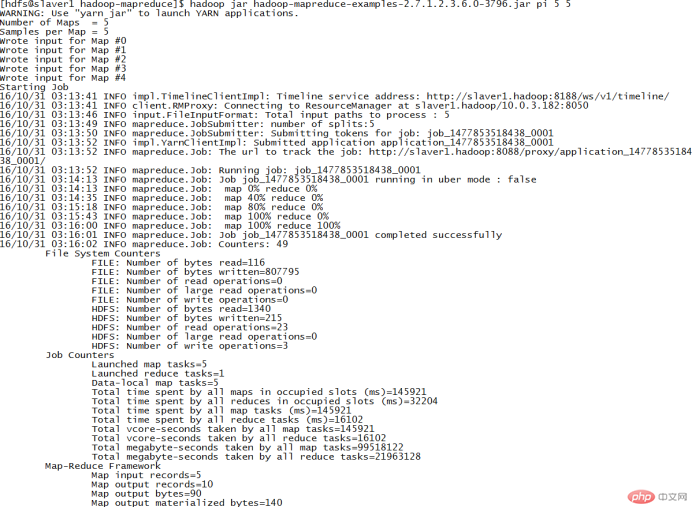
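The query step appears only as a screenshot. On a cluster, the results would normally be read from the part-r-* files that the job writes under /1daoyun/output (an assumption based on the default output naming). To show what the tab-separated word/count output looks like, here is a local stand-in that counts words in a made-up sample string with standard shell tools; it does not run MapReduce.

```shell
# Made-up sample text standing in for BigDataSkills.txt.
sample_text='Hadoop HDFS MapReduce
Hadoop YARN HDFS Hadoop'

# Split on whitespace, sort in byte order, count duplicates,
# then print "word<TAB>count" pairs.
word_counts="$(printf '%s\n' "$sample_text" \
  | tr -s '[:space:]' '\n' \
  | LC_ALL=C sort \
  | uniq -c \
  | awk '{ print $2 "\t" $1 }')"

printf '%s\n' "$word_counts"
```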

3. On the cluster node, the /usr/hdp/2.4.3.0-227/hadoop-mapreduce/ directory contains an example JAR package, hadoop-mapreduce-examples.jar. Run the sudoku program in the JAR package to solve the Sudoku puzzle in the table below.

cat puzzle1.dta

hadoop jar hadoop-mapreduce-examples-2.7.1.2.4.3.0-227.jar sudoku /root/puzzle1.dta

4. On the cluster node, the /usr/hdp/2.4.3.0-227/hadoop-mapreduce/ directory contains an example JAR package, hadoop-mapreduce-examples.jar. Run the grep program in the JAR package to count the number of times "Hadoop" appears in the /1daoyun/file/BigDataSkills.txt file in the file system. After the job completes, query the result.

hadoop jar hadoop-mapreduce-examples-2.7.1.2.4.3.0-227.jar grep /1daoyun/file/BigDataSkills.txt /output Hadoop
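The last argument of the grep example is a regular expression and matching is case-sensitive, so the capitalization of the pattern changes the count. A local illustration on a made-up sample line, using the ordinary grep utility rather than the MapReduce job:

```shell
# Made-up sample line; only the capitalized form should be counted.
sample_text='Hadoop runs on HDFS. hadoop is not Hadoop here.'

# -o prints each match on its own line, so counting lines counts matches.
match_count="$(printf '%s\n' "$sample_text" | grep -o 'Hadoop' | wc -l | tr -d ' ')"
echo "Hadoop: ${match_count}"
```

On the cluster, the job's result would normally be read from the part-r-* files under /output, assuming the default output naming.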
