1. Database paradigm

First paradigm: columns are indivisible, eg: [Contact] (name, gender, phone number), a contact has a home phone number and Company phone number, then this table structure design does not reach 1NF;

Second normal form: There is a primary key, ensuring complete dependence. eg: Order details table [OrderDetail] (OrderID, ProductID, UnitPrice, Discount, Quantity, ProductName), Discount (discount), Quantity (quantity) are completely dependent on the primary key (OderID, ProductID), while UnitPrice, ProductName only depend on For ProductID, it does not comply with 2NF;

Third normal form: no transitive dependency (non-primary key column A depends on non-primary key column B, non-primary key column B depends on the primary key), eg: order table [Order] (OrderID, OrderDate, CustomerID, CustomerName, CustomerAddr, CustomerCity) The primary key is (OrderID), CustomerName, CustomerAddr, CustomerCity directly depends on CustomerID (non-primary key column), rather than directly relying on the primary key, it depends on the primary key through passing , so it does not comply with 3NF.

2. What is an anti-pattern?

Paradigm can avoid data redundancy, reduce database space, and reduce the trouble of maintaining data integrity.

However, through the normalized design of the database, more tables will be involved in the database business, and the involved business tables may need to be queried through multi-table connections, which will lead to poor performance and is not conducive to database sharding. Sub-table. Therefore, for the sake of performance priority, it may be necessary to use an anti-pattern design in the database structure, that is, trading space for time, and adopting data redundancy to avoid associated queries between tables. As for the issue of data consistency, because it is difficult to meet strong data consistency, in general, the stored data should be as consistent as possible among users to ensure that the system will eventually achieve consistency after self-recovery and correction within a short period of time.

You need to use anti-patterns carefully when designing your database. In general, use a normalized database design as much as possible, because a normalized database design can make the product more flexible and maintain data integrity at the database layer.

Sometimes, the best way to improve performance is to save redundant data in the same table. If a small amount of dirty data can be tolerated, creating a completely independent summary table or cache table is a very good method. . For example, designing a "download count table" to cache download count information can increase the speed of querying total information in the case of massive data.

Another typical scenario, for scalability considerations, BLOB and TEXT type columns may be used to store JSON structured data. The advantage of this is that new attributes can be added to this at any time. fields without changing the table structure. However, the shortcomings of this design are also obvious, that is, the entire field content needs to be obtained and decoded to obtain the specified attributes, and operations such as indexing, sorting, and aggregation cannot be performed. Therefore, if you need to consider more complex usage scenarios, it is more recommended to use a document database such as MongoDB.

3. Database transaction

A transaction is an indivisible sequence of database operations and the basic unit of database concurrency control. The result of its execution must make the database Change from one consistency state to another consistency state.

(1). Characteristics of transactions

Atomicity: A series of database operations contained in a transaction are either all executed successfully or all are rolled back;

are consistent Consistency: The execution result of the transaction must make the database move from one consistency state to another consistency state;

Isolation: Concurrently executed transactions cannot affect each other;

Durability: Once a transaction is committed, the changes to the data in the database are permanent.

(2). Problems caused by transaction concurrency

Dirty read: one transaction reads uncommitted data of another transaction;

Non-repeatable read: non-repeatable The focus of reading is modification. The results of two reads are different under the same conditions. That is to say, the read data can be modified by other transactions;

Phantom reading: The focus of phantom reading is to add or delete. The number of records read out twice under the same conditions is different.

(3). Isolation level

The isolation level determines the impact that transactions in one session may have on transactions in another session.

ANSI standard defines 4 isolation levels, which are supported by MySQL's InnoDB, namely:

READ UNCOMMITTED (uncommitted read): the lowest level of isolation, usually called dirty read, It allows one transaction to read data that has not been committed by another transaction, which may improve performance, but will lead to dirty read problems;

READ COMMITTED (committed read): Only other transactions are allowed in a transaction Records that have been committed are visible. This isolation level cannot avoid non-repeatable read problems;

REPEATABLE READ (repeatable read): After a transaction starts, modifications to the database by other transactions are not visible in this transaction until This transaction is committed or rolled back. However, the insert/delete operations of other transactions are visible to this transaction, which means that this isolation level cannot avoid the phantom read problem. The results of repeated select in a transaction are the same unless the database is updated in this transaction.

SERIALIZABLE (serializable): The highest level of isolation, only allowing transactions to be executed serially.

The default isolation level of MySQL is REPEATABLE READ.

4. What is a stored procedure? What are the advantages and disadvantages?

A stored procedure is a collection of SQL statements that have been compiled in advance and stored in the database. Furthermore, a stored procedure is a code block composed of some T-SQL statements. These T-SQL statement codes implement some functions like a method (add, delete, modify and query a single table or multiple tables), and then give this code block Just give it a name and call it when you use this function. Stored procedures have the following characteristics:

4.1. Stored procedures are only compiled when they are created. There is no need to recompile each time the stored procedure is executed in the future. Generally, SQL statements are compiled once every time they are executed, so using storage The process can improve the execution efficiency of the database;

4.2. When the SQL statement changes, you can only modify the stored procedure in the database without modifying the code;

4.3. Reduce network transmission, on the client Of course, the amount of data transferred by calling a stored procedure is smaller than executing a series of SQL;

4.4. Through stored procedures, users without permission can indirectly access the database under control, thereby ensuring data security.

5. Let’s briefly talk about the differences between drop, delete, and truncate.

drop, delete, and truncate in SQL all mean deletion, but there are some differences among the three. Difference:

Delete is used to delete all or part of the data rows in the table. After executing delete, the user needs to commit or rollback to perform deletion or undelete. The delete command will trigger the operation on this table. All delete triggers;

Truncate deletes all data in the table. This operation cannot be rolled back and will not trigger triggers on this table. TRUNCATE is faster than delete and takes up less space;

The Drop command deletes a table from the database. All data rows, indexes and permissions will also be deleted. All DML triggers will not be triggered, and this command cannot be rolled back.

Therefore, when a table is no longer needed, use drop; when you want to delete some data rows, use delete; when retaining the table and deleting all data, use truncate.

6. What is a view? What is a cursor?

A view is a virtual table, usually a subset of rows or columns in one table or multiple tables. It has the same functions as a physical table and can be added to the view. , delete, modify, check and other operations. In particular, modifications to the view do not affect the underlying table. Compared with multi-table queries, it makes it easier for us to obtain data.

The cursor effectively processes the query result set as a unit. The cursor can be positioned on a specific row in the cell to retrieve one or more rows from the current row in the result set. You can make changes to the current row of the result set. Cursors are generally not used, but when data needs to be processed one by one, cursors are very important.

When operating mysql, we know that the MySQL retrieval operation returns a set of rows called a result set. The set of rows returned are all rows that match the SQL statement (zero or more rows). With a simple SELECT statement, for example, there is no way to get the first row, the next row, or the first 10 rows, nor is there an easy way to process all rows one at a time (as opposed to processing them in batches). Sometimes it is necessary to move forward or backward one or more rows in the retrieved rows. This is why cursors are used. A cursor is a database query stored on the MySQL server. It is not a SELECT statement, but a result set retrieved by the statement. After the cursor is stored, the application can scroll or browse the data within it as needed. Cursors are primarily used in interactive applications where the user needs to scroll through data on the screen and browse or make changes to the data.

7. What is a trigger?

A trigger is a database object related to a table that is triggered when defined conditions are met and executes the set of statements defined in the trigger. This feature of triggers can help applications ensure database integrity on the database side.

8. Super key, candidate key, primary key, foreign key

Super key: The set of attributes that can uniquely identify a tuple in a relationship is called the super key of the relational schema. key. An attribute can be used as a super key, and multiple attributes combined can also be used as a super key. Super key contains candidate key and primary key.

Candidate key: It is the minimum superkey, that is, a superkey without redundant elements.

Primary key: A combination of data columns or attributes in a database table that uniquely and completely identifies the stored data object. A data column can only have one primary key, and the value of the primary key cannot be missing, that is, it cannot be null.

Foreign key: The primary key of another table that exists in one table is called the foreign key of this table.

9. What is a transaction? What is a lock?

Transaction: It is a group of SQL statements that are bound together as a logical unit of work. If any statement operation fails, the entire operation will fail, and future operations will be rolled back to the state before the operation, or there will be a node. To ensure that something is either executed or not executed, transactions can be used. For a grouped statement to be considered a transaction, it needs to pass the ACID tests, namely atomicity, consistency, isolation, and durability.

Lock: In all DBMS, locks are the key to realizing transactions. Locks can ensure the integrity and concurrency of transactions. Just like a lock in real life, it can prevent the owner of certain data from using certain data or data structures for a certain period of time. Of course, locks are also divided into levels.

10. Database locking mechanism

The database locking mechanism simply means that in order to ensure the consistency of the data, the database allows various shared resources to be accessed concurrently, and the access changes. A rule designed in an orderly manner. Each MySQL storage engine uses three types (levels) of locking mechanisms: row-level locking, page-level locking and table-level locking.

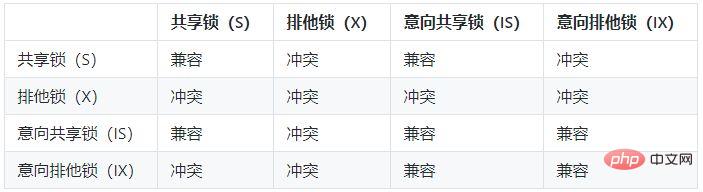

Table-level locking (table-level): Table-level locking is the most granular locking mechanism among MySQL storage engines. The biggest feature of this locking mechanism is that the implementation logic is very simple and brings minimal negative impact on the system. So acquiring and releasing locks is very fast. Since table-level locks lock the entire table at one time, the deadlock problem that plagues us can be avoided. Of course, the biggest negative impact of large locking granularity is that the probability of contention for locking resources will be the highest, which will greatly reduce the efficiency. Table-level locks are divided into read locks and write locks. Page-level locking (page-level): The characteristic of page-level locking is that the locking granularity is between row-level locking and table-level locking, so the resource overhead required to obtain the lock and the concurrent processing capability it can provide are also Between the above two. In addition, page-level locking and row-level locking will cause deadlock. Row-level locking (row-level): The biggest feature of row-level locking is that the granularity of the locked object is very small, and it is also the smallest locking granularity achieved by currently major database management software. Since the locking granularity is very small, the probability of contention for locking resources is also minimal, which can give the application as much concurrent processing capability as possible and improve the overall performance of some application systems that require high concurrency. Although it has great advantages in concurrent processing capabilities, row-level locking also brings many disadvantages. Since the granularity of locking resources is very small, more things need to be done each time to acquire and release the lock, which naturally causes greater consumption. In addition, row-level locking is also the most prone to deadlock. InnoDB's row-level locks are also divided into two types, shared locks and exclusive locks. Similarly, InnoDB also introduced the concept of intention locks (table-level locks), so there are intention shared locks and intention exclusive locks, so InnoDB actually has Four types of locks, namely shared lock (S), exclusive lock (X), intention shared lock (IS), and intention exclusive lock (IX);

In the MySQL database, table-level locking is mainly used by MyISAM , Memory, CSV and other non-transactional storage engines. Row-level locking is mainly used by the Innodb storage engine and NDBCluster storage engine. Page-level locking is mainly the locking method of the BerkeleyDB storage engine.

The function of intention lock is that when a transaction needs to obtain a resource lock, if the resource it needs is already occupied by an exclusive lock, the transaction can add an appropriate row to the table that needs to lock the row. Intention lock. If you need a shared lock, add an intention shared lock on the table. If you need to add an exclusive lock on a certain row (or some rows), first add an intention exclusive lock on the table. Multiple intention shared locks can exist at the same time, but only one intention exclusive lock can exist at the same time.

For more information, please visit the PHP Chinese website:mysql video tutorial

The above is the detailed content of Detailed introduction to basic database theory. For more information, please follow other related articles on the PHP Chinese website!