What is load balancing, and how do you configure it in Apache? This article introduces the concept of load balancing and then walks through Apache's load balancing configuration. I hope it helps.

What is load balancing

Load balancing is one of the factors that must be considered when designing a distributed system architecture. It usually refers to distributing requests/data [evenly] across multiple operating units for execution. The key to load balancing is [evenly].

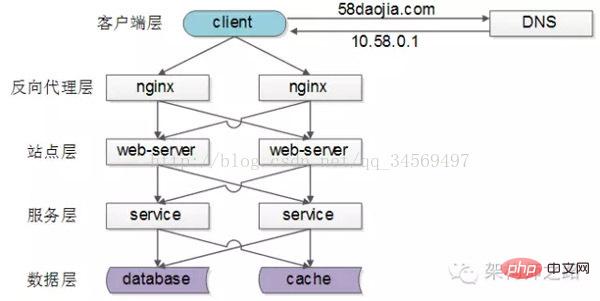

Common load balancing solutions

A typical architecture is divided into a client layer, a reverse proxy (nginx) layer, a site layer, a service layer, and a data layer. Each downstream layer is called by multiple upstream callers, so as long as every upstream accesses every downstream evenly, requests/data are "evenly distributed to multiple operating units for execution".

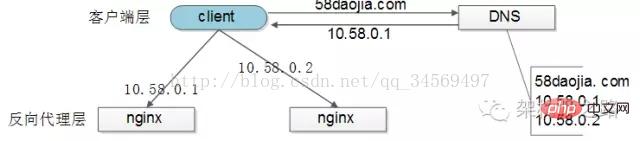

Load balancing of [Client layer->Reverse proxy layer]

This is achieved through "DNS polling" (DNS round-robin): the DNS server is configured with multiple IP addresses for one domain name, and for each resolution request it returns these IPs in rotation, so that each IP is resolved with roughly the same probability. These IPs are the public IPs of the nginx servers, so requests are also spread evenly across the nginx instances.
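As a rough illustration, the rotation a DNS server performs can be sketched in Python. The resolver class, domain name, and IP addresses below are hypothetical, and in practice caching by resolvers and clients means the real distribution is only approximately even.

```python
from collections import deque


class RoundRobinResolver:
    """Toy DNS server (hypothetical): rotates a domain's IP list on every query."""

    def __init__(self, records: dict[str, list[str]]):
        # One rotating queue of nginx public IPs per domain name
        self._records = {name: deque(ips) for name, ips in records.items()}

    def resolve(self, name: str) -> list[str]:
        ips = self._records[name]
        answer = list(ips)  # clients usually connect to the first address returned
        ips.rotate(-1)      # the next query starts from the next IP
        return answer


resolver = RoundRobinResolver(
    {"www.example.com": ["203.0.113.1", "203.0.113.2", "203.0.113.3"]}
)
first_choices = [resolver.resolve("www.example.com")[0] for _ in range(6)]
print(first_choices)
# ['203.0.113.1', '203.0.113.2', '203.0.113.3', '203.0.113.1', '203.0.113.2', '203.0.113.3']
```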

Load balancing of [Reverse proxy layer->Site layer]

This is achieved through "nginx". By modifying nginx.conf, a variety of load balancing strategies can be implemented:

1) Request polling: like DNS polling, requests are routed to each web-server in turn.

2) Least-connection routing: each request is routed to whichever web-server currently has the fewest connections.

3) IP hash: the web-server is chosen by the hash of the visiting user's IP. As long as user IPs are evenly distributed, requests are in theory evenly distributed as well. With IP hash, requests from the same user always land on the same web-server, so this strategy suits stateful services such as sessions. (Remark from 58 Chen Jian: this works, but it is strongly discouraged. A stateless site layer is one of the basic principles of distributed architecture design; it is better to store sessions in the data layer.)

4) …
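The three strategies above can be sketched in a few lines of Python to show how each one picks a backend. This is not nginx's own code (nginx's ip_hash, for example, hashes only the first three octets of an IPv4 address); the server names and the use of zlib.crc32 as the hash are assumptions for illustration.

```python
import itertools
import zlib

SERVERS = ["web1", "web2", "web3"]  # hypothetical web-server names

# 1) Request polling (round-robin): hand out servers in turn
_rotation = itertools.cycle(SERVERS)


def round_robin() -> str:
    return next(_rotation)


# 2) Least connections: pick the server with the fewest active connections
def least_connections(active: dict[str, int]) -> str:
    return min(SERVERS, key=lambda server: active[server])


# 3) IP hash: the same client IP always maps to the same server
def ip_hash(client_ip: str) -> str:
    # crc32 is stable across runs, unlike Python's randomized hash() for str
    return SERVERS[zlib.crc32(client_ip.encode()) % len(SERVERS)]


print([round_robin() for _ in range(4)])                     # ['web1', 'web2', 'web3', 'web1']
print(least_connections({"web1": 5, "web2": 2, "web3": 7}))  # web2
print(ip_hash("198.51.100.7") == ip_hash("198.51.100.7"))     # True
```

Note that IP hash trades evenness for stickiness: how well it balances depends entirely on how client IPs are spread.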

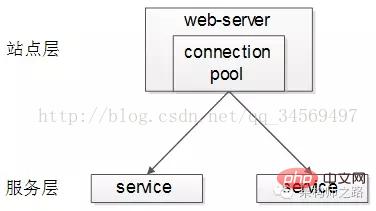

##[Site layer] to [Service layer] load balancing is achieved through "

". The upstream connection pool will establish multiple connections with downstream services, and each request will "randomly" select a connection to access the downstream service.

The earlier article "RPC-client Implementation Details" describes load balancing, failover, and timeout handling in detail, so they are not expanded on here.
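A minimal sketch of the connection-pool idea, assuming a hypothetical pool that pre-opens a fixed number of connections to each downstream service instance and picks one uniformly at random per request. Real RPC clients add health checks, failover, and timeouts on top of this.

```python
import random
from collections import Counter


class ConnectionPool:
    """Toy upstream connection pool (hypothetical): each request picks a random connection."""

    def __init__(self, downstreams: list[str], conns_per_service: int = 2, seed: int = 0):
        # Pretend to pre-establish several connections to every downstream instance
        self._conns = [(svc, i) for svc in downstreams for i in range(conns_per_service)]
        self._rng = random.Random(seed)  # seeded so the demo is reproducible

    def pick(self) -> tuple[str, int]:
        # A uniform random choice spreads requests evenly in expectation
        return self._rng.choice(self._conns)


pool = ConnectionPool(["svc-a", "svc-b"], conns_per_service=2)
hits = Counter(pool.pick()[0] for _ in range(10_000))
print(hits)  # each service receives roughly half of the 10,000 requests
```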

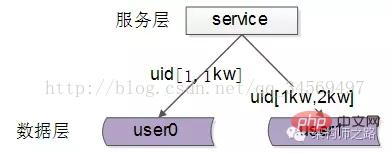

Load balancing of [Data layer]

When the amount of data is large, the data layer (db, cache) involves horizontal segmentation of data, which makes load balancing at the data layer more complicated. It has two aspects: "data balancing" and "request balancing".

Data balancing means that after horizontal segmentation, each service (db, cache) holds roughly the same amount of data.

Request balancing means that after horizontal segmentation, each service (db, cache) receives roughly the same request volume.

There are several common horizontal segmentation methods in the industry:

1. Horizontal segmentation according to range

Each data service stores a certain range of data, for example:

user0 service: stores uids in the range 1 to 10 million (1kw)

user1 service: stores uids in the range 10 million to 20 million (1kw-2kw)

The benefits of this solution are:

(1) The rules are simple: the service only needs to check which range a uid falls in to route it to the corresponding storage service

(2) Data balance is good

(3) It is relatively easy to scale out: a data service for uids [2kw, 3kw] can be added at any time

The disadvantage is:

(1) Request load is not necessarily balanced. Newly registered users are generally more active than old users, so the services holding the higher (newer) uid ranges come under greater request pressure.
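The range rule can be sketched as a sorted list of range boundaries plus a binary search. The service names follow the example above; treating each range's upper bound as inclusive and the helper name route_by_range are assumptions made for illustration.

```python
import bisect

TEN_MILLION = 10_000_000  # "1kw" = 10 million

# Inclusive upper bound of each service's uid range, in ascending order
upper_bounds = [1 * TEN_MILLION, 2 * TEN_MILLION]
services = ["user0", "user1"]


def route_by_range(uid: int) -> str:
    """Return the storage service whose uid range contains uid."""
    idx = bisect.bisect_left(upper_bounds, uid)
    if idx == len(services):
        raise ValueError(f"no service covers uid {uid}")
    return services[idx]


print(route_by_range(1), route_by_range(TEN_MILLION + 1))  # user0 user1

# Scaling out: add a service for [2kw, 3kw]; existing data does not move
upper_bounds.append(3 * TEN_MILLION)
services.append("user2")
print(route_by_range(25_000_000))  # user2
```

Because a new range only ever receives new uids, expansion needs no migration; it also means the newest, most active users all land on the newest service, which is the request-imbalance drawback listed above.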

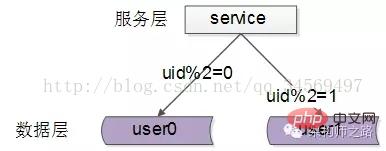

2. Horizontal segmentation by id hash

Each data service stores the part of the data whose key hashes to it, as shown in the figure above. For example:

user0 service: stores data for even uids

user1 service: stores data for odd uids

The benefits of this solution are:

(1) The rules are simple: the service only needs to hash the uid to route it to the corresponding storage service

(2) The data balance is good

(3) The request uniformity is good

The disadvantage is:

(1) It is not easy to scale out: when a data service is added, the hash mapping changes, and data migration may be required
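The expansion cost can be made concrete with the simplest hash rule, uid modulo the number of services (with two services this is the even/odd split above). The sketch counts how many uids would change service if a third service were added; the function names are hypothetical, and production systems often use consistent hashing or pre-split virtual shards to reduce this migration.

```python
def route_by_hash(uid: int, num_services: int) -> str:
    """Modulo hashing: with num_services=2, even uids go to user0 and odd uids to user1."""
    return f"user{uid % num_services}"


def fraction_moved(uids: range, old_n: int, new_n: int) -> float:
    """Share of uids whose service changes when the service count goes from old_n to new_n."""
    moved = sum(1 for uid in uids if route_by_hash(uid, old_n) != route_by_hash(uid, new_n))
    return moved / len(uids)


print(route_by_hash(42, 2), route_by_hash(7, 2))  # user0 user1
print(fraction_moved(range(1, 600_001), 2, 3))    # 0.6666666666666666
```

Two thirds of the data moving for a single added service is why this scheme is described as hard to expand.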

Summary

Load balancing is one of the factors that must be considered in the design of a distributed system architecture. It usually refers to distributing requests/data [evenly] across multiple operating units for execution. The key to load balancing is [evenly].

(1) Load balancing from [Client layer] to [Reverse proxy layer] is achieved through "DNS polling"

(2) [Reverse proxy layer] to [Site layer] load balancing is achieved through "nginx"

(3) [Site layer] to [Service layer] load balancing is achieved through "service connection pool"

(4) [Data layer] load balancing should consider two points: "data balancing" and "request balancing". Common methods include "horizontal segmentation according to range" and "hash horizontal segmentation"

Apache load balancing configuration method


When I first saw this title, I was surprised too: Apache can actually do load balancing? After some investigation I found that it can, and the functionality is quite good. This is all thanks to the mod_proxy module. Apache really is powerful.

Without further ado, let’s explain how to set up load balancing.

Generally speaking, load balancing distributes client requests across the real servers on the backend. Another approach uses two servers: one as the main server (Master) and the other as a hot backup (Hot Standby). All requests go to the main server, and when the main server goes down, traffic is immediately switched to the backup server to improve the overall reliability of the system.

1. Load balancing settings

1). Basic configuration

Apache can meet both of the above needs. Let's first discuss load balancing. Assuming the domain name of the Apache server is www.a.com, you first need to enable several Apache modules:

The code is as follows:

    LoadModule proxy_module modules/mod_proxy.so
    LoadModule proxy_balancer_module modules/mod_proxy_balancer.so
    LoadModule proxy_http_module modules/mod_proxy_http.so

mod_proxy provides the proxy server function, mod_proxy_balancer provides load balancing, and mod_proxy_http lets the proxy server handle HTTP. If you replace mod_proxy_http with another protocol module (such as mod_proxy_ftp), it may be possible to load-balance other protocols; interested readers can try it themselves.

Then add the following configuration:

The code is as follows:

    ProxyRequests Off
    <Proxy balancer://mycluster>
        BalancerMember http://node-a.myserver.com:8080
        BalancerMember http://node-b.myserver.com:8080
    </Proxy>
    ProxyPass / balancer://mycluster/

    # Warning: the following block is for debugging only. Never add it to a production environment!
    <Location /balancer-manager>
        SetHandler balancer-manager
        Order Deny,Allow
        Deny from all
        Allow from localhost
    </Location>

Note: node-a.myserver.com and node-b.myserver.com are the domain names of the two backend servers, not the domain name of the current server.

As the ProxyRequests Off line above shows, the load balancer is really a reverse proxy, except that it forwards requests not to a specific server but to a balancer:// address:

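In ProxyPass / balancer://mycluster/, the balancer name after balancer:// (here mycluster) can be defined freely. The contents of that balancer are then set in the <Proxy balancer://mycluster> section, where each BalancerMember line adds a real backend server to the group.

The <Location /balancer-manager> block at the end of the configuration enables the balancer-manager status page, which shows how requests are being distributed. As the warning in the configuration says, use it only for debugging, never in production.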

OK, after making the changes, restart the server and visit the address of your Apache server (www.a.com) to see the load balancing effect.

Troubleshooting: if the page shows an Internal Server Error, check the error.log file.

error.log output:

[warn] proxy: No protocol handler was valid for the URL /admin/login_form. If you are using a DSO version of mod_proxy, make sure the proxy submodules are included in the configuration using LoadModule.

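The cause is the ProxyPass line: ProxyPass / balancer://mycluster is missing the trailing slash and should be ProxyPass / balancer://mycluster/. Without the slash, a request for /admin/login_form is rewritten to balancer://myclusteradmin/login_form, a balancer that does not exist, so no proxy handler accepts it.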

2). Weighted load distribution

Open the balancer-manager interface and you can see that requests are evenly distributed.

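What if you don't want an even distribution? Just add the loadfactor parameter to each BalancerMember; its value ranges from 1 to 100. For example, if you have three servers and want a 7:2:1 distribution, configure it like this: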

httpd.conf configuration:

    ProxyRequests Off
    <Proxy balancer://mycluster>
        BalancerMember http://node-a.myserver.com:8080 loadfactor=7
        BalancerMember http://node-b.myserver.com:8080 loadfactor=2
        BalancerMember http://node-c.myserver.com:8080 loadfactor=1
    </Proxy>
    ProxyPass / balancer://mycluster/
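To see how loadfactor=7/2/1 turns into a 7:2:1 split, here is a Python transcription of the weighted request-counting pseudocode given in Apache's documentation for the byrequests method: on every request each member's running lbstatus grows by its lbfactor, the member with the highest lbstatus wins, and the winner is charged the total of all factors. It is a simplified sketch that ignores members in error or disabled states.

```python
from collections import Counter


def byrequests(lbfactors: dict[str, int], n_requests: int) -> list[str]:
    """Weighted request counting, following the lbstatus/lbfactor pseudocode
    in Apache's byrequests documentation (error/disabled states ignored)."""
    lbstatus = {name: 0 for name in lbfactors}
    picks = []
    for _ in range(n_requests):
        total = 0
        candidate = None
        for name, factor in lbfactors.items():
            lbstatus[name] += factor
            total += factor
            if candidate is None or lbstatus[name] > lbstatus[candidate]:
                candidate = name
        lbstatus[candidate] -= total  # charge the chosen member the whole round
        picks.append(candidate)
    return picks


picks = byrequests({"node-a": 7, "node-b": 2, "node-c": 1}, 10)
print(picks)
# ['node-a', 'node-a', 'node-b', 'node-a', 'node-a', 'node-c', 'node-a', 'node-a', 'node-b', 'node-a']
print(Counter(picks))  # Counter({'node-a': 7, 'node-b': 2, 'node-c': 1})
```

The picks are interleaved rather than sent in bursts, and the counts match the loadfactor ratio exactly after each full cycle of 7 + 2 + 1 = 10 requests.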

3). Load distribution algorithm

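By default, the balancer tries to make the number of requests each server receives match the configured ratio. To change the algorithm, use the lbmethod attribute, for example: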

The code is as follows:

    ProxyRequests Off
    <Proxy balancer://mycluster>
        BalancerMember http://node-a.myserver.com:8080 loadfactor=7
        BalancerMember http://node-b.myserver.com:8080 loadfactor=2
        BalancerMember http://node-c.myserver.com:8080 loadfactor=1
        # Inside a <Proxy> section, ProxySet applies to this balancer without repeating its URL
        ProxySet lbmethod=bytraffic
    </Proxy>
    ProxyPass / balancer://mycluster/

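Possible values of lbmethod are:

lbmethod=byrequests: balance by number of requests (default)

lbmethod=bytraffic: balance by traffic (bytes transferred)

lbmethod=bybusyness: balance by busyness (always assign to the server with the fewest active requests)

For how each algorithm works, see the Apache documentation.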
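For intuition only, the other two methods can be approximated as simple selection rules. These are deliberately simplified sketches, not Apache's exact implementations (which also keep per-member state and skip members in error); the function names and the byte and request counts are made up.

```python
def pick_bytraffic(bytes_served: dict[str, int], lbfactors: dict[str, int]) -> str:
    """Simplified traffic balancing: the member that has served the fewest bytes
    relative to its loadfactor gets the next request."""
    return min(lbfactors, key=lambda m: bytes_served[m] / lbfactors[m])


def pick_bybusyness(active_requests: dict[str, int]) -> str:
    """Simplified busyness balancing: the member with the fewest in-flight requests wins."""
    return min(active_requests, key=lambda m: active_requests[m])


lbfactors = {"node-a": 7, "node-b": 2, "node-c": 1}
print(pick_bytraffic({"node-a": 7000, "node-b": 1000, "node-c": 900}, lbfactors))  # node-b
print(pick_bybusyness({"node-a": 3, "node-b": 0, "node-c": 5}))                    # node-b
```

Roughly speaking, counting requests works well when requests cost about the same, counting bytes helps when response sizes vary widely, and counting active requests helps when some requests are much slower than others.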

2. Hot Standby

Hot standby is simple to set up: adding the status=+H attribute designates a server as the backup server:

The code is as follows:

    ProxyRequests Off
    <Proxy balancer://mycluster>
        BalancerMember http://node-a.myserver.com:8080
        BalancerMember http://node-b.myserver.com:8080 status=+H
    </Proxy>
    ProxyPass / balancer://mycluster/

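In the balancer-manager interface you can see that requests always go to node-a. Once node-a goes down, Apache detects the error and diverts requests to node-b. Apache periodically rechecks node-a (controlled by the worker's retry parameter, 60 seconds by default), and once node-a has recovered, it is used again.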
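The failover behaviour described above can be sketched as a small state machine. This is a hypothetical, simplified model rather than Apache's code: the class name is made up, and retry_seconds stands in for the worker's retry parameter (60 seconds by default in mod_proxy).

```python
class HotStandbyBalancer:
    """Hypothetical primary/hot-standby router: use the primary unless it recently failed."""

    def __init__(self, primary: str, standby: str, retry_seconds: float = 60.0):
        self.primary = primary
        self.standby = standby
        self.retry_seconds = retry_seconds
        self.primary_failed_at = None  # time of the primary's last failure, or None if healthy

    def choose(self, now: float) -> str:
        if self.primary_failed_at is None:
            return self.primary
        if now - self.primary_failed_at >= self.retry_seconds:
            # Retry interval has passed: give the primary another chance
            self.primary_failed_at = None
            return self.primary
        return self.standby  # primary still in error state: fail over

    def report_failure(self, member: str, now: float) -> None:
        if member == self.primary:
            self.primary_failed_at = now


lb = HotStandbyBalancer("node-a", "node-b", retry_seconds=60)
print(lb.choose(now=0))             # node-a
lb.report_failure("node-a", now=1)
print(lb.choose(now=30))            # node-b
print(lb.choose(now=61))            # node-a
```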

Installing and implementing Apache load balancing

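Whether it is distributed deployment, data caching, or load balancing, the goal is simply to relieve a website's performance bottlenecks, and when the site's source code is not being optimized, load balancing is arguably the most direct means. Put plainly, the idea is to divert users: spread the access pressure from all users across multiple servers, or across multiple Tomcat instances. If one server runs several Tomcats, load balancing will not improve performance much, but it does improve stability, that is, fault tolerance: when the main Tomcat goes down, the other Tomcats can take over, because session sharing is set up between them. Once the failed Tomcat is repaired and restarted, it automatically copies all session data and rejoins the cluster, so service continues without interruption. To truly improve performance, the load must be spread across multiple servers, which Tomcat can also do. There is plenty of material on this online and it is easy to find, but its quality is not high, so I hope to summarize it systematically in this post.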

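The example in this article runs two Tomcats on the same server and load-balances between them. Configuring one Tomcat on each of several servers also works; in that case you can use the installer version of Tomcat instead of the no-install (zip) version used below, and you do not need to change the Tomcat port configuration. This is also mentioned below.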

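Tomcat load balancing is implemented by putting an Apache server in front of it. Before configuring, uninstall any Tomcat that is already installed, then check your Apache version. For this setup I used the no-install version of apache-tomcat-6.0.18. From my own testing, I concluded that the installer version of Tomcat cannot run two or more instances on the same machine, probably because the installed Tomcat integrates into the system, so conflicts occur even after the configuration in server.xml is changed. That is why I use the no-install version of Tomcat.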

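For Apache I used apache_2.2.11-win32-x86-no_ssl.msi. With versions below 2.2 the load balancing configuration differs: Apache 2.2 (I used 2.2.11 and 2.2.8) ships with the mod_proxy_balancer and mod_proxy_ajp modules, which makes the configuration much simpler than setting up a separate connector such as mod_jk. I have not tested other versions. Both packages can be downloaded from their official websites.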

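Install Apache as a Windows service running on port 80; after a successful installation, the Apache2.2 service appears in the system's service list. Once the service has started, enter http://localhost in a browser to test it: if you see an "It works!" page, Apache is working properly. Unzip Tomcat to any directory, then make a copy under another name. The names and paths do not affect the configuration, but make sure the ports do not conflict; if you have Oracle or IIS installed, you may need to change or stop the services using those ports. A JDK setup is of course also required; it is not covered here.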

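To set up load balancing, first find conf/httpd.conf in the Apache installation directory and remove the comment character (#) in front of the following lines so that Apache loads the proxy modules automatically at startup.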

The code is as follows:

    LoadModule proxy_module modules/mod_proxy.so
    LoadModule proxy_ajp_module modules/mod_proxy_ajp.so
    LoadModule proxy_balancer_module modules/mod_proxy_balancer.so
    LoadModule proxy_connect_module modules/mod_proxy_connect.so
    LoadModule proxy_ftp_module modules/mod_proxy_ftp.so
    LoadModule proxy_http_module modules/mod_proxy_http.so

Scroll down through the file and find

Then open conf/extra/httpd-vhosts.conf to configure the virtual site, and add the following at the bottom:

The code is as follows:

    <VirtualHost *:80>
        # Replace with the administrator's email address
        ServerAdmin admin@example.com
        ServerName localhost
        ServerAlias localhost
        ProxyPass / balancer://sy/ stickysession=JSESSIONID|jsessionid nofailover=On
        ProxyPassReverse / balancer://sy/
        ErrorLog "logs/sy-error.log"
        CustomLog "logs/sy-access.log" common
    </VirtualHost>

Then go back to httpd.conf and add the following at the very end of the file:

The code is as follows:

    ProxyRequests Off
    <Proxy balancer://sy>
        BalancerMember ajp://127.0.0.1:8009 loadfactor=1 route=jvm1
        BalancerMember ajp://127.0.0.1:9009 loadfactor=1 route=jvm2
    </Proxy>

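ProxyRequests Off disables forward proxying, so Apache works purely as a reverse proxy. The IP address and port uniquely identify a Tomcat node and the AJP port it is configured to listen on. loadfactor is the load factor: Apache forwards requests to the backend Tomcat nodes in proportion to their load factors, so the larger a node's load factor, the more requests that Tomcat handles. If both Tomcats are set to 1, Apache forwards at a 1:1 ratio; if they are set to 2 and 1, it forwards at 2:1. This makes the configuration flexible; for example, you can give a more powerful server a larger share of the work. With multiple servers, you only need to change the IP addresses and ports. The route parameter corresponds to the engine route (jvmRoute) in the Tomcat load balancing configuration that follows.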
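The route values tie in with the stickysession setting in the VirtualHost: when a Tomcat's jvmRoute is set, Tomcat appends ".<jvmRoute>" to each session ID (for example A1B2C3.jvm1), and the balancer sends requests carrying that ID to the member whose route matches. Below is a minimal sketch of that lookup; the session ID is made up, and first_member is a hypothetical stand-in for the normal loadfactor-based choice.

```python
from typing import Callable, Optional

# Members from the <Proxy balancer://sy> section, keyed by their route
MEMBERS = {"jvm1": "ajp://127.0.0.1:8009", "jvm2": "ajp://127.0.0.1:9009"}


def route_from_session_id(session_id: str) -> Optional[str]:
    """'A1B2C3.jvm1' -> 'jvm1': the route is the part after the first dot."""
    _, dot, route = session_id.partition(".")
    return route if dot else None


def pick_member(session_id: Optional[str], fallback: Callable[[], str]) -> str:
    route = route_from_session_id(session_id) if session_id else None
    if route in MEMBERS:
        return MEMBERS[route]  # sticky: back to the Tomcat that created the session
    return fallback()          # no usable route: normal load-balanced choice


def first_member() -> str:
    # Hypothetical stand-in for the loadfactor-based selection
    return MEMBERS["jvm1"]


print(pick_member("A1B2C3.jvm2", first_member))  # ajp://127.0.0.1:9009
print(pick_member(None, first_member))           # ajp://127.0.0.1:8009
```

With nofailover=On, Apache does not send a sticky request to a different member when the matching one is in an error state; the request fails instead, which suits backends without session replication.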

The above is the detailed content of How to configure load balancing in Apache. For more information, please follow other related articles on the PHP Chinese website!
