MapReduce principle

MapReduce is a programming model for parallel computation over large-scale data sets (larger than 1 TB). Its two central concepts, "Map" and "Reduce", are borrowed from functional programming languages, along with some features borrowed from vector programming languages.
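To make the functional-programming origin of the two names concrete, here is a minimal single-machine sketch using Python's built-in `map` and `functools.reduce`. The sample list is made up for illustration, and nothing here is distributed; it only shows the two primitives the model is named after.

```python
from functools import reduce

# Hypothetical sample data, invented for this illustration.
numbers = [3, 5, 2, 7]

# "Map": apply a function independently to every element.
squared = list(map(lambda n: n * n, numbers))

# "Reduce": fold the mapped values into a single result.
total = reduce(lambda acc, n: acc + n, squared, 0)

print(squared)
print(total)
```

Because each element is mapped independently of the others, the map step is the part that can be spread across many machines.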

It makes it much easier for programmers to run their own programs on a distributed system without knowing distributed parallel programming. In current implementations, the user specifies a Map function that maps a set of key-value pairs to a new set of intermediate key-value pairs, and a concurrent Reduce function that merges all of the mapped key-value pairs that share the same key.
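The contract between the two functions can be sketched with the classic word-count example. This is an in-memory illustration, not any particular framework's API: the names `map_fn`, `reduce_fn`, and `run`, and the sample inputs, are assumptions made for this sketch.

```python
from collections import defaultdict

def map_fn(key, value):
    """Map: (document name, text) -> list of (word, 1) pairs."""
    return [(word, 1) for word in value.split()]

def reduce_fn(key, values):
    """Reduce: (word, [1, 1, ...]) -> (word, total count)."""
    return key, sum(values)

def run(inputs):
    # Collect every intermediate value under its key, so that
    # reduce_fn sees all values that share the same key at once.
    groups = defaultdict(list)
    for key, value in inputs:
        for out_key, out_value in map_fn(key, value):
            groups[out_key].append(out_value)
    return dict(reduce_fn(k, v) for k, v in groups.items())

counts = run([("a.txt", "to be or not to be"), ("b.txt", "to do")])
print(counts)
```

The user writes only `map_fn` and `reduce_fn`; the grouping done in `run` stands in for the work the framework performs between the two phases.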

Working principle

MapReduce execution process

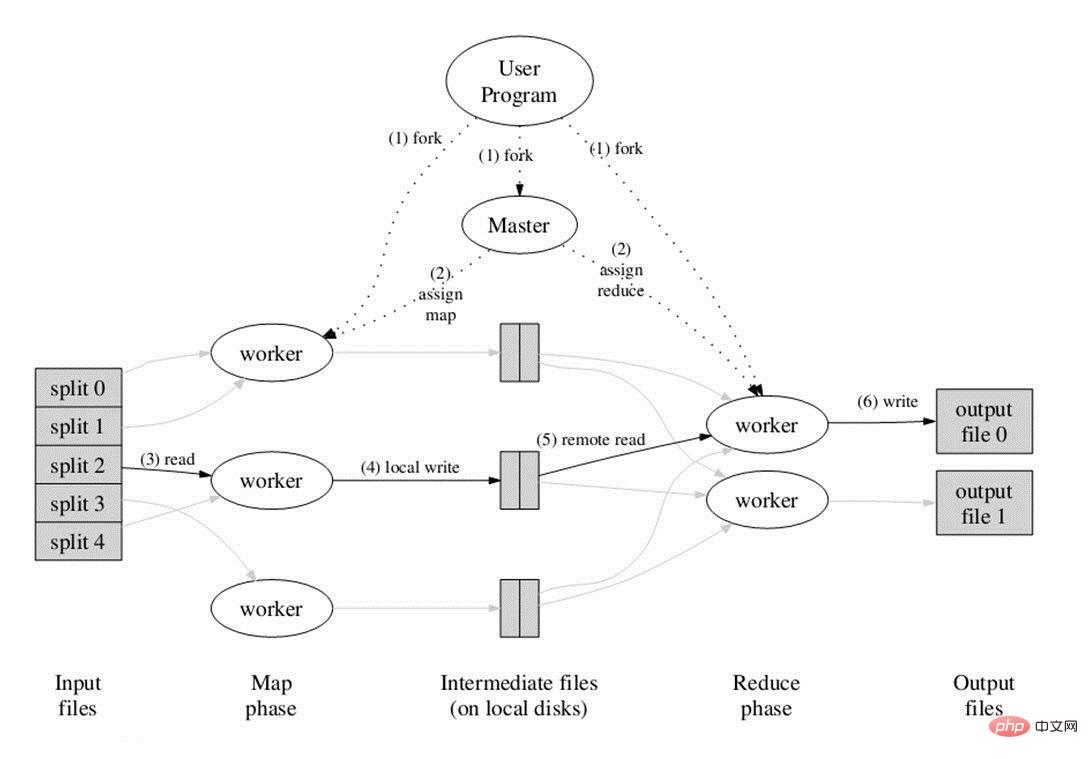

The figure above is the flow chart given in the original MapReduce paper. Everything starts from the user program at the top, which is linked against the MapReduce library and implements the basic Map and Reduce functions. The order of execution is marked with numbers in the figure.

1. The MapReduce library first divides the input file of the user program into M parts (M is user-defined), each typically 16 MB to 64 MB, shown on the left side of the figure as split0 through split4. It then uses fork to copy the user process to other machines in the cluster.

2. One copy of the user program is called the master, and the others are called workers. The master is responsible for scheduling: it assigns jobs (Map jobs or Reduce jobs) to idle workers. The number of workers can also be specified by the user.

3. A worker assigned a Map job begins reading the input data of the corresponding split. The number of Map jobs is determined by M, with one Map job per split. The Map job extracts key-value pairs from the input data and passes each pair to the map function as a parameter; the intermediate key-value pairs produced by the map function are buffered in memory.

4. The buffered intermediate key-value pairs are periodically written to local disk and divided into R regions, where R is defined by the user. Each region will later correspond to one Reduce job. The locations of these intermediate key-value pairs are reported to the master, which is responsible for forwarding them to the Reduce workers.

5. The master tells each worker assigned a Reduce job where the partition it is responsible for is located. There is normally more than one location, because the intermediate key-value pairs produced by each Map job may be spread across all R partitions. After the Reduce worker has read all the intermediate key-value pairs it is responsible for, it sorts them so that pairs with the same key are grouped together. Sorting is necessary because many different keys may map to the same partition, and therefore to the same Reduce job (there are usually far fewer partitions than keys).

6. The Reduce worker iterates over the sorted intermediate key-value pairs. For each unique key, it passes the key and the associated values to the reduce function. The output of the reduce function is appended to the output file for this partition.

7. When all Map and Reduce jobs are complete, the master wakes up the user program, and the MapReduce call returns to the user code.
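The data flow of the seven steps above can be simulated in a single process. The sketch below is a simplified model under stated assumptions: there is no master, no workers, and no disk I/O, the partitioner (a CRC32 hash of the key modulo R) is an assumed choice rather than something the article specifies, and every function name and the sample input are hypothetical.

```python
from collections import defaultdict
from itertools import groupby
from operator import itemgetter
import zlib

M = 3  # number of input splits, one Map job each (user-defined)
R = 2  # number of partitions, one Reduce job each (user-defined)

def map_fn(_key, line):
    return [(word, 1) for word in line.split()]

def reduce_fn(key, values):
    return key, sum(values)

def partition(key):
    # Assumed partitioner: a stable hash of the key, modulo R.
    return zlib.crc32(key.encode("utf-8")) % R

def split_input(lines, m):
    # Step 1: divide the input into m splits.
    return [lines[i::m] for i in range(m)]

def run_map_job(split):
    # Steps 3-4: call map_fn once per record, then bucket the
    # intermediate pairs into R regions.
    regions = defaultdict(list)
    for offset, line in enumerate(split):
        for key, value in map_fn(offset, line):
            regions[partition(key)].append((key, value))
    return regions

def run_reduce_job(r, map_outputs):
    # Step 5: collect region r from every Map job and sort by key.
    pairs = sorted(
        (pair for regions in map_outputs for pair in regions.get(r, [])),
        key=itemgetter(0),
    )
    # Step 6: call reduce_fn once per unique key.
    return [
        reduce_fn(key, [value for _, value in group])
        for key, group in groupby(pairs, key=itemgetter(0))
    ]

def map_reduce(lines):
    map_outputs = [run_map_job(s) for s in split_input(lines, M)]
    # One output list per partition, standing in for R output files.
    return [run_reduce_job(r, map_outputs) for r in range(R)]

outputs = map_reduce(["a b a", "b c", "a c c", "d"])
for r, part in enumerate(outputs):
    print(r, part)
```

Note that `groupby` only merges adjacent items, which is why the sort in step 5 has to come first: without it, pairs with the same key arriving from different Map jobs would be passed to `reduce_fn` separately.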

After execution completes, the MapReduce output is stored in R output files, one per partition (each corresponding to a Reduce job). Users usually do not need to merge these R files; instead, they pass them as input to another MapReduce program. Throughout the process, the input data comes from the underlying distributed file system (GFS), the intermediate data is kept on the local file system, and the final output is written back to the underlying distributed file system (GFS).

Note the difference between Map/Reduce jobs and map/reduce functions. A Map job processes one split of the input data and may call the map function many times, once for each input key-value pair. A Reduce job processes the intermediate key-value pairs of one partition, calling the reduce function once for each distinct key, and ultimately produces one output file.
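The distinction between jobs and function calls can be checked by counting invocations. The sketch below runs one hypothetical Map job over a single split and one Reduce job over a single partition; the counter, the function names, and the sample split are all assumptions for illustration.

```python
from collections import Counter

calls = Counter()

def map_fn(_key, line):
    calls["map_fn"] += 1
    return [(word, 1) for word in line.split()]

def reduce_fn(key, values):
    calls["reduce_fn"] += 1
    return key, sum(values)

def run_map_job(split):
    # One Map job: map_fn is called once per input record.
    calls["map_job"] += 1
    pairs = []
    for offset, line in enumerate(split):
        pairs.extend(map_fn(offset, line))
    return pairs

def run_reduce_job(pairs):
    # One Reduce job: reduce_fn is called once per distinct key.
    calls["reduce_job"] += 1
    grouped = {}
    for key, value in sorted(pairs):
        grouped.setdefault(key, []).append(value)
    return [reduce_fn(key, values) for key, values in grouped.items()]

split = ["x y", "y z", "x x"]
output = run_reduce_job(run_map_job(split))
print(dict(calls))
print(output)
```

With three input records and three distinct keys, the single Map job makes three `map_fn` calls and the single Reduce job makes three `reduce_fn` calls, while producing one output list.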
