This article explains why web pages fetched with Python sometimes come out garbled, and how to fix it.

When crawling web pages with Python 2, the captured content often shows up as garbled characters.

The most likely cause is an encoding problem: the character encoding of the runtime environment does not match the character encoding of the web page.

For example, you fetch a UTF-8 encoded website from the Windows console (GBK), or a GBK-encoded website from a Mac/Linux terminal (UTF-8). Because most websites use UTF-8 and many people use Windows, this situation is quite common.

If the English letters, digits, and symbols in the captured content look correct but garbled characters are mixed in, you can be fairly sure this is the cause. ASCII characters are encoded the same way in UTF-8 and GBK, so only the non-ASCII text breaks.
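The effect can be reproduced without any network access. The following is a minimal sketch in Python 3 (not the Python 2 used elsewhere in this article); the sample string is made up for illustration.

```python
# Hypothetical sample text: ASCII mixed with Chinese characters.
text = "Python 天气 2024"

# Bytes as a UTF-8 encoded site would send them.
raw = text.encode("utf-8")

# Decoded with the page's real encoding: the text comes back intact.
right = raw.decode("utf-8")

# Decoded the way a GBK environment would interpret the same bytes.
# errors="replace" keeps the demo from raising on invalid byte pairs.
wrong = raw.decode("gbk", errors="replace")

print(right == text)                 # the correct decoding round-trips
print(wrong == text)                 # the mismatched decoding does not
print(wrong.startswith("Python "))   # but the ASCII prefix is unaffected
```

Printing `wrong` in your own terminal shows the typical pattern: readable ASCII with garbled characters where the Chinese text should be.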

The fix is to first decode the result to unicode using the web page's encoding, and then output it. If you are not sure what encoding the page uses, you can refer to the following code:

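    import urllib

    req = urllib.urlopen("http://some.web.site")
    info = req.info()
    # Read the charset declared in the Content-Type response header.
    # Falling back to 'utf-8' is an assumption for when none is declared.
    charset = info.getparam('charset') or 'utf-8'
    content = req.read()
    # Decode to unicode before printing
    print content.decode(charset, 'ignore')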

The 'ignore' argument tells decode to skip any bytes that cannot be decoded instead of raising an error.
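For readers on Python 3, the same idea can be sketched as a small helper that takes the raw body and the value of the Content-Type header. The function name `decode_body` and the UTF-8 fallback are assumptions for illustration, not part of the original code.

```python
from email.message import Message


def decode_body(raw, content_type, fallback="utf-8"):
    """Decode raw bytes using the charset named in a Content-Type value.

    `fallback` is an assumed default for when no usable charset is declared.
    """
    msg = Message()
    if content_type:
        msg["Content-Type"] = content_type
    # get_content_charset() returns the charset in lowercase, or None
    charset = msg.get_content_charset() or fallback
    try:
        return raw.decode(charset, errors="ignore")
    except LookupError:
        # The server named an encoding Python does not know
        return raw.decode(fallback, errors="ignore")


# Usage with made-up header values:
page = decode_body("天气".encode("gbk"), "text/html; charset=GBK")
print(page == "天气")
```

With a real response in Python 3, the header value would typically come from `urllib.request.urlopen(url).headers.get("Content-Type")`.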

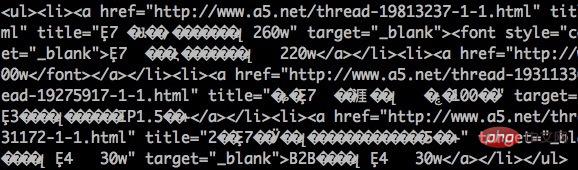

However, this method does not always work, because many servers do not declare a charset in the response header. Another way is to match the encoding declaration in the page's HTML directly with a regular expression.
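The original snippet for the regular-expression approach is not available here, so the following is only a sketch of what it might look like, written for Python 3. The pattern, the name `sniff_charset`, the 4096-byte search window, and the UTF-8 default are all assumptions.

```python
import re

# Matches both <meta charset="gbk"> and
# <meta http-equiv="Content-Type" content="text/html; charset=gbk">
META_CHARSET = re.compile(
    rb"<meta[^>]+charset\s*=\s*[\"']?\s*([A-Za-z0-9_-]+)",
    re.IGNORECASE,
)


def sniff_charset(raw, default="utf-8"):
    """Return the charset declared in a <meta> tag, or `default` if none."""
    # The declaration normally sits near the top of the page,
    # so only the first few kilobytes are searched.
    match = META_CHARSET.search(raw[:4096])
    if match:
        return match.group(1).decode("ascii").lower()
    return default


# Usage with a made-up page:
html = b'<html><head><meta charset="GBK"></head><body></body></html>'
print(sniff_charset(html))
```

The detected name can then be passed to `decode`, for example `raw.decode(sniff_charset(raw), "ignore")`. A regular expression is a rough heuristic; a declaration inside a comment or script would fool it.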

Besides encoding problems, there is another often-overlooked cause of garbled output: the target page has gzip compression enabled. Compressed pages transfer less data and load faster. When you open one in a browser, the browser decompresses it automatically based on the response headers. Code that fetches the page directly does not. So you may be puzzled that the page looks fine when you open its address but comes out wrong when your program fetches it. I have been caught by this problem myself.

The symptom here is that almost all of the captured content is garbled, and it may not display at all.

To determine whether compression is enabled on a web page and decompress it, you can refer to the following code:

    import urllib
    import gzip
    from StringIO import StringIO

    req = urllib.urlopen("http://some.web.site")
    info = req.info()
    # The Content-Encoding header tells us whether the body is compressed
    encoding = info.getheader('Content-Encoding')
    content = req.read()
    if encoding == 'gzip':
        # Wrap the raw bytes in a file-like object so GzipFile can read them
        buf = StringIO(content)
        gf = gzip.GzipFile(fileobj=buf)
        content = gf.read()
    print content
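In Python 3 the decompression step can be shortened with `gzip.decompress`. The sketch below is one possible variant, not the author's code: the helper name `maybe_gunzip` is hypothetical, and the extra check on the first two bytes (gzip data starts with `1f 8b`) is an added assumption for servers that compress without setting the header.

```python
import gzip


def maybe_gunzip(body, content_encoding=None):
    """Return the decompressed body if it looks gzip-compressed."""
    declared = (content_encoding or "").lower() == "gzip"
    # gzip streams begin with the magic bytes 0x1f 0x8b
    looks_gzipped = body[:2] == b"\x1f\x8b"
    if declared or looks_gzipped:
        return gzip.decompress(body)
    return body


# Usage with locally generated data instead of a real response:
original = "天气 weather".encode("utf-8")
packed = gzip.compress(original)
print(maybe_gunzip(packed, "gzip") == original)
print(maybe_gunzip(original) == original)
```

The result is still bytes, so it needs to be decoded with the page's encoding afterwards, as described earlier.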

These two problems have tripped up quite a few people in our Programming Classroom weather-lookup example series, which is why I am explaining them separately here.

Finally, there is one more powerful tool worth introducing. If you use it from the start, you may never even notice that the two problems above exist.

This is the requests module.

To crawl the same web page, all you need is:

    import requests

    print requests.get("http://some.web.site").text

No encoding problems, no compression problems.

This is why I love Python.

For how to install the requests module, see the earlier article "How to install third-party Python modules" (Crossin's Programming Classroom, Zhihu column):

    pip install requests
