This article shows how to build a Bloomfilter on top of Redis to remove duplicates. The approach combines Bloomfilter's ability to deduplicate massive data sets with Redis's persistence.

Deduplication is a routine task in everyday work. It is even more common in crawling, where the data sets are usually large. Two things have to be considered: the amount of data to deduplicate and the speed of deduplication. To keep it fast, deduplication is generally performed in memory.

When the amount of data is small, it can be deduplicated directly in memory. In Python, for example, set() does the job.
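As a minimal sketch of the in-memory case, the generator below keeps a set of everything it has seen and yields only first occurrences. The name `dedupe` and the sample URLs are illustrative assumptions, not part of the original code.

```python
def dedupe(urls):
    """Yield each URL the first time it appears, skipping later repeats."""
    seen = set()              # every URL encountered so far, held in memory
    for url in urls:
        if url in seen:       # average O(1) membership test
            continue
        seen.add(url)
        yield url


if __name__ == "__main__":
    crawl_queue = [
        "http://www.baidu.com",
        "http://www.baidu.com/a",
        "http://www.baidu.com",
    ]
    print(list(dedupe(crawl_queue)))
```

Memory grows with the total length of all distinct strings, which is why this only suits small data sets.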

When the deduplication data needs to be persisted, the set data structure of Redis can be used.
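One possible way to use a Redis set for this is sketched below. It relies on the fact that SADD returns the number of members it actually added, so a return value of 0 means the member was already present. The function name `seen_before` and the key `crawler:seen` are assumptions made for illustration; `client` is expected to be a redis-py client or anything else with a compatible `sadd` method.

```python
def seen_before(client, url, key="crawler:seen"):
    """Record url in a Redis set and report whether it was already there.

    SADD returns how many members were newly added, so 0 means duplicate.
    """
    return client.sadd(key, url) == 0


# Usage sketch (assumes redis-py is installed and a server runs on localhost):
#
#   import redis
#   r = redis.Redis(host="localhost", port=6379, db=0)
#   if not seen_before(r, "http://www.baidu.com"):
#       ...  # first time this URL is seen, so crawl it
```

Checking and recording happen in a single command, so several crawler processes can share the same set without a separate "check, then add" step.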

When the amount of data is larger, you can use a hash algorithm such as MD5 or SHA-1 to compress each long string into 16/32/40 characters, and then deduplicate the digests with either of the two methods above.
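The sketch below shows one way to produce such fixed-length fingerprints with `hashlib`. The function name `fingerprint` is hypothetical, and treating the 16-character form as the middle 16 hex characters of the MD5 digest is an assumption about what the 16-character option refers to.

```python
from hashlib import md5, sha1


def fingerprint(text, algorithm="md5"):
    """Return a fixed-length hex digest to store in place of a long string."""
    data = text.encode("utf-8")                # hashlib works on bytes
    if algorithm == "md5":
        return md5(data).hexdigest()           # 32 hex characters
    if algorithm == "md5_16":
        return md5(data).hexdigest()[8:24]     # assumed 16-character variant
    if algorithm == "sha1":
        return sha1(data).hexdigest()          # 40 hex characters
    raise ValueError("unsupported algorithm: %s" % algorithm)


if __name__ == "__main__":
    url = "http://www.baidu.com/" + "x" * 500  # a deliberately long string
    for name in ("md5_16", "md5", "sha1"):
        print(name, len(fingerprint(url, name)))
```

The digests can then be put into a Python set or a Redis set instead of the original strings, so each entry costs a fixed number of characters regardless of the URL length.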

When the amount of data reaches hundreds of millions (or even billions or tens of billions) of items, memory becomes the limit and deduplication has to work at the level of individual bits. Bloomfilter maps each object to several bits in memory and uses the 0/1 values of those bits to decide whether the object has been seen before.
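To make the bit mapping concrete, here is a minimal single-machine sketch that stores the bits in a `bytearray`. The class name `MemoryBloomFilter`, the default size of 2^20 bits, and the simplified seeded hash are assumptions chosen for illustration; it is not the Redis-backed implementation.

```python
from hashlib import md5


class MemoryBloomFilter:
    """Bloom filter whose bits live in a local bytearray."""

    def __init__(self, bit_size=1 << 20, seeds=(5, 7, 11, 13, 31, 37, 61)):
        self.bit_size = bit_size                  # must be a power of two
        self.seeds = seeds
        self.bits = bytearray(bit_size // 8)      # 8 bits per byte, all zero

    def _positions(self, value):
        """Yield one bit position per seed for the given string."""
        digest = md5(value.encode("utf-8")).hexdigest()
        for seed in self.seeds:
            h = 0
            for ch in digest:
                # Simple seeded polynomial hash, reduced modulo bit_size.
                h = (h * seed + ord(ch)) & (self.bit_size - 1)
            yield h

    def add(self, value):
        for pos in self._positions(value):
            self.bits[pos >> 3] |= 1 << (pos & 7)  # set bit pos

    def __contains__(self, value):
        # Present only if every mapped bit is 1; may give false positives.
        return all(self.bits[pos >> 3] & (1 << (pos & 7))
                   for pos in self._positions(value))


if __name__ == "__main__":
    bf = MemoryBloomFilter()
    print("http://www.baidu.com" in bf)
    bf.add("http://www.baidu.com")
    print("http://www.baidu.com" in bf)
```

An empty filter always answers "not present", and anything that has been added always answers "present"; only the "present" answer for an item that was never added can be wrong.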

However, a Bloomfilter normally lives in the memory of a single machine. That makes it hard to persist (everything is lost if the machine goes down) and hard to share among distributed crawlers that need unified deduplication. If the Bloomfilter's bits are allocated in Redis instead, both problems are solved.

```python
# encoding=utf-8
import redis
from hashlib import md5


class SimpleHash(object):
    """Seeded string hash that maps a value to a bit offset in [0, cap)."""

    def __init__(self, cap, seed):
        self.cap = cap
        self.seed = seed

    def hash(self, value):
        ret = 0
        for i in range(len(value)):
            ret += self.seed * ret + ord(value[i])
        return (self.cap - 1) & ret  # cap is a power of two, so this is ret % cap


class BloomFilter(object):
    def __init__(self, host='localhost', port=6379, db=0, blockNum=1, key='bloomfilter'):
        """
        :param host: the host of Redis
        :param port: the port of Redis
        :param db: which db in Redis
        :param blockNum: one blockNum for about 90,000,000; if you have more strings for filtering, increase it.
        :param key: the key's name in Redis
        """
        self.server = redis.Redis(host=host, port=port, db=db)
        self.bit_size = 1 << 31  # A Redis String holds at most 512M; 256M is used here
        self.seeds = [5, 7, 11, 13, 31, 37, 61]
        self.key = key
        self.blockNum = blockNum
        self.hashfunc = []
        for seed in self.seeds:
            self.hashfunc.append(SimpleHash(self.bit_size, seed))

    def isContains(self, str_input):
        if not str_input:
            return False
        m5 = md5()
        m5.update(str_input.encode('utf-8'))  # md5 requires bytes in Python 3
        str_input = m5.hexdigest()
        ret = True
        # The first two hex characters of the digest select the block.
        name = self.key + str(int(str_input[0:2], 16) % self.blockNum)
        for f in self.hashfunc:
            loc = f.hash(str_input)
            ret = ret & self.server.getbit(name, loc)
        return ret

    def insert(self, str_input):
        m5 = md5()
        m5.update(str_input.encode('utf-8'))
        str_input = m5.hexdigest()
        name = self.key + str(int(str_input[0:2], 16) % self.blockNum)
        for f in self.hashfunc:
            loc = f.hash(str_input)
            self.server.setbit(name, loc, 1)


if __name__ == '__main__':
    # Prints "not exists!" on the first run and "exists!" on later runs.
    bf = BloomFilter()
    if bf.isContains('http://www.baidu.com'):  # check whether the string exists
        print('exists!')
    else:
        print('not exists!')
        bf.insert('http://www.baidu.com')
```

Many explanations of how the Bloomfilter algorithm deduplicates with bits can be found on Baidu. Put simply: there are several seeds and a region of allocated memory. Each seed is hashed together with the string and maps it to one bit in that memory. If all of the mapped bits are 1, the string is considered to already exist. Insertion works the same way: every mapped bit is set to 1.

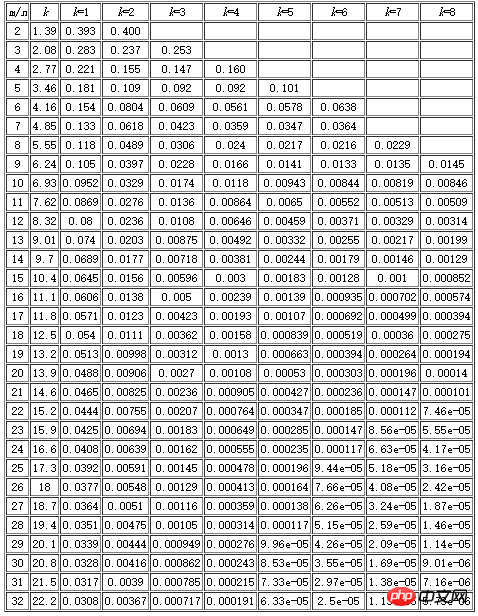

Note that the Bloomfilter algorithm has a false positive (miss) probability: there is a certain chance that a string that has never been inserted is misjudged as already existing. This probability depends on the number of seeds, the amount of memory allocated, and the number of objects to deduplicate. In the accompanying table, m is the memory size (in bits), n is the number of objects to deduplicate, and k is the number of seeds. For example, my code allocates 256M, which is 1 << 31 bits.
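The relationship between m, n, and k is commonly approximated as p ≈ (1 − e^(−kn/m))^k. The sketch below evaluates that approximation for the article's settings (m = 1 << 31, k = 7). It assumes the k hash functions behave like independent, uniformly distributed hashes, which the simple seeded hash in the code may not fully satisfy, and the sample values of n are illustrative only.

```python
import math


def false_positive_rate(m, n, k):
    """Approximate false positive probability of a Bloom filter.

    m: number of bits, n: number of inserted items, k: number of hashes.
    Uses the standard approximation (1 - e^(-k*n/m)) ** k.
    """
    return (1.0 - math.exp(-k * n / m)) ** k


if __name__ == "__main__":
    m = 1 << 31          # 256M of memory, expressed in bits
    k = 7                # number of seeds in the article's code
    for n in (50_000_000, 90_000_000, 200_000_000):
        p = false_positive_rate(m, n, k)
        print("n=%d  m/n=%.1f  p=%.2e" % (n, m / n, p))
```

The rate climbs quickly once n grows past the capacity a block was sized for, which is why the expected data volume should be estimated up front.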

Bloomfilter deduplication based on Redis actually uses the Redis String data structure. A single Redis String can hold at most 512M, so if the data volume is large you need to allocate multiple deduplication blocks (blockNum in the code is the number of blocks).

The code hashes each string with MD5, which compresses it to 32 characters (hashlib.sha1() could be used instead to compress it to 40 characters). This serves two purposes. First, the Bloomfilter's hash makes errors on very long strings and often misjudges them as already existing; the problem disappears once the string has been compressed. Second, every character of the digest is one of the 16 hexadecimal digits 0~f. I take the first two characters (256 possible values) and use them modulo blockNum to assign the string to one of the deduplication blocks.
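The block assignment can be isolated into a few lines. The helper name `block_key` is hypothetical; the key prefix `bloomfilter` and the rule (first two hex characters of the MD5 digest, modulo the number of blocks) follow the article's code.

```python
from collections import Counter
from hashlib import md5


def block_key(value, key="bloomfilter", block_num=1):
    """Return the Redis key of the block that should hold this string."""
    digest = md5(value.encode("utf-8")).hexdigest()
    prefix = int(digest[0:2], 16)          # two hex digits give 0..255
    return key + str(prefix % block_num)


if __name__ == "__main__":
    # Count how 10,000 sample URLs spread over 4 blocks.
    urls = ("http://www.baidu.com/page/%d" % i for i in range(10000))
    print(Counter(block_key(u, block_num=4) for u in urls))
```

Because only two hex characters are used, at most 256 distinct blocks can ever be selected; a larger blockNum would leave the extra blocks unused unless more characters of the digest are taken.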

Bloomfilter deduplication based on Redis combines Bloomfilter's massive deduplication capacity with Redis's persistence, and because it lives in Redis it also makes deduplication across distributed machines straightforward. Before using it, estimate the amount of data to be deduplicated and adjust the number of seeds and blockNum according to the table (the fewer the seeds, the faster the deduplication, but the higher the false positive rate).
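When the table is not at hand, a rough budget can be computed by inverting the usual approximation p ≈ (1 − e^(−kn/m))^k to get the number of items one block can hold at a target rate. This is only a sketch under that approximation; the function names and the default target rate of 1e-4 are assumptions, not values taken from the article.

```python
import math


def capacity_per_block(m, k, target_rate):
    """Items one block of m bits can hold with k hashes at the target rate."""
    # Solve (1 - e^(-k*n/m)) ** k = target_rate for n.
    return int(-(m / k) * math.log(1.0 - target_rate ** (1.0 / k)))


def blocks_needed(total_items, m=1 << 31, k=7, target_rate=1e-4):
    """Estimate blockNum for the expected number of strings."""
    per_block = capacity_per_block(m, k, target_rate)
    return max(1, math.ceil(total_items / per_block))


if __name__ == "__main__":
    print(capacity_per_block(1 << 31, 7, 1e-4))   # items per 256M block
    print(blocks_needed(1_000_000_000))           # blocks for a billion URLs
```

With the article's settings this gives a per-block capacity in the same range as the "about 90,000,000" quoted in the code's docstring.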
